Pytorch-LSTM轴承故障一维信号分类(一)

目录

前言

1 数据集制作与加载

1.1 导入数据

第一步,导入十分类数据

第二步,读取MAT文件驱动端数据

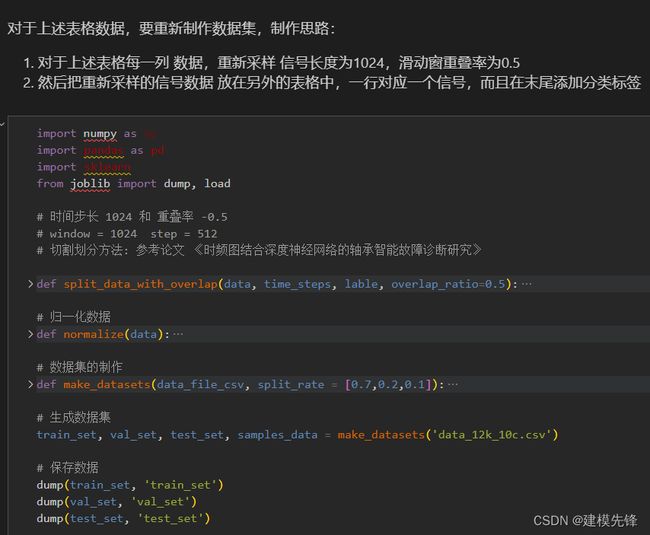

第三步,制作数据集

第四步,制作训练集和标签

1.2 数据加载,训练数据、测试数据分组,数据分batch

2 LSTM分类模型和超参数选取

2.1 定义LSTM分类模型

2.2 定义模型参数

2.3 模型结构

3 LSTM模型训练与评估

3.1 模型训练

3.2 模型评估

往期精彩内容:

Python-凯斯西储大学(CWRU)轴承数据解读与分类处理

Python轴承故障诊断 (一)短时傅里叶变换STFT

Python轴承故障诊断 (二)连续小波变换CWT

Python轴承故障诊断 (三)经验模态分解EMD

Python轴承故障诊断 (四)基于EMD-CNN的故障分类

Python轴承故障诊断 (五)基于EMD-LSTM的故障分类

前言

本文基于凯斯西储大学(CWRU)轴承数据,先经过数据预处理进行数据集的制作和加载,最后通过Python实现LSTM模型对故障数据的分类。凯斯西储大学轴承数据的详细介绍可以参考下文:

Python-凯斯西储大学(CWRU)轴承数据解读与分类处理

1 数据集制作与加载

1.1 导入数据

参考之前的文章,进行故障10分类的预处理,凯斯西储大学轴承数据10分类数据集:

第一步,导入十分类数据

import numpy as np

import pandas as pd

from scipy.io import loadmat

file_names = ['0_0.mat','7_1.mat','7_2.mat','7_3.mat','14_1.mat','14_2.mat','14_3.mat','21_1.mat','21_2.mat','21_3.mat']

for file in file_names:

# 读取MAT文件

data = loadmat(f'matfiles\\{file}')

print(list(data.keys()))第二步,读取MAT文件驱动端数据

# 采用驱动端数据

data_columns = ['X097_DE_time', 'X105_DE_time', 'X118_DE_time', 'X130_DE_time', 'X169_DE_time',

'X185_DE_time','X197_DE_time','X209_DE_time','X222_DE_time','X234_DE_time']

columns_name = ['de_normal','de_7_inner','de_7_ball','de_7_outer','de_14_inner','de_14_ball','de_14_outer','de_21_inner','de_21_ball','de_21_outer']

data_12k_10c = pd.DataFrame()

for index in range(10):

# 读取MAT文件

data = loadmat(f'matfiles\\{file_names[index]}')

dataList = data[data_columns[index]].reshape(-1)

data_12k_10c[columns_name[index]] = dataList[:119808] # 121048 min: 121265

print(data_12k_10c.shape)

data_12k_10c第三步,制作数据集

train_set、val_set、test_set 均为按照7:2:1划分训练集、验证集、测试集,最后保存数据

第四步,制作训练集和标签

# 制作数据集和标签

import torch

# 这些转换是为了将数据和标签从Pandas数据结构转换为PyTorch可以处理的张量,

# 以便在神经网络中进行训练和预测。

def make_data_labels(dataframe):

'''

参数 dataframe: 数据框

返回 x_data: 数据集 torch.tensor

y_label: 对应标签值 torch.tensor

'''

# 信号值

x_data = dataframe.iloc[:,0:-1]

# 标签值

y_label = dataframe.iloc[:,-1]

x_data = torch.tensor(x_data.values).float()

y_label = torch.tensor(y_label.values.astype('int64')) # 指定了这些张量的数据类型为64位整数,通常用于分类任务的类别标签

return x_data, y_label

# 加载数据

train_set = load('train_set')

val_set = load('val_set')

test_set = load('test_set')

# 制作标签

train_xdata, train_ylabel = make_data_labels(train_set)

val_xdata, val_ylabel = make_data_labels(val_set)

test_xdata, test_ylabel = make_data_labels(test_set)

# 保存数据

dump(train_xdata, 'trainX_1024_10c')

dump(val_xdata, 'valX_1024_10c')

dump(test_xdata, 'testX_1024_10c')

dump(train_ylabel, 'trainY_1024_10c')

dump(val_ylabel, 'valY_1024_10c')

dump(test_ylabel, 'testY_1024_10c')1.2 数据加载,训练数据、测试数据分组,数据分batch

import torch

from joblib import dump, load

import torch.utils.data as Data

import numpy as np

import pandas as pd

import torch

import torch.nn as nn

# 参数与配置

torch.manual_seed(100) # 设置随机种子,以使实验结果具有可重复性

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # 有GPU先用GPU训练

# 加载数据集

def dataloader(batch_size, workers=2):

# 训练集

train_xdata = load('trainX_1024_10c')

train_ylabel = load('trainY_1024_10c')

# 验证集

val_xdata = load('valX_1024_10c')

val_ylabel = load('valY_1024_10c')

# 测试集

test_xdata = load('testX_1024_10c')

test_ylabel = load('testY_1024_10c')

# 加载数据

train_loader = Data.DataLoader(dataset=Data.TensorDataset(train_xdata, train_ylabel),

batch_size=batch_size, shuffle=True, num_workers=workers, drop_last=True)

val_loader = Data.DataLoader(dataset=Data.TensorDataset(val_xdata, val_ylabel),

batch_size=batch_size, shuffle=True, num_workers=workers, drop_last=True)

test_loader = Data.DataLoader(dataset=Data.TensorDataset(test_xdata, test_ylabel),

batch_size=batch_size, shuffle=True, num_workers=workers, drop_last=True)

return train_loader, val_loader, test_loader

batch_size = 32

# 加载数据

train_loader, val_loader, test_loader = dataloader(batch_size)2 LSTM分类模型和超参数选取

2.1 定义LSTM分类模型

注意:输入数据进行了堆叠 ,把一个1*1024 的序列 进行划分堆叠成形状为 32 * 32, 就使输入序列的长度降下来了

2.2 定义模型参数

# 定义模型参数

batch_size = 32

input_dim = 32 # 输入维度为一维信号序列堆叠为 32 * 32

hidden_layer_sizes = [256, 128, 64]

output_dim = 10

model = LSTMclassifier(batch_size, input_dim, hidden_layer_sizes, output_dim)

# 定义损失函数和优化函数

model = model.to(device)

loss_function = nn.CrossEntropyLoss(reduction='sum') # loss

learn_rate = 0.003

optimizer = torch.optim.Adam(model.parameters(), learn_rate) # 优化器2.3 模型结构

3 LSTM模型训练与评估

3.1 模型训练

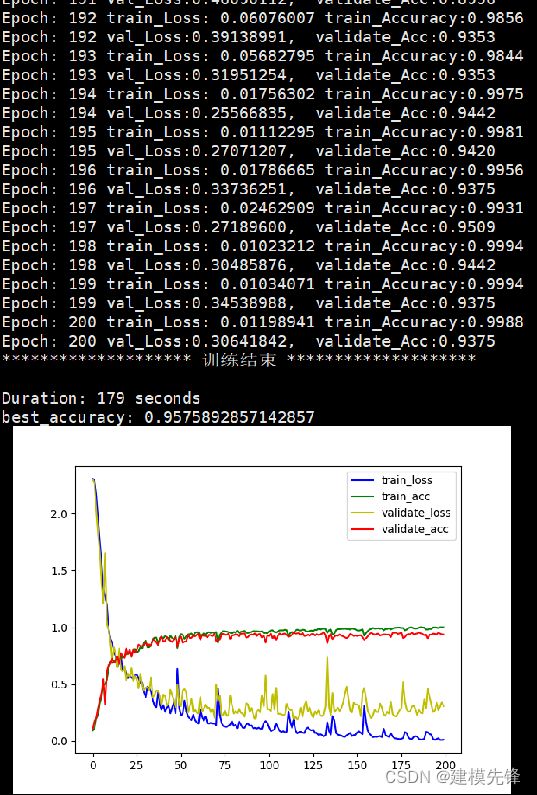

训练结果

200个epoch,准确率将近96%,LSTM网络分类模型效果良好,继续调参还可以进一步提高分类准确率。

注意调整参数:

-

可以适当增加 LSTM层数 和每层神经元个数,微调学习率;

-

增加更多的 epoch (注意防止过拟合)

-

可以改变一维信号堆叠的形状(设置合适的长度和维度)

3.2 模型评估

# 模型 测试集 验证

import torch.nn.functional as F

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # 有GPU先用GPU训练

# 加载模型

# model =torch.load('best_model_lstm.pt')

model = torch.load('best_model_lstm.pt', map_location=torch.device('cpu'))

# 将模型设置为评估模式

model.eval()

# 使用测试集数据进行推断

with torch.no_grad():

correct_test = 0

test_loss = 0

for test_data, test_label in test_loader:

test_data, test_label = test_data.to(device), test_label.to(device)

test_output = model(test_data)

probabilities = F.softmax(test_output, dim=1)

predicted_labels = torch.argmax(probabilities, dim=1)

correct_test += (predicted_labels == test_label).sum().item()

loss = loss_function(test_output, test_label)

test_loss += loss.item()

test_accuracy = correct_test / len(test_loader.dataset)

test_loss = test_loss / len(test_loader.dataset)

print(f'Test Accuracy: {test_accuracy:4.4f} Test Loss: {test_loss:10.8f}')

Test Accuracy: 0.9570 Test Loss: 0.12100271