YOLOv8 Key Files Explained

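This post is a study-log entry for the 365-Day Deep Learning Training Camp.

Reference article: 365-Day Deep Learning Training Camp

Original author: K同学啊 (tutoring and custom projects available)

Source: [K同学的学习圈子](https://www.yuque.com/mingtian-fkmxf/zxwb45)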

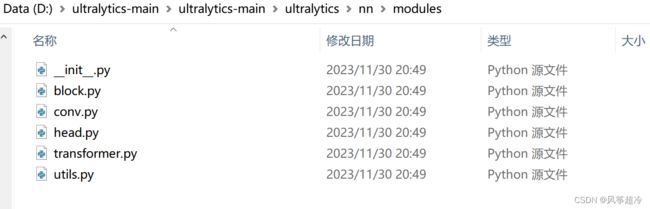

The files under the D:\ultralytics-main\ultralytics-main\ultralytics\nn\modules\ directory play the same role as YOLOv5's common.py: they define the building blocks used to assemble the model.

The conv.py file

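```python
# Imports used by the conv.py excerpts in this post
import math

import torch
import torch.nn as nn


def autopad(k, p=None, d=1):  # kernel, padding, dilation
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p
```

The function's parameters are:

- k: kernel size, an integer or a list of integers.
- p: padding, an integer or a list of integers. It is computed automatically when not provided.
- d: dilation rate, default 1.

When d > 1, the function first replaces k with the effective (dilated) kernel size d * (k - 1) + 1. When p is None, it then returns half of that size (k // 2). For an odd kernel and stride 1, this padding keeps the output at the same spatial size as the input ("same" padding).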
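The sketch below checks the "same"-padding claim numerically. It copies autopad and pairs it with the output-size formula documented for PyTorch's nn.Conv2d. The helper conv_out_size is a hypothetical name introduced only for this illustration. The code is plain Python and creates no tensors.

```python
def autopad(k, p=None, d=1):  # copied from conv.py above
    """Pad to 'same' shape outputs."""
    if d > 1:
        k = d * (k - 1) + 1 if isinstance(k, int) else [d * (x - 1) + 1 for x in k]  # actual kernel-size
    if p is None:
        p = k // 2 if isinstance(k, int) else [x // 2 for x in k]  # auto-pad
    return p


def conv_out_size(n, k, s=1, p=0, d=1):
    """Hypothetical helper: one spatial dim of nn.Conv2d output (formula from the PyTorch docs)."""
    return (n + 2 * p - d * (k - 1) - 1) // s + 1


# Odd kernels with stride 1 keep the spatial size, for any dilation.
for k in (1, 3, 5, 7):
    for d in (1, 2, 3):
        assert conv_out_size(32, k, s=1, p=autopad(k, None, d), d=d) == 32

print(autopad(3), autopad(3, d=2), autopad([3, 5]))  # 1 2 [1, 2]
print(conv_out_size(32, 3, s=2, p=autopad(3)))       # 16: stride 2 halves the size
print(conv_out_size(32, 2, s=1, p=autopad(2)))       # 33: even kernels are not exactly 'same'
```

The last line shows that the "same" guarantee only holds for odd kernel sizes. The YOLOv8 modules in this file mostly use odd kernels (1, 3, 5).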

```python
class Conv(nn.Module):
    """Standard convolution with args(ch_in, ch_out, kernel, stride, padding, groups, dilation, activation)."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)
        self.bn = nn.BatchNorm2d(c2)
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def forward_fuse(self, x):
        """Apply convolution and activation; used once BatchNorm has been folded into the conv weights."""
        return self.act(self.conv(x))


class Conv2(Conv):
    """Simplified RepConv module with Conv fusing."""

    def __init__(self, c1, c2, k=3, s=1, p=None, g=1, d=1, act=True):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__(c1, c2, k, s, p, g=g, d=d, act=act)
        self.cv2 = nn.Conv2d(c1, c2, 1, s, autopad(1, p, d), groups=g, dilation=d, bias=False)  # add 1x1 conv

    def forward(self, x):
        """Apply convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x) + self.cv2(x)))

    def forward_fuse(self, x):
        """Apply fused convolution, batch normalization and activation to input tensor."""
        return self.act(self.bn(self.conv(x)))

    def fuse_convs(self):
        """Fuse parallel convolutions."""
        w = torch.zeros_like(self.conv.weight.data)
        i = [x // 2 for x in w.shape[2:]]  # centre position of the k x k kernel
        w[:, :, i[0]:i[0] + 1, i[1]:i[1] + 1] = self.cv2.weight.data.clone()  # put the 1x1 weights at the centre
        self.conv.weight.data += w
        self.__delattr__('cv2')
        self.forward = self.forward_fuse
```

The __init__ method initializes the convolution layer. Its parameters are:

- c1: number of input channels.
- c2: number of output channels.
- k: kernel size.
- s: stride.
- p: padding. When p is None, autopad computes it.
- g: number of groups.
- d: dilation rate.
- act: the activation. act=True selects the default SiLU, an nn.Module instance is used as-is, and any other value (for example False) gives nn.Identity(), i.e. no activation.

Conv therefore computes Conv2d, then BatchNorm2d, then the activation. The Conv2d has bias=False because the following BatchNorm already adds a learnable shift. Conv2 adds a parallel 1×1 convolution branch. Its fuse_convs method writes the 1×1 weights into the centre of the k×k kernel and deletes cv2, so that the same result is obtained with a single convolution.
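Why is fuse_convs exact? Convolution is linear. With stride 1 and the padding chosen by autopad, the 1×1 branch reads exactly the pixel that sits under the centre tap of the k×k kernel. The pure-Python sketch below checks this on a single channel, without PyTorch. The helpers conv2d_same and fuse_1x1_into_kxk are hypothetical names used only for illustration. The sketch compares two computations:

- summing a 3×3 branch and a 1×1 branch;
- running one 3×3 convolution whose centre weight has the 1×1 weight added.

In the real Conv2, the weights have shape (c2, c1/g, k, k), and the centre update is applied to every channel pair at once.

```python
def conv2d_same(x, w):
    """Hypothetical helper: stride-1 cross-correlation of 2D list x with an odd k x k kernel w,
    zero-padded by k // 2 (what autopad gives), so the output has the same size as x."""
    h, wd, k = len(x), len(x[0]), len(w)
    p = k // 2
    out = [[0.0] * wd for _ in range(h)]
    for i in range(h):
        for j in range(wd):
            acc = 0.0
            for u in range(k):
                for v in range(k):
                    r, c = i + u - p, j + v - p
                    if 0 <= r < h and 0 <= c < wd:  # zero padding outside the image
                        acc += w[u][v] * x[r][c]
            out[i][j] = acc
    return out


def fuse_1x1_into_kxk(w_k, w_1):
    """Hypothetical helper: add the 1x1 weight to the centre tap, as fuse_convs does per channel pair."""
    k = len(w_k)
    fused = [row[:] for row in w_k]  # copy so the original kernel is left untouched
    fused[k // 2][k // 2] += w_1[0][0]
    return fused


x = [[1.0, 2.0, 0.0, -1.0],
     [0.5, 3.0, 1.0, 2.0],
     [-2.0, 0.0, 1.5, 1.0],
     [1.0, -1.0, 2.0, 0.0]]
w3 = [[0.1, 0.2, 0.0],
      [-0.3, 0.5, 0.1],
      [0.0, 0.4, -0.2]]
w1 = [[0.7]]

# Two branches summed (Conv2.forward before BatchNorm) vs. one fused kernel (Conv2.forward_fuse).
two_branch = [[a + b for a, b in zip(ra, rb)]
              for ra, rb in zip(conv2d_same(x, w3), conv2d_same(x, w1))]
fused = conv2d_same(x, fuse_1x1_into_kxk(w3, w1))
max_diff = max(abs(a - b) for ra, rb in zip(two_branch, fused) for a, b in zip(ra, rb))
print(max_diff < 1e-9)  # True: identical up to floating-point rounding
```

Conv2.forward applies self.bn to the sum of both branches. Because BatchNorm only sees that sum, merging the branches before BatchNorm does not change the output.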

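```python
class LightConv(nn.Module):
    """
    Light convolution with args(ch_in, ch_out, kernel).

    https://github.com/PaddlePaddle/PaddleDetection/blob/develop/ppdet/modeling/backbones/hgnet_v2.py
    """

    def __init__(self, c1, c2, k=1, act=nn.ReLU()):
        """Initialize Conv layer with given arguments including activation."""
        super().__init__()
        self.conv1 = Conv(c1, c2, 1, act=False)  # 1x1 point-wise conv, no activation
        self.conv2 = DWConv(c2, c2, k, act=act)  # k x k depth-wise conv

    def forward(self, x):
        """Apply 2 convolutions to input tensor."""
        return self.conv2(self.conv1(x))


class DWConv(Conv):
    """Depth-wise convolution."""

    def __init__(self, c1, c2, k=1, s=1, d=1, act=True):  # ch_in, ch_out, kernel, stride, dilation, activation
        """Initialize Depth-wise convolution with given parameters."""
        super().__init__(c1, c2, k, s, g=math.gcd(c1, c2), d=d, act=act)
```

LightConv class:

LightConv is a lightweight convolution made of two stacked layers:

- conv1: a 1×1 Conv without activation (act=False) that maps c1 to c2 channels.
- conv2: a k×k DWConv, with ReLU as the default activation.

Pairing a point-wise 1×1 convolution with a depth-wise convolution is the factorization used by depth-wise separable convolutions. Here the point-wise step comes first.

DWConv class:

DWConv implements depth-wise convolution. Its __init__ calls the parent Conv.__init__ and sets the g (groups) argument to the greatest common divisor of the input and output channel counts. When c1 == c2, gcd(c1, c2) = c1, so each channel is convolved by its own filter. That per-channel filtering is what makes the convolution depth-wise.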
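The sketch below shows what the grouping saves. It counts convolution weights with the rule for nn.Conv2d with groups=g: c2 × (c1 / g) × k × k. Bias is disabled and BatchNorm parameters are ignored. conv_weight_count is a hypothetical helper, and the channel numbers are arbitrary examples.

```python
import math


def conv_weight_count(c1, c2, k, g=1):
    """Hypothetical helper: number of weights in nn.Conv2d(c1, c2, k, groups=g, bias=False)."""
    assert c1 % g == 0 and c2 % g == 0, "groups must divide both channel counts"
    return c2 * (c1 // g) * k * k


c1, c2, k = 64, 64, 3
standard = conv_weight_count(c1, c2, k)                       # Conv(64, 64, 3)
depthwise = conv_weight_count(c1, c2, k, g=math.gcd(c1, c2))  # DWConv(64, 64, 3): g = 64
light = conv_weight_count(c1, c2, 1) + depthwise              # LightConv(64, 64, 3): 1x1 Conv + 3x3 DWConv
print(standard, depthwise, light)  # 36864 576 4672

# With c1 != c2, DWConv uses g = gcd(c1, c2): here g = 64, so each group maps 1 channel to 2.
print(conv_weight_count(64, 128, 3, g=math.gcd(64, 128)))  # 1152
```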

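```python
class DWConvTranspose2d(nn.ConvTranspose2d):
    """Depth-wise transpose convolution."""

    def __init__(self, c1, c2, k=1, s=1, p1=0, p2=0):  # ch_in, ch_out, kernel, stride, padding, padding_out
        """Initialize DWConvTranspose2d class with given parameters."""
        super().__init__(c1, c2, k, s, p1, p2, groups=math.gcd(c1, c2))


class ConvTranspose(nn.Module):
    """Convolution transpose 2d layer."""

    default_act = nn.SiLU()  # default activation

    def __init__(self, c1, c2, k=2, s=2, p=0, bn=True, act=True):
        """Initialize ConvTranspose2d layer with batch normalization and activation function."""
        super().__init__()
        self.conv_transpose = nn.ConvTranspose2d(c1, c2, k, s, p, bias=not bn)  # bias only when there is no BN
        self.bn = nn.BatchNorm2d(c2) if bn else nn.Identity()
        self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

    def forward(self, x):
        """Applies transposed convolutions, batch normalization and activation to input."""
        return self.act(self.bn(self.conv_transpose(x)))

    def forward_fuse(self, x):
        """Applies activation and convolution transpose operation to input."""
        return self.act(self.conv_transpose(x))
```

DWConvTranspose2d class:

DWConvTranspose2d implements depth-wise transposed convolution. Its __init__ calls the parent nn.ConvTranspose2d.__init__ with three settings:

- p1 is passed as padding.
- p2 is passed as output_padding.
- groups is set to the greatest common divisor of the input and output channel counts.

ConvTranspose class:

ConvTranspose is a 2D transposed-convolution layer. Compared with a plain transposed convolution, it adds optional batch normalization and an activation. Its __init__ takes:

- c1: number of input channels.
- c2: number of output channels.
- k: kernel size.
- s: stride.
- p: padding.
- bn: whether to use batch normalization.
- act: the activation.

During initialization it creates three parts:

- conv_transpose: the transposed-convolution layer, with a bias only when bn=False.
- bn: the optional BatchNorm layer, or nn.Identity when disabled.
- act: the activation, chosen with the same rule as in Conv.

With the defaults k=2, s=2, p=0, the layer doubles the height and width of its input.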
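The output size of nn.ConvTranspose2d follows the formula in the PyTorch documentation. The sketch below uses it to show that ConvTranspose's defaults (k=2, s=2, p=0) exactly double the spatial size. It also shows how DWConvTranspose2d's p1 (padding) and p2 (output_padding) enter the calculation. tconv_out_size is a hypothetical helper name.

```python
def tconv_out_size(n, k, s=1, p=0, output_padding=0, d=1):
    """Hypothetical helper: one spatial dim of nn.ConvTranspose2d output (formula from the PyTorch docs)."""
    return (n - 1) * s - 2 * p + d * (k - 1) + output_padding + 1


print(tconv_out_size(20, k=2, s=2, p=0))                    # 40: ConvTranspose defaults double the size
print(tconv_out_size(20, k=4, s=2, p=1))                    # 40: e.g. DWConvTranspose2d(c1, c2, 4, 2, 1, 0)
print(tconv_out_size(20, k=3, s=2, p=1))                    # 39
print(tconv_out_size(20, k=3, s=2, p=1, output_padding=1))  # 40: p2 = 1 adds back the missing row/column
```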

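```python
class Focus(nn.Module):
    """Focus wh information into c-space."""

    def __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True):
        """Initializes Focus object with user defined channel, convolution, padding, group and activation values."""
        super().__init__()
        self.conv = Conv(c1 * 4, c2, k, s, p, g, act=act)  # input has 4x the channels after slicing
        # self.contract = Contract(gain=2)

    def forward(self, x):
        """
        Applies convolution to concatenated tensor and returns the output.

        Input shape is (b,c,h,w); the concatenated tensor is (b,4c,h/2,w/2) and, for s=1, the output is (b,c2,h/2,w/2).
        """
        return self.conv(torch.cat((x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]), 1))
        # return self.conv(self.contract(x))
```

Focus class (gathers width/height information into the channel dimension):

Focus inherits from nn.Module and moves spatial (width/height) information into the channel dimension. Its __init__ creates a single Conv layer, self.conv, with c1 * 4 input channels. The input needs four times the channels because the forward pass stacks four sub-sampled copies of the input along the channel dimension. The convolution then maps those 4·c1 channels to c2 output channels.

forward method:

forward implements the forward pass. The input tensor has shape (b, c, h, w): b is the batch size, c the number of channels, and h and w the height and width. Slicing with step 2 extracts four sub-images:

- even rows, even columns;
- odd rows, even columns;
- even rows, odd columns;
- odd rows, odd columns.

torch.cat joins these four tensors along dim 1 (the channel dimension), giving a tensor of shape (b, 4c, h/2, w/2). No pixel is discarded. self.conv is then applied to this tensor to produce the output.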
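Here is a tiny pure-Python illustration of the slicing in Focus.forward, applied to a single 4×4 channel. Each of the four pieces is 2×2, and together they still hold all 16 values. Spatial resolution is therefore traded for 4× the channels rather than thrown away. space_to_depth is a hypothetical name. The real layer performs the same slicing on (b, c, h, w) tensors and then applies self.conv.

```python
def space_to_depth(x):
    """Hypothetical helper: Focus-style slicing of one H x W channel given as nested lists (H, W even).

    Mirrors x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]:
    the first slice picks rows, the second picks columns.
    """
    def take(r0, c0):
        return [row[c0::2] for row in x[r0::2]]

    return [take(0, 0), take(1, 0), take(0, 1), take(1, 1)]


x = [[0, 1, 2, 3],
     [4, 5, 6, 7],
     [8, 9, 10, 11],
     [12, 13, 14, 15]]
for part in space_to_depth(x):
    print(part)
# [[0, 2], [8, 10]]
# [[4, 6], [12, 14]]
# [[1, 3], [9, 11]]
# [[5, 7], [13, 15]]
```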

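```python
class GhostConv(nn.Module):
    """Ghost Convolution https://github.com/huawei-noah/ghostnet."""

    def __init__(self, c1, c2, k=1, s=1, g=1, act=True):
        """Initializes the GhostConv object with input channels, output channels, kernel size, stride, groups and
        activation.
        """
        super().__init__()
        c_ = c2 // 2  # hidden channels
        self.cv1 = Conv(c1, c_, k, s, None, g, act=act)   # regular conv: the "primary" features
        self.cv2 = Conv(c_, c_, 5, 1, None, c_, act=act)  # 5x5 depth-wise conv (groups=c_): the cheap "ghost" features

    def forward(self, x):
        """Concatenate the cv1 features with the ghost features that cv2 derives from them."""
        y = self.cv1(x)
        return torch.cat((y, self.cv2(y)), 1)
```

Ghost Convolution is a lightweight convolution built from two convolution layers. Its goal is to produce c2 output channels more cheaply than a single convolution would:

- Only half of the channels come from a regular convolution.
- The other half are generated from those channels by an inexpensive depth-wise convolution. Its 5×5 kernel also enlarges the receptive field of that half.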

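How the two convolution layers are combined:

- __init__ creates the two layers:
  - self.cv1 = Conv(c1, c_, k, s, None, g, act=act) is a regular convolution that produces c_ = c2 // 2 channels.
  - self.cv2 = Conv(c_, c_, 5, 1, None, c_, act=act) is a 5×5 depth-wise convolution (groups = c_) applied to the output of cv1.
- forward combines the two layers in two steps:
  - y = self.cv1(x) passes the input tensor x through the first convolution self.cv1, giving the output tensor y.
  - return torch.cat((y, self.cv2(y)), 1) concatenates y and self.cv2(y) along the channel dimension. The final output has 2 · c_ channels, which equals c2 when c2 is even.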
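The sketch below gives a rough cost comparison between GhostConv and Conv. It counts only convolution weights, ignoring BatchNorm, using the grouped-convolution rule c2 × (c1 / g) × k × k. The helper names are hypothetical and the channel numbers are arbitrary. In these two cases, GhostConv(128, 128, k) needs roughly 51% to 60% of the weights of Conv(128, 128, k).

```python
def conv_weight_count(c1, c2, k, g=1):
    """Hypothetical helper: number of weights in nn.Conv2d(c1, c2, k, groups=g, bias=False)."""
    return c2 * (c1 // g) * k * k


def ghost_weight_count(c1, c2, k=1, g=1):
    """Hypothetical helper: conv weights of GhostConv(c1, c2, k, g=g) = cv1 (regular) + cv2 (5x5 depth-wise)."""
    c_ = c2 // 2
    return conv_weight_count(c1, c_, k, g) + conv_weight_count(c_, c_, 5, g=c_)


for k in (1, 3):
    print(k, conv_weight_count(128, 128, k), ghost_weight_count(128, 128, k))
# 1 16384 9792
# 3 147456 75328
```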