浅析 fuse kernel mmap write 过程及性能问题

前言

最近在项目里面用到了fuse文件系统,在使用过程中遇到了一个内核在做mmap write的一个bug,目前并没有从根本上解决这个bug,而是通过修改fuse kernel module的一些参数,绕开了这个bug。这里记录一下这个问题,并顺便梳理一下fuse在做mmap write的过程,包括如何与项目里的后台服务程序交互的。

背景知识

内存映射

在讲述这个问题之前,先来看看操作系统的mmap操作到底做了什么。

上图是每一个运行时程序(进程)的内存布局,整体上可以分为两大部分,内核态内存和用户态内存,我们平时开发的应用大都运行在用户态,代码、数据、栈、堆,都在内存布局的下方,而内存的上方高字节是内核的代码、数据、栈、堆。重点是内存跟文件的映射部分。内存可以看作是磁盘的缓存,一般的read操作,都会先把数据从磁盘拷贝到内核的page cache部分,以4KB页为单位缓存磁盘页,然后再从page cache拷贝到用户态内存的堆或栈里分配的buffer中;一般的写操作,则从用户态内存的堆或栈里分配的buffer中,先拷贝到page cache,最后再异步地写到磁盘里。之所以这里有内存的两次拷贝,是因为内核空间是映射到每个用户进程的虚拟内存里的,而用户态内存大部分情况下是进程独立的,为了每个进程都能用到文件缓存,所以Page cache要放到内核空间,所以存在着两次拷贝的性能损耗。

针对这个两次拷贝的性能损失,操作系统提供了mmap这么一个对大文件读写性能优化的手段。mmap能够让用户态的某段内存与文件的某段数据建立映射关系,对于使用者来说,不需要用write,read这两个对文件读写的接口,而是可以用memcpy和memset对内存操作的接口,通过直接读写内存来修改和读取文件内容。mmap最大的优点是:由于它同样用page与文件一一映射了,与page cache有异曲同工之妙,所以能够与page cache直接关联起来,去掉了两次拷贝的操作,读写仍然是磁盘和page cache之间,但内存映射区域可以直接使用page cache,免去了内核态与用户态之间的拷贝。

mmap 有它的缺陷:

-

内存映射以后,不能调整空间大小,就是说文件大小要固定,只能有修改操作,不能有追加操作。一般来说是用于预先知道文件大小的场景,先用truncate预分配空间,然后mmap映射到内存。

-

初始化mmap开销较大,小文件读写比不上直接用read,write调用,适合大文件或者需要频繁读写同一段数据的场景。

fuse网络文件系统架构

一般的文件系统都是运行在内核态的(xfs,ext4,ext3,ext2…),程序遵循用户态->内核态->用户态这么一个状态切换,以mmap write操作为例,用户态程序用memcpy这个c库函数,发现拷贝的区域是内存映射区域,会陷入内核态,数据从用户态拷贝到page cache里,并把相应page标记为dirty,等待合适的时候再真正写到磁盘或者网络上,然后内核马上返回用户态,给用户返回写的结果。

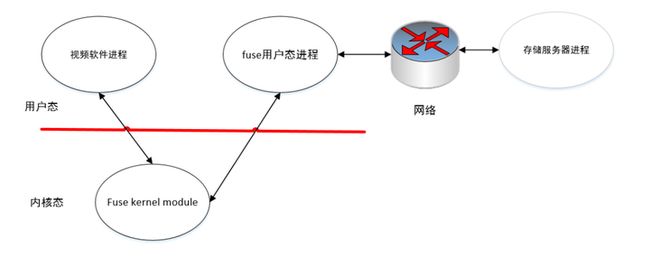

我们项目里用到的fuse文件系统,是一个用户态文件系统,可想而知性能比较差一点,但胜在开发门槛较低。使用fuse操作文件,程序遵循用户态->内核态->用户态->内核态->用户态这么一个状态切换。

上图展示了我们项目的客户端部分的系统架构。需要读写操作的第三方软件程序发起读写请求,陷入内核态,fuse有一个kernel module扮演生产者的角色,接收请求,并封装好请求数据,写到一个字符设备上,并唤醒用户态的fuse进程。用户态fuse进程作为消费者检测字符设备上是否有请求到达,接收请求,通过socket发到网络上。实际的读写操作由服务器进程处理,并把结果走socket返回给fuse用户态进程。fuse用户态进程接到响应后,把返回数据封装好写到字符设备上,并唤醒内核。fuse kernel module被唤醒后,从字符设备上读取数据,解析后返回给第三方软件。

相关视频推荐

c/c++存储开发需要掌握哪些知识,自上而下介绍存储架构体系

存储的三座大山,磁盘,内核文件系统以及分布式文件系统

存储开发,linux内核文件系统的实现

Linux C/C++开发(后端/音视频/游戏/嵌入式/高性能网络/存储/基础架构/安全)

需要C/C++ Linux服务器架构师学习资料加qun812855908获取(资料包括C/C++,Linux,golang技术,Nginx,ZeroMQ,MySQL,Redis,fastdfs,MongoDB,ZK,流媒体,CDN,P2P,K8S,Docker,TCP/IP,协程,DPDK,ffmpeg等),免费分享

fuse mmap write 性能问题

现象

某不知名第三方视频软件与我们的系统对接,把我们系统作为存储后端做视频存储。该软件在启动时候需要mmap一个或多个10MB的索引文件,然后读一部分数据出来,再写一部分数据回去。这个过程如果mmap的是本地文件系统(xfs),每个索引文件读写包括计算大概需要10~20ms。但接上了fuse文件系统后,有一定概率会出现mmap阻塞,这个mmap读写过程需要3分钟甚至更长!这样的话,一旦索引文件多起来(每1GB的视频文件需要10MB的索引文件),启动速度就变得不可接受了。

首先,我们排除掉了后台服务程序的问题,通过客户端的日志,发现每一个请求都是马上返回,但每个请求与请求之间有几秒的间隙,所以肯定是上层调用出了问题。

然后,我们排除了fuse用户态程序的问题。第一,我们先尝试用fuse官方的passthrough程序来做同样的mmap测试,发现仍然存在这个问题,基本排除是用户态的问题。第二,前面说到,用户态fuse进程是作为消费者,等待字符设备的数据到达。通过分析日志,发现字符设备上每个到达请求之间有几秒的间隙,所以确定问题是出在生产者fuse kernel module身上。

通过trace内核的执行,我们最终发现,罪魁祸首就在balance_dirty_pages()函数内,以下是trace的日志:

<...>-129940 [000] 110827.472935: funcgraph_entry: | balance_dirty_pages.isra.20() {

<...>-129940 [000] 110827.472935: funcgraph_entry: | global_dirty_limits() {

<...>-129940 [000] 110827.472936: funcgraph_entry: 0.084 us | global_dirtyable_memory();

<...>-129940 [000] 110827.472936: funcgraph_exit: 0.757 us | }

<...>-129940 [000] 110827.472937: funcgraph_entry: 0.244 us | bdi_dirty_limit();

<...>-129940 [000] 110827.472938: funcgraph_entry: 0.070 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.472939: funcgraph_entry: 0.086 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.472940: funcgraph_entry: 0.070 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.472941: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.472942: funcgraph_entry: 0.070 us | writeback_in_progress();

<...>-129940 [000] 110827.472942: funcgraph_entry: | bdi_start_background_writeback() {

<...>-129940 [000] 110827.472943: funcgraph_entry: | bdi_wakeup_thread() {

<...>-129940 [000] 110827.472943: funcgraph_entry: | _raw_spin_lock_bh() {

<...>-129940 [000] 110827.472943: funcgraph_entry: 0.070 us | local_bh_disable();

<...>-129940 [000] 110827.472944: funcgraph_exit: 0.683 us | }

<...>-129940 [000] 110827.472944: funcgraph_entry: | mod_delayed_work_on() {

<...>-129940 [000] 110827.472944: funcgraph_entry: | try_to_grab_pending() {

<...>-129940 [000] 110827.472945: funcgraph_entry: | del_timer() {

<...>-129940 [000] 110827.472945: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.472945: funcgraph_entry: 0.084 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.472946: funcgraph_exit: 0.694 us | }

<...>-129940 [000] 110827.472947: funcgraph_entry: 0.150 us | detach_if_pending();

<...>-129940 [000] 110827.472948: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.472948: funcgraph_exit: 3.420 us | }

<...>-129940 [000] 110827.472949: funcgraph_exit: 4.120 us | }

<...>-129940 [000] 110827.472949: funcgraph_entry: | __queue_delayed_work() {

<...>-129940 [000] 110827.472950: funcgraph_entry: | __queue_work() {

<...>-129940 [000] 110827.472950: funcgraph_entry: 0.187 us | get_work_pool();

<...>-129940 [000] 110827.472951: funcgraph_entry: 0.083 us | _raw_spin_lock();

<...>-129940 [000] 110827.472951: funcgraph_entry: | insert_work() {

<...>-129940 [000] 110827.472952: funcgraph_entry: 0.077 us | get_pwq.isra.17();

<...>-129940 [000] 110827.472952: funcgraph_entry: | wake_up_worker() {

<...>-129940 [000] 110827.472953: funcgraph_entry: | wake_up_process() {

<...>-129940 [000] 110827.472953: funcgraph_entry: | try_to_wake_up() {

<...>-129940 [000] 110827.472953: funcgraph_entry: 0.077 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.472954: funcgraph_entry: 0.100 us | task_waking_fair();

<...>-129940 [000] 110827.472954: funcgraph_entry: | select_task_rq_fair() {

<...>-129940 [000] 110827.472955: funcgraph_entry: 0.070 us | source_load();

<...>-129940 [000] 110827.472955: funcgraph_entry: 0.070 us | target_load();

<...>-129940 [000] 110827.472956: funcgraph_entry: 0.087 us | effective_load.isra.40();

<...>-129940 [000] 110827.472957: funcgraph_entry: 0.070 us | effective_load.isra.40();

<...>-129940 [000] 110827.472957: funcgraph_entry: 0.077 us | idle_cpu();

<...>-129940 [000] 110827.472958: funcgraph_exit: 3.193 us | }

<...>-129940 [000] 110827.472958: funcgraph_entry: 0.073 us | _raw_spin_lock();

<...>-129940 [000] 110827.472959: funcgraph_entry: | ttwu_do_activate.constprop.91() {

<...>-129940 [000] 110827.472959: funcgraph_entry: | activate_task() {

<...>-129940 [000] 110827.472959: funcgraph_entry: | enqueue_task() {

<...>-129940 [000] 110827.472960: funcgraph_entry: 0.087 us | update_rq_clock.part.79();

<...>-129940 [000] 110827.472960: funcgraph_entry: | enqueue_task_fair() {

<...>-129940 [000] 110827.472960: funcgraph_entry: | enqueue_entity() {

<...>-129940 [000] 110827.472961: funcgraph_entry: 0.077 us | update_curr();

<...>-129940 [000] 110827.472961: funcgraph_entry: 0.090 us | __compute_runnable_contrib.part.57();

<...>-129940 [000] 110827.472962: funcgraph_entry: 0.077 us | update_cfs_rq_blocked_load();

<...>-129940 [000] 110827.472963: funcgraph_entry: 0.106 us | account_entity_enqueue();

<...>-129940 [000] 110827.472963: funcgraph_entry: 0.070 us | update_cfs_shares();

<...>-129940 [000] 110827.472964: funcgraph_entry: 0.070 us | place_entity();

<...>-129940 [000] 110827.472964: funcgraph_entry: 0.100 us | __enqueue_entity();

<...>-129940 [000] 110827.472965: funcgraph_exit: 4.247 us | }

<...>-129940 [000] 110827.472965: funcgraph_entry: 0.073 us | hrtick_update();

<...>-129940 [000] 110827.472966: funcgraph_exit: 5.478 us | }

<...>-129940 [000] 110827.472966: funcgraph_exit: 6.618 us | }

<...>-129940 [000] 110827.472966: funcgraph_exit: 7.148 us | }

<...>-129940 [000] 110827.472967: funcgraph_entry: | wq_worker_waking_up() {

<...>-129940 [000] 110827.472967: funcgraph_entry: 0.090 us | kthread_data();

<...>-129940 [000] 110827.472968: funcgraph_exit: 0.687 us | }

<...>-129940 [000] 110827.472968: funcgraph_entry: | ttwu_do_wakeup() {

<...>-129940 [000] 110827.472968: funcgraph_entry: | check_preempt_curr() {

<...>-129940 [000] 110827.472969: funcgraph_entry: 0.160 us | resched_task();

<...>-129940 [000] 110827.472969: funcgraph_exit: 0.766 us | }

<...>-129940 [000] 110827.472970: funcgraph_exit: 1.407 us | }

<...>-129940 [000] 110827.472970: funcgraph_exit: + 10.855 us | }

<...>-129940 [000] 110827.472970: funcgraph_entry: 0.060 us | _raw_spin_unlock();

<...>-129940 [000] 110827.472971: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.472971: funcgraph_exit: + 18.041 us | }

<...>-129940 [000] 110827.472972: funcgraph_exit: + 18.632 us | }

<...>-129940 [000] 110827.472972: funcgraph_exit: + 19.245 us | }

<...>-129940 [000] 110827.472972: funcgraph_exit: + 20.442 us | }

<...>-129940 [000] 110827.472972: funcgraph_entry: 0.070 us | _raw_spin_unlock();

<...>-129940 [000] 110827.472973: funcgraph_exit: + 23.136 us | }

<...>-129940 [000] 110827.472973: funcgraph_exit: + 23.802 us | }

<...>-129940 [000] 110827.472974: funcgraph_exit: + 29.159 us | }

<...>-129940 [000] 110827.472974: funcgraph_entry: | _raw_spin_unlock_bh() {

<...>-129940 [000] 110827.472974: funcgraph_entry: 0.130 us | local_bh_enable_ip();

<...>-129940 [000] 110827.472975: funcgraph_exit: 0.723 us | }

<...>-129940 [000] 110827.472975: funcgraph_exit: + 32.273 us | }

<...>-129940 [000] 110827.472975: funcgraph_exit: + 32.886 us | }

<...>-129940 [000] 110827.472976: funcgraph_entry: 0.067 us | _raw_spin_lock();

<...>-129940 [000] 110827.472976: funcgraph_entry: | __bdi_update_bandwidth() {

<...>-129940 [000] 110827.472977: funcgraph_entry: 0.153 us | _raw_spin_lock();

<...>-129940 [000] 110827.472977: funcgraph_entry: 0.070 us | _raw_spin_unlock();

<...>-129940 [000] 110827.472978: funcgraph_entry: 0.120 us | bdi_position_ratio.isra.13();

<...>-129940 [000] 110827.472979: funcgraph_entry: 0.144 us | bdi_dirty_limit();

<...>-129940 [000] 110827.472980: funcgraph_exit: 3.504 us | }

<...>-129940 [000] 110827.472980: funcgraph_entry: 0.064 us | _raw_spin_unlock();

<...>-129940 [000] 110827.472981: funcgraph_entry: 0.096 us | bdi_position_ratio.isra.13();

<...>-129940 [000] 110827.472982: funcgraph_entry: 0.173 us | bdi_min_pause.isra.14();

<...>-129940 [000] 110827.472982: funcgraph_entry: | io_schedule_timeout() {

<...>-129940 [000] 110827.472983: funcgraph_entry: | __delayacct_blkio_start() {

<...>-129940 [000] 110827.472983: funcgraph_entry: 0.143 us | ktime_get_ts64();

<...>-129940 [000] 110827.472984: funcgraph_exit: 0.710 us | }

<...>-129940 [000] 110827.472984: funcgraph_entry: | schedule_timeout() {

<...>-129940 [000] 110827.472984: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.472985: funcgraph_entry: 0.084 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.472985: funcgraph_exit: 0.700 us | }

<...>-129940 [000] 110827.472985: funcgraph_entry: 0.070 us | detach_if_pending();

<...>-129940 [000] 110827.472986: funcgraph_entry: 0.074 us | get_nohz_timer_target();

<...>-129940 [000] 110827.472987: funcgraph_entry: | internal_add_timer() {

<...>-129940 [000] 110827.472987: funcgraph_entry: 0.090 us | __internal_add_timer();

<...>-129940 [000] 110827.472988: funcgraph_exit: 0.710 us | }

<...>-129940 [000] 110827.472988: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.472988: funcgraph_entry: | schedule() {

<...>-129940 [000] 110827.472989: funcgraph_entry: | __schedule() {

<...>-129940 [000] 110827.472989: funcgraph_entry: 0.074 us | rcu_note_context_switch();

<...>-129940 [000] 110827.472990: funcgraph_entry: 0.067 us | _raw_spin_lock_irq();

<...>-129940 [000] 110827.472990: funcgraph_entry: | deactivate_task() {

<...>-129940 [000] 110827.472990: funcgraph_entry: | dequeue_task() {

<...>-129940 [000] 110827.472991: funcgraph_entry: 0.093 us | update_rq_clock.part.79();

<...>-129940 [000] 110827.472991: funcgraph_entry: | dequeue_task_fair() {

<...>-129940 [000] 110827.472992: funcgraph_entry: | dequeue_entity() {

<...>-129940 [000] 110827.472992: funcgraph_entry: | update_curr() {

<...>-129940 [000] 110827.472992: funcgraph_entry: 0.073 us | update_min_vruntime();

<...>-129940 [000] 110827.472993: funcgraph_entry: 0.163 us | cpuacct_charge();

<...>-129940 [000] 110827.472994: funcgraph_exit: 1.356 us | }

<...>-129940 [000] 110827.472994: funcgraph_entry: 0.103 us | update_cfs_rq_blocked_load();

<...>-129940 [000] 110827.472995: funcgraph_entry: 0.060 us | clear_buddies();

<...>-129940 [000] 110827.472995: funcgraph_entry: 0.090 us | account_entity_dequeue();

<...>-129940 [000] 110827.472996: funcgraph_entry: 0.073 us | update_min_vruntime();

<...>-129940 [000] 110827.472996: funcgraph_entry: 0.067 us | update_cfs_shares();

<...>-129940 [000] 110827.472997: funcgraph_exit: 4.947 us | }

<...>-129940 [000] 110827.472997: funcgraph_entry: 0.067 us | hrtick_update();

<...>-129940 [000] 110827.472998: funcgraph_exit: 6.061 us | }

<...>-129940 [000] 110827.472998: funcgraph_exit: 7.271 us | }

<...>-129940 [000] 110827.472998: funcgraph_exit: 7.837 us | }

<...>-129940 [000] 110827.472999: funcgraph_entry: | idle_balance() {

<...>-129940 [000] 110827.472999: funcgraph_entry: 0.067 us | msecs_to_jiffies();

<...>-129940 [000] 110827.473000: funcgraph_exit: 0.693 us | }

<...>-129940 [000] 110827.473000: funcgraph_entry: 0.096 us | put_prev_task_fair();

<...>-129940 [000] 110827.473000: funcgraph_entry: 0.120 us | pick_next_task_fair();

<...>-129940 [000] 110827.473001: funcgraph_entry: 0.064 us | pick_next_task_idle();

<...>-129940 [000] 110827.557928: funcgraph_entry: 0.257 us | finish_task_switch();

<...>-129940 [000] 110827.557930: funcgraph_exit: # 84940.770 us | }

<...>-129940 [000] 110827.557930: funcgraph_exit: # 84941.446 us | }

<...>-129940 [000] 110827.557930: funcgraph_entry: | del_timer_sync() {

<...>-129940 [000] 110827.557931: funcgraph_entry: | try_to_del_timer_sync() {

<...>-129940 [000] 110827.557931: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.557931: funcgraph_entry: 0.087 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557932: funcgraph_exit: 0.667 us | }

<...>-129940 [000] 110827.557932: funcgraph_entry: 0.084 us | detach_if_pending();

<...>-129940 [000] 110827.557933: funcgraph_entry: 0.107 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557934: funcgraph_exit: 2.520 us | }

<...>-129940 [000] 110827.557934: funcgraph_exit: 3.187 us | }

<...>-129940 [000] 110827.557934: funcgraph_exit: # 84950.076 us | }

<...>-129940 [000] 110827.557935: funcgraph_entry: | __delayacct_blkio_end() {

<...>-129940 [000] 110827.557935: funcgraph_entry: | delayacct_end() {

<...>-129940 [000] 110827.557935: funcgraph_entry: 0.134 us | ktime_get_ts64();

<...>-129940 [000] 110827.557936: funcgraph_entry: 0.106 us | set_normalized_timespec();

<...>-129940 [000] 110827.557937: funcgraph_entry: 0.090 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557937: funcgraph_entry: 0.103 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557938: funcgraph_exit: 2.580 us | }

<...>-129940 [000] 110827.557938: funcgraph_exit: 3.170 us | }

<...>-129940 [000] 110827.557939: funcgraph_exit: # 84956.297 us | }

<...>-129940 [000] 110827.557939: funcgraph_entry: 0.143 us | writeback_in_progress();

<...>-129940 [000] 110827.557940: funcgraph_exit: # 85004.885 us | }

<...>-129940 [000] 110827.557953: funcgraph_entry: | balance_dirty_pages.isra.20() {

<...>-129940 [000] 110827.557953: funcgraph_entry: | global_dirty_limits() {

<...>-129940 [000] 110827.557953: funcgraph_entry: 0.083 us | global_dirtyable_memory();

<...>-129940 [000] 110827.557954: funcgraph_exit: 0.910 us | }

<...>-129940 [000] 110827.557955: funcgraph_entry: 0.260 us | bdi_dirty_limit();

<...>-129940 [000] 110827.557956: funcgraph_entry: 0.066 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557958: funcgraph_entry: 0.087 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557958: funcgraph_entry: 0.070 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557959: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557960: funcgraph_entry: 0.066 us | writeback_in_progress();

<...>-129940 [000] 110827.557961: funcgraph_entry: | bdi_start_background_writeback() {

<...>-129940 [000] 110827.557961: funcgraph_entry: | bdi_wakeup_thread() {

<...>-129940 [000] 110827.557962: funcgraph_entry: | _raw_spin_lock_bh() {

<...>-129940 [000] 110827.557962: funcgraph_entry: 0.073 us | local_bh_disable();

<...>-129940 [000] 110827.557963: funcgraph_exit: 0.770 us | }

<...>-129940 [000] 110827.557963: funcgraph_entry: | mod_delayed_work_on() {

<...>-129940 [000] 110827.557964: funcgraph_entry: | try_to_grab_pending() {

<...>-129940 [000] 110827.557964: funcgraph_entry: | del_timer() {

<...>-129940 [000] 110827.557964: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.557964: funcgraph_entry: 0.113 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557965: funcgraph_exit: 0.744 us | }

<...>-129940 [000] 110827.557965: funcgraph_entry: 0.196 us | detach_if_pending();

<...>-129940 [000] 110827.557966: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557967: funcgraph_exit: 2.597 us | }

<...>-129940 [000] 110827.557967: funcgraph_exit: 3.294 us | }

<...>-129940 [000] 110827.557967: funcgraph_entry: | __queue_delayed_work() {

<...>-129940 [000] 110827.557968: funcgraph_entry: | __queue_work() {

<...>-129940 [000] 110827.557968: funcgraph_entry: 0.183 us | get_work_pool();

<...>-129940 [000] 110827.557969: funcgraph_entry: 0.100 us | _raw_spin_lock();

<...>-129940 [000] 110827.557969: funcgraph_entry: | insert_work() {

<...>-129940 [000] 110827.557970: funcgraph_entry: 0.080 us | get_pwq.isra.17();

<...>-129940 [000] 110827.557970: funcgraph_entry: | wake_up_worker() {

<...>-129940 [000] 110827.557971: funcgraph_entry: | wake_up_process() {

<...>-129940 [000] 110827.557971: funcgraph_entry: | try_to_wake_up() {

<...>-129940 [000] 110827.557971: funcgraph_entry: 0.093 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557972: funcgraph_entry: 0.103 us | task_waking_fair();

<...>-129940 [000] 110827.557972: funcgraph_entry: | select_task_rq_fair() {

<...>-129940 [000] 110827.557973: funcgraph_entry: 0.093 us | source_load();

<...>-129940 [000] 110827.557974: funcgraph_entry: 0.063 us | target_load();

<...>-129940 [000] 110827.557974: funcgraph_entry: 0.086 us | effective_load.isra.40();

<...>-129940 [000] 110827.557975: funcgraph_entry: 0.070 us | effective_load.isra.40();

<...>-129940 [000] 110827.557975: funcgraph_entry: 0.096 us | idle_cpu();

<...>-129940 [000] 110827.557976: funcgraph_exit: 3.183 us | }

<...>-129940 [000] 110827.557976: funcgraph_entry: 0.104 us | _raw_spin_lock();

<...>-129940 [000] 110827.557977: funcgraph_entry: | ttwu_do_activate.constprop.91() {

<...>-129940 [000] 110827.557977: funcgraph_entry: | activate_task() {

<...>-129940 [000] 110827.557977: funcgraph_entry: | enqueue_task() {

<...>-129940 [000] 110827.557978: funcgraph_entry: 0.083 us | update_rq_clock.part.79();

<...>-129940 [000] 110827.557978: funcgraph_entry: | enqueue_task_fair() {

<...>-129940 [000] 110827.557979: funcgraph_entry: | enqueue_entity() {

<...>-129940 [000] 110827.557979: funcgraph_entry: 0.090 us | update_curr();

<...>-129940 [000] 110827.557979: funcgraph_entry: 0.093 us | __compute_runnable_contrib.part.57();

<...>-129940 [000] 110827.557980: funcgraph_entry: 0.120 us | update_cfs_rq_blocked_load();

<...>-129940 [000] 110827.557981: funcgraph_entry: 0.103 us | account_entity_enqueue();

<...>-129940 [000] 110827.557981: funcgraph_entry: 0.067 us | update_cfs_shares();

<...>-129940 [000] 110827.557982: funcgraph_entry: 0.073 us | place_entity();

<...>-129940 [000] 110827.557982: funcgraph_entry: 0.107 us | __enqueue_entity();

<...>-129940 [000] 110827.557983: funcgraph_exit: 4.223 us | }

<...>-129940 [000] 110827.557983: funcgraph_entry: 0.073 us | hrtick_update();

<...>-129940 [000] 110827.557984: funcgraph_exit: 5.357 us | }

<...>-129940 [000] 110827.557984: funcgraph_exit: 6.517 us | }

<...>-129940 [000] 110827.557984: funcgraph_exit: 7.044 us | }

<...>-129940 [000] 110827.557985: funcgraph_entry: | wq_worker_waking_up() {

<...>-129940 [000] 110827.557985: funcgraph_entry: 0.093 us | kthread_data();

<...>-129940 [000] 110827.557986: funcgraph_exit: 0.693 us | }

<...>-129940 [000] 110827.557986: funcgraph_entry: | ttwu_do_wakeup() {

<...>-129940 [000] 110827.557986: funcgraph_entry: | check_preempt_curr() {

<...>-129940 [000] 110827.557986: funcgraph_entry: 0.173 us | resched_task();

<...>-129940 [000] 110827.557988: funcgraph_exit: 1.330 us | }

<...>-129940 [000] 110827.557988: funcgraph_exit: 2.014 us | }

<...>-129940 [000] 110827.557988: funcgraph_exit: + 11.394 us | }

<...>-129940 [000] 110827.557989: funcgraph_entry: 0.066 us | _raw_spin_unlock();

<...>-129940 [000] 110827.557989: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.557990: funcgraph_exit: + 18.879 us | }

<...>-129940 [000] 110827.557990: funcgraph_exit: + 19.555 us | }

<...>-129940 [000] 110827.557991: funcgraph_exit: + 20.251 us | }

<...>-129940 [000] 110827.557991: funcgraph_exit: + 21.555 us | }

<...>-129940 [000] 110827.557992: funcgraph_entry: 0.066 us | _raw_spin_unlock();

<...>-129940 [000] 110827.557992: funcgraph_exit: + 24.299 us | }

<...>-129940 [000] 110827.557993: funcgraph_exit: + 25.026 us | }

<...>-129940 [000] 110827.557993: funcgraph_exit: + 29.459 us | }

<...>-129940 [000] 110827.557993: funcgraph_entry: | _raw_spin_unlock_bh() {

<...>-129940 [000] 110827.557993: funcgraph_entry: 0.137 us | local_bh_enable_ip();

<...>-129940 [000] 110827.557994: funcgraph_exit: 0.730 us | }

<...>-129940 [000] 110827.557994: funcgraph_exit: + 32.846 us | }

<...>-129940 [000] 110827.557995: funcgraph_exit: + 33.550 us | }

<...>-129940 [000] 110827.557995: funcgraph_entry: 0.127 us | bdi_position_ratio.isra.13();

<...>-129940 [000] 110827.557996: funcgraph_entry: 0.173 us | bdi_min_pause.isra.14();

<...>-129940 [000] 110827.557997: funcgraph_entry: | io_schedule_timeout() {

<...>-129940 [000] 110827.557997: funcgraph_entry: | __delayacct_blkio_start() {

<...>-129940 [000] 110827.557997: funcgraph_entry: 0.117 us | ktime_get_ts64();

<...>-129940 [000] 110827.557998: funcgraph_exit: 0.667 us | }

<...>-129940 [000] 110827.557998: funcgraph_entry: | schedule_timeout() {

<...>-129940 [000] 110827.557998: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.557999: funcgraph_entry: 0.070 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.557999: funcgraph_exit: 0.607 us | }

<...>-129940 [000] 110827.558000: funcgraph_entry: 0.074 us | detach_if_pending();

<...>-129940 [000] 110827.558000: funcgraph_entry: 0.073 us | get_nohz_timer_target();

<...>-129940 [000] 110827.558001: funcgraph_entry: | internal_add_timer() {

<...>-129940 [000] 110827.558001: funcgraph_entry: 0.104 us | __internal_add_timer();

<...>-129940 [000] 110827.558002: funcgraph_exit: 0.660 us | }

<...>-129940 [000] 110827.558002: funcgraph_entry: 0.090 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.558002: funcgraph_entry: | schedule() {

<...>-129940 [000] 110827.558003: funcgraph_entry: | __schedule() {

<...>-129940 [000] 110827.558003: funcgraph_entry: 0.073 us | rcu_note_context_switch();

<...>-129940 [000] 110827.558004: funcgraph_entry: 0.067 us | _raw_spin_lock_irq();

<...>-129940 [000] 110827.558004: funcgraph_entry: | deactivate_task() {

<...>-129940 [000] 110827.558005: funcgraph_entry: | dequeue_task() {

<...>-129940 [000] 110827.558005: funcgraph_entry: 0.093 us | update_rq_clock.part.79();

<...>-129940 [000] 110827.558006: funcgraph_entry: | dequeue_task_fair() {

<...>-129940 [000] 110827.558006: funcgraph_entry: | dequeue_entity() {

<...>-129940 [000] 110827.558006: funcgraph_entry: | update_curr() {

<...>-129940 [000] 110827.558006: funcgraph_entry: 0.107 us | update_min_vruntime();

<...>-129940 [000] 110827.558007: funcgraph_entry: 0.154 us | cpuacct_charge();

<...>-129940 [000] 110827.558008: funcgraph_exit: 1.517 us | }

<...>-129940 [000] 110827.558008: funcgraph_entry: 0.070 us | update_cfs_rq_blocked_load();

<...>-129940 [000] 110827.558009: funcgraph_entry: 0.067 us | clear_buddies();

<...>-129940 [000] 110827.558009: funcgraph_entry: 0.143 us | account_entity_dequeue();

<...>-129940 [000] 110827.558010: funcgraph_entry: 0.067 us | update_min_vruntime();

<...>-129940 [000] 110827.558011: funcgraph_entry: 0.066 us | update_cfs_shares();

<...>-129940 [000] 110827.558011: funcgraph_exit: 5.031 us | }

<...>-129940 [000] 110827.558012: funcgraph_entry: 0.067 us | hrtick_update();

<...>-129940 [000] 110827.558012: funcgraph_exit: 6.301 us | }

<...>-129940 [000] 110827.558012: funcgraph_exit: 7.551 us | }

<...>-129940 [000] 110827.558013: funcgraph_exit: 8.138 us | }

<...>-129940 [000] 110827.558013: funcgraph_entry: | idle_balance() {

<...>-129940 [000] 110827.558013: funcgraph_entry: 0.066 us | msecs_to_jiffies();

<...>-129940 [000] 110827.558014: funcgraph_exit: 0.734 us | }

<...>-129940 [000] 110827.558014: funcgraph_entry: 0.134 us | put_prev_task_fair();

<...>-129940 [000] 110827.558015: funcgraph_entry: 0.103 us | pick_next_task_fair();

<...>-129940 [000] 110827.558016: funcgraph_entry: 0.067 us | pick_next_task_idle();

<...>-129940 [000] 110827.642941: funcgraph_entry: 0.250 us | finish_task_switch();

<...>-129940 [000] 110827.642942: funcgraph_exit: # 84939.279 us | }

<...>-129940 [000] 110827.642943: funcgraph_exit: # 84939.935 us | }

<...>-129940 [000] 110827.642943: funcgraph_entry: | del_timer_sync() {

<...>-129940 [000] 110827.642943: funcgraph_entry: | try_to_del_timer_sync() {

<...>-129940 [000] 110827.642944: funcgraph_entry: | lock_timer_base.isra.33() {

<...>-129940 [000] 110827.642944: funcgraph_entry: 0.087 us | _raw_spin_lock_irqsave();

<...>-129940 [000] 110827.642945: funcgraph_exit: 1.417 us | }

<...>-129940 [000] 110827.642946: funcgraph_entry: 0.083 us | detach_if_pending();

<...>-129940 [000] 110827.642946: funcgraph_entry: 0.110 us | _raw_spin_unlock_irqrestore();

<...>-129940 [000] 110827.642947: funcgraph_exit: 3.454 us | }

<...>-129940 [000] 110827.642947: funcgraph_exit: 4.197 us | }

<...>-129940 [000] 110827.642948: funcgraph_exit: # 84949.467 us | }balance_dirty_pages 是控制什么时候触发写回的一个手段,可见问题出在 page cache write back 上。从trace log中发现,每次调用balance_dirty_pages都会消耗85ms左右的时间,而这个balance_dirty_pages会反复调用,并且都消耗大量的时间,推测是因为每次调用完了之后,并没有实际把脏页写回,所以balance_dirty_pages_ratelimited的判断每次都会成立。后面看一看内核的write back实现细节是怎么样的。

write back 过程

fuse mmap write是采用了其他文件系统常用的write back机制,就是写page cache成功以后,把page标记为dirty,直接返回,实际的写操作是由内核异步完成。write back机制是每个文件系统独立完成,一般情况下由以下条件触发:

页面Cache变得太满,并且还需要更多的内存页,或者脏页的数量非常大;脏页停留在内存中的时间过长;某个进程要求更改的块设备或某个文件数据刷新,通常调用sync系统来实现。

文件系统控制写回的数据结构–bdi设备

bdi是backing device info的缩写,每一个文件系统在内核里都维护这么一个结构,管理实际的write back。fuse内核模块在初始化的时候,会注册一个bdi。

static int fuse_bdi_init(struct fuse_conn *fc, struct super_block *sb)

{

int err;

fc->bdi.name = "fuse";

fc->bdi.ra_pages = (VM_MAX_READAHEAD * 1024) / PAGE_CACHE_SIZE;

// fc->bdi.ra_pages = 2560;

/* fuse does it's own writeback accounting */

fc->bdi.capabilities = BDI_CAP_NO_ACCT_WB | BDI_CAP_STRICTLIMIT;

// fc->bdi.capabilities = BDI_CAP_NO_ACCT_WB;

printk(KERN_INFO "fuse bdi version %i.%i)\n",

MAJOR(fc->dev), MINOR(fc->dev));

err = bdi_init(&fc->bdi);

if (err)

return err;

fc->bdi_initialized = 1;

if (sb->s_bdev) {

err = bdi_register(&fc->bdi, NULL, "%u:%u-fuseblk",

MAJOR(fc->dev), MINOR(fc->dev));

} else {

err = bdi_register_dev(&fc->bdi, fc->dev);

}

if (err)

return err;

/*

* For a single fuse filesystem use max 1% of dirty +

* writeback threshold.

*

* This gives about 1M of write buffer for memory maps on a

* machine with 1G and 10% dirty_ratio, which should be more

* than enough.

*

* Privileged users can raise it by writing to

*

* /sys/class/bdi//max_ratio

*/

bdi_set_max_ratio(&fc->bdi, 1);

return 0;

}

int bdi_init(struct backing_dev_info *bdi)

{

...

bdi_wb_init(&bdi->wb, bdi);

...

}

EXPORT_SYMBOL(bdi_init);

static void bdi_wb_init(struct bdi_writeback *wb, struct backing_dev_info *bdi)

{

memset(wb, 0, sizeof(*wb));

wb->bdi = bdi;

wb->last_old_flush = jiffies;

INIT_LIST_HEAD(&wb->b_dirty);

INIT_LIST_HEAD(&wb->b_io);

INIT_LIST_HEAD(&wb->b_more_io);

spin_lock_init(&wb->list_lock);

INIT_DELAYED_WORK(&wb->dwork, bdi_writeback_workfn);

}这里面fuse在注册bdi的时候,会启动一个线程,这个线程执行bdi_writeback_workfn函数,实际的write back操作由这个函数完成。下图给出bdi处理write back用到的主要数据结构及关系:

注意一下上面那个BDI_CAP_STRICTLIMIT属性,这个属性造成了这次的bug。如果把这个属性去掉,则性能问题消失。

周期性检查 write back

内核在内存紧张的时候是可以进行cache替换,把已经写回的page cache回收分配给其他用途,但这个page必须没有加上dirty或者wrieteback的标志位。为了防止进程短时间内制造大量脏页占用过量内存,linux会在linux每次写ratelimit_pages这么多的mmap内存以后,会调用以下函数,检查是否需要写回一部分脏页:

static long ratelimit_pages = 32;

void balance_dirty_pages_ratelimited(struct address_space *mapping)

{

struct backing_dev_info *bdi = mapping->backing_dev_info;

int ratelimit;

int *p;

...

if (unlikely(current->nr_dirtied >= ratelimit))

balance_dirty_pages(mapping, current->nr_dirtied);

}

EXPORT_SYMBOL(balance_dirty_pages_ratelimited);重点看一下current->nr_dirtied >= ratelimit这句,当当前写进程的脏页达到一个限制时,会调用balance_dirty_pages()函数,从而造成写进程阻塞,避免生成大量脏页占用大量内存。

balance_dirty_pages

balance_dirty_pages的逻辑有点复杂,只抽取重点部分看一下:

nr_reclaimable = global_page_state(NR_FILE_DIRTY) +

global_page_state(NR_UNSTABLE_NFS);

nr_dirty = nr_reclaimable + global_page_state(NR_WRITEBACK); // 当前总脏页数

global_dirty_limits(&background_thresh, &dirty_thresh); // 计算出后台正在写回的脏页以及总脏页的水位线

if (unlikely(strictlimit)) { // 设置了strict_limit则要把bdi设备的限制考虑在内

bdi_dirty_limits(bdi, dirty_thresh, background_thresh,

&bdi_dirty, &bdi_thresh, &bg_thresh);

dirty = bdi_dirty;

thresh = bdi_thresh;

} else { // 如果没有设置strict_limit则仅仅考虑全局的脏页

dirty = nr_dirty;

thresh = dirty_thresh;

bg_thresh = background_thresh;

}

/*

* Throttle it only when the background writeback cannot

* catch-up. This avoids (excessively) small writeouts

* when the bdi limits are ramping up in case of !strictlimit.

*

* In strictlimit case make decision based on the bdi counters

* and limits. Small writeouts when the bdi limits are ramping

* up are the price we consciously pay for strictlimit-ing.

*/

if (dirty <= dirty_freerun_ceiling(thresh, bg_thresh)) { // dirty <= 0.5 * (thresh + bg_thresh)

current->dirty_paused_when = now;

current->nr_dirtied = 0;

current->nr_dirtied_pause =

dirty_poll_interval(dirty, thresh);

break;

}

if (unlikely(!writeback_in_progress(bdi)))

bdi_start_background_writeback(bdi);

...

io_schedule_timeout(pause)这个判断条件里有没有strict_limit是不同的情况,之前看到fuse设置了strict_limit,那么检查脏页的时候就主要是受fuse本身的脏页限制,否则按照linux内核的限制。当满足限制的时候则调用bdi_start_background_writeback,这个函数会唤醒之前说的真正处理writeback的线程,执行bdi_writeback_workfn函数。

bdi_writeback_workfn 最终会调用fuse自定义的fuse_writepage函数把具体的写回任务交给fuse,然后fuse再把任务交给我们的存储服务器。

fuse_writepage

每一个脏页的写回,都会调用这个函数。主要关注一下page的状态变化。

static int fuse_writepage_locked(struct page *page)

{

struct address_space *mapping = page->mapping;

struct inode *inode = mapping->host;

struct fuse_conn *fc = get_fuse_conn(inode);

struct fuse_inode *fi = get_fuse_inode(inode);

struct fuse_req *req;

struct fuse_file *ff;

struct page *tmp_page;

set_page_writeback(page); // 取消page的dirty标记,打上writeback标记

req = fuse_request_alloc_nofs(1);

if (!req)

goto err;

req->background = 1; /* writeback always goes to bg_queue */

tmp_page = alloc_page(GFP_NOFS | __GFP_HIGHMEM); // fuse会向内核内存资源池申请一个4KB的新页

if (!tmp_page)

goto err_free;

spin_lock(&fc->lock);

BUG_ON(list_empty(&fi->write_files));

ff = list_entry(fi->write_files.next, struct fuse_file, write_entry);

req->ff = fuse_file_get(ff);

spin_unlock(&fc->lock);

fuse_write_fill(req, ff, page_offset(page), 0);

copy_highpage(tmp_page, page); // 将原page的内容拷贝到新page

req->misc.write.in.write_flags |= FUSE_WRITE_CACHE;

req->in.argpages = 1;

req->num_pages = 1;

req->pages[0] = tmp_page;

req->page_descs[0].offset = 0;

req->page_descs[0].length = PAGE_SIZE;

req->end = fuse_writepage_end; // 写回完成时异步回调函数

req->inode = inode;

inc_bdi_stat(mapping->backing_dev_info, BDI_WRITEBACK);

inc_zone_page_state(tmp_page, NR_WRITEBACK_TEMP);

spin_lock(&fc->lock);

list_add(&req->writepages_entry, &fi->writepages);

list_add_tail(&req->list, &fi->queued_writes);

fuse_flush_writepages(inode);

spin_unlock(&fc->lock);

end_page_writeback(page); // 取消旧page的writeback标记

return 0;

err_free:

fuse_request_free(req);

err:

end_page_writeback(page);

return -ENOMEM;

}-

set_page_writeback取消page的dirty标记,打上writeback标记。

-

fuse会向内核内存资源池申请一个4KB的新页。

-

copy_highpage将原page的内容拷贝到新page。

-

inc_bdi_stat(mapping->backing_dev_info, BDI_WRITEBACK)给bdi自身的写回计数器加1,inc_zone_page_state(tmp_page, NR_WRITEBACK_TEMP)会同时把该页的计数放到内核全局的NR_WRITEBACK_TEMP计数器里,正常情况应该是放到NR_WRITEBACK计数器里,否则如果不设置striclimit的话,内核是不会把该页统计为脏页的。

-

封装req数据,发送到字符设备,并唤醒用户态fuse进程。

-

取消旧page的writeback标记。

从上面函数可以看到fuse自己管理要写回的脏页,旧的由内核管理的脏页马上标记成已经写回成功了。这样内核的脏页计数就不会把该页和新页统计在里面,如果fuse的设置去掉了strict_limit标志以后,脏页就没有任何控制了。

目前解决办法

取消掉了strict_limit标记,但由于fuse在写回的时候选择异步的方式,把旧页直接清除掉writeback标记返回,真正的写回是用一个新页来完成,如果单纯取消strict_limit,那么fuse的脏页就既不由fuse控制又不受内核全局控制。目前的办法是把fuse_writepage_locked函数的inc_zone_page_state(tmp_page, NR_WRITEBACK_TEMP)改成inc_zone_page_state(tmp_page, NR_WRITEBACK)。