mvs一镜到底

mvs一镜到底

- 一、Use smart-unwrap to create uv coordinates and store your mesh as .obj file.

-

- 1. smart-unwrap

- 2. store your mesh as .obj file.

- 3. 关闭blender

- 二、grayscales rendering and viepoints' rotation and tranlation matrices.

-

- 1. 添加运动轨迹

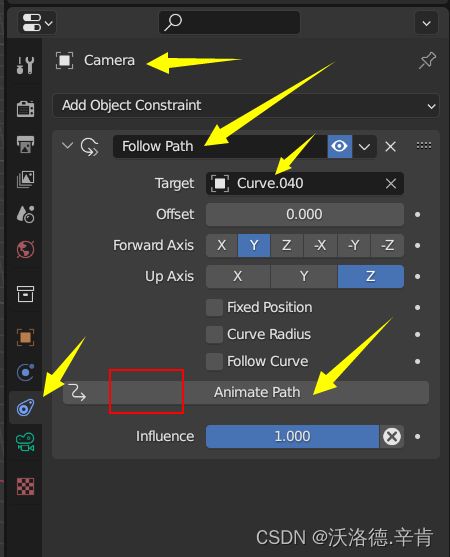

- 2. 添加相机约束

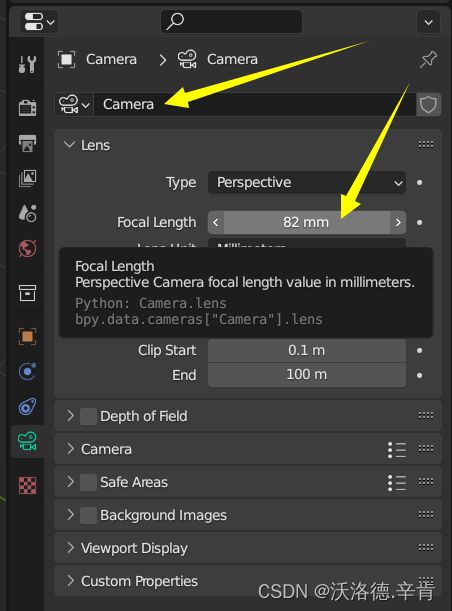

- 3. 调整相机视角至合适位置,并修改焦距

- 4. 修改帧范围,默认100帧转一圈

- 5. 添加材质

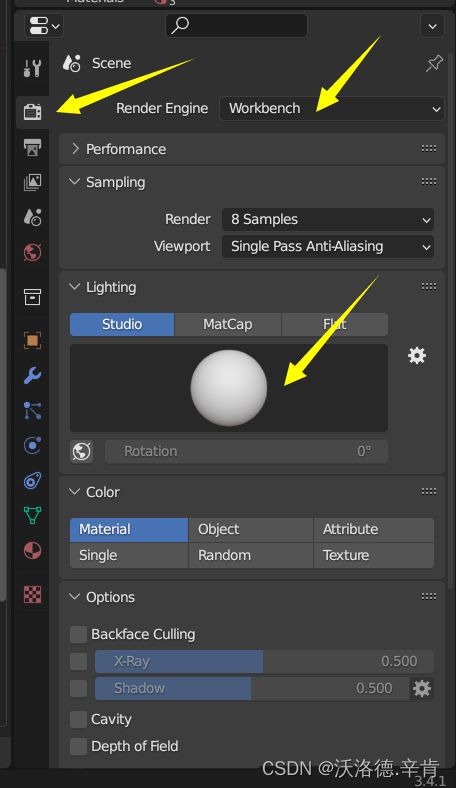

- 6. 设置渲染引擎

- 7. 修改对象名称,输出路径,输出格式

- 8. 保存.blender文件

- 三、Extract dictionaries and 2D-3D correspondances.

-

- 1.得到005.npy,pano2UV_proj里的_dict.json,visibility.npy

-

- 1.1 创建texture_mesh.py

- 1.2 创建文件夹pano2UV_proj,放在/scenes/005/下

- 1.3 运行texture_mesh.py

- 2.得到UV2pano,pano2UV里的RTm.npy

-

- 2.1 创建look_up_unrenderer.py

- 2.2 修改参数

- 2.3 将/images/RTm复制一份到/output/RTm

- 2.4 创建文件夹UV2pano,pano2UV。

- 2.5 运行look_up_unrenderer.py

一、Use smart-unwrap to create uv coordinates and store your mesh as .obj file.

1. smart-unwrap

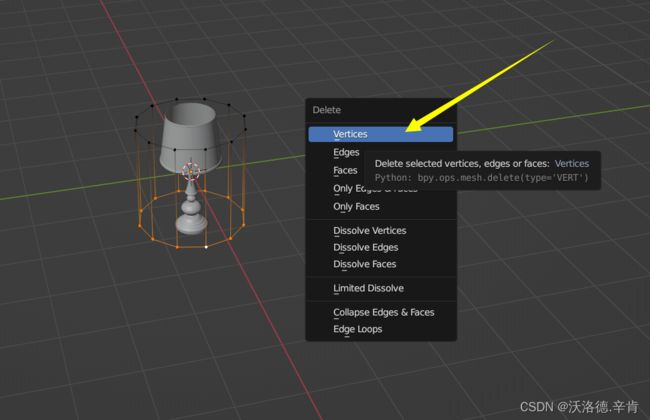

删除所有对象,包括灯光和相机,导入.obj。

Tab+A+U

2. store your mesh as .obj file.

3. 关闭blender

二、grayscales rendering and viepoints’ rotation and tranlation matrices.

1. 添加运动轨迹

2. 添加相机约束

3. 调整相机视角至合适位置,并修改焦距

4. 修改帧范围,默认100帧转一圈

5. 添加材质

6. 设置渲染引擎

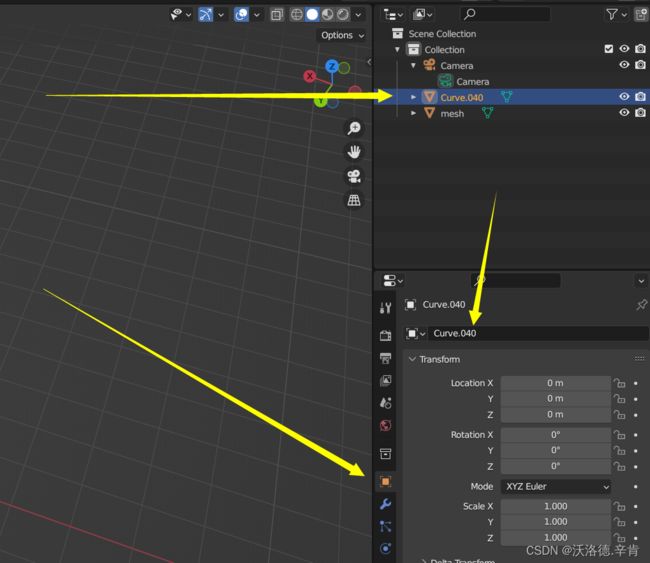

7. 修改对象名称,输出路径,输出格式

import bpy

import math

import os

from mathutils import Vector, Matrix

from math import radians

import bmesh

import datetime

import time

import numpy as np

import os

#from os import path

scenenumber='baseline_scene6_clean'

#basefolder=os.path.join(os.path.expanduser('~'), 'Documents', 'Zantis', scenenumber)

scene = bpy.data.scenes["Scene"]

#scene.camera.rotation_mode='YZX'

def length():

leg=0.00

p1=[0.0,0.0]

temp=0

ob = bpy.context.object # active object

for p in ob.data.splines.active.bezier_points:

p2=p1

p1=[p.co.x,p.co.y]

if(temp==0):

temp=1

continue

distance = math.sqrt( ((p1[0]-p2[0])**2)+((p1[1]-p2[1])**2) )

leg=leg+distance

return leg

item=0

multiply=40

camera = bpy.data.objects['Camera']

target=bpy.data.objects['mesh.001']

my_obj=[]

#my_obj.append("019")

#my_obj.append("021")

#my_obj.append("029")

#my_obj.append("030")

#my_obj.append("031")

#my_obj.append("032")

#my_obj.append("033")

#my_obj.append("034")

my_obj.append("040")

#my_obj.append("042")

t1 = time.time()

#import bpy

#for ob in bpy.context.selected_editable_objects:

# ob.active_material_index = 0

# for i in range(len(ob.material_slots)):

# bpy.ops.object.material_slot_remove({'object': ob})

for item in my_obj:

#break

# basefolder=os.path.join(os.path.expanduser('~'), 'Desktop', 'Urban_Enviroment_Texturing','Mesh_Texturing_Pipeline', 'Scenes', '005', 'images')

basefolder = os.path.join('E:\\', 'chromeDownload', 'mvs_test', 'scenes', '006', 'images')

t2 = time.time()

for ob in bpy.context.selected_objects:

ob.select_set(False)

curv='Curve.'+item

path = bpy.data.objects[curv]

camera.select_set(True)

path.select_set(True)

# bpy.context.view_layer.objects.active = path # parent

# bpy.ops.object.parent_set(type='FOLLOW') # follow path

# camera.location=path.matrix_world @ bpy.context.object.data.splines.active.bezier_points[0].co

# target.location=path.matrix_world @ bpy.context.object.data.splines.active.bezier_points[1].co

# direc=target.location-camera.location

# frames=(int)(length()*multiply)

# bpy.data.scenes["Scene"].frame_end=frames

# bpy.context.object.data.path_duration=frames

frame_is=0

# frames=frames+1

# print(frames)

count=0

for frame_is in range(scene.frame_start, scene.frame_end + 1):

# for frame_is in range(frames - 3):

t3 = time.time()

scene.frame_current=frame_is

#print(scene.frame_currents

# s=str(scene.objects['Camera'].matrix_world[0][3])+"_"+str(scene.objects['Camera'].matrix_world[1][3])+"_"+str(scene.objects['Camera'].matrix_world[2][3])+"_"

# s=s+str(direc[0])+"_"+str(direc[1])+"_"+str(direc[2])+".jpg"

s = str(scene.frame_current).zfill(5)+".jpg"

image_filepath=os.path.join(basefolder,s)

#print(s)

bpy.data.scenes['Scene'].render.filepath = image_filepath

# bpy.ops.render.render(write_still=True)

elapsedTime = time.time() - t3

print("Frame time passed {hours:d}:{minutes:d}:{seconds:d}"

.format(hours=int((elapsedTime / 60 ** 2) % (60 ** 2)), minutes=int((elapsedTime / 60) % (60)),

seconds=int(elapsedTime % 60)))

# print('path to create: ', os.path.join(os.path.expanduser('~'), 'Desktop', 'Data','Depth_data',scenenumber+'_'+item))

if not os.path.exists(os.path.join(os.path.expanduser('~'), basefolder)):

os.mkdir(os.path.join(os.path.expanduser('~'), basefolder))

if not os.path.exists(os.path.join(os.path.expanduser('~'), basefolder, 'RTm/')):

os.mkdir(os.path.join(os.path.expanduser('~'), basefolder, 'RTm/'))

# Set up rendering of depth map:

bpy.context.scene.use_nodes = True

tree = bpy.context.scene.node_tree

links = tree.links

# clear default nodes

for n in tree.nodes:

tree.nodes.remove(n)

# create input render layer node

rl = tree.nodes.new('CompositorNodeRLayers')

RGB2BW = tree.nodes.new(type="CompositorNodeRGBToBW")

links.new(rl.outputs[0], RGB2BW.inputs[0])

map = tree.nodes.new(type="CompositorNodeMapValue")

# Size is chosen kind of arbitrarily, try out until you're satisfied with resulting depth map.

map.size = [0.05]

map.use_min = True

map.min = [0]

map.use_max = True

map.max = [1]

links.new(rl.outputs[2], map.inputs[0])

mix_multi = tree.nodes.new(type="CompositorNodeMixRGB")

# Size is chosen kind of arbitrarily, try out until you're satisfied with resulting depth map.

mix_multi.blend_type = 'MULTIPLY'

mix_multi.inputs[2].default_value[0] = 0.5

mix_multi.inputs[2].default_value[1] = 0.5

mix_multi.inputs[2].default_value[2] = 0.5

# mix_multi.inputs[2].default = [0.5 , 0.5, 0.5, 1.0]

links.new(rl.outputs[3], mix_multi.inputs[1])

mix_multi2 = tree.nodes.new(type="CompositorNodeMixRGB")

# Size is chosen kind of arbitrarily, try out until you're satisfied with resulting depth map.

mix_multi2.blend_type = 'ADD'

mix_multi2.inputs[2].default_value[0] = 0.5

mix_multi2.inputs[2].default_value[1] = 0.5

mix_multi2.inputs[2].default_value[2] = 0.5

links.new(mix_multi.outputs[0], mix_multi2.inputs[1])

invert = tree.nodes.new(type="CompositorNodeInvert")

links.new(map.outputs[0], invert.inputs[1])

# The viewer can come in handy for inspecting the results in the GUI

depthViewer = tree.nodes.new(type="CompositorNodeViewer")

links.new(invert.outputs[0], depthViewer.inputs[0])

# Use alpha from input.

links.new(rl.outputs[1], depthViewer.inputs[1])

# Normal map

# The viewer can come in handy for inspecting the results in the GUI

depthViewer2 = tree.nodes.new(type="CompositorNodeViewer")

links.new(rl.outputs[1], depthViewer2.inputs[1])

links.new(mix_multi2.outputs[0], depthViewer2.inputs[0])

# fileOutput = tree.nodes.new(type="CompositorNodeOutputFile")

# fileOutput.base_path = os.path.join(os.path.expanduser('~'), 'Desktop', 'Data','Depth_data',scenenumber+'_'+item, 'depth')

## fileOutput.file_slots[0].path = str(scene.frame_current).zfill(5)

# links.new(invert.outputs[0], fileOutput.inputs[0])

#

# fileOutput2 = tree.nodes.new(type="CompositorNodeOutputFile")

# fileOutput2.base_path = os.path.join(os.path.expanduser('~'), 'Desktop', 'Data','Depth_data',scenenumber+'_'+item,'normal')

## fileOutput2.file_slots[0].path = str(scene.frame_current).zfill(5)

# links.new(mix_multi2.outputs[0], fileOutput2.inputs[0])

#

# fileOutput3 = tree.nodes.new(type="CompositorNodeOutputFile")

# fileOutput3.base_path = os.path.join(os.path.expanduser('~'), 'Desktop', 'Data','Depth_data',scenenumber+'_'+item,'panoramas_BW')

## fileOutput3.file_slots[0].path = str(scene.frame_current).zfill(5)

# links.new(RGB2BW.outputs[0], fileOutput3.inputs[0])

bpy.ops.render.render(write_still=True)

location, rotation = scene.objects['Camera'].matrix_world.decompose()[0:2]

R_world2bcam = rotation.to_matrix().transposed()

T_world2bcam = -1*R_world2bcam @ location

Camera_RT_matrix = Matrix((

R_world2bcam[0][:] + (T_world2bcam[0],),

R_world2bcam[1][:] + (T_world2bcam[1],),

R_world2bcam[2][:] + (T_world2bcam[2],),

[0,0,0,1],))

np.savetxt(os.path.join(os.path.expanduser('~'), basefolder, 'RTm')+'/'+str(frame_is).zfill(6)+"_RTm.txt",np.array(Camera_RT_matrix))

# break

scene.frame_current=0

path.select_set(False)

bpy.ops.object.parent_clear(type='CLEAR')

# camera.select_set(False)

elapsedTime = time.time() - t1

print("Total time passed {hours:d}:{minutes:d}:{seconds:d}"

.format(hours=int((elapsedTime / 60 ** 2) % (60 ** 2)), minutes=int((elapsedTime / 60) % (60)),

seconds=int(elapsedTime % 60)))

print("Finished")

8. 保存.blender文件

三、Extract dictionaries and 2D-3D correspondances.

1.得到005.npy,pano2UV_proj里的_dict.json,visibility.npy

1.1 创建texture_mesh.py

#!/usr/bin/env python

import sys, os

# Add the igl library to the modules search path

sys.path.insert(0, os.getcwd() + "/../libigl/python/")

import pyigl as igl

import argparse

import math

from tqdm import tqdm

import multiprocessing

import ctypes

import numpy as np

import cv2

import create_dict as dict_

parser = argparse.ArgumentParser()

parser.add_argument('--scene_path', type=str,

default="",

help="Location of obj filee")

parser.add_argument('--start', type=int, default=0, help="UV mapping")

parser.add_argument('--offset', type=int, default=200, help="UV mapping")

parser.add_argument('--visual', type=bool, default=False, help="Display map")

parser.add_argument('--fill_mesh', type=bool, default=True)

parser.add_argument('--create_dict', dest='create_dict', action='store_true')

parser.add_argument('--panoram_size', type=tuple, default=(512, 256))

parser.add_argument('--UV_size', type=tuple, default=(2048, 2048))

parser.add_argument('--scene_number', type=int, default=4)

parser.add_argument('--distance_threshold', type=int, default=20)

parser.add_argument('--processes_number', type=int, default=10)

parser.add_argument('--UV_output_path', type=str, default=10)

parser.add_argument('--step', type=int, default=4)

parser.add_argument('--no_dis_filter', type=bool, default=False)

parser.add_argument('--mesh_name', type=str, default='mesh')

# parser.add_argument('--create_3D_mapping', type=bool, default=False)

parser.add_argument('--create_3D_mapping', dest='create_3D_mapping', action='store_true')

parser.add_argument('--feature', dest='feature', action='store_true')

pars = parser.parse_args()

# 从指定目录加载相机信息,包括相机的旋转和平移矩阵。

def load_camera_info_np(directory, start=pars.start, offset=pars.offset):

camera_RT_matrices = []

camera_locations = []

count = 0

for filename in sorted(os.listdir(directory)):

if count < start + offset and count >= start and (count % pars.step == 0):

RTmatrix = np.loadtxt(os.path.join(directory, filename))

print('RTm: ', filename)

# Camera rotation and translation matrix

camera_RT_matrices.append(np.loadtxt(os.path.join(directory, filename)))

# World coordin<3 location

camera_locations.append(np.dot(-1 * RTmatrix[:, :-1].T, RTmatrix[:, -1]))

count += 1

print('Camera location list: ', len(camera_locations))

return camera_RT_matrices, camera_locations

# 从指定目录加载全景图像。

def load_panoramas(directory, start=pars.start, offset=pars.offset):

im_x, im_y = pars.panoram_size

A_source = igl.eigen.MatrixXuc(im_x, im_y)

R_source = igl.eigen.MatrixXuc(im_x, im_y)

G_source = igl.eigen.MatrixXuc(im_x, im_y)

B_source = igl.eigen.MatrixXuc(im_x, im_y)

images_R_source = []

images_G_source = []

images_B_source = []

images_A_source = []

count