k8s: kubeadm

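Use kubeadm to quickly set up a k8s cluster:

A binary installation suits large clusters with more than 50 hosts.

kubeadm is a better fit for the business clusters of small and medium-sized enterprises.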

master: 192.168.233.91 docker kubelet kubeadm kubectl flannel

node1: 192.168.233.92 docker kubelet kubeadm kubectl flannel

node2: 192.168.233.93 docker kubelet kubeadm kubectl flannel

harbor node: 192.168.233.94 docker docker-compose harbor

All nodes:

systemctl stop firewalld

setenforce 0

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

swapoff -a
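`swapoff -a` only disables swap until the next reboot. A minimal sketch of making the change persistent, assuming swap is declared in `/etc/fstab` and GNU `sed` is available. The helper name `comment_out_swap` is hypothetical, and the demo runs against a temporary file rather than the real one:

```shell
#!/usr/bin/env bash
# comment_out_swap FILE: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  # Match uncommented lines whose third field is "swap" and prefix them with '#'.
  sed -i -E 's/^([^#][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a throwaway file (the sample entries are illustrative).
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root /     xfs  defaults 0 0' \
  '/dev/mapper/centos-swap swap  swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
```

On a real host the call would be `comment_out_swap /etc/fstab`, run as root.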

First three hosts (master, node1, node2):

for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^.*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

vim /etc/sysctl.d/k8s.conf

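#Enable bridge mode:

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

#Pass bridged traffic to the iptables chains so that address mapping works

#Disable IPv6 traffic (optional)

net.ipv6.conf.all.disable_ipv6=1

#Set this according to the actual needs of your environment

net.ipv4.ip_forward=1

:wq!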

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

sysctl --system
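The `vim` edit above can also be done non-interactively. A sketch, assuming the same four settings. The function name `write_k8s_sysctl` and the temporary target path are illustrative; on a real node the target would be `/etc/sysctl.d/k8s.conf`, followed by `sysctl --system`:

```shell
#!/usr/bin/env bash
# write_k8s_sysctl FILE: write the kernel settings used in this guide to FILE.
write_k8s_sysctl() {
  cat > "$1" <<'EOF'
# Pass bridged traffic to the iptables chains
net.bridge.bridge-nf-call-ip6tables=1
net.bridge.bridge-nf-call-iptables=1
# Disable IPv6 traffic (optional)
net.ipv6.conf.all.disable_ipv6=1
# Enable IPv4 forwarding
net.ipv4.ip_forward=1
EOF
}

# Demo: write to a temporary file and list the keys that were set.
conf=$(mktemp)
write_k8s_sysctl "$conf"
grep -v '^#' "$conf" | cut -d= -f1
```

The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so `sysctl --system` may report them as unknown on a host where it is not.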

master1:

hostnamectl set-hostname master01

node1:

hostnamectl set-hostname node01

node2:

hostnamectl set-hostname node02

harbor:

hostnamectl set-hostname harror

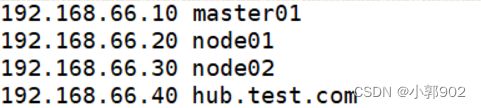

vim /etc/hosts

192.168.66.10 master01

192.168.66.20 node01

192.168.66.30 node02

192.168.66.40 harror

All nodes:

yum install ntpdate -y

ntpdate ntp.aliyun.com

date

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service

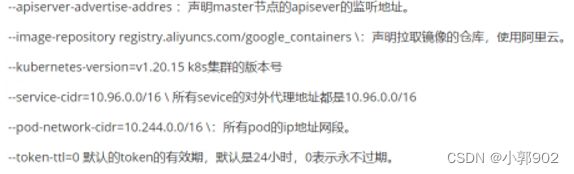

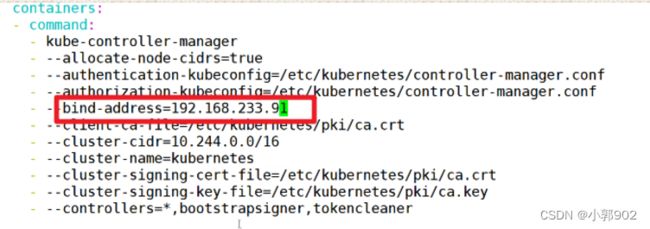

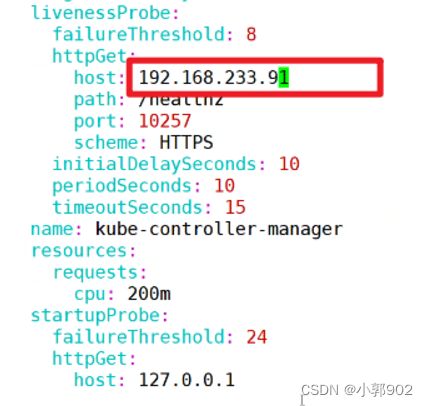

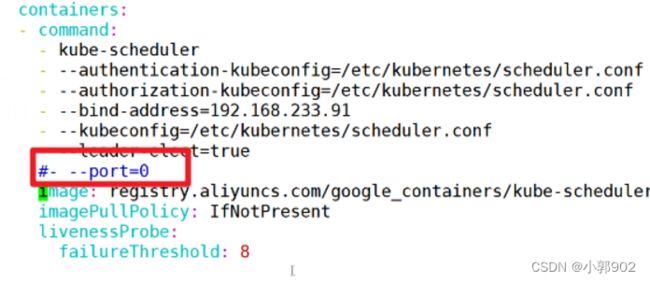

mkdir -p /etc/docker

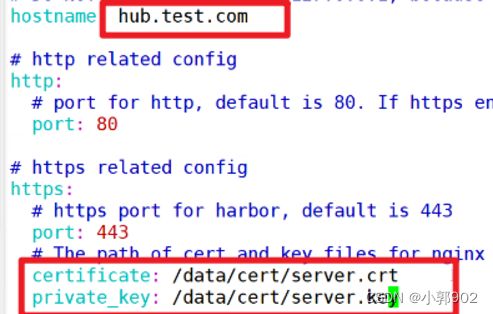

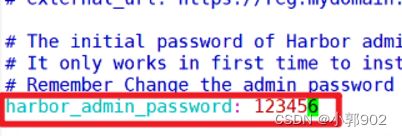

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://t7pjr1xu.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  }
}
EOF

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

master, node1, node2:

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15

master:

kubeadm config images list --kubernetes-version 1.20.15

pause: a special container. The pause container creates a network namespace on the node, and the other containers in the pod join that namespace. The containers in a pod may be written in different languages and for different architectures; sharing one network namespace lets them communicate and coordinate the resources of that namespace (this is what makes the containers in a pod compatible with each other).

The k8s components installed by kubeadm all run as pods in the kube-system namespace.

kubelet, the node manager, runs as a system service and is controlled with systemctl.

master01:

kubeadm init \
--apiserver-advertise-address=192.168.66.10 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version=v1.20.15 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=10.244.0.0/16 \
--token-ttl=0

Be sure to copy the join command printed at the end of the output:

node1, node2:

kubeadm join 192.168.66.10:6443 --token ub8djv.yk7umnodmp2h8yuh \
--discovery-token-ca-cert-hash sha256:d7b5bd1da9d595b72863423ebeeb9b48dff9a2a38446ac15348f1b1b18a273e9

master:

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

systemctl restart kubelet

kubectl edit cm kube-proxy -n=kube-system

Search for mode

systemctl restart kubelet

kubectl get node

kubectl get cs

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

vim /etc/kubernetes/manifests/kube-scheduler.yaml
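On v1.20, `kubectl get cs` commonly reports the scheduler and controller-manager as unhealthy because both static-pod manifests pass `--port=0`. The notes do not say what the two `vim` edits change, so the following is only a sketch under the assumption that they comment that flag out. It uses GNU `sed`; the helper name `disable_port_zero` is hypothetical and the demo works on a temporary file:

```shell
#!/usr/bin/env bash
# disable_port_zero FILE: comment out the "- --port=0" argument, keeping its indentation.
disable_port_zero() {
  sed -i -E 's/^([[:space:]]*)(- --port=0)[[:space:]]*$/\1# \2/' "$1"
}

# Demo on a fragment shaped like a static-pod manifest (illustrative content).
m=$(mktemp)
cat > "$m" <<'EOF'
    - --leader-elect=true
    - --port=0
EOF
disable_port_zero "$m"
cat "$m"
```

On the master, the same call would be applied to both manifest files before the kubelet is restarted.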
systemctl restart kubelet

kubectl get cs

kubectl get pods -n kube-system

cd /opt

Upload flannel.tar, cni-plugins-linux-amd64-v0.8.6.tgz, and kube-flannel.yml to this directory.

docker load -i flannel.tar

mv /opt/cni /opt/cni_bak

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

On the two worker nodes:

Upload flannel.tar and cni-plugins-linux-amd64-v0.8.6.tgz.

docker load -i flannel.tar

mv /opt/cni /opt/cni_bak

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

master:

kubectl apply -f kube-flannel.yml

kubectl get node

docker load -i flannel.tar

mv /opt/cni /opt/cni_bak

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

kubectl get node

kubectl get pods

kubectl get pods -o wide -n kube-system

cd /etc/kubernetes/pki

openssl x509 -in /etc/kubernetes/pki/ca.crt -noout -text | grep Not

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not

cd /opt

Upload update-kubeadm-cert.sh to this directory.

chmod 777 update-kubeadm-cert.sh

./update-kubeadm-cert.sh all

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not

kubectl get nodes

kubectl get pods -n kube-system

kubectl get cs

vim /etc/profile

source <(kubectl completion bash)

:wq!
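The completion line can also be added without opening an editor. A small sketch, assuming the goal is simply to have that one line in `/etc/profile` exactly once. The helper name `append_once` is hypothetical, and the demo uses a temporary file in place of `/etc/profile`:

```shell
#!/usr/bin/env bash
# append_once LINE FILE: append LINE to FILE only if that exact line is not already present.
append_once() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo against a temporary file standing in for /etc/profile.
profile=$(mktemp)
append_once 'source <(kubectl completion bash)' "$profile"
append_once 'source <(kubectl completion bash)' "$profile"   # no duplicate is added
cat "$profile"
```

Running it twice leaves a single copy of the line, so the step is safe to repeat when a node is rebuilt.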
source /etc/profile

kubectl describe pods

kubectl get pod -n kube-system

At this point the master, although it is the control-plane host, is also a node of the cluster.

Verification:

kubectl create deployment nginx --image=nginx --replicas=3

kubectl get pods

kubectl get pods -o wide

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get svc

curl 192.168.66.10:30923

harbor:

cd /opt

Upload the docker-compose binary and the harbor offline installer to this directory.

mv docker-compose-linux-x86_64 docker-compose

mv docker-compose /usr/local/bin

chmod 777 /usr/local/bin/docker-compose

docker-compose -v

tar -xf harbor-offline-installer-v2.8.1.tgz -C /usr/local

cd /usr/local/harbor/

cp harbor.yml.tmpl harbor.yml

vim /usr/local/harbor/harbor.yml

mkdir -p /data/cert

cd /data/cert

openssl genrsa -des3 -out server.key 2048

Enter the passphrase 123456 twice.

openssl req -new -key server.key -out server.csr

Enter the passphrase 123456, answer the prompts with Cn, JS, NJ, TEST, TEST, hub.kgc.com, then press Enter for the rest.

cp server.key server.key.org

openssl rsa -in server.key.org -out server.key

Enter the passphrase 123456.

openssl x509 -req -days 1000 -in server.csr -signkey server.key -out server.crt

chmod +x /data/cert/*

cd /usr/local/harbor/

./prepare

./install.sh

Log in at https://192.168.66.40 with user admin and password 123456.

node1:

mkdir -p /etc/docker/certs.d/hub.test.com/

k8s4 (the harbor node):

scp -r /data/ [email protected]:/

scp -r /data/ [email protected]:/

node1:

cd /data/cert

cp server.crt server.csr server.key /etc/docker/certs.d/hub.test.com/

vim /etc/hosts

192.168.66.40 hub.test.com

vim /lib/systemd/system/docker.service

Append to the ExecStart line:

--insecure-registry=hub.test.com

systemctl daemon-reload

systemctl restart docker

docker login -u admin -p 123456 https://hub.test.com

docker images

Create a project (k8s) in Harbor.

docker tag nginx:latest hub.test.com/k8s/nginx:v1

docker images

docker push hub.test.com/k8s/nginx:v1

node2:

mkdir -p /etc/docker/certs.d/hub.test.com/

cd /data/cert

cp server.crt server.csr server.key /etc/docker/certs.d/hub.test.com/

vim /etc/hosts

192.168.66.40 hub.test.com

vim /lib/systemd/system/docker.service

Append to the ExecStart line:

--insecure-registry=hub.test.com

systemctl daemon-reload

systemctl restart docker

docker login -u admin -p 123456 https://hub.test.com

docker images

docker tag nginx:latest hub.test.com/k8s/nginx:v2

docker images

docker push hub.test.com/k8s/nginx:v2

master1:

kubectl create deployment nginx1 --image=hub.test.com/k8s/nginx:v1 --port=80 --replicas=3

kubectl get pods

Remember to make the k8s project public in Harbor.

master1:

cd /opt

Upload recommended.yaml to this directory.

kubectl apply -f recommended.yaml

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl get pods -n kubernetes-dashboard

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Open https://192.168.66.30:30001
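The `kubectl describe secrets` command nests a second `kubectl` call whose output is filtered by `awk` to find the name of the dashboard-admin token secret. A sketch of just that filter, run against made-up sample output (the secret names and the helper `pick_secret` are hypothetical; on v1.20 the real names carry a random suffix):

```shell
#!/usr/bin/env bash
# pick_secret PATTERN: read `kubectl get secret` style output on stdin and
# print the first column of every row that contains PATTERN.
pick_secret() {
  awk -v pat="$1" '$0 ~ pat { print $1 }'
}

# Demo with illustrative output instead of a live cluster.
sample='NAME                          TYPE                                  DATA   AGE
dashboard-admin-token-abcde   kubernetes.io/service-account-token   3      1m
default-token-fghij           kubernetes.io/service-account-token   3      2d'

printf '%s\n' "$sample" | pick_secret dashboard-admin
```

The token shown by `kubectl describe` for that secret is what gets pasted into the dashboard login page.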
