Hive Optimization

I. Execution Plan (Explain)

- Basic syntax

EXPLAIN [EXTENDED | DEPENDENCY | AUTHORIZATION] query

- Demo:

hive (dyhtest)> explain select * from emp;

OK

Explain

STAGE DEPENDENCIES:

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-0

**Fetch Operator**

limit: -1 --- -1 means no limit on the number of rows

Processor Tree: --- operator tree of the plan

TableScan --- table scan

alias: emp --- table being scanned

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

Select Operator --- projection

expressions: empno (type: int), ename (type: string), job (type: string), mgr (type: int), hiredate (type: string), sal (type: double), comm (type: double), deptno (type: int) --- selected columns and their types

outputColumnNames: _col0, _col1, _col2, _col3, _col4, _col5, _col6, _col7 --- output columns

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

ListSink

Time taken: 2.817 seconds, Fetched: 17 row(s)

A query that generates an MR job:

hive (dyhtest)> explain select deptno, avg(sal) avg_sal from emp group by deptno;

OK

Explain

STAGE DEPENDENCIES: --- stage dependencies

Stage-1 is a root stage --- root stage (stage 1)

Stage-0 depends on stages: Stage-1 --- stage 0 depends on stage 1

STAGE PLANS: --- stage plans

Stage: Stage-1 --- stage 1

Map Reduce --- stage 1 is a MapReduce job

Map Operator Tree: --- operator tree on the map side

TableScan --- table scan

alias: emp --- table name

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

Select Operator --- projection: salary and department number

expressions: sal (type: double), deptno (type: int)

outputColumnNames: sal, deptno --- output columns

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

Group By Operator --- grouping

aggregations: sum(sal), count(sal) --- sum of salaries and count of salaries

keys: deptno (type: int) --- grouping column

mode: hash --- mode: hash

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

Reduce Output Operator --- map-side output that is sent to the reducers

key expressions: _col0 (type: int) --- key column and its type

sort order: +

Map-reduce partition columns: _col0 (type: int)

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

value expressions: _col1 (type: double), _col2 (type: bigint)

Execution mode: vectorized

Reduce Operator Tree:

Group By Operator

aggregations: sum(VALUE._col0), count(VALUE._col1)

keys: KEY._col0 (type: int)

mode: mergepartial

outputColumnNames: _col0, _col1, _col2

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

Select Operator

expressions: _col0 (type: int), (_col1 / _col2) (type: double)

outputColumnNames: _col0, _col1

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

File Output Operator

compressed: false

Statistics: Num rows: 1 Data size: 6600 Basic stats: COMPLETE Column stats: NONE

table:

input format: org.apache.hadoop.mapred.SequenceFileInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

Stage: Stage-0 --- stage 0

Fetch Operator --- fetch operation

limit: -1 --- output all rows

Processor Tree:

ListSink

Time taken: 1.422 seconds, Fetched: 53 row(s)
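The other EXPLAIN variants from the syntax line can be tried on the same query. A minimal sketch, assuming the emp table from the demos above; the output is omitted because it depends on the Hive version and execution engine:

```sql
-- EXTENDED adds lower-level detail to the plan (for example file paths and table properties).
explain extended
select deptno, avg(sal) avg_sal from emp group by deptno;

-- DEPENDENCY reports the tables and partitions the query reads instead of the operator tree.
explain dependency
select deptno, avg(sal) avg_sal from emp group by deptno;
```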

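II. Fetch

- For some queries Hive does not need MapReduce at all. For example, for SELECT * FROM employees; Hive can simply read the files under the storage directory of the employees table and print the result to the console.

- In hive-default.xml.template, hive.fetch.task.conversion defaults to more (older Hive versions defaulted to minimal). With more, full-table selects, selects on specific columns, and LIMIT queries all skip MapReduce.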

hive.fetch.task.conversion

more

Expects one of [none, minimal, more].

Some select queries can be converted to single FETCH task minimizing latency.

Currently the query should be single sourced not having any subquery and should not have any aggregations or distincts (which incurs RS), lateral views and joins.

0. none : disable hive.fetch.task.conversion --- conversion disabled, every query runs MR

1. minimal : SELECT STAR, FILTER on partition columns, LIMIT only --- select *, filters on partition columns, and limit do not run MR

2. more : SELECT, FILTER, LIMIT only (support TABLESAMPLE and virtual columns) --- select, filter, limit, sampling, and virtual columns do not run MR; this is the default

Check the current setting:

hive (dyhtest)> set hive.fetch.task.conversion;

hive.fetch.task.conversion=more
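The effect of this setting can be observed by explaining the same query under two values. A small sketch, assuming the emp table from the Explain demo and the MapReduce engine; the plan shapes noted in the comments are what the property description above leads one to expect, not captured output:

```sql
-- With conversion disabled, even a simple column select is planned as a job.
set hive.fetch.task.conversion=none;
explain select ename from emp limit 3;   -- expect a Map Reduce stage followed by a Fetch stage

-- With the default value, the same query should collapse to a single fetch task.
set hive.fetch.task.conversion=more;
explain select ename from emp limit 3;   -- expect only Stage-0 with a Fetch Operator
```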

III. Table Optimization

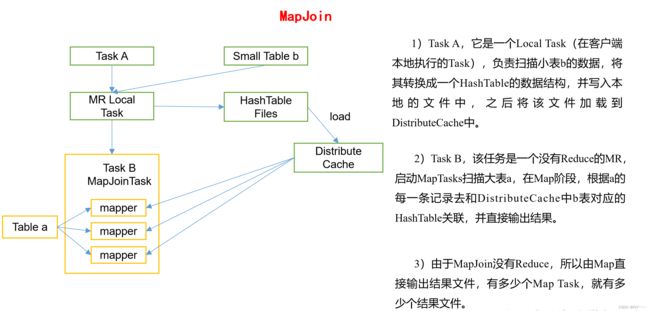

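1. Joining a Small Table with a Large Table (MapJoin)

- Put the table whose keys are relatively scattered and whose data volume is small on the left side of the join; this effectively reduces the chance of out-of-memory errors.

- Going one step further, a map join can load a small dimension table (fewer than 1000 records) into memory first and complete the join on the map side.

- Testing shows that newer Hive versions already optimize both small-table-JOIN-large-table and large-table-JOIN-small-table, so placing the small table on the left or on the right no longer makes a noticeable difference.

Parameters for enabling MapJoin:

Let Hive choose MapJoin automatically: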

set hive.auto.convert.join = true;

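--- check the parameter

hive (dyhtest)> set hive.auto.convert.join;

hive.auto.convert.join=true --- enabled by default (the default value is true)

Threshold separating small tables from large ones (by default, tables under about 25 MB count as small):

set hive.mapjoin.smalltable.filesize = 25000000;

--- check the threshold

hive (dyhtest)> set hive.mapjoin.smalltable.filesize;

hive.mapjoin.smalltable.filesize=25000000 --- default is about 25 MB

Demo:

--- create the large table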

hive (dyhtest)> create table bigtable(id bigint, t bigint, uid string, keyword string, url_rank int, click_num int, click_url string) row format delimited fields terminated by '\t';

OK

Time taken: 3.133 seconds

--- create the small table

hive (dyhtest)> create table smalltable(id bigint, t bigint, uid string, keyword string, url_rank int, click_num int, click_url string) row format delimited fields terminated by '\t';

OK

Time taken: 0.175 seconds

--- create the join result table

hive (dyhtest)> create table jointable(id bigint, t bigint, uid string, keyword string, url_rank int, click_num int, click_url string) row format delimited fields terminated by '\t';

OK

Time taken: 0.096 seconds

--- small table JOIN large table

insert overwrite table jointable

select b.id, b.t, b.uid, b.keyword, b.url_rank, b.click_num, b.click_url

from smalltable s

join bigtable b

on b.id = s.id;

Time taken: 35.921 seconds

--- large table JOIN small table

select b.id, b.t, b.uid, b.keyword, b.url_rank, b.click_num, b.click_url

from bigtable b

join smalltable s

on s.id = b.id;

Load data into bigtable and smalltable first; the two statements above can then be run and timed.
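To check whether the automatic conversion actually applies, the plan can be inspected before running the join. A sketch assuming the bigtable and smalltable tables created above, smalltable being under the size threshold, and the MapReduce engine; the operator names in the comment are what such a plan is expected to show, not captured output:

```sql
set hive.auto.convert.join = true;

-- When the small side fits under hive.mapjoin.smalltable.filesize, the plan is
-- expected to contain a local work stage and a "Map Join Operator" with no
-- Reduce Operator Tree for the join itself.
explain
select b.id, b.keyword
from bigtable b
join smalltable s
on s.id = b.id;
```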

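2. Joining Two Large Tables

a. Filtering null keys:

- A join sometimes times out because certain keys carry too much data: rows with the same key are all sent to the same reducer, which then runs out of memory. Analyze these abnormal keys; in many cases the rows behind them are bad data and should be filtered out in the SQL statement.

Demo:

--- create a table containing null ids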

hive (dyhtest)> create table nullidtable(id bigint, t bigint, uid string, keyword string, url_rank int, click_num int, click_url string) row format delimited fields terminated by '\t';

OK

Time taken: 0.171 seconds

--- load the data

--- test without filtering out null ids

insert overwrite table jointable select n.* from nullidtable n

left join bigtable o on n.id = o.id;

--- test with null ids filtered out

insert overwrite table jointable select n.* from (select * from nullidtable where id is not null ) n left join bigtable o on n.id = o.id;

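b. Converting null keys:

Sometimes a null key corresponds to many rows that are not bad data and must appear in the join result. In that case, assign a random value to the null keys of the left table so that those rows are spread randomly and evenly across different reducers.

Without randomly distributing the null values:

Set the number of reducers to 5

---- without random values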

hive (dyhtest)> set mapreduce.job.reduces = 5;

--- join the two tables

insert overwrite table jointable

select n.* from nullidtable n left join bigtable b on n.id = b.id;

--- with random values

--- join the two tables

insert overwrite table jointable

select n.* from nullidtable n full join bigtable o on

nvl(n.id,rand()) = o.id; --- the join condition substitutes a random value for null ids

The resource usage of the MapReduce jobs can be compared in the YARN UI.
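A common variant of the same idea replaces null keys with a random string, so the substituted value can never collide with a real numeric id. This is a sketch rather than the author's method: the 'null_' prefix is a hypothetical marker, and a left join is used so that unmatched rows from nullidtable are kept.

```sql
set mapreduce.job.reduces = 5;

insert overwrite table jointable
select n.*
from nullidtable n
left join bigtable o
  -- Null ids become distinct strings such as 'null_0.73...', which spread over
  -- the reducers and match nothing in bigtable; real ids are compared as strings.
  on (case when n.id is null
           then concat('null_', cast(rand() as string))
           else cast(n.id as string)
      end) = cast(o.id as string);
```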

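c. SMB (Sort Merge Bucket join):

A bucket join splits both tables into the same number of buckets and then joins the corresponding buckets with each other. As a rule of thumb under full load, the bucket count can be chosen as the number of CPUs minus 2 (MapReduce itself needs at least one CPU).

On Windows, the CPU count can be checked via Task Manager -> Performance -> CPU -> Open Resource Monitor -> CPU -> Views

--- create bucketed table 1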

hive (dyhtest)> create table bigtable_buck1(

> id bigint,

> t bigint,

> uid string,

> keyword string,

> url_rank int,

> click_num int,

> click_url string)

> clustered by(id)

> sorted by(id)

> into 6 buckets

> row format delimited fields terminated by '\t';

OK

Time taken: 0.215 seconds

insert into bigtable_buck1 select * from bigtable;

--- create bucketed table 2

hive (dyhtest)> create table bigtable_buck2(

> id bigint,

> t bigint,

> uid string,

> keyword string,

> url_rank int,

> click_num int,

> click_url string)

> clustered by(id)

> sorted by(id)

> into 6 buckets

> row format delimited fields terminated by '\t';

OK

Time taken: 0.113 seconds

insert into bigtable_buck2 select * from bigtable;

--- set the parameters

set hive.optimize.bucketmapjoin = true; --- enable bucket map join

set hive.optimize.bucketmapjoin.sortedmerge = true; --- use the sort-merge variant

set hive.input.format=org.apache.hadoop.hive.ql.io.BucketizedHiveInputFormat; --- input format that reads bucket by bucket

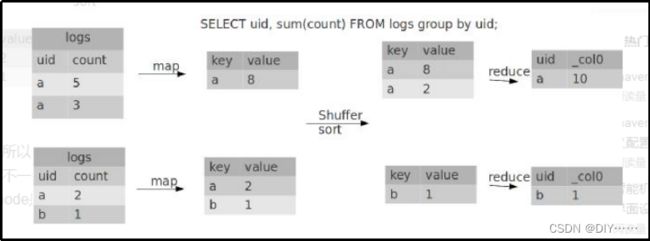
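With the two bucketed tables and the parameters above in place, the join itself can be written as follows. A sketch under the assumption that both tables were populated as shown; hive.auto.convert.sortmerge.join is an additional Hive setting not mentioned in the text, included here because automatic conversion to a sort-merge join may depend on it in some versions.

```sql
-- Assumption: lets Hive convert eligible bucketed joins to sort-merge joins automatically.
set hive.auto.convert.sortmerge.join = true;

-- Both sides are bucketed and sorted on id into 6 buckets, so bucket i of one
-- table only needs to be merged with bucket i of the other.
insert overwrite table jointable
select b.id, b.t, b.uid, b.keyword, b.url_rank, b.click_num, b.click_url
from bigtable_buck1 s
join bigtable_buck2 b
on b.id = s.id;
```

Running explain on the select part is one way to confirm which join operator was chosen.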

3. Group By

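By default, all rows with the same key are sent from the map phase to a single reducer, so when one key has too much data the job becomes skewed.

Not every aggregation has to be completed on the reduce side. Many aggregations can be partially computed on the map side first, with the reduce side producing the final result.

a. Whether to aggregate on the map side; the default is true, so it does not need to be enabled manually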

hive (dyhtest)> set hive.map.aggr;

hive.map.aggr=true

b. Number of rows used for the map-side aggregation check; it does not need to be changed manually

hive (dyhtest)> set hive.groupby.mapaggr.checkinterval;

hive.groupby.mapaggr.checkinterval=100000

c. Load balancing when the data is skewed (default false); must be enabled manually

hive (dyhtest)> set hive.groupby.skewindata;

hive.groupby.skewindata=false --- default is false

--- enable manually

hive (dyhtest)> set hive.groupby.skewindata=true;

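- When this option is set to true, the generated query plan has two MR jobs.

- In the first MR job, the map output is distributed randomly across the reducers. Each reducer performs a partial aggregation and writes its result. Rows with the same GROUP BY key may therefore land on different reducers, which is what balances the load.

- The second MR job takes the pre-aggregated results and distributes them to the reducers by GROUP BY key (this guarantees that the same GROUP BY key goes to the same reducer) and completes the final aggregation.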
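The two-job idea described above can also be written by hand, which makes the mechanism easier to see. This is an illustrative emulation, not what Hive generates internally: the salt column and the choice of 10 salt values are assumptions, and the query counts rows per id in the bigtable table from the earlier demos.

```sql
-- Inner query: attach a random salt (0..9) so one hot key is split into up to 10 groups.
-- Middle query: partial aggregation per (id, salt), spread across reducers.
-- Outer query: merge the partial counts per id into the final result.
select id, sum(cnt) as cnt
from (
  select id, salt, count(*) as cnt
  from (
    select id, cast(floor(rand() * 10) as int) as salt
    from bigtable
  ) salted
  group by id, salt
) partial_agg
group by id;
```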

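4. Count(Distinct) Deduplicated Counting

- With small data it does not matter. With large data, a COUNT DISTINCT has to be completed by a single reduce task; that one reducer must process so much data that the whole job struggles to finish.

- COUNT DISTINCT is usually replaced by a GROUP BY followed by a COUNT, but watch out for data skew caused by the GROUP BY. The rewrite adds a second job, so it tends to pay off only at large data volumes; in the small demo below it is actually slower.

Demo:

--- count distinct ids directly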

select count(distinct id) from bigtable;

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 7.12 sec HDFS Read: 120741990 HDFS Write: 7 SUCCESS

Total MapReduce CPU Time Spent: 7 seconds 120 msec

OK

_c0

100001

Time taken: 23.607 seconds, Fetched: 1 row(s)

--- deduplicate ids with GROUP BY

select count(id) from (select id from bigtable group by id) a;

Stage-Stage-1: Map: 1 Reduce: 5 Cumulative CPU: 17.53 sec HDFS Read: 120752703 HDFS Write: 580 SUCCESS

Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 4.29 sec HDFS Read: 9409 HDFS Write: 7 SUCCESS

Total MapReduce CPU Time Spent: 21 seconds 820 msec

OK

_c0

100001

Time taken: 50.795 seconds, Fetched: 1 row(s)

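5. Cartesian Product

Avoid Cartesian products. They arise when:

- a join has no ON condition;

- the ON condition is invalid.

Hive can use only 1 reducer to compute a Cartesian product.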

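6. Row and Column Filtering

- Columns: in the SELECT, take only the columns you need; if the table is partitioned, filter on partitions whenever possible, and avoid SELECT *.

- Rows: with partition pruning in an outer join, if the filter on the secondary table is written in the WHERE clause, the full tables are joined first and the filter is applied only afterwards. Filtering the secondary table in a subquery before the join avoids this.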
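The row-filtering advice can be illustrated with the bigtable table from the earlier demos. A sketch using an inner self-join so that both forms return the same rows; the id <= 10 predicate is an arbitrary example. With an outer join the two forms are generally not equivalent, and Hive's own predicate pushdown may already apply the first form's filter early.

```sql
-- Filter written after the join: conceptually the tables are joined first.
select o.id
from bigtable b
join bigtable o on o.id = b.id
where o.id <= 10;

-- Filter applied in a subquery: the secondary table is reduced before the join.
select b.id
from bigtable b
join (select id from bigtable where id <= 10) o
on b.id = o.id;
```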

7. Partitioning and Bucketing

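IV. Setting a Reasonable Number of Map and Reduce Tasks

- Overview:

- Normally a job creates one or more map tasks from the input directory.

- The main determining factors are the total number of input files, the size of the input files, and the block size configured on the cluster.

- Is a larger number of maps always better?

No. If a job has many small files (far smaller than the 128 MB block size), each small file is treated as a block and handled by its own map task. When a map task takes far longer to start and initialize than to do its actual processing, a lot of resources are wasted. In addition, the number of maps that can run at the same time is limited.

- Is it enough to make sure each map handles a file block close to 128 MB?

Not necessarily. Take a 127 MB file, which would normally be handled by one map. If the file has only one or two small columns but tens of millions of records, and the map logic is fairly complex, running it in a single map task will still be slow.

To address the two problems above, two approaches are needed: reducing the number of maps and increasing the number of maps.

2. Increasing the Number of Maps for Complex Files

When the input files are large, the task logic is complex, and the maps run very slowly, consider increasing the number of maps so that each map processes less data and the task runs more efficiently.

To increase the number of maps, adjust maxSize according to the formula computeSplitSize(Math.max(minSize, Math.min(maxSize, blocksize))) = blocksize = 128M. Setting maxSize below blocksize increases the number of maps.

--- set the maximum split size to 100 bytes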

hive (dyhtest)> set mapreduce.input.fileinputformat.split.maxsize=100;
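The formula above can be evaluated directly in Hive to see why lowering maxSize produces more splits. A worked sketch: minSize = 1 and the 256000000 maxSize in the first query are assumed values for illustration, and blocksize is 134217728 bytes (128 MB).

```sql
-- maxSize above the block size: the split size stays at the block size.
select greatest(1, least(256000000, 134217728)) as split_size_bytes;   -- 134217728

-- maxSize = 100, as set above: the split size drops to 100 bytes,
-- so the same input is cut into far more splits, hence more maps.
select greatest(1, least(100, 134217728)) as split_size_bytes;         -- 100
```

A 100-byte split is only useful for demonstrating the effect; a practical value would be some fraction of the block size.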

3. Merging Small Files

- Small files are combined by default:

hive (dyhtest)> set hive.input.format;

hive.input.format=org.apache.hadoop.hive.ql.io.CombineHiveInputFormat

- Settings for merging small files when a MapReduce job finishes:

--- merge small files at the end of a map-only job; default true

hive (dyhtest)> SET hive.merge.mapfiles;

hive.merge.mapfiles=true

--- merge small files at the end of a map-reduce job; default false

hive (dyhtest)> SET hive.merge.mapredfiles = true;

--- size of the merged files; default 256 MB

hive (dyhtest)> SET hive.merge.size.per.task;

hive.merge.size.per.task=256000000

--- when the average output file size is below this value, start a separate map-reduce job to merge the files

hive (dyhtest)> SET hive.merge.smallfiles.avgsize;

hive.merge.smallfiles.avgsize=16000000

- Setting a reasonable number of reduces

--- amount of data processed per reducer; default 256 MB

hive (dyhtest)> set hive.exec.reducers.bytes.per.reducer;

hive.exec.reducers.bytes.per.reducer=256000000

--- maximum number of reducers per job; default 1009

hive (dyhtest)> set hive.exec.reducers.max;

hive.exec.reducers.max=1009
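When the reducer count is not fixed, Hive estimates it from these two parameters, roughly as min(hive.exec.reducers.max, input size / hive.exec.reducers.bytes.per.reducer). A sketch of both ways of controlling it; the 1000000000-byte input size and the value 15 are hypothetical figures for illustration.

```sql
-- Option 1: let Hive estimate (-1 means "not fixed").
set mapreduce.job.reduces = -1;

-- Rough estimate for a hypothetical 1 GB input with the defaults shown above:
-- ceil(1000000000 / 256000000) = 4, capped at 1009.
select least(1009, cast(ceil(1000000000 / 256000000) as int)) as estimated_reducers;   -- 4

-- Option 2: fix the number of reducers for the following jobs.
set mapreduce.job.reduces = 15;
```

More reducers is not always better: each one has startup cost and writes its own output file, which can bring back the small-files problem described earlier.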

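V. Parallel Execution

- Hive turns a query into one or more stages. A stage can be a MapReduce stage, a sampling stage, a merge stage, a limit stage, or any other stage Hive needs during execution.

- By default Hive executes only one stage at a time. A given job may contain many stages, however, and these stages are not necessarily fully dependent on each other; some can run in parallel, which may shorten the total execution time of the job.

- The more stages that can run in parallel, the sooner the job is likely to finish.

Setting hive.exec.parallel to true enables parallel execution. On a shared cluster, keep in mind that more parallel stages in a job means higher cluster utilization.

--- parallel execution is off by default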

hive (dyhtest)> set hive.exec.parallel;

hive.exec.parallel=false

--- enable manually

hive (dyhtest)> set hive.exec.parallel = true;

--- maximum degree of parallelism allowed for a single SQL statement; default 8

hive (dyhtest)> set hive.exec.parallel.thread.number;

hive.exec.parallel.thread.number=8

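Parallel execution only helps when the cluster has idle resources; without free resources, the stages cannot actually run in parallel.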
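A query whose branches do not depend on each other is the typical case that benefits. A sketch assuming the bigtable and smalltable tables from the earlier demos and the MapReduce engine; the metric labels are made up for the example, and whether the stages really overlap depends on available cluster resources.

```sql
set hive.exec.parallel = true;

-- The two branches of the UNION ALL read different tables and share no
-- intermediate result, so their stages are candidates for running concurrently.
select 'distinct_ids_big' as metric, count(*) as val
from (select id from bigtable group by id) a
union all
select 'rows_small' as metric, count(*) as val
from smalltable;
```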

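VI. Strict Mode

- Partitioned tables queried without a partition filter

When hive.strict.checks.no.partition.filter is set to true, a query on a partitioned table is not allowed to run unless its WHERE clause contains a filter on a partition column that limits the range. In other words, users may not scan all partitions. The reason for this restriction is that partitioned tables usually hold very large, fast-growing data sets, and a query without a partition filter can consume an unacceptable amount of resources.

--- default is false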

hive (dyhtest)> set hive.strict.checks.no.partition.filter;

hive.strict.checks.no.partition.filter=false

--- enable manually

hive (dyhtest)> set hive.strict.checks.no.partition.filter = true;

- ORDER BY without LIMIT

When hive.strict.checks.orderby.no.limit is set to true, **queries that use ORDER BY must also use LIMIT.** To perform the sort, ORDER BY sends all result rows to a single reducer; forcing users to add a LIMIT keeps that reducer from running for a very long time.

hive (dyhtest)> set hive.strict.checks.orderby.no.limit;

hive.strict.checks.orderby.no.limit=false

hive (dyhtest)> set hive.strict.checks.orderby.no.limit = true;

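- Cartesian product

When hive.strict.checks.cartesian.product is set to true, Cartesian product queries are restricted. Users familiar with relational databases may expect to write a JOIN without an ON clause and put the condition in WHERE instead, relying on the database optimizer to turn the WHERE condition into an ON condition efficiently. Unfortunately, Hive does not perform this optimization, so if the tables are large enough the query can get out of control.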

hive (dyhtest)> set hive.strict.checks.cartesian.product;

hive.strict.checks.cartesian.product=false

hive (dyhtest)> set hive.strict.checks.cartesian.product = true;
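The three checks can be exercised with short queries. A sketch assuming the emp, bigtable, and smalltable tables from earlier sections; emp_part and its partition column dt are hypothetical names for a partitioned table. The statements expected to be rejected are left commented out, and the exact error text depends on the Hive version.

```sql
set hive.strict.checks.no.partition.filter = true;
-- select * from emp_part;                       -- expected to be rejected: no partition filter
-- select * from emp_part where dt = '2024-01-01';  -- accepted (hypothetical table and column)

set hive.strict.checks.orderby.no.limit = true;
-- select * from emp order by sal;               -- expected to be rejected: ORDER BY without LIMIT
select * from emp order by sal limit 10;         -- accepted

set hive.strict.checks.cartesian.product = true;
-- select b.id from bigtable b join smalltable s;   -- expected to be rejected: no ON condition
select b.id from bigtable b join smalltable s on s.id = b.id;   -- accepted
```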

VII. JVM Reuse and Compression

- JVM reuse

- Compression