Time Series

Date and Time Data Types and Tools

from datetime import datetime

now = datetime.now()

now

datetime.datetime(2022, 3, 4, 8, 23, 31, 842698)

now.year,now.month,now.day

(2022, 3, 4)

delta = datetime(2022,3,3)-datetime(1998,10,20,8,10)

delta

datetime.timedelta(days=8534, seconds=57000)

delta.days

8534

delta.seconds

57000

from datetime import timedelta

start = datetime(2022,3,3)

start + timedelta(12)

datetime.datetime(2022, 3, 15, 0, 0)

start - 2*timedelta(12)

datetime.datetime(2022, 2, 7, 0, 0)
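A `timedelta` stores its value in separate `days`, `seconds`, and `microseconds` fields, so `delta.seconds` on its own is not the full duration. The following is a minimal standard-library sketch of how those fields relate to `total_seconds()`; the helper name `describe_delta` is made up for illustration.

```python
from datetime import datetime, timedelta


def describe_delta(delta: timedelta) -> dict:
    """Return the stored fields of a timedelta plus its total length in seconds."""
    return {
        "days": delta.days,                      # whole days
        "seconds": delta.seconds,                # leftover seconds within the last day (0..86399)
        "total_seconds": delta.total_seconds(),  # full duration as a float
    }


delta = datetime(2022, 3, 3) - datetime(1998, 10, 20, 8, 10)
info = describe_delta(delta)
print(info)
```

`total_seconds()` is usually the safer choice when comparing or summing durations, because it folds the day count back in.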

import matplotlib.pyplot as plt

from pylab import *

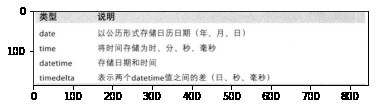

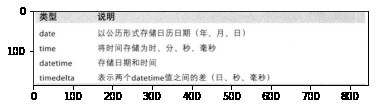

img = plt.imread('datetime模块中的数据类型.png')

imshow(img)

Converting Between Strings and datetime

stamp = datetime.datetime(2022,3,3)

str(stamp)

'2022-03-03 00:00:00'

stamp.strftime('%Y-%m-%d')

'2022-03-03'

import matplotlib.pyplot as plt

from pylab import *

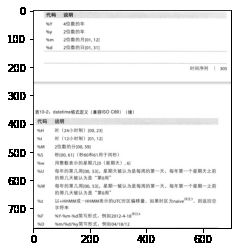

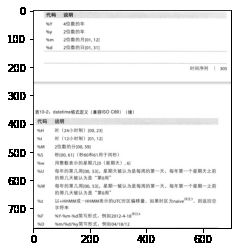

img = plt.imread('datetime格式定义.png')

imshow(img)

value = '2022-03-03'

datetime.datetime.strptime(value,'%Y-%m-%d')

datetime.datetime(2022, 3, 3, 0, 0)

datestrs = ['7/6/2022','8/6/2022']

[datetime.datetime.strptime(x,'%m/%d/%Y') for x in datestrs]

[datetime.datetime(2022, 7, 6, 0, 0), datetime.datetime(2022, 8, 6, 0, 0)]
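`strptime` needs the exact format of each string, which becomes awkward when input arrives in more than one layout. One possible approach, sketched below with only the standard library, is to try a short list of known formats in turn. The `FORMATS` tuple and the `parse_date` helper are assumptions for illustration, not part of the `datetime` module.

```python
from datetime import datetime

# Hypothetical list of accepted layouts; extend as needed.
FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def parse_date(text: str) -> datetime:
    """Try each known format and return the first successful parse."""
    for fmt in FORMATS:
        try:
            return datetime.strptime(text, fmt)
        except ValueError:
            continue  # this format did not match; try the next one
    raise ValueError(f"no known format matches {text!r}")


iso = parse_date("2022-03-03")
us_style = parse_date("7/6/2022")
print(iso, us_style)

# strftime goes the other way: datetime back to a string
print(iso.strftime("%Y-%m-%d"))
```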

from dateutil.parser import parse

parse('2022-03-03')

datetime.datetime(2022, 3, 3, 0, 0)

parse('Jan 31, 1997 10:45 PM')

datetime.datetime(1997, 1, 31, 22, 45)

parse('6/12/2011',dayfirst=True)

datetime.datetime(2011, 12, 6, 0, 0)

import pandas as pd

pd.to_datetime(datestrs)

DatetimeIndex(['2022-07-06', '2022-08-06'], dtype='datetime64[ns]', freq=None)

idx = pd.to_datetime(datestrs + [None])

idx

DatetimeIndex(['2022-07-06', '2022-08-06', 'NaT'], dtype='datetime64[ns]', freq=None)

idx[2]

NaT

pd.isnull(idx)

array([False, False, True])

import matplotlib.pyplot as plt

from pylab import *

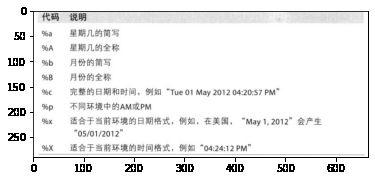

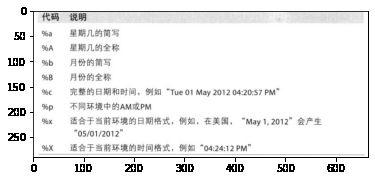

img = plt.imread('特定于当前环境的日期格式.png')

imshow(img)

Time Series Basics

from datetime import datetime

import numpy as np

from pandas import DataFrame, Series

dates = [datetime(2011,1,2),datetime(2011,1,5),datetime(2011,1,7),

datetime(2011,1,8),datetime(2011,1,10),datetime(2011,1,12)]

ts = Series(np.random.randn(6),index=dates)

ts

2011-01-02 0.955968

2011-01-05 -3.053080

2011-01-07 -0.724017

2011-01-08 -1.219987

2011-01-10 -0.303126

2011-01-12 -0.979242

dtype: float64

type(ts)

pandas.core.series.Series

ts.index

DatetimeIndex(['2011-01-02', '2011-01-05', '2011-01-07', '2011-01-08',

'2011-01-10', '2011-01-12'],

dtype='datetime64[ns]', freq=None)

ts + ts[::2]

2011-01-02 1.911936

2011-01-05 NaN

2011-01-07 -1.448034

2011-01-08 NaN

2011-01-10 -0.606251

2011-01-12 NaN

dtype: float64

ts.index.dtype

dtype('<M8[ns]')

stamp = ts.index[0]

stamp

Timestamp('2011-01-02 00:00:00')

Indexing, Selection, and Subsetting

stamp = ts.index[2]

ts[stamp]

-0.7240168825490924

ts['1/10/2011']

-0.30312563460424696

ts['20110110']

-0.30312563460424696

longer_ts = Series(np.random.randn(1000),

index=pd.date_range('1/1/2000',periods=1000))

longer_ts

2000-01-01 -0.238369

2000-01-02 1.119693

2000-01-03 0.092546

2000-01-04 1.110926

2000-01-05 0.170824

...

2002-09-22 -0.468025

2002-09-23 0.260535

2002-09-24 -1.058960

2002-09-25 0.485363

2002-09-26 -0.593321

Freq: D, Length: 1000, dtype: float64

longer_ts['2001']

2001-01-01 0.333511

2001-01-02 0.669764

2001-01-03 -1.459111

2001-01-04 0.316825

2001-01-05 -0.949078

...

2001-12-27 -1.992184

2001-12-28 0.062251

2001-12-29 0.750101

2001-12-30 -0.673639

2001-12-31 -1.512151

Freq: D, Length: 365, dtype: float64

longer_ts['2001-05']

2001-05-01 1.023397

2001-05-02 0.320480

2001-05-03 -0.518482

2001-05-04 -1.238117

2001-05-05 -0.295051

2001-05-06 1.482434

2001-05-07 0.034568

2001-05-08 -1.260276

2001-05-09 0.661322

2001-05-10 1.838568

2001-05-11 0.978017

2001-05-12 1.347121

2001-05-13 0.665021

2001-05-14 -2.233556

2001-05-15 -0.737499

2001-05-16 -0.596111

2001-05-17 1.853862

2001-05-18 -0.925573

2001-05-19 -1.398981

2001-05-20 -0.631674

2001-05-21 1.084205

2001-05-22 0.947516

2001-05-23 -0.022117

2001-05-24 -0.056961

2001-05-25 -0.477587

2001-05-26 0.097978

2001-05-27 1.341151

2001-05-28 -0.346732

2001-05-29 -1.079403

2001-05-30 1.244231

2001-05-31 1.772584

Freq: D, dtype: float64

ts[datetime(2011,1,7)]

-0.7240168825490924

ts

2011-01-02 0.955968

2011-01-05 -3.053080

2011-01-07 -0.724017

2011-01-08 -1.219987

2011-01-10 -0.303126

2011-01-12 -0.979242

dtype: float64

ts['1/6/2011':'1/11/2011']

2011-01-07 -0.724017

2011-01-08 -1.219987

2011-01-10 -0.303126

dtype: float64

ts.truncate(after='1/9/2011')

2011-01-02 0.955968

2011-01-05 -3.053080

2011-01-07 -0.724017

2011-01-08 -1.219987

dtype: float64

dates = pd.date_range('1/1/2000',periods=100,freq='W-WED')

long_df = DataFrame(np.random.randn(100,4),

index=dates,

columns=['Colorado','Texas','New York','Ohio'])

long_df.loc['5-2001']

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2001-05-02 | -1.864282 | 0.085645 | -1.803818 | 0.923839 |
| 2001-05-09 | 0.344391 | 0.471942 | 2.089121 | -1.123721 |
| 2001-05-16 | 0.770508 | 0.618750 | -0.787188 | -1.083100 |
| 2001-05-23 | 0.743285 | -0.468671 | 0.610547 | 0.957291 |
| 2001-05-30 | 0.343177 | -1.018066 | 1.196951 | -0.434751 |

Time Series with Duplicate Indices

dates = pd.DatetimeIndex(['1/1/2000','1/2/2000','1/2/2000','1/2/2000','1/3/2000'])

dup_ts = Series(np.arange(5),index=dates)

dup_ts

2000-01-01 0

2000-01-02 1

2000-01-02 2

2000-01-02 3

2000-01-03 4

dtype: int32

dup_ts.index.is_unique

False

dup_ts['1/3/2000']

4

dup_ts['1/2/2000']

2000-01-02 1

2000-01-02 2

2000-01-02 3

dtype: int32

grouped = dup_ts.groupby(level=0)

grouped.mean()

2000-01-01 0.0

2000-01-02 2.0

2000-01-03 4.0

dtype: float64

grouped.count()

2000-01-01 1

2000-01-02 3

2000-01-03 1

dtype: int64
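Once duplicate timestamps have been detected, the usual next step is to collapse them. The sketch below shows two possible strategies on the same kind of data: keeping the first observation per timestamp, or averaging the duplicates with `groupby(level=0)`. The variable names are illustrative.

```python
import numpy as np
import pandas as pd

dates = pd.DatetimeIndex(['2000-01-01', '2000-01-02', '2000-01-02',
                          '2000-01-02', '2000-01-03'])
dup_ts = pd.Series(np.arange(5), index=dates)

# Strategy 1: keep only the first row for each timestamp
first_only = dup_ts[~dup_ts.index.duplicated(keep='first')]

# Strategy 2: aggregate duplicates (here, by taking the mean)
averaged = dup_ts.groupby(level=0).mean()

print(first_only)
print(averaged)
```

Either result has a unique index, so label lookups on it return scalars rather than sub-series.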

Date Ranges, Frequencies, and Shifting

ts

2011-01-02 0.955968

2011-01-05 -3.053080

2011-01-07 -0.724017

2011-01-08 -1.219987

2011-01-10 -0.303126

2011-01-12 -0.979242

dtype: float64

ts.resample('D')

Generating Date Ranges

index = pd.date_range('4/1/2012','6/1/2012')

index

DatetimeIndex(['2012-04-01', '2012-04-02', '2012-04-03', '2012-04-04',

'2012-04-05', '2012-04-06', '2012-04-07', '2012-04-08',

'2012-04-09', '2012-04-10', '2012-04-11', '2012-04-12',

'2012-04-13', '2012-04-14', '2012-04-15', '2012-04-16',

'2012-04-17', '2012-04-18', '2012-04-19', '2012-04-20',

'2012-04-21', '2012-04-22', '2012-04-23', '2012-04-24',

'2012-04-25', '2012-04-26', '2012-04-27', '2012-04-28',

'2012-04-29', '2012-04-30', '2012-05-01', '2012-05-02',

'2012-05-03', '2012-05-04', '2012-05-05', '2012-05-06',

'2012-05-07', '2012-05-08', '2012-05-09', '2012-05-10',

'2012-05-11', '2012-05-12', '2012-05-13', '2012-05-14',

'2012-05-15', '2012-05-16', '2012-05-17', '2012-05-18',

'2012-05-19', '2012-05-20', '2012-05-21', '2012-05-22',

'2012-05-23', '2012-05-24', '2012-05-25', '2012-05-26',

'2012-05-27', '2012-05-28', '2012-05-29', '2012-05-30',

'2012-05-31', '2012-06-01'],

dtype='datetime64[ns]', freq='D')

pd.date_range(start='4/1/2012',periods=20)

DatetimeIndex(['2012-04-01', '2012-04-02', '2012-04-03', '2012-04-04',

'2012-04-05', '2012-04-06', '2012-04-07', '2012-04-08',

'2012-04-09', '2012-04-10', '2012-04-11', '2012-04-12',

'2012-04-13', '2012-04-14', '2012-04-15', '2012-04-16',

'2012-04-17', '2012-04-18', '2012-04-19', '2012-04-20'],

dtype='datetime64[ns]', freq='D')

pd.date_range(end='6/1/2012',periods=20)

DatetimeIndex(['2012-05-13', '2012-05-14', '2012-05-15', '2012-05-16',

'2012-05-17', '2012-05-18', '2012-05-19', '2012-05-20',

'2012-05-21', '2012-05-22', '2012-05-23', '2012-05-24',

'2012-05-25', '2012-05-26', '2012-05-27', '2012-05-28',

'2012-05-29', '2012-05-30', '2012-05-31', '2012-06-01'],

dtype='datetime64[ns]', freq='D')

pd.date_range('1/1/2000','12/1/2000',freq='BM')

DatetimeIndex(['2000-01-31', '2000-02-29', '2000-03-31', '2000-04-28',

'2000-05-31', '2000-06-30', '2000-07-31', '2000-08-31',

'2000-09-29', '2000-10-31', '2000-11-30'],

dtype='datetime64[ns]', freq='BM')

pd.date_range('5/2/2012 12:56:31',periods=5)

DatetimeIndex(['2012-05-02 12:56:31', '2012-05-03 12:56:31',

'2012-05-04 12:56:31', '2012-05-05 12:56:31',

'2012-05-06 12:56:31'],

dtype='datetime64[ns]', freq='D')

pd.date_range('5/2/2012 12:56:31',periods=5,normalize=True)

DatetimeIndex(['2012-05-02', '2012-05-03', '2012-05-04', '2012-05-05',

'2012-05-06'],

dtype='datetime64[ns]', freq='D')

Frequencies and Date Offsets

from pandas.tseries.offsets import Hour, Minute

hour = Hour()

hour

<Hour>

four_hours = Hour(4)

four_hours

<4 * Hours>

pd.date_range('1/1/2000','1/3/2000 23:59',freq='4h')

DatetimeIndex(['2000-01-01 00:00:00', '2000-01-01 04:00:00',

'2000-01-01 08:00:00', '2000-01-01 12:00:00',

'2000-01-01 16:00:00', '2000-01-01 20:00:00',

'2000-01-02 00:00:00', '2000-01-02 04:00:00',

'2000-01-02 08:00:00', '2000-01-02 12:00:00',

'2000-01-02 16:00:00', '2000-01-02 20:00:00',

'2000-01-03 00:00:00', '2000-01-03 04:00:00',

'2000-01-03 08:00:00', '2000-01-03 12:00:00',

'2000-01-03 16:00:00', '2000-01-03 20:00:00'],

dtype='datetime64[ns]', freq='4H')

Hour(2) + Minute(30)

<150 * Minutes>

pd.date_range('1/1/2000',periods=10,freq='1h30min')

DatetimeIndex(['2000-01-01 00:00:00', '2000-01-01 01:30:00',

'2000-01-01 03:00:00', '2000-01-01 04:30:00',

'2000-01-01 06:00:00', '2000-01-01 07:30:00',

'2000-01-01 09:00:00', '2000-01-01 10:30:00',

'2000-01-01 12:00:00', '2000-01-01 13:30:00'],

dtype='datetime64[ns]', freq='90T')

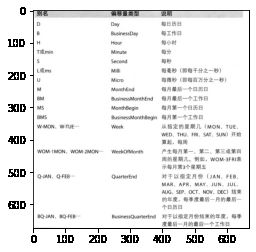

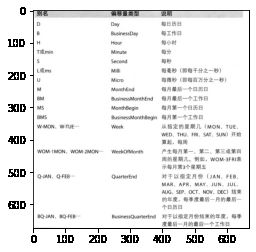

import matplotlib.pyplot as plt

from pylab import *

img = plt.imread('时间序列的基础频率1.png')

imshow(img)

import matplotlib.pyplot as plt

from pylab import *

img = plt.imread('时间序列的基础频率2.png')

imshow(img)

rng = pd.date_range('1/1/2012','9/1/2012',freq='WOM-3FRI')

list(rng)

[Timestamp('2012-01-20 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-02-17 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-03-16 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-04-20 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-05-18 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-06-15 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-07-20 00:00:00', freq='WOM-3FRI'),

Timestamp('2012-08-17 00:00:00', freq='WOM-3FRI')]

Shifting (Leading and Lagging) Data

import numpy as np

ts = Series(np.random.randn(4),

index=pd.date_range('1/1/2000',periods=4,freq='M'))

ts

2000-01-31 -0.085330

2000-02-29 0.111774

2000-03-31 1.852114

2000-04-30 -0.948230

Freq: M, dtype: float64

ts.shift(2)

2000-01-31 NaN

2000-02-29 NaN

2000-03-31 -0.085330

2000-04-30 0.111774

Freq: M, dtype: float64

ts.shift(-2)

2000-01-31 1.852114

2000-02-29 -0.948230

2000-03-31 NaN

2000-04-30 NaN

Freq: M, dtype: float64

ts/ts.shift(1)-1

2000-01-31 NaN

2000-02-29 -2.309908

2000-03-31 15.570148

2000-04-30 -1.511972

Freq: M, dtype: float64

ts.shift(2,freq='M')

2000-03-31 -0.085330

2000-04-30 0.111774

2000-05-31 1.852114

2000-06-30 -0.948230

Freq: M, dtype: float64

ts.shift(3,freq='D')

2000-02-03 -0.085330

2000-03-03 0.111774

2000-04-03 1.852114

2000-05-03 -0.948230

dtype: float64

ts.shift(1,freq='3D')

2000-02-03 -0.085330

2000-03-03 0.111774

2000-04-03 1.852114

2000-05-03 -0.948230

dtype: float64

ts.shift(1,freq='90T')

2000-01-31 01:30:00 -0.085330

2000-02-29 01:30:00 0.111774

2000-03-31 01:30:00 1.852114

2000-04-30 01:30:00 -0.948230

dtype: float64

Shifting Dates with Offsets

from pandas.tseries.offsets import Day, MonthEnd

now = datetime.datetime(2011,11,17)

now + 3*Day()

Timestamp('2011-11-20 00:00:00')

now + MonthEnd()

Timestamp('2011-11-30 00:00:00')

now + MonthEnd(2)

Timestamp('2011-12-31 00:00:00')

offset = MonthEnd()

offset.rollforward(now)

Timestamp('2011-11-30 00:00:00')

offset.rollback(now)

Timestamp('2011-10-31 00:00:00')

ts = Series(np.random.randn(20),

index=pd.date_range('1/15/2000',periods=20,freq='4d'))

ts.groupby(offset.rollforward).mean()

2000-01-31 -0.222426

2000-02-29 -0.607156

2000-03-31 -0.313228

dtype: float64

ts.resample('M').mean()

2000-01-31 -0.222426

2000-02-29 -0.607156

2000-03-31 -0.313228

Freq: M, dtype: float64

Time Zone Handling

import pytz

pytz.common_timezones[-5:]

['US/Eastern', 'US/Hawaii', 'US/Mountain', 'US/Pacific', 'UTC']

tz = pytz.timezone('US/Eastern')

tz

<DstTzInfo 'US/Eastern' LMT-1 day, 19:04:00 STD>

Localization and Conversion

rng = pd.date_range('3/9/2012 9:30',periods=6,freq='D')

ts = Series(np.random.randn(len(rng)),index=rng)

print(ts.index.tz)

None

pd.date_range('3/9/2012 9:30',periods=10,freq='D',tz='UTC')

DatetimeIndex(['2012-03-09 09:30:00+00:00', '2012-03-10 09:30:00+00:00',

'2012-03-11 09:30:00+00:00', '2012-03-12 09:30:00+00:00',

'2012-03-13 09:30:00+00:00', '2012-03-14 09:30:00+00:00',

'2012-03-15 09:30:00+00:00', '2012-03-16 09:30:00+00:00',

'2012-03-17 09:30:00+00:00', '2012-03-18 09:30:00+00:00'],

dtype='datetime64[ns, UTC]', freq='D')

ts_utc = ts.tz_localize('UTC')

ts_utc

2012-03-09 09:30:00+00:00 -1.562317

2012-03-10 09:30:00+00:00 1.076885

2012-03-11 09:30:00+00:00 0.727747

2012-03-12 09:30:00+00:00 1.327910

2012-03-13 09:30:00+00:00 -0.345919

2012-03-14 09:30:00+00:00 -1.059568

Freq: D, dtype: float64

ts_utc.index

DatetimeIndex(['2012-03-09 09:30:00+00:00', '2012-03-10 09:30:00+00:00',

'2012-03-11 09:30:00+00:00', '2012-03-12 09:30:00+00:00',

'2012-03-13 09:30:00+00:00', '2012-03-14 09:30:00+00:00'],

dtype='datetime64[ns, UTC]', freq='D')

ts_utc.tz_convert('US/Eastern')

2012-03-09 04:30:00-05:00 -1.562317

2012-03-10 04:30:00-05:00 1.076885

2012-03-11 05:30:00-04:00 0.727747

2012-03-12 05:30:00-04:00 1.327910

2012-03-13 05:30:00-04:00 -0.345919

2012-03-14 05:30:00-04:00 -1.059568

Freq: D, dtype: float64

ts_eastern = ts.tz_localize('US/Eastern')

ts_eastern.tz_convert('UTC')

2012-03-09 14:30:00+00:00 -1.562317

2012-03-10 14:30:00+00:00 1.076885

2012-03-11 13:30:00+00:00 0.727747

2012-03-12 13:30:00+00:00 1.327910

2012-03-13 13:30:00+00:00 -0.345919

2012-03-14 13:30:00+00:00 -1.059568

dtype: float64

ts_eastern.tz_convert('Europe/Berlin')

2012-03-09 15:30:00+01:00 -1.562317

2012-03-10 15:30:00+01:00 1.076885

2012-03-11 14:30:00+01:00 0.727747

2012-03-12 14:30:00+01:00 1.327910

2012-03-13 14:30:00+01:00 -0.345919

2012-03-14 14:30:00+01:00 -1.059568

dtype: float64

ts.index.tz_localize('Asia/Shanghai')

DatetimeIndex(['2012-03-09 09:30:00+08:00', '2012-03-10 09:30:00+08:00',

'2012-03-11 09:30:00+08:00', '2012-03-12 09:30:00+08:00',

'2012-03-13 09:30:00+08:00', '2012-03-14 09:30:00+08:00'],

dtype='datetime64[ns, Asia/Shanghai]', freq=None)

Operations with Time Zone-Aware Timestamp Objects

stamp = pd.Timestamp('2011-03-12 04:00')

stamp_utc = stamp.tz_localize('utc')

stamp_utc.tz_convert('US/Eastern')

Timestamp('2011-03-11 23:00:00-0500', tz='US/Eastern')

stamp_moscow = pd.Timestamp('2011-03-12 04:00',tz='Europe/Moscow')

stamp_moscow

Timestamp('2011-03-12 04:00:00+0300', tz='Europe/Moscow')

stamp_utc.value

1299902400000000000

stamp_utc.tz_convert('US/Eastern').value

1299902400000000000

from pandas.tseries.offsets import Hour

stamp = pd.Timestamp('2012-03-12 01:30',tz='US/Eastern')

stamp

Timestamp('2012-03-12 01:30:00-0400', tz='US/Eastern')

stamp + Hour()

Timestamp('2012-03-12 02:30:00-0400', tz='US/Eastern')

stamp = pd.Timestamp('2012-11-04 00:30',tz='US/Eastern')

stamp

Timestamp('2012-11-04 00:30:00-0400', tz='US/Eastern')

stamp + 2 * Hour()

Timestamp('2012-11-04 01:30:00-0500', tz='US/Eastern')

Operations Between Different Time Zones

rng = pd.date_range('3/7/2012 9:30',periods=10,freq='B')

ts = Series(np.random.randn(len(rng)),index=rng)

ts

2012-03-07 09:30:00 0.610099

2012-03-08 09:30:00 -0.377627

2012-03-09 09:30:00 0.421953

2012-03-12 09:30:00 -0.573061

2012-03-13 09:30:00 -1.092316

2012-03-14 09:30:00 -0.816095

2012-03-15 09:30:00 0.346092

2012-03-16 09:30:00 -0.203076

2012-03-19 09:30:00 0.061797

2012-03-20 09:30:00 0.588646

Freq: B, dtype: float64

ts1 = ts[:7].tz_localize('Europe/London')

ts2 = ts1[2:].tz_convert('Europe/Moscow')

result = ts1+ts2

result.index

DatetimeIndex(['2012-03-07 09:30:00+00:00', '2012-03-08 09:30:00+00:00',

'2012-03-09 09:30:00+00:00', '2012-03-12 09:30:00+00:00',

'2012-03-13 09:30:00+00:00', '2012-03-14 09:30:00+00:00',

'2012-03-15 09:30:00+00:00'],

dtype='datetime64[ns, UTC]', freq=None)
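When two series in different time zones are combined, pandas aligns them on the underlying UTC instants, and the result index is in UTC. The following is a small self-contained sketch of that behaviour with deterministic values so the alignment is easy to see; the variable names mirror the example above but the data are made up.

```python
import numpy as np
import pandas as pd

rng = pd.date_range('2012-03-07 09:30', periods=5, freq='D')
ts = pd.Series(np.arange(5.0), index=rng)

ts1 = ts.tz_localize('Europe/London')               # all five points, London time
ts2 = ts1.iloc[2:].tz_convert('Europe/Moscow')      # last three points, Moscow time

result = ts1 + ts2  # aligned on the same instants, regardless of displayed zone

print(result.index.tz)
print(result)
```

Points that exist in only one of the two series come out as `NaN`, exactly as with any other index alignment.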

Periods and Period Arithmetic

p = pd.Period(2007,freq='A-DEC')

p

Period('2007', 'A-DEC')

p + 5

Period('2012', 'A-DEC')

p - 2

Period('2005', 'A-DEC')

pd.Period('2014',freq='A-DEC') - p

<7 * YearEnds: month=12>

rng = pd.period_range('1/1/2000','6/30/2000',freq='M')

rng

PeriodIndex(['2000-01', '2000-02', '2000-03', '2000-04', '2000-05', '2000-06'], dtype='period[M]')

Series(np.random.randn(6),index=rng)

2000-01 -0.381308

2000-02 -0.433367

2000-03 -1.091356

2000-04 0.276813

2000-05 0.284625

2000-06 0.074151

Freq: M, dtype: float64

values = ['2001Q3','2002Q2','2003Q1']

index = pd.PeriodIndex(values,freq='Q-DEC')

index

PeriodIndex(['2001Q3', '2002Q2', '2003Q1'], dtype='period[Q-DEC]')

Period Frequency Conversion

p = pd.Period('2007',freq='A-DEC')

p.asfreq('M',how='start')

Period('2007-01', 'M')

p.asfreq('M',how='end')

Period('2007-12', 'M')

p = pd.Period('2007',freq='A-JUN')

p.asfreq('M','start')

Period('2006-07', 'M')

p.asfreq('M','end')

Period('2007-06', 'M')

p = pd.Period('2007-08','M')

p.asfreq('A-JUN')

Period('2008', 'A-JUN')

rng = pd.period_range('2006','2009',freq='A-DEC')

ts = Series(np.random.randn(len(rng)),index=rng)

ts

2006 1.185920

2007 -1.529096

2008 1.232203

2009 0.161538

Freq: A-DEC, dtype: float64

ts.asfreq('M',how='start')

2006-01 1.185920

2007-01 -1.529096

2008-01 1.232203

2009-01 0.161538

Freq: M, dtype: float64

ts.asfreq('B',how='end')

2006-12-29 1.185920

2007-12-31 -1.529096

2008-12-31 1.232203

2009-12-31 0.161538

Freq: B, dtype: float64

Quarterly Period Frequencies

p = pd.Period('2012Q4',freq='Q-JAN')

p

Period('2012Q4', 'Q-JAN')

p.asfreq('D','start')

Period('2011-11-01', 'D')

p.asfreq('D','end')

Period('2012-01-31', 'D')

p4pm = (p.asfreq('B','e')-1).asfreq('T','s')+16*60

p4pm

Period('2012-01-30 16:00', 'T')

p4pm.to_timestamp()

Timestamp('2012-01-30 16:00:00')

rng = pd.period_range('2011Q3','2012Q4',freq='Q-JAN')

ts = Series(np.arange(len(rng)),index=rng)

ts

2011Q3 0

2011Q4 1

2012Q1 2

2012Q2 3

2012Q3 4

2012Q4 5

Freq: Q-JAN, dtype: int32

new_rng = (rng.asfreq('B','e')-1).asfreq('T','s')+16*60

ts.index = new_rng.to_timestamp()

ts

2010-10-28 16:00:00 0

2011-01-28 16:00:00 1

2011-04-28 16:00:00 2

2011-07-28 16:00:00 3

2011-10-28 16:00:00 4

2012-01-30 16:00:00 5

dtype: int32

Converting Timestamps to Periods (and Back)

rng = pd.date_range('1/1/2000',periods=3,freq='M')

ts = Series(np.random.randn(3),index=rng)

pts = ts.to_period()

ts

2000-01-31 0.827634

2000-02-29 0.238047

2000-03-31 -0.154483

Freq: M, dtype: float64

pts

2000-01 0.827634

2000-02 0.238047

2000-03 -0.154483

Freq: M, dtype: float64

rng = pd.date_range('1/29/2000',periods=6,freq='D')

ts2 = Series(np.random.randn(6),index=rng)

ts2.to_period('M')

2000-01 -1.799402

2000-01 -0.281554

2000-01 -0.979846

2000-02 -1.499961

2000-02 0.192467

2000-02 0.126386

Freq: M, dtype: float64

pts = ts.to_period()

pts

2000-01 0.827634

2000-02 0.238047

2000-03 -0.154483

Freq: M, dtype: float64

pts.to_timestamp(how='end')

2000-01-31 23:59:59.999999999 0.827634

2000-02-29 23:59:59.999999999 0.238047

2000-03-31 23:59:59.999999999 -0.154483

dtype: float64

Creating a PeriodIndex from Arrays

data = pd.read_csv(r"E:\python_study_files\python\pydata-book-2nd-edition\examples\macrodata.csv")

data.year

0 1959.0

1 1959.0

2 1959.0

3 1959.0

4 1960.0

...

198 2008.0

199 2008.0

200 2009.0

201 2009.0

202 2009.0

Name: year, Length: 203, dtype: float64

data.quarter

0 1.0

1 2.0

2 3.0

3 4.0

4 1.0

...

198 3.0

199 4.0

200 1.0

201 2.0

202 3.0

Name: quarter, Length: 203, dtype: float64

index = pd.PeriodIndex(year=data.year,quarter=data.quarter,freq='Q-DEC')

index

PeriodIndex(['1959Q1', '1959Q2', '1959Q3', '1959Q4', '1960Q1', '1960Q2',

'1960Q3', '1960Q4', '1961Q1', '1961Q2',

...

'2007Q2', '2007Q3', '2007Q4', '2008Q1', '2008Q2', '2008Q3',

'2008Q4', '2009Q1', '2009Q2', '2009Q3'],

dtype='period[Q-DEC]', length=203)

data.index = index

data.infl

1959Q1 0.00

1959Q2 2.34

1959Q3 2.74

1959Q4 0.27

1960Q1 2.31

...

2008Q3 -3.16

2008Q4 -8.79

2009Q1 0.94

2009Q2 3.37

2009Q3 3.56

Freq: Q-DEC, Name: infl, Length: 203, dtype: float64

Resampling and Frequency Conversion

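Aggregating higher-frequency data to a lower frequency is called downsampling; converting lower-frequency data to a higher frequency is called upsampling.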
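To make the two directions concrete, here is a minimal sketch on a four-point, one-minute series: summing into 2-minute bins (downsampling) and forward-filling onto a 30-second grid (upsampling). The data and bin sizes are chosen only for illustration.

```python
import pandas as pd

rng = pd.date_range('2000-01-01', periods=4, freq='min')
ts = pd.Series([0, 1, 2, 3], index=rng)

# Downsampling: several observations fall into each bin, so an aggregation is needed
down = ts.resample('2min').sum()

# Upsampling: new timestamps have no data, so a fill rule is needed
up = ts.resample('30s').ffill()

print(down)
print(up)
```

Downsampling always requires an aggregation function, while upsampling requires a decision about how to fill the gaps (forward fill here; leaving them as `NaN` is also possible with `asfreq()`).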

rng = pd.date_range('1/1/2000',periods=100,freq='D')

ts = Series(np.random.randn(len(rng)),index=rng)

ts.resample('M').mean()

2000-01-31 0.070790

2000-02-29 0.026944

2000-03-31 -0.011548

2000-04-30 0.078033

Freq: M, dtype: float64

ts.resample('M',kind='period').mean()

2000-01 0.070790

2000-02 0.026944

2000-03 -0.011548

2000-04 0.078033

Freq: M, dtype: float64

import matplotlib.pyplot as plt

from pylab import *

img = plt.imread('resample方法的参数.png')

imshow(img)


Downsampling

rng = pd.date_range('1/1/2000',periods=12,freq='T')

ts = Series(np.arange(12),index=rng)

ts

2000-01-01 00:00:00 0

2000-01-01 00:01:00 1

2000-01-01 00:02:00 2

2000-01-01 00:03:00 3

2000-01-01 00:04:00 4

2000-01-01 00:05:00 5

2000-01-01 00:06:00 6

2000-01-01 00:07:00 7

2000-01-01 00:08:00 8

2000-01-01 00:09:00 9

2000-01-01 00:10:00 10

2000-01-01 00:11:00 11

Freq: T, dtype: int32

ts.resample('5min').sum()

2000-01-01 00:00:00 10

2000-01-01 00:05:00 35

2000-01-01 00:10:00 21

Freq: 5T, dtype: int32

ts.resample('5min',closed='right').sum()

1999-12-31 23:55:00 0

2000-01-01 00:00:00 15

2000-01-01 00:05:00 40

2000-01-01 00:10:00 11

Freq: 5T, dtype: int32

ts.resample('5min',closed='left',label='left').sum()

2000-01-01 00:00:00 10

2000-01-01 00:05:00 35

2000-01-01 00:10:00 21

Freq: 5T, dtype: int32

from pandas.tseries.frequencies import to_offset

resampled = ts.resample('5min').sum()

resampled.index = resampled.index + to_offset('-1s')

resampled

1999-12-31 23:59:59 10

2000-01-01 00:04:59 35

2000-01-01 00:09:59 21

Freq: 5T, dtype: int32

OHLC Resampling

ts.resample('5min').ohlc()

| | open | high | low | close |
| --- | --- | --- | --- | --- |
| 2000-01-01 00:00:00 | 0 | 4 | 0 | 4 |
| 2000-01-01 00:05:00 | 5 | 9 | 5 | 9 |
| 2000-01-01 00:10:00 | 10 | 11 | 10 | 11 |

Resampling with groupby

rng = pd.date_range('1/1/2000',periods=100,freq='D')

ts = Series(np.arange(100),index=rng)

ts.groupby(lambda x: x.month).mean()

1 15.0

2 45.0

3 75.0

4 95.0

dtype: float64

ts.groupby(lambda x: x.weekday).mean()

0 47.5

1 48.5

2 49.5

3 50.5

4 51.5

5 49.0

6 50.0

dtype: float64

Upsampling and Interpolation

frame = DataFrame(np.random.randn(2,4),

index=pd.date_range('1/1/2000',periods=2,freq='W-WED'),

columns=['Colorado','Texas','New York','Ohio'])

frame[:5]

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000-01-05 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-12 | -0.202733 | 2.769799 | -0.172722 | 1.090545 |

df_daily = frame.resample('D')

df_daily

frame.resample('D').ffill()

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000-01-05 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-06 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-07 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-08 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-09 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-10 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-11 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-12 | -0.202733 | 2.769799 | -0.172722 | 1.090545 |

frame.resample('D').ffill(limit=2)

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000-01-05 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-06 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-07 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-08 | NaN | NaN | NaN | NaN |
| 2000-01-09 | NaN | NaN | NaN | NaN |
| 2000-01-10 | NaN | NaN | NaN | NaN |
| 2000-01-11 | NaN | NaN | NaN | NaN |
| 2000-01-12 | -0.202733 | 2.769799 | -0.172722 | 1.090545 |

frame.resample('W-THU').ffill()

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000-01-06 | -0.639248 | 0.966629 | 1.353138 | -0.141245 |
| 2000-01-13 | -0.202733 | 2.769799 | -0.172722 | 1.090545 |

Resampling with Periods

frame = DataFrame(np.random.randn(24,4),

index=pd.period_range('1-2000','12-2001',freq='M'),

columns=['Colorado','Texas','New York','Ohio'])

frame[:5]

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000-01 | -0.111358 | -0.647902 | -1.546984 | -0.723733 |
| 2000-02 | 0.080523 | -0.957168 | -0.032819 | -0.142153 |
| 2000-03 | -0.357317 | 0.714370 | 0.381672 | -1.212166 |
| 2000-04 | -2.072597 | -1.275430 | -0.972187 | 0.395826 |
| 2000-05 | 0.204685 | 0.403605 | -0.206892 | -0.623941 |

annual_frame = frame.resample('A-DEC').mean()

annual_frame

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |

annual_frame.resample('Q-DEC').ffill()

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000Q1 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q2 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q3 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q4 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q1 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q2 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q3 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q4 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |

annual_frame.resample('Q-DEC',convention='start').ffill()

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000Q1 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q2 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q3 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2000Q4 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q1 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q2 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q3 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2001Q4 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |

annual_frame.resample('Q-MAR').ffill()

| | Colorado | Texas | New York | Ohio |
| --- | --- | --- | --- | --- |
| 2000Q4 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q1 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q2 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q3 | -0.094581 | 0.172699 | -0.01049 | -0.184761 |
| 2001Q4 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2002Q1 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2002Q2 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |
| 2002Q3 | -0.209692 | 0.172219 | -0.16941 | -0.244421 |

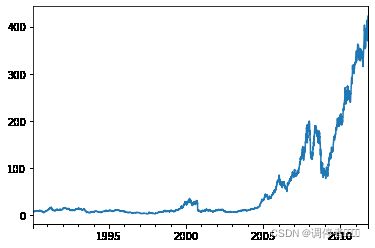

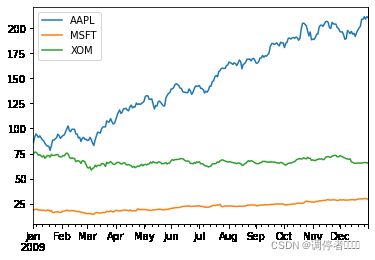

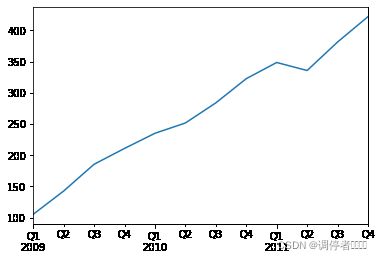

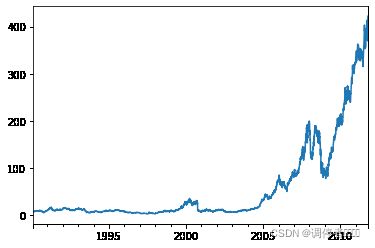

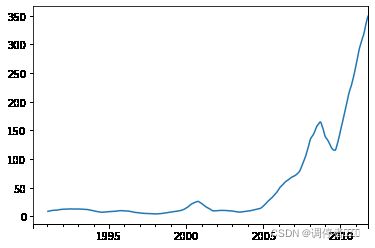

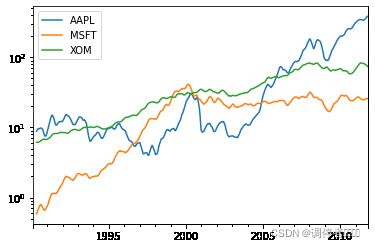

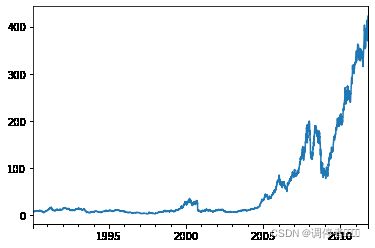

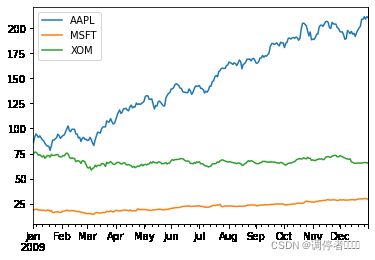

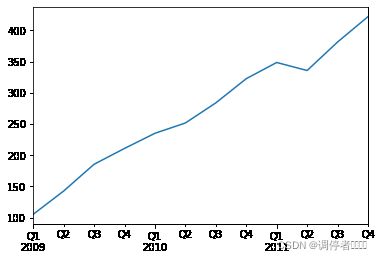

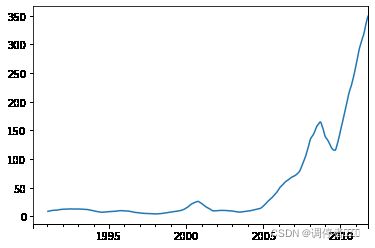

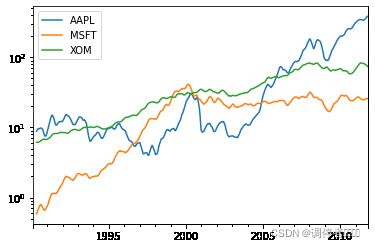

Time Series Plotting

close_px_all = pd.read_csv(r"E:\python_study_files\python\pydata-book-2nd-edition\examples\stock_px.csv",parse_dates=True,index_col=0)

close_px = close_px_all[['AAPL','MSFT','XOM']]

close_px = close_px.resample('B').ffill()

close_px

| | AAPL | MSFT | XOM |
| --- | --- | --- | --- |
| 1990-02-01 | 7.86 | 0.51 | 6.12 |
| 1990-02-02 | 8.00 | 0.51 | 6.24 |
| 1990-02-05 | 8.18 | 0.51 | 6.25 |
| 1990-02-06 | 8.12 | 0.51 | 6.23 |
| 1990-02-07 | 7.77 | 0.51 | 6.33 |
| ... | ... | ... | ... |
| 2011-10-10 | 388.81 | 26.94 | 76.28 |
| 2011-10-11 | 400.29 | 27.00 | 76.27 |
| 2011-10-12 | 402.19 | 26.96 | 77.16 |
| 2011-10-13 | 408.43 | 27.18 | 76.37 |
| 2011-10-14 | 422.00 | 27.27 | 78.11 |

5662 rows × 3 columns

close_px['AAPL'].plot()

close_px.loc['2009'].plot()

close_px['AAPL'].loc['01-2011':'03-2011'].plot()

appl_q = close_px['AAPL'].resample('Q-DEC').ffill()

appl_q.loc['2009':].plot()

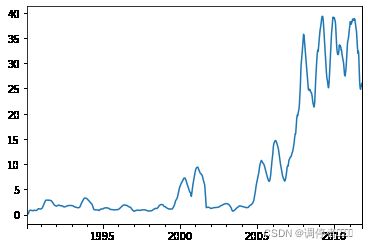

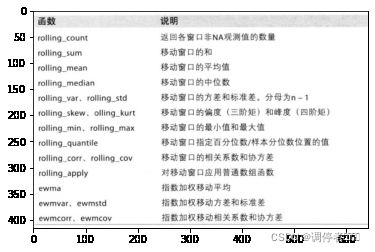

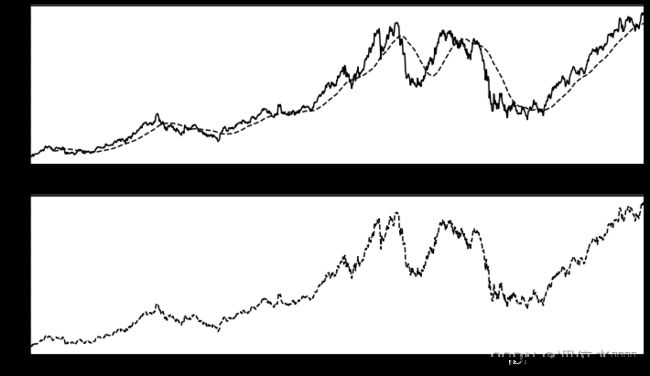

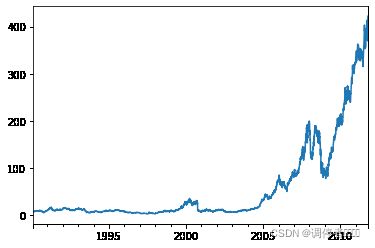

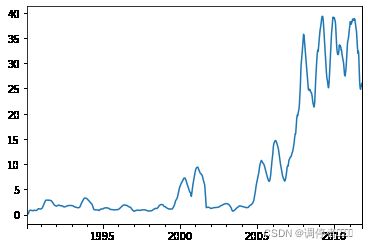

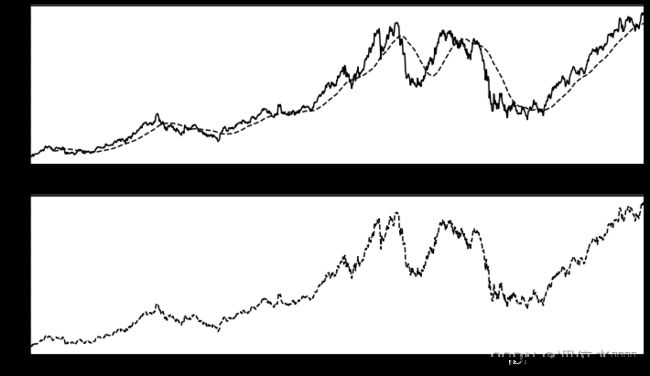

Moving Window Functions

close_px.AAPL.plot()

close_px.AAPL.rolling(window=250).mean().plot()

appl_std250 = close_px.AAPL.rolling(window=250,min_periods=10).std()

appl_std250[5:12]

1990-02-08 NaN

1990-02-09 NaN

1990-02-12 NaN

1990-02-13 NaN

1990-02-14 0.148189

1990-02-15 0.141003

1990-02-16 0.135454

Freq: B, Name: AAPL, dtype: float64

appl_std250.plot()

expanding_mean = lambda x: x.rolling(window=len(x),min_periods=1).mean()

close_px.rolling(60).mean().plot(logy=True)
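The difference between a fixed-size rolling window and an expanding window is easiest to see on a tiny series. The sketch below uses made-up values and also checks that the `rolling(window=len(x), min_periods=1)` idiom used for `expanding_mean` matches pandas' built-in `expanding()`.

```python
import numpy as np
import pandas as pd

s = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0])

# Fixed window of 3: the first two values are NaN because the window is not yet full
rolling_mean = s.rolling(window=3).mean()

# Expanding window: each value is the mean of everything seen so far
expanding_mean = s.expanding().mean()

# The same result via a rolling window as long as the series with min_periods=1
same_as_expanding = s.rolling(window=len(s), min_periods=1).mean()

print(rolling_mean.tolist())
print(expanding_mean.tolist())
```

`min_periods` controls how many non-missing observations a window needs before it produces a value, which is why the standard-deviation example above starts returning numbers at the tenth row.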

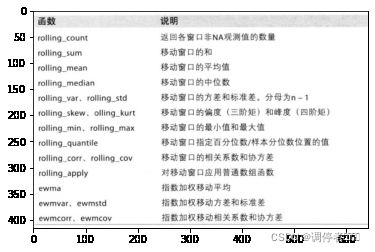

import matplotlib.pyplot as plt

from pylab import *

img = plt.imread('移动窗口和指数加权函数.png')

imshow(img)

Exponentially Weighted Functions

fig, axes = plt.subplots(nrows=2,ncols=1,sharex=True,sharey=True,figsize=(12,7))

aapl_px = close_px.AAPL['2005':'2009']

ma60 = aapl_px.rolling(window=60,min_periods=50).mean()

ewma60 = aapl_px.ewm(span=60).mean()

aapl_px.plot(style='k-',ax=axes[0])

ma60.plot(style='k--',ax=axes[0])

aapl_px.plot(style='k-',ax=axes[1])

ewma60.plot(style='k--',ax=axes[1])

axes[0].set_title('Simple MA')

axes[1].set_title('Exponentially-weighted MA')

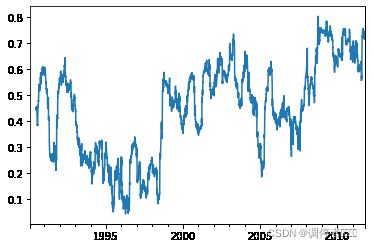

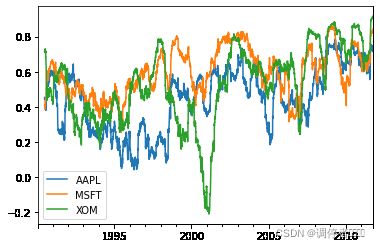
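For intuition about what `span` means, the following sketch compares pandas' `ewm(...).mean()` with a hand-written recursion. It assumes `adjust=False`, where the smoothing factor is `alpha = 2 / (span + 1)` and each value is `(1 - alpha) * previous + alpha * current`; with the default `adjust=True` the early values differ slightly. The helper `manual_ewma` is illustrative only.

```python
import numpy as np
import pandas as pd


def manual_ewma(values, span):
    """Recursive exponentially weighted mean (the adjust=False form)."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]  # the first output equals the first observation
    for x in values[1:]:
        out.append((1 - alpha) * out[-1] + alpha * x)
    return out


x = [1.0, 2.0, 3.0, 4.0]
by_hand = manual_ewma(x, span=3)
by_pandas = pd.Series(x).ewm(span=3, adjust=False).mean()

print(by_hand)
print(by_pandas.tolist())
```

A larger `span` gives a smaller `alpha`, so the average reacts more slowly to new observations.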

Binary Moving Window Functions

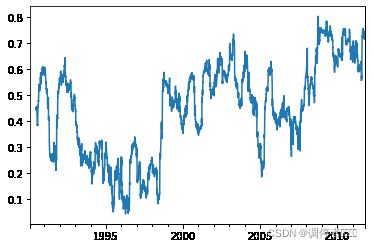

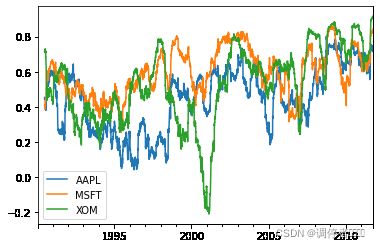

spx_px = close_px_all['SPX']

spx_rets = spx_px/spx_px.shift(1)-1

returns = close_px.pct_change()

corr = returns.AAPL.rolling(window=125,min_periods=100).corr(spx_rets)

corr.plot()

corr = returns.rolling(window=125,min_periods=100).corr(spx_rets)

corr.plot()

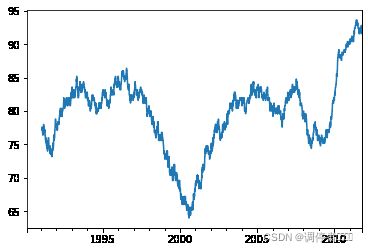

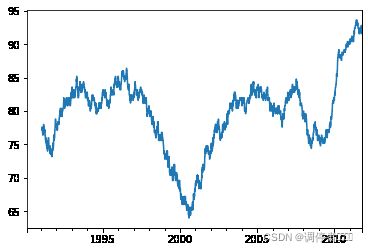

User-Defined Moving Window Functions

from scipy.stats import percentileofscore

score_at_2percent = lambda x: percentileofscore(x,0.02)

result = returns.AAPL.rolling(window=250).apply(score_at_2percent)

result.plot()

Performance and Memory Usage Notes

rng = pd.date_range('1/1/2000',periods=10000000,freq='10ms')

ts = Series(np.random.randn(len(rng)),index=rng)

ts

2000-01-01 00:00:00.000 -2.158081

2000-01-01 00:00:00.010 -0.800653

2000-01-01 00:00:00.020 -1.063636

2000-01-01 00:00:00.030 -0.350992

2000-01-01 00:00:00.040 0.025731

...

2000-01-02 03:46:39.950 1.064119

2000-01-02 03:46:39.960 -1.168419

2000-01-02 03:46:39.970 0.165532

2000-01-02 03:46:39.980 -0.335836

2000-01-02 03:46:39.990 0.906393

Freq: 10L, Length: 10000000, dtype: float64

ts.resample('15min').ohlc()

| | open | high | low | close |
| --- | --- | --- | --- | --- |
| 2000-01-01 00:00:00 | -2.158081 | 4.707701 | -4.575291 | -0.681948 |
| 2000-01-01 00:15:00 | -1.153593 | 4.948694 | -4.179428 | -0.846462 |
| 2000-01-01 00:30:00 | 0.264933 | 3.978436 | -4.369072 | -1.591544 |
| 2000-01-01 00:45:00 | -0.733233 | 4.702323 | -4.718692 | -0.031456 |
| 2000-01-01 01:00:00 | 0.409060 | 4.495952 | -4.611355 | -0.462664 |
| ... | ... | ... | ... | ... |
| 2000-01-02 02:45:00 | 0.543960 | 4.395054 | -4.944563 | -0.389855 |
| 2000-01-02 03:00:00 | -2.653642 | 3.921464 | -4.256564 | -0.513813 |
| 2000-01-02 03:15:00 | -0.429338 | 4.326163 | -4.141456 | -0.542056 |
| 2000-01-02 03:30:00 | -0.739633 | 4.639564 | -4.794910 | 0.619659 |
| 2000-01-02 03:45:00 | -0.787038 | 4.072322 | -3.949441 | 0.906393 |

112 rows × 4 columns

%timeit ts.resample('15min').ohlc()

109 ms ± 3.1 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)

%timeit ts.resample('15s').ohlc()

113 ms ± 2.48 ms per loop (mean ± std. dev. of 7 runs, 10 loops each)