Scraping Anjuke rental listings with a Java web crawler (complete code at the end of the article)

Step 1: Write the crawler code that collects the URLs on each page

Each page of Anjuke's rental listings holds a little over 60 listings, and each listing looks as shown in the figure:

Open the HTML source of the page:

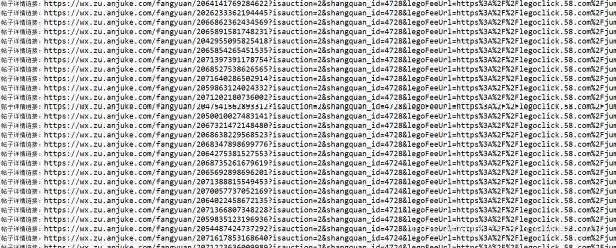

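Analyzing the source shows that the link in the red box in this image is the link to each listing's detail page, so the first task is to crawl all of these detail links. The result is shown below.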
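The two ideas of Step 1, substituting a page number into the list-page URL and pulling every detail link out of the list page's HTML with a regular expression, can be tried offline with the sketch below. The URL template is the one used in the complete code; the class name `ListPageSketch` and the link pattern are hypothetical (the regex in the crawler code is empty), so the pattern must be checked against the real page source before use.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ListPageSketch {
    // List-page URL template, taken from the article's complete code.
    static final String URL1 = "https://wx.zu.anjuke.com/fangyuan/p{pageStart}/";

    // HYPOTHETICAL pattern: assumes each detail link is an <a> tag whose href
    // contains "/fangyuan/" followed directly by digits. Verify against the page.
    static final Pattern DETAIL_LINK =
            Pattern.compile("<a[^>]*href=\"(https?://[^\"]*/fangyuan/\\d+[^\"]*)\"");

    /** Substitutes a page number into the list-page URL template. */
    static String pageUrl(int page) {
        return URL1.replace("{pageStart}", Integer.toString(page));
    }

    /** Returns every detail-page link found in the list-page HTML, in page order. */
    static List<String> extractLinks(String html) {
        List<String> links = new ArrayList<>();
        Matcher m = DETAIL_LINK.matcher(html);
        while (m.find()) {
            links.add(m.group(1)); // group 1 is the captured href value
        }
        return links;
    }
}
```

Requiring digits right after `/fangyuan/` is what keeps pagination links such as `.../fangyuan/p3/` out of the result in this sketch.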

The crawler code is:

URL url = new URL(URL1.replace("{pageStart}", pageStrat + ""));
HttpURLConnection connection = (HttpURLConnection) url.openConnection();
// set the request method
connection.setRequestMethod("GET");
// 10-second connect and read timeouts
connection.setConnectTimeout(10000);
connection.setReadTimeout(10000);
// connect
connection.connect();
// get the response code
int responseCode = connection.getResponseCode();
if (responseCode == HttpURLConnection.HTTP_OK) {
    // read the whole list page; try-with-resources closes the reader and the stream
    StringBuilder returnStr = new StringBuilder();
    try (BufferedReader reader = new BufferedReader(
            new InputStreamReader(connection.getInputStream(), "UTF-8"))) {
        String line;
        while ((line = reader.readLine()) != null) {
            returnStr.append(line).append("\r\n");
        }
    }
    connection.disconnect();
    // The detail-link regex is empty here. It needs at least two capture groups,
    // with group 2 holding the detail-page URL; an empty pattern makes m.group(2) throw.
    Pattern p = Pattern.compile("");
    Matcher m = p.matcher(returnStr);
    while (m.find()) {
        System.out.println("Detail link: " + m.group(2));
    }
}

Step 2: Loop over the listing detail pages (the detail links obtained in the previous step) and crawl each listing's details, such as the rent, floor area and address.
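Step 2 sends the same kind of GET request as Step 1, once per detail link, so one option is to move the request code into a helper. The sketch below uses only the JDK; the class and method names are hypothetical, and the Cookie and User-Agent values are the ones Step 4 copies from the browser.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

final class PageFetcher {
    /** Reads a whole stream as UTF-8, keeping the "\r\n" line endings the article's regexes expect. */
    static String readBody(InputStream in) throws IOException {
        StringBuilder body = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                body.append(line).append("\r\n");
            }
        }
        return body.toString();
    }

    /**
     * GETs a page with 10-second timeouts and the given Cookie/User-Agent headers.
     * Returns null when the server answers with anything other than 200 OK.
     */
    static String fetch(String pageUrl, String cookie, String userAgent) throws IOException {
        HttpURLConnection connection = (HttpURLConnection) URI.create(pageUrl).toURL().openConnection();
        try {
            connection.setRequestMethod("GET");
            connection.setConnectTimeout(10_000);
            connection.setReadTimeout(10_000);
            connection.setRequestProperty("Cookie", cookie);
            connection.setRequestProperty("User-Agent", userAgent);
            // getResponseCode() opens the connection if it is not open yet
            if (connection.getResponseCode() != HttpURLConnection.HTTP_OK) {
                return null;
            }
            return readBody(connection.getInputStream());
        } finally {
            connection.disconnect(); // released even if reading fails
        }
    }
}
```

`readBody` is kept separate so it can be tested without the network. For example, `PageFetcher.fetch(URL1.replace("{pageStart}", "2"), "<cookie value>", "<user-agent value>")` would return list page 2, or `null` on a non-200 response.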

Opening a detail page, part of it looks like this:

So I analyzed the corresponding part of the page's HTML source.

Based on that HTML, I wrote the following regular expressions:

Pattern p2 = Pattern.compile("房屋编码:([^\\\"]*),发布时间:([^\\\"]*)\r\n");
Matcher m2 = p2.matcher(tempReturnStr);
Pattern p3 = Pattern.compile("面积:\r\n" + " ([^\"]*)");
Matcher m3 = p3.matcher(tempReturnStr);
Pattern p4 = Pattern.compile("朝向:\r\n" + " ([^\"]*)");
Matcher m4 = p4.matcher(tempReturnStr);
Pattern p5 = Pattern.compile("楼层:\r\n" + " ([^\"]*)");
Matcher m5 = p5.matcher(tempReturnStr);
Pattern p6 = Pattern.compile("小区:\r\n" + " ([^\"]*)");
Matcher m6 = p6.matcher(tempReturnStr);
Pattern p7 = Pattern.compile("([^\"]*)元/月");
Matcher m7 = p7.matcher(tempReturnStr);

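The capture groups map to the fields as follows:

m2.group(1) is the house code (listing ID)

m2.group(2) is the publication time

m3.group(1) is the floor area

m4.group(1) is the orientation

m5.group(1) is the floor

m6.group(2) is the name of the residential complex

m7.group(1) is the rent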
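Looking each field up once per detail page, rather than advancing six matchers together in one `while` condition, means a missing field yields `null` instead of silently skipping the whole listing. A minimal sketch, assuming the field text appears in the same form as in the regexes above; the tighter character classes and the names `DetailFields` and `firstGroup` are hypothetical:

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class DetailFields {
    // HYPOTHETICAL patterns modelled on p2 and p7. The classes [^,\r\n<]* and
    // [^\r\n<]* stop at a comma, line break or tag, so a field cannot swallow
    // the rest of the page the way a greedy [^\"]* can.
    static final Pattern CODE_AND_TIME =
            Pattern.compile("房屋编码:([^,\\r\\n<]*),发布时间:([^\\r\\n<]*)");
    static final Pattern RENT = Pattern.compile("(\\d+)元/月");

    /** Returns the requested group of the first match, or null when the field is missing. */
    static String firstGroup(Pattern pattern, CharSequence page, int group) {
        Matcher m = pattern.matcher(page);
        return m.find() ? m.group(group).trim() : null;
    }
}
```

A caller can then skip or log a listing whose fields come back `null` before inserting it.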

Each matched field is printed to the console; the output looks like this:

The information inside the red box is the scraped data printed out.

Step 3: Configure and connect to the database, then store the details in a table

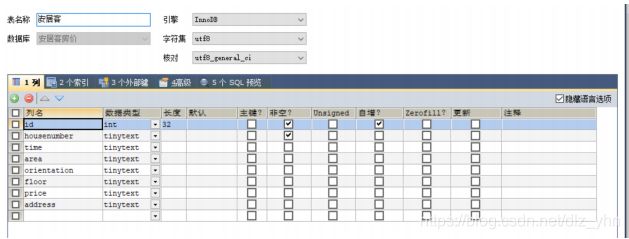

In SQLyog, create a database named “安居客房价” (Anjuke rental prices) and, inside it, a table named “安居客” (Anjuke). The table definition is:
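If you would rather create the table from Java than in SQLyog, a minimal sketch follows. Only the table name and the seven column names come from this article (from the INSERT statement below); the column types, lengths and character set are assumptions, and `RentalTable` is a hypothetical class name.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;

final class RentalTable {
    // HYPOTHETICAL schema: column names match the article's INSERT statement,
    // but every type and length here is an assumption. All values are stored as
    // text because the regexes capture raw strings.
    static final String CREATE_SQL =
            "CREATE TABLE IF NOT EXISTS `安居客` ("
            + "`housenumber` VARCHAR(32), "
            + "`time` VARCHAR(32), "
            + "`area` VARCHAR(32), "
            + "`orientation` VARCHAR(16), "
            + "`floor` VARCHAR(32), "
            + "`price` VARCHAR(16), "
            + "`address` VARCHAR(128)"
            + ") DEFAULT CHARSET=utf8mb4";

    /** Creates the table if it does not exist yet; the Statement is closed afterwards. */
    static void create(Connection conn) throws SQLException {
        try (Statement stat = conn.createStatement()) {
            stat.executeUpdate(CREATE_SQL);
        }
    }
}
```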

The database configuration code is:

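Class.forName("com.mysql.cj.jdbc.Driver");
String sqlUrl = "jdbc:mysql://localhost:3306/安居客房价?characterEncoding=UTF-8";
Connection conn = DriverManager.getConnection(sqlUrl, "root", "<your password>");
Statement stat = conn.createStatement();

Next, replace the statements that print the details (house code, publication time and so on) with statements that insert them into the database. The replacement code is as follows (“安居客” is the name of the table I created in the 安居客房价 database):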

while (m2.find() && m3.find() && m4.find() && m5.find() && m6.find() && m7.find()) {
    String str = "insert into 安居客(housenumber,time,area,orientation,floor,price,address) values('"
            + m2.group(1) + "','" + m2.group(2) + "','" + m3.group(1) + "','" + m4.group(1)
            + "','" + m5.group(1) + "','" + m7.group(1) + "','" + m6.group(2) + "')";
    stat.executeUpdate(str);
}

After the insertion, the table looks like this:
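Building the INSERT by string concatenation breaks as soon as a scraped value contains a single quote, and it leaves the statement open to SQL injection. A sketch of the same insert with `PreparedStatement` follows; `ListingDao` and `insert` are hypothetical names, and `conn` is the `Connection` opened above.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

final class ListingDao {
    // Same table and columns as the article's INSERT, but with ? placeholders,
    // so a quote inside a scraped value cannot break or alter the SQL.
    static final String INSERT_SQL =
            "insert into 安居客(housenumber,time,area,orientation,floor,price,address) "
            + "values(?,?,?,?,?,?,?)";

    /** Inserts one listing; arguments follow the column order above. Returns the row count. */
    static int insert(Connection conn, String houseNumber, String time, String area,
                      String orientation, String floor, String price, String address)
            throws SQLException {
        try (PreparedStatement ps = conn.prepareStatement(INSERT_SQL)) {
            ps.setString(1, houseNumber);
            ps.setString(2, time);
            ps.setString(3, area);
            ps.setString(4, orientation);
            ps.setString(5, floor);
            ps.setString(6, price);
            ps.setString(7, address);
            return ps.executeUpdate();
        }
    }
}
```

Inside the matching loop the call would be `ListingDao.insert(conn, m2.group(1), m2.group(2), m3.group(1), m4.group(1), m5.group(1), m7.group(1), m6.group(2));`, keeping the column order of the original statement.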

Step 4: Getting past the anti-crawler measures

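At first the crawler sent one request every 10 seconds, but the IP was banned after almost 100 listings had been crawled, so I took some measures against the site's anti-crawler defenses.

1. Add request headers. Open the Anjuke rental site in a browser, press F12 to open the developer tools, and click "Network" to get the following view: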

In the request headers on the right, find the Cookie and User-Agent values, copy them and paste them into the code:

connection.setRequestProperty("Cookie", "<cookie value>");
connection.setRequestProperty("User-Agent", "<user-agent value>");

Next, I set a random interval of 10 to 20 seconds between requests, with the following code:

Random rand = new Random();
// nextInt(11) returns 0..10, so a is 10..20 seconds
int a = rand.nextInt(11) + 10;
Thread.sleep(a * 1000);

Complete code:

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URL;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Random;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class Main {
    public static String URL1 = "https://wx.zu.anjuke.com/fangyuan/p{pageStart}/";

    public static void main(String[] args) throws IOException, ClassNotFoundException, SQLException, InterruptedException {
        //System.getProperties().setProperty("proxySet", "true");
        //System.getProperties().setProperty("http.proxyHost", "125.87.92.163");
        //System.getProperties().setProperty("http.proxyPort", "4256");
        Class.forName("com.mysql.cj.jdbc.Driver");
        String sqlUrl = "jdbc:mysql://localhost:3306/安居客房价?characterEncoding=UTF-8";
        Connection conn = DriverManager.getConnection(sqlUrl, "root", "<your password>");
        Statement stat = conn.createStatement();
        // Declared before the loop: inside it, the page number was reset to 2 on every
        // pass, so pageStrat++ had no effect and the same page was fetched forever.
        int pageStrat = 2;
        while (true) {

            try {
                URL url = new URL(URL1.replace("{pageStart}", pageStrat + ""));
                HttpURLConnection connection = (HttpURLConnection) url.openConnection();
                // set the request method
                connection.setRequestMethod("GET");
                // 10-second connect and read timeouts
                connection.setConnectTimeout(10000);
                connection.setReadTimeout(10000);
                connection.setRequestProperty("Cookie", "<cookie value>");
                connection.setRequestProperty("User-Agent", "<user-agent value>");
                // connect
                connection.connect();
                // get the response code
                int responseCode = connection.getResponseCode();
                if (responseCode == HttpURLConnection.HTTP_OK) {
                    // read the whole list page; try-with-resources closes the reader and the stream
                    StringBuilder returnStr = new StringBuilder();
                    try (BufferedReader reader = new BufferedReader(
                            new InputStreamReader(connection.getInputStream(), "UTF-8"))) {
                        String line;
                        while ((line = reader.readLine()) != null) {
                            returnStr.append(line).append("\r\n");
                        }
                    }
                    //System.out.println(returnStr);
                    connection.disconnect();

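                    // The detail-link regex is empty here. It needs at least two capture
                    // groups, with group 2 holding the detail-page URL.
                    Pattern p = Pattern.compile("");
                    Matcher m = p.matcher(returnStr);
                    while (m.find()) {
                        //System.out.println("Detail link: " + m.group(2));
                        // pause 5 to 14 seconds before each detail-page request
                        Random rand = new Random();
                        int a = rand.nextInt(10) + 5;
                        Thread.sleep(a * 1000);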

                        try {
                            String tempUrlStr = m.group(2);
                            System.out.println("Current link: " + tempUrlStr);
                            URL tempUrl = new URL(tempUrlStr);
                            HttpURLConnection tempConnection = (HttpURLConnection) tempUrl.openConnection();
                            // set the request method
                            tempConnection.setRequestMethod("GET");
                            // 10-second connect and read timeouts
                            tempConnection.setConnectTimeout(10000);
                            tempConnection.setReadTimeout(10000);
                            tempConnection.setRequestProperty("Cookie", "<cookie value>");
                            tempConnection.setRequestProperty("User-Agent", "<user-agent value>");
                            // connect
                            tempConnection.connect();
                            // get the response code
                            int tempResponseCode = tempConnection.getResponseCode();
                            System.out.println(tempResponseCode);
                            if (tempResponseCode == HttpURLConnection.HTTP_OK) {
                                // get the response stream
                                InputStream tempInputStream = tempConnection.getInputStream();
                                // read the detail page into one string
                                BufferedReader tempReader = new BufferedReader(new InputStreamReader(tempInputStream, "UTF-8"));
                                StringBuilder tempReturnStr = new StringBuilder();
                                String tempLine;
                                while ((tempLine = tempReader.readLine()) != null) {
                                    tempReturnStr.append(tempLine).append("\r\n");
                                }

                                Pattern p2 = Pattern.compile("房屋编码:([^\\\"]*),发布时间:([^\\\"]*)\r\n");
                                Matcher m2 = p2.matcher(tempReturnStr);
                                Pattern p3 = Pattern.compile("面积:\r\n" + " ([^\"]*)");
                                Matcher m3 = p3.matcher(tempReturnStr);
                                Pattern p4 = Pattern.compile("朝向:\r\n" + " ([^\"]*)");
                                Matcher m4 = p4.matcher(tempReturnStr);
                                Pattern p5 = Pattern.compile("楼层:\r\n" + " ([^\"]*)");
                                Matcher m5 = p5.matcher(tempReturnStr);
                                Pattern p6 = Pattern.compile("小区:\r\n" + " ([^\"]*)");
                                Matcher m6 = p6.matcher(tempReturnStr);
                                Pattern p7 = Pattern.compile("([^\"]*)元/月");
                                Matcher m7 = p7.matcher(tempReturnStr);

                                while (m2.find() && m3.find() && m4.find() && m5.find() && m6.find() && m7.find()) {
                                    String str = "insert into 安居客(housenumber,time,area,orientation,floor,price,address) values('"
                                            + m2.group(1) + "','" + m2.group(2) + "','" + m3.group(1) + "','" + m4.group(1)
                                            + "','" + m5.group(1) + "','" + m7.group(1) + "','" + m6.group(2) + "')";
                                    stat.executeUpdate(str);
                                }
                                tempReader.close();
                                tempInputStream.close();
                                tempConnection.disconnect();
                            }
                        } catch (Exception e) {
                            e.printStackTrace();
                        }
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
            pageStrat++;
        }
    }
}
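Step 4 calls for a random pause of 10 to 20 seconds. Because `Random.nextInt(bound)` returns a value from 0 to bound - 1, such ranges are easy to get off by one; the complete code above, for instance, adds 5 to `nextInt(10)`, which gives 5 to 14 seconds. A small helper that takes an inclusive range avoids this; `PoliteDelay` and its methods are hypothetical names, not part of the original code.

```java
import java.util.Random;

final class PoliteDelay {
    /**
     * Picks a pause between minSeconds and maxSeconds inclusive, in milliseconds.
     * nextInt(bound) returns 0..bound-1, so the bound is (max - min + 1).
     */
    static long randomPauseMillis(Random rand, int minSeconds, int maxSeconds) {
        int seconds = minSeconds + rand.nextInt(maxSeconds - minSeconds + 1);
        return seconds * 1000L;
    }

    /** Sleeps for a random 10 to 20 second interval, the range described in Step 4. */
    static void pause(Random rand) throws InterruptedException {
        Thread.sleep(randomPauseMillis(rand, 10, 20));
    }
}
```

Reusing one `Random` instance, instead of creating a new one before every request as the complete code does, is the more usual pattern.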