Flink 1.13.x + Iceberg Environment Setup

1. Install Hadoop

tar -zxvf hadoop-2.10.1.tar.gz

Configure the JDK and Hadoop environment variables:

vi /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.232.b09-0.el7_7.x86_64

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/home/hadoop-2.10.1

export HADOOP_CONF_DIR=/home/hadoop-2.10.1/etc/hadoop/

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export PATH=$PATH:$HADOOP_CONF_DIR

Apply the environment variables:

source /etc/profile
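A quick way to catch typos in /etc/profile is to check that each variable is set and points at an existing directory. The helper below is a minimal bash sketch. The function name check_env is hypothetical and not part of Hadoop.

```shell
#!/usr/bin/env bash
# check_env VAR...: hypothetical helper that reports variables which are unset
# or do not point at an existing directory; returns 1 if any check fails.
check_env() {
  local var val rc=0
  for var in "$@"; do
    val="${!var:-}"            # bash indirect expansion: value of the variable named $var
    if [ -z "$val" ]; then
      echo "$var is not set"
      rc=1
    elif [ ! -d "$val" ]; then
      echo "$var=$val is not a directory"
      rc=1
    fi
  done
  return "$rc"
}

# Usage after `source /etc/profile`:
#   check_env JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR
```

The same call works later for HIVE_HOME and FLINK_HOME. A misspelled export name leaves the intended variable unset, and the helper reports exactly that.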

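Edit the Hadoop configuration in $HADOOP_CONF_DIR. By convention fs.defaultFS goes in core-site.xml and dfs.replication in hdfs-site.xml:

<configuration>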

<property>

<name>fs.defaultFS</name>

<value>hdfs://0.0.0.0:9000</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>
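The settings above can also be written from the shell. This sketch follows the usual Hadoop convention of keeping fs.defaultFS in core-site.xml and dfs.replication in hdfs-site.xml. The function name write_hdfs_conf is hypothetical. The function overwrites both files, so back up any edits you have already made.

```shell
#!/usr/bin/env bash
# write_hdfs_conf DIR: hypothetical helper that writes a minimal single-node
# core-site.xml and hdfs-site.xml into DIR (e.g. "$HADOOP_CONF_DIR").
# Existing files are overwritten.
write_hdfs_conf() {
  local dir="$1"
  [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }

  # NameNode RPC address used as the default filesystem
  cat > "$dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://0.0.0.0:9000</value>
  </property>
</configuration>
EOF

  # One replica per block, since there is only one DataNode
  cat > "$dir/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}

# Usage: write_hdfs_conf "$HADOOP_CONF_DIR"
```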

Format HDFS first. Do this only on the first installation, because formatting erases the existing NameNode metadata:

bin/hdfs namenode -format

Start the NameNode and DataNode:

sbin/hadoop-daemon.sh start namenode

sbin/hadoop-daemon.sh start datanode
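To confirm that both daemons started, use the JDK's jps tool, which lists running JVMs by main class name (NameNode, DataNode). The helper below is a hypothetical sketch. It takes jps output and prints any expected daemon that is missing. If a daemon is absent, check its log under $HADOOP_HOME/logs.

```shell
#!/usr/bin/env bash
# missing_daemons JPS_OUTPUT NAME...: hypothetical helper that prints each NAME
# not found as a whole word in JPS_OUTPUT (lines of the form "<pid> <MainClass>").
missing_daemons() {
  local jps_output="$1" name
  shift
  for name in "$@"; do
    # -w keeps "NameNode" from matching "SecondaryNameNode"
    grep -qw -- "$name" <<<"$jps_output" || echo "$name"
  done
}

# Usage: missing_daemons "$(jps)" NameNode DataNode   # prints nothing if both are running
```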

2. Install Hive

Extract the Hive archive:

tar -xzvf apache-hive-3.1.2-bin.tar.gz

Configure the environment variables:

vi /etc/profile

export HIVE_HOME=/home/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

Apply the environment variables:

source /etc/profile

Edit the Hive configuration

Copy conf/hive-default.xml.template and name the copy hive-site.xml. Run the command below inside the conf directory:

cp hive-default.xml.template hive-site.xml

Edit hive-site.xml. Change the following properties, and add any that are missing:

<property>

<name>system:java.io.tmpdir</name>

<value>/tmp/hive/java</value>

</property>

<property>

<name>system:user.name</name>

<value>${user.name}</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://192.168.1.2:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>192.168.1.2</value>

<description>Bind host on which to run the HiveServer2 Thrift service.</description>

</property>

<!-- Metastore database settings (stores table metadata) -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive_user</value>

<description>Username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>org.postgresql.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:postgresql://192.168.1.2:4502/hive_db</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>
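hive-site.xml starts as a copy of the full template, so several of these properties already exist there. For example, hive.metastore.uris is already present with an empty value, which makes it easy to end up with a property defined twice. The hypothetical awk-based helper below prints every value defined for a property name, one per line, so duplicates stand out. It is a line-oriented sketch that assumes each name and value tag sits on a single line, as in the layout above. It is not a real XML parser.

```shell
#!/usr/bin/env bash
# get_prop FILE NAME: hypothetical helper that prints every <value> defined for
# the property NAME in a Hadoop/Hive-style XML file, one per line, in file order.
# An empty <value/> prints an empty line. Line-oriented: not a real XML parser.
get_prop() {
  awk -v want="<name>$2</name>" '
    index($0, want) { inprop = 1 }                       # entered the wanted property
    inprop && /<value\/>/ { print ""; inprop = 0; next } # self-closing empty value
    inprop && match($0, /<value>[^<]*<\/value>/) {
      # strip the 7-char "<value>" and 8-char "</value>" around the text
      print substr($0, RSTART + 7, RLENGTH - 15)
      inprop = 0
      next
    }
    /<\/property>/ { inprop = 0 }                        # left the property block
  ' "$1"
}

# Usage (from $HIVE_HOME): get_prop conf/hive-site.xml hive.metastore.uris
```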

Upload the PostgreSQL JDBC driver postgresql-9.4.1208.jar to Hive's lib directory.

Initialize the metastore schema:

bin/schematool -dbType postgres -initSchema

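Start the metastore service and HiveServer2 (the Thrift service). Start the metastore first, because HiveServer2 connects to it through hive.metastore.uris:

setsid bin/hive --service metastore

setsid bin/hive --service hiveserver2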
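When these services are started from a script, launch HiveServer2 only after the metastore accepts connections on port 9083 (taken from hive.metastore.uris). This bash sketch polls a TCP port using bash's /dev/tcp redirection and the coreutils timeout command. The function name wait_for_port is hypothetical.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [SECONDS]: hypothetical helper that retries a TCP
# connection once per second; returns 0 as soon as HOST:PORT accepts a
# connection, or 1 after SECONDS attempts (default 60).
wait_for_port() {
  local host="$1" port="$2" secs="${3:-60}" i
  for ((i = 0; i < secs; i++)); do
    # bash opens a TCP socket for /dev/tcp/HOST/PORT; timeout caps hung connects at 2s
    if timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Usage in a start-up script (run from $HIVE_HOME):
#   nohup bin/hive --service metastore > metastore.log 2>&1 &
#   wait_for_port 192.168.1.2 9083 120 || exit 1
#   nohup bin/hive --service hiveserver2 > hiveserver2.log 2>&1 &
```

The usage lines use nohup with & rather than setsid. In a non-interactive script, setsid does not fork, so it runs the service in the foreground and blocks the script.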

3. Install Flink

Extract the archive:

tar -zxvf flink-1.13.6-bin-scala_2.12.tgz

Configure the environment variables:

vi /etc/profile

export FLINK_HOME=/home/flink-1.13.6

export PATH=$FLINK_HOME/bin:$PATH

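Download flink-sql-connector-hive-3.1.2_2.12-1.13.6.jar and put it in Flink's lib directory.

Note: some posts online use iceberg-flink-runtime-0.12.1.jar. From Flink 1.13 on, it is enough to declare the Iceberg runtime (iceberg-flink-runtime-1.13) in pom.xml.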

Start the cluster:

setsid /home/flink-1.13.6/bin/start-cluster.sh
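Once the cluster is up, you can try the Iceberg catalog from the SQL client. The sketch below writes an init file for bin/sql-client.sh -i (supported since Flink 1.13). The file registers an Iceberg catalog backed by the Hive metastore, using the catalog properties described in the Iceberg Flink documentation. The catalog name hive_catalog, the function name, and the warehouse path are hypothetical. The SQL client also needs the iceberg-flink-runtime jar on its classpath, either in flink/lib or passed with -j, because the pom.xml dependency only applies to the packaged job.

```shell
#!/usr/bin/env bash
# write_iceberg_init FILE [METASTORE_URI] [WAREHOUSE]: hypothetical helper that
# writes a Flink SQL init script registering an Iceberg catalog on the Hive metastore.
write_iceberg_init() {
  local out="$1"
  local uri="${2:-thrift://192.168.1.2:9083}"                         # from hive.metastore.uris
  local warehouse="${3:-hdfs://192.168.1.2:9000/user/hive/warehouse}" # hypothetical path
  cat > "$out" <<EOF
CREATE CATALOG hive_catalog WITH (
  'type'='iceberg',
  'catalog-type'='hive',
  'uri'='$uri',
  'warehouse'='$warehouse'
);
USE CATALOG hive_catalog;
EOF
}

# Usage (from the Flink directory; the jar path is an assumption):
#   write_iceberg_init /tmp/iceberg-init.sql
#   bin/sql-client.sh -i /tmp/iceberg-init.sql -j /path/to/iceberg-flink-runtime-1.13-0.13.1.jar
```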

4. Project pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.xxx.xxx</groupId>

<artifactId>flink-job</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>flink-job</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<app.mainclass>com.xxx.xxx.flink.threat.model.xdr.DdosAttackToAmf</app.mainclass>

<flink.version>1.13.6</flink.version>

<scala.version>2.12</scala.version>

<hadoop.version>2.10.1</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>3.8.1</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.springframework/spring-core -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>5.3.9</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-core -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-clients -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-kafka -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-streaming-java -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-java -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-connector-elasticsearch7 -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-elasticsearch7_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.79</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-simple</artifactId>

<version>1.7.25</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>2.17.0</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-1.2-api</artifactId>

<version>2.17.0</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>2.17.0</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>2.17.0</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-web</artifactId>

<version>2.17.0</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.11</version>

</dependency>

<dependency>

<groupId>org.apache.iceberg</groupId>

<artifactId>iceberg-flink-runtime-1.13</artifactId>

<version>0.13.1</version>

</dependency>

<!-- For reading YAML config files -->

<dependency>

<groupId>org.yaml</groupId>

<artifactId>snakeyaml</artifactId>

<version>1.23</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-scala-bridge_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner-blink_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-hive_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-sql-connector-hive-3.1.2_2.12</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-hadoop-compatibility_${scala.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>commons-cli</groupId>

<artifactId>commons-cli</artifactId>

<version>1.4</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>3.1.2</version>

</dependency>

<dependency>

<groupId>com.alibaba.ververica</groupId>

<artifactId>flink-connector-postgres-cdc</artifactId>

<version>1.4.0</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.2</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-dependency-plugin</artifactId>

<executions>

<execution>

<id>copy-dependencies</id>

<phase>test</phase>

<goals>

<goal>copy-dependencies</goal>

</goals>

<configuration>

<outputDirectory>

target/classes/lib

</outputDirectory>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<configuration>

<archive>

<manifest>

<addClasspath>true</addClasspath>

<mainClass>${app.mainclass}</mainClass>

<classpathPrefix>lib/</classpathPrefix>

</manifest>

<manifestEntries>

<Class-Path>.</Class-Path>

</manifestEntries>

</archive>

</configuration>

</plugin>

</plugins>

</build>

</project>

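Problems encountered during use:

Copy the jars under Hadoop's share/hadoop into flink/lib.

Running bin/sql-client.sh fails with a NoSuchMethodError from org.apache.commons.cli. Download commons-cli-1.4.jar and use it to replace commons-cli-1.2.jar in flink/lib. The older version comes in with the Hadoop jars copied there.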

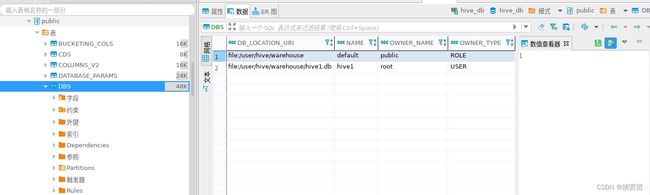

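Create a database with Hive. The command below opens the Hive CLI, where the database hive1 is then created. This appears to add a logical database on top of the hive_db database in PostgreSQL: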

hive

Then create a database:

create database hive1;

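Once it is created, run show databases; to confirm that it is listed.

Checking the PostgreSQL database afterwards shows the related metadata stored in the DBS table.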
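You can run the same check from the command line with psql. Hive's PostgreSQL schema uses quoted upper-case identifiers, so table and column names must be double-quoted. The function name and the PSQL override (for swapping in a different psql binary) are hypothetical. The host, port, user, and database come from the hive-site.xml settings above.

```shell
#!/usr/bin/env bash
# list_hive_dbs: hypothetical helper that prints each Hive database name and its
# location from the metastore's "DBS" table; set PSQL to use another psql binary.
list_hive_dbs() {
  # -A: unaligned output, -t: rows only (no header/footer)
  "${PSQL:-psql}" -h 192.168.1.2 -p 4502 -U hive_user -d hive_db -A -t \
    -c 'SELECT "NAME", "DB_LOCATION_URI" FROM "DBS" ORDER BY "DB_ID";'
}

# Usage: list_hive_dbs   # after create database hive1; a row starting with hive1| should appear
```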

ClassNotFoundException: org.apache.hadoop.conf.Configuration

Fix: copy all jars from the subdirectories of Hadoop's share/hadoop/ into flink/lib.
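Instead of copying Hadoop's jars into flink/lib, the Flink documentation recommends exporting HADOOP_CLASSPATH from the output of hadoop classpath before starting the cluster or the SQL client. A minimal sketch follows. The function name and its optional path argument are hypothetical.

```shell
#!/usr/bin/env bash
# set_hadoop_classpath [HADOOP_BIN]: hypothetical helper that exports
# HADOOP_CLASSPATH from `hadoop classpath`, which Flink's start scripts read.
set_hadoop_classpath() {
  local hadoop_bin="${1:-${HADOOP_HOME:-/home/hadoop-2.10.1}/bin/hadoop}"
  if [ ! -x "$hadoop_bin" ]; then
    echo "hadoop binary not found: $hadoop_bin" >&2
    return 1
  fi
  # `hadoop classpath` prints Hadoop's own classpath as a colon-separated list
  HADOOP_CLASSPATH="$("$hadoop_bin" classpath)" || return 1
  export HADOOP_CLASSPATH
}

# Usage: set_hadoop_classpath && bin/start-cluster.sh
```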

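The Hive metastore can run embedded or as a remote service. With hive.metastore.uris set as above, and the metadata stored in a MySQL/PostgreSQL database, clients connect to a separate metastore service, which must be running. Otherwise they fail with:

Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Start the metastore service:

setsid bin/hive --service metastore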