基于BERT模型实现文本相似度计算

配置所需的包

!pip install transformers==2.10.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

!pip install HanziConv -i https://pypi.tuna.tsinghua.edu.cn/simple数据预处理

# -*- coding: utf-8 -*-

from torch.utils.data import Dataset

from hanziconv import HanziConv

import pandas as pd

import torch

class DataPrecessForSentence(Dataset):

# 处理文本

def __init__(self, bert_tokenizer, LCQMC_file,

pred=None, max_char_len = 103):

"""

bert_tokenizer :分词器;LCQMC_file :语料文件

"""

self.bert_tokenizer = bert_tokenizer

self.max_seq_len = max_char_len

self.seqs, self.seq_masks, self.seq_segments, \

self.labels = self.get_input(LCQMC_file, pred)

def __len__(self):

return len(self.labels)

def __getitem__(self, idx):

return self.seqs[idx], self.seq_masks[idx], \

self.seq_segments[idx], self.labels[idx]

# 获取文本与标签

def get_input(self, file, pred=None):

# 预测

if pred:

sentences_1 = []

sentences_2 = []

for i,j in enumerate(file):

sentences_1.append(j[0])

sentences_2.append(j[1])

sentences_1 = map(HanziConv.toSimplified, sentences_1)

sentences_2 = map(HanziConv.toSimplified, sentences_2)

labels = [0] * len(file)

else:

ftype = True if file.endswith('.tsv') else False

limit = 50000

df = pd.read_csv(file, sep='\t') if ftype else pd.read_json(file,lines=True)

sentences_1 = map(HanziConv.toSimplified,

df['text_a'][:limit].values if ftype else df["sentence1"].iloc[:limit])

sentences_2 = map(HanziConv.toSimplified,

df['text_b'][:limit].values if ftype else df["sentence2"].iloc[:limit])

labels = df['label'].iloc[:limit].values

# 切词

tokens_seq_1 = list(map(self.bert_tokenizer.tokenize, sentences_1))

tokens_seq_2 = list(map(self.bert_tokenizer.tokenize, sentences_2))

# 获取定长序列及其mask

result = list(map(self.trunate_and_pad, tokens_seq_1, tokens_seq_2))

seqs = [i[0] for i in result]

seq_masks = [i[1] for i in result]

seq_segments = [i[2] for i in result]

return torch.Tensor(seqs).type(torch.long), \

torch.Tensor(seq_masks).type(torch.long), \

torch.Tensor(seq_segments).type(torch.long),\

torch.Tensor(labels).type(torch.long)

def trunate_and_pad(self, tokens_seq_1, tokens_seq_2):

# 对超长序列进行截断

if len(tokens_seq_1) > ((self.max_seq_len - 3)//2):

tokens_seq_1 = tokens_seq_1[0:(self.max_seq_len - 3)//2]

if len(tokens_seq_2) > ((self.max_seq_len - 3)//2):

tokens_seq_2 = tokens_seq_2[0:(self.max_seq_len - 3)//2]

# 分别在首尾拼接特殊符号

seq = ['[CLS]'] + tokens_seq_1 + ['[SEP]'] \

+ tokens_seq_2 + ['[SEP]']

seq_segment = [0] * (len(tokens_seq_1) + 2)\

+ [1] * (len(tokens_seq_2) + 1)

# ID化

seq = self.bert_tokenizer.convert_tokens_to_ids(seq)

# 根据max_seq_len与seq的长度产生填充序列

padding = [0] * (self.max_seq_len - len(seq))

# 创建seq_mask

seq_mask = [1] * len(seq) + padding

# 创建seq_segment

seq_segment = seq_segment + padding

# 对seq拼接填充序列

seq += padding

assert len(seq) == self.max_seq_len

assert len(seq_mask) == self.max_seq_len

assert len(seq_segment) == self.max_seq_len

return seq, seq_mask, seq_segment构建BERT模型

# -*- coding: utf-8 -*-

import torch

from torch import nn

from transformers import BertForSequenceClassification, BertConfig

class BertModel(nn.Module):

def __init__(self):

super(BertModel, self).__init__()

self.bert = BertForSequenceClassification.from_pretrained("/kaggle/input/textmatch/pretrained_model",

num_labels = 2)

# /bert_pretrain/

self.device = torch.device("cuda")

for param in self.bert.parameters():

param.requires_grad = True # 每个参数都要 求梯度

def forward(self, batch_seqs, batch_seq_masks, batch_seq_segments, labels):

loss, logits = self.bert(input_ids = batch_seqs, attention_mask = batch_seq_masks,

token_type_ids=batch_seq_segments, labels = labels,return_dict=False)

probabilities = nn.functional.softmax(logits, dim=-1)

logits = torch.Tensor(logits)

probabilities = nn.functional.softmax(logits, dim=-1)

return loss, logits, probabilities

class BertModelTest(nn.Module):

def __init__(self, model_path, num_labels=2): # 设置正确的 num_labels,默认为3

super(BertModelTest, self).__init__()

config = BertConfig.from_pretrained(model_path, num_labels=num_labels)

self.bert = BertForSequenceClassification(config)

self.device = torch.device("cuda")

def forward(self, batch_seqs, batch_seq_masks,

batch_seq_segments, labels):

loss, logits = self.bert(input_ids=batch_seqs,

attention_mask=batch_seq_masks,

token_type_ids=batch_seq_segments,

labels=labels, return_dict=False)

probabilities = nn.functional.softmax(logits, dim=-1)

return loss, logits, probabilities模型训练

# -*- coding: utf-8 -*-

"""

Created on Thu Mar 12 02:08:46 2020

@author: zhaog

"""

import torch

import torch.nn as nn

import time

from tqdm import tqdm

from sklearn.metrics import roc_auc_score

def correct_predictions(output_probabilities, targets):

_, out_classes = output_probabilities.max(dim=1)

correct = (out_classes == targets).sum()

return correct.item()

def validate(model, dataloader):

# 开启验证模式

model.eval()

device = model.device

epoch_start = time.time()

running_loss = 0.0

running_accuracy = 0.0

all_prob = []

all_labels = []

# 评估时梯度不更新

with torch.no_grad():

for (batch_seqs, batch_seq_masks, batch_seq_segments,

batch_labels) in dataloader:

# 将数据放入指定设备

seqs = batch_seqs.to(device)

masks = batch_seq_masks.to(device)

segments = batch_seq_segments.to(device)

labels = batch_labels.to(device)

loss, logits, probabilities = model(seqs, masks,

segments, labels)

running_loss += loss.item()

running_accuracy += correct_predictions(probabilities, labels)

all_prob.extend(probabilities[:,1].cpu().numpy())

all_labels.extend(batch_labels)

epoch_time = time.time() - epoch_start

epoch_loss = running_loss / len(dataloader)

epoch_accuracy = running_accuracy / (len(dataloader.dataset))

return epoch_time, epoch_loss, epoch_accuracy, \

roc_auc_score(all_labels, all_prob)

def predict(model, test_file, dataloader, device):

model.eval()

with torch.no_grad():

result = []

for (batch_seqs, batch_seq_masks, batch_seq_segments,

batch_labels) in dataloader:

seqs, masks, segments, labels = batch_seqs.to(device), \

batch_seq_masks.to(device),\

batch_seq_segments.to(device), \

batch_labels.to(device)

_, _, probabilities = model(seqs, masks, segments, labels)

result.append(probabilities)

text_result = []

for i, j in enumerate(test_file):

text_result.append([j[0], j[1], '相似'

if torch.argmax(result[i][0]) == 1 else '不相似'])

return text_result

def train(model, dataloader, optimizer, max_gradient_norm):

# 开启训练

model.train()

device = model.device

epoch_start = time.time()

batch_time_avg = 0.0

running_loss = 0.0

correct_preds = 0

tqdm_batch_iterator = tqdm(dataloader)

for batch_index, (batch_seqs, batch_seq_masks,

batch_seq_segments, batch_labels) \

in enumerate(tqdm_batch_iterator):

batch_start = time.time()

# 训练数据放入指定设备,GPU&CPU

seqs, masks, segments, labels = batch_seqs.to(device),\

batch_seq_masks.to(device), \

batch_seq_segments.to(device),\

batch_labels.to(device)

optimizer.zero_grad()

loss, logits, probabilities = model(seqs, masks, segments, labels)

loss.backward()

nn.utils.clip_grad_norm_(model.parameters(), max_gradient_norm)

optimizer.step()

batch_time_avg += time.time() - batch_start

running_loss += loss.item()

correct_preds += correct_predictions(probabilities, labels)

description = "Avg. batch proc. time: {:.4f}s, loss: {:.4f}"\

.format(batch_time_avg/(batch_index+1),

running_loss/(batch_index+1))

tqdm_batch_iterator.set_description(description)

epoch_time = time.time() - epoch_start

epoch_loss = running_loss / len(dataloader)

epoch_accuracy = correct_preds / len(dataloader.dataset)

return epoch_time, epoch_loss, epoch_accuracy# -*- coding: utf-8 -*-

import os

import torch

from torch.utils.data import DataLoader

#from data import DataPrecessForSentence

#from utils import train,validate

from transformers import BertTokenizer

#from model import BertModel

from transformers.optimization import AdamW

def main(train_file, dev_file, target_dir,

epochs=10,

batch_size=128,

lr=0.00001,

patience=3,

max_grad_norm=10.0,

checkpoint=None):

bert_tokenizer = BertTokenizer.from_pretrained('/kaggle/input/textmatch/token/',

do_lower_case=True)

device = torch.device("cuda")

# 保存模型的路径

if not os.path.exists(target_dir):

os.makedirs(target_dir)

print("\t* 加载训练数据...")

train_data = DataPrecessForSentence(bert_tokenizer, train_file)

train_loader = DataLoader(train_data, shuffle=True, batch_size=batch_size)

print("\t* 加载验证数据...")

dev_data = DataPrecessForSentence(bert_tokenizer, dev_file)

dev_loader = DataLoader(dev_data, shuffle=True, batch_size=batch_size)

print("\t* 构建模型...")

model = BertModel().to(device)

print("begin to train...")

# 待优化的参数

param_optimizer = list(model.named_parameters())

no_decay = ['bias', 'LayerNorm.bias', 'LayerNorm.weight']

optimizer_grouped_parameters = [

{

'params':[p for n, p in param_optimizer if

not any(nd in n for nd in no_decay)],

'weight_decay':0.01

},

{

'params':[p for n, p in param_optimizer if

any(nd in n for nd in no_decay)],

'weight_decay':0.0

}

]

# 优化器

optimizer = AdamW(optimizer_grouped_parameters, lr=lr)

scheduler = torch.optim.lr_scheduler.\

ReduceLROnPlateau(optimizer, mode="max",

factor=0.85, patience=0)

best_score = 0.0

start_epoch = 1

epochs_count = [] # 迭代伦次

train_losses = [] # 训练集loss

valid_losses = [] # 验证集loss

if checkpoint:

checkpoint = torch.load(checkpoint)

start_epoch = checkpoint["epoch"] + 1

best_score = checkpoint["best_score"]

model.load_state_dict(checkpoint["model"])

optimizer.load_state_dict(checkpoint["optimizer"])

epochs_count = checkpoint["epochs_count"]

train_losses = checkpoint["train_losses"]

valid_losses = checkpoint["valid_losses"]

# 计算损失、验证集准确度.

_, valid_loss, valid_accuracy, auc = validate(model, dev_loader)

patience_counter = 0

for epoch in range(start_epoch, epochs + 1):

epochs_count.append(epoch)

epoch_time, epoch_loss, epoch_accuracy = \

train(model, train_loader, optimizer, epoch)

train_losses.append(epoch_loss)

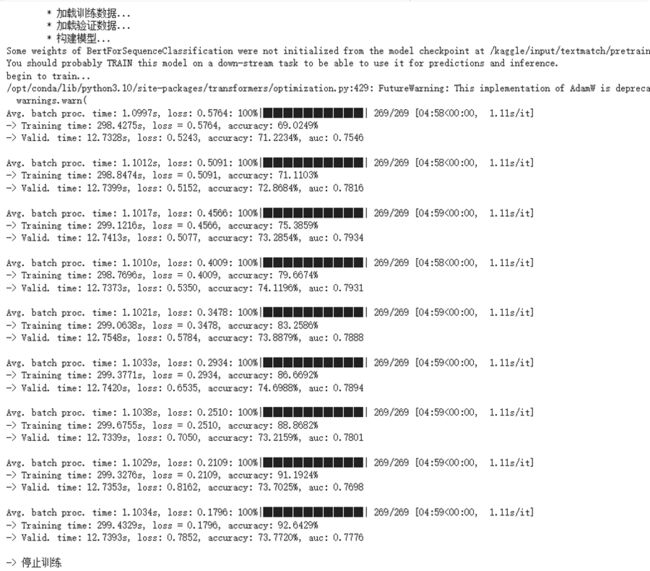

print("-> Training time: {:.4f}s, loss = {:.4f}, accuracy: {:.4f}%"

.format(epoch_time, epoch_loss, (epoch_accuracy*100)))

epoch_time, epoch_loss, epoch_accuracy , \

epoch_auc= validate(model, dev_loader)

valid_losses.append(epoch_loss)

print("-> Valid. time: {:.4f}s, loss: {:.4f}, "

"accuracy: {:.4f}%, auc: {:.4f}\n"

.format(epoch_time, epoch_loss,

(epoch_accuracy*100), epoch_auc))

# 更新学习率

scheduler.step(epoch_accuracy)

if epoch_accuracy < best_score:

patience_counter += 1

else:

best_score = epoch_accuracy

patience_counter = 0

torch.save({"epoch": epoch,

"model": model.state_dict(),

"best_score": best_score,

"epochs_count": epochs_count,

"train_losses": train_losses,

"valid_losses": valid_losses},

os.path.join(target_dir, "best.pth.tar"))

if patience_counter >= patience:

print("-> 停止训练")

break

if __name__ == "__main__":

main(train_file="/kaggle/input/textmatch/dataset/train.json", dev_file="/kaggle/input/textmatch/dataset/dev.json", target_dir= "models") 文本预测

import torch

from sys import platform

from torch.utils.data import DataLoader

from transformers import BertTokenizer

# from model import BertModelTest

# from utils import predict

# from data import DataPrecessForSentence

def main(test_file, pretrained_file, batch_size=1):

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

bert_tokenizer = BertTokenizer.from_pretrained('/kaggle/input/textmatch/pretrained_model/',

do_lower_case=True)

if platform == "linux" or platform == "linux2":

checkpoint = torch.load(pretrained_file)

else:

checkpoint = torch.load(pretrained_file, map_location=device)

test_data = DataPrecessForSentence(bert_tokenizer,

test_file, pred=True)

test_loader = DataLoader(test_data, shuffle=False,

batch_size=batch_size)

model = BertModelTest('/kaggle/input/textmatch/pretrained_model/').to(device)

model.load_state_dict(checkpoint['model'])

result = predict(model, test_file, test_loader, device)

return result

if __name__ == '__main__':

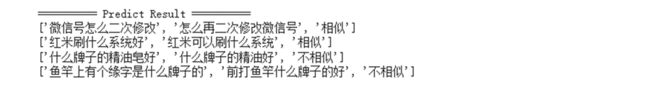

text = [['微信号怎么二次修改', '怎么再二次修改微信号'],

['红米刷什么系统好', '红米可以刷什么系统'],

['什么牌子的精油皂好', '什么牌子的精油好'],

['鱼竿上有个缘字是什么牌子的','前打鱼竿什么牌子的好']]

result = main(text, '/kaggle/input/textmatch/models/best.pth.tar')

print(10 * "=", "Predict Result", 10 * "=")

# 在每行中打印每个结果

for item in result:

print(item)操作异常问题与解决方案

1、transformers版本冲突

解决办法,下载项目指定版本:

!pip install transformers==2.10.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

2、文件读取错误,出现无法读取数据集的情况

修改data.py文件里面的读取文件操作,将修改读取.json文件

else:

ftype = True if file.endswith('.tsv') else False

limit = 50000

df = pd.read_csv(file, sep='\t') if ftype else pd.read_json(file,lines=True)

sentences_1 = map(HanziConv.toSimplified,

df['text_a'][:limit].values if ftype else df["sentence1"].iloc[:limit])

sentences_2 = map(HanziConv.toSimplified,

df['text_b'][:limit].values if ftype else df["sentence2"].iloc[:limit])

labels = df['label'].iloc[:limit].values

3、RuntimeError: Error(s) in loading state_dict for BertModelTest:

size mismatch for bert.classifier.weight: copying a param with shape torch.Size([2, 768]) from checkpoint, the shape in current model is torch.Size([3, 768]).

size mismatch for bert.classifier.bias: copying a param with shape torch.Size([2]) from checkpoint, the shape in current model is torch.Size([3]).

解决办法:需要修改model.py文件下来的BertModelTest类中的num_labels修改为2。

4、出现bert-base-cvhinese文件下载不下来的情况

解决办法:将bert-base-cvhinese文件下的config.json、tokenizer.json、tokenizer_config.json、vocab.txt文件分别下载保存重新命名文件夹token中,然后将bert-base-cvhinese修改为token文件夹的路径。

总结

在我们的实验中,使用主流的预训练模型BERT,我们成功地实现了文本相似度计算任务。该任务的核心目标是通过BERT模型对输入的两段文本进行处理,并判断它们之间是否具有相似性。BERT模型的双向编码器架构使其能够全面理解文本中的语义关系,而不仅仅是单向的传统模型。

通过BERT进行文本相似度计算,我们能够在处理复杂的语境和多义词时取得良好的性能。BERT通过训练过程中的遮蔽语言模型和下一句预测等任务,学习到了丰富的语义表示,这使得它在文本相似度任务中表现出色。在我们的实验结果中,BERT成功捕捉到了输入文本之间的语义信息,有效地判断了它们是否具有相似性。这为解决诸如文本匹配、重述检测等任务奠定了坚实的基础。

总体而言,BERT模型在文本相似度计算任务中的应用展现了其在自然语言处理领域的卓越性能。我们的实验结果为使用BERT解决其他文本相关任务提供了有力的支持,同时也为研究和应用文本相似度计算提供了有益的经验。