(14) ATP Application Test Platform: Installing the ATP Platform's Dependent Services in One Step with docker-compose

Preface

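I have already published quite a few posts about the ATP application service test platform. Anyone who downloads the project will naturally want to get it running first and see whether all my flashy features actually work. As the dependencies have grown, however, so has the number of services the project needs. Testing MySQL master-slave read/write splitting requires a MySQL master and a slave server. Testing Redis sentinel mode and distributed locks requires a Redis cluster. Testing message distribution through the Kafka message middleware requires both a ZooKeeper cluster and a Kafka cluster. With all these extra services, setting up the test environment has become complicated. To remove this pain point, I put together a setup script covering all of ATP's services, so that docker-compose can bring up every dependency of the ATP test platform in one step. The only prerequisite is a working Docker environment with docker-compose available. Let's get started.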

Main Content

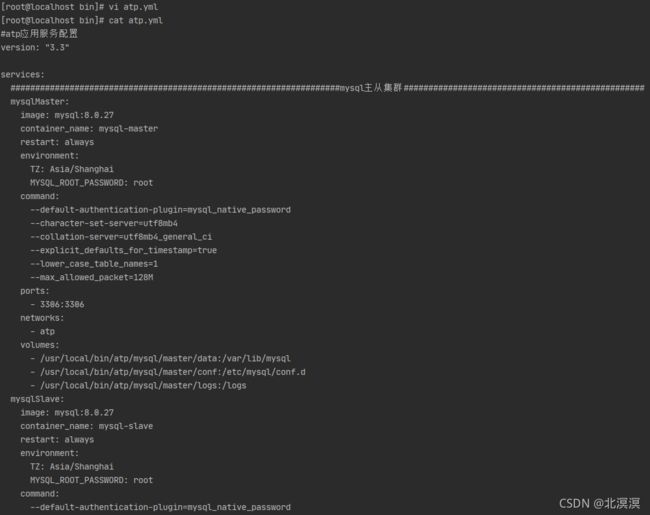

- Create the service startup file atp.yml

#atp应用服务配置

version: "3.3"

services:

###################################################################mysql主从集群#################################################

mysqlMaster:

image: mysql:8.0.27

container_name: mysql-master

restart: always

environment:

TZ: Asia/Shanghai

MYSQL_ROOT_PASSWORD: root

command:

--default-authentication-plugin=mysql_native_password

--character-set-server=utf8mb4

--collation-server=utf8mb4_general_ci

--explicit_defaults_for_timestamp=true

--lower_case_table_names=1

--max_allowed_packet=128M

ports:

- 3306:3306

networks:

- atp

volumes:

- /usr/local/bin/atp/mysql/master/data:/var/lib/mysql

- /usr/local/bin/atp/mysql/master/conf:/etc/mysql/conf.d

- /usr/local/bin/atp/mysql/master/logs:/logs

mysqlSlave:

image: mysql:8.0.27

container_name: mysql-slave

restart: always

environment:

TZ: Asia/Shanghai

MYSQL_ROOT_PASSWORD: root

command:

--default-authentication-plugin=mysql_native_password

--character-set-server=utf8mb4

--collation-server=utf8mb4_general_ci

--explicit_defaults_for_timestamp=true

--lower_case_table_names=1

--max_allowed_packet=128M

ports:

- 3307:3306

networks:

- atp

volumes:

- /usr/local/bin/atp/mysql/slave/data:/var/lib/mysql

- /usr/local/bin/atp/mysql/slave/conf:/etc/mysql/conf.d

- /usr/local/bin/atp/mysql/slave/logs:/logs

adminer:

image: adminer

container_name: mysql-adminer

restart: always

ports:

- 3308:8080

networks:

- atp

depends_on:

- mysqlMaster

- mysqlSlave

#########################################################redis哨兵模式集群######################################################

redisMaster:

image: redis:alpine3.14

container_name: redis-master

restart: always

command: redis-server --port 6379 --requirepass root --appendonly yes --masterauth root --replica-announce-ip 192.168.56.10 --replica-announce-port 6379

ports:

- 6379:6379

networks:

- atp

depends_on:

- adminer

redisSlave1:

image: redis:alpine3.14

container_name: redis-slave-1

restart: always

command: redis-server --slaveof 192.168.56.10 6379 --port 6379 --requirepass root --masterauth root --appendonly yes --replica-announce-ip 192.168.56.10 --replica-announce-port 6380

ports:

- 6380:6379

networks:

- atp

depends_on:

- adminer

redisSlave2:

image: redis:alpine3.14

container_name: redis-slave-2

restart: always

command: redis-server --slaveof 192.168.56.10 6379 --port 6379 --requirepass root --masterauth root --appendonly yes --replica-announce-ip 192.168.56.10 --replica-announce-port 6381

ports:

- 6381:6379

networks:

- atp

depends_on:

- adminer

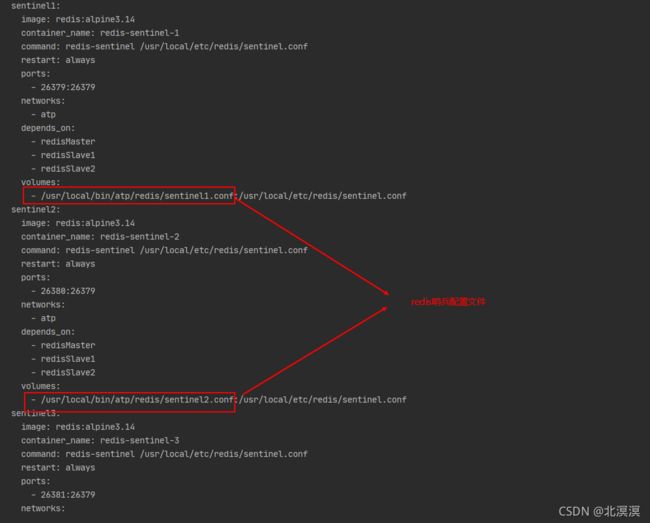

sentinel1:

image: redis:alpine3.14

container_name: redis-sentinel-1

command: redis-sentinel /usr/local/etc/redis/sentinel.conf

restart: always

ports:

- 26379:26379

networks:

- atp

depends_on:

- redisMaster

- redisSlave1

- redisSlave2

volumes:

- /usr/local/bin/atp/redis/sentinel1.conf:/usr/local/etc/redis/sentinel.conf

sentinel2:

image: redis:alpine3.14

container_name: redis-sentinel-2

command: redis-sentinel /usr/local/etc/redis/sentinel.conf

restart: always

ports:

- 26380:26379

networks:

- atp

depends_on:

- redisMaster

- redisSlave1

- redisSlave2

volumes:

- /usr/local/bin/atp/redis/sentinel2.conf:/usr/local/etc/redis/sentinel.conf

sentinel3:

image: redis:alpine3.14

container_name: redis-sentinel-3

command: redis-sentinel /usr/local/etc/redis/sentinel.conf

restart: always

ports:

- 26381:26379

networks:

- atp

depends_on:

- redisMaster

- redisSlave1

- redisSlave2

volumes:

- /usr/local/bin/atp/redis/sentinel3.conf:/usr/local/etc/redis/sentinel.conf

##################################################################zookeeper集群###################################################################

zk1:

image: zookeeper:3.7.0

restart: always

container_name: zk1

hostname: zk1

ports:

- 2181:2181

networks:

- atp

depends_on:

- sentinel1

- sentinel2

- sentinel3

volumes:

- "/usr/local/bin/atp/zk/zk1/data:/data"

- "/usr/local/bin/atp/zk/zk1/logs:/datalog"

environment:

ZOO_MY_ID: 1

ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=zk3:2888:3888;2181

zk2:

image: zookeeper:3.7.0

restart: always

container_name: zk2

hostname: zk2

ports:

- 2182:2181

networks:

- atp

depends_on:

- sentinel1

- sentinel2

- sentinel3

volumes:

- "/usr/local/bin/atp/zk/zk2/data:/data"

- "/usr/local/bin/atp/zk/zk2/logs:/datalog"

environment:

ZOO_MY_ID: 2

ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zk3:2888:3888;2181

zk3:

image: zookeeper:3.7.0

restart: always

container_name: zk3

hostname: zk3

ports:

- 2183:2181

networks:

- atp

depends_on:

- sentinel1

- sentinel2

- sentinel3

volumes:

- "/usr/local/bin/atp/zk/zk3/data:/data"

- "/usr/local/bin/atp/zk/zk3/logs:/datalog"

environment:

ZOO_MY_ID: 3

ZOO_SERVERS: server.1=zk1:2888:3888;2181 server.2=zk2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181

################################################kafka集群#################################################################

kafka1:

image: wurstmeister/kafka:2.13-2.7.0

restart: always

container_name: kafka1

hostname: kafka1

ports:

- "9091:9092"

- "9991:9991"

networks:

- atp

depends_on:

- zk1

- zk2

- zk3

environment:

KAFKA_BROKER_ID: 1

KAFKA_ADVERTISED_HOST_NAME: kafka1

KAFKA_ADVERTISED_PORT: 9091

KAFKA_HOST_NAME: kafka1

KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181

KAFKA_LISTENERS: PLAINTEXT://kafka1:9092

KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.10:9091

JMX_PORT: 9991

KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka1 -Dcom.sun.management.jmxremote.port=9991 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.managementote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

volumes:

- "/usr/local/bin/atp/kafka/kafka1/:/kafka"

kafka2:

image: wurstmeister/kafka:2.13-2.7.0

restart: always

container_name: kafka2

hostname: kafka2

ports:

- "9092:9092"

- "9992:9992"

networks:

- atp

depends_on:

- zk1

- zk2

- zk3

environment:

KAFKA_BROKER_ID: 2

KAFKA_ADVERTISED_HOST_NAME: kafka2

KAFKA_ADVERTISED_PORT: 9092

KAFKA_HOST_NAME: kafka2

KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181

KAFKA_LISTENERS: PLAINTEXT://kafka2:9092

KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.10:9092

JMX_PORT: 9992

KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka2 -Dcom.sun.management.jmxremote.port=9992 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.managementote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

volumes:

- "/usr/local/bin/atp/kafka/kafka2/:/kafka"

kafka3:

image: wurstmeister/kafka:2.13-2.7.0

restart: always

container_name: kafka3

hostname: kafka3

ports:

- "9093:9092"

- "9993:9993"

networks:

- atp

depends_on:

- zk1

- zk2

- zk3

environment:

KAFKA_BROKER_ID: 3

KAFKA_ADVERTISED_HOST_NAME: kafka3

KAFKA_ADVERTISED_PORT: 9093

KAFKA_HOST_NAME: kafka3

KAFKA_ZOOKEEPER_CONNECT: zk1:2181,zk2:2181,zk3:2181

KAFKA_LISTENERS: PLAINTEXT://kafka3:9092

KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.56.10:9093

JMX_PORT: 9993

KAFKA_JMX_OPTS: "-Djava.rmi.server.hostname=kafka3 -Dcom.sun.management.jmxremote.port=9993 -Dcom.sun.management.jmxremote=true -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.managementote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false"

volumes:

- "/usr/local/bin/atp/kafka/kafka3/:/kafka"

efak:

image: ydockerp/efak:2.0.8

restart: always

container_name: efak

hostname: efak

ports:

- "8048:8048"

networks:

- atp

depends_on:

- kafka1

- kafka2

- kafka3

volumes:

- /usr/local/bin/atp/efak/conf/system-config.properties:/opt/kafka-eagle/conf/system-config.properties

networks:

atp:

driver: bridge
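Before the first `up`, it helps to prepare the host paths that the `volumes` entries point to. With the short volume syntax used above, Docker creates a missing host path as an empty directory. That is harmless for the data and log directories, but it breaks the single-file mounts (`sentinel*.conf`, `system-config.properties`). The following is a minimal Python sketch that uses only the standard library. It creates the directory mounts and reports which config files still have to be supplied. The path lists are transcribed from `atp.yml`. The function name `prepare_host_paths` is hypothetical and is not part of the ATP project.

```python
"""Pre-create the host-side bind-mount paths used in atp.yml (sketch).

Assumption: the base directory /usr/local/bin/atp matches the volumes in
atp.yml; pass another base_dir to try it in a scratch directory first.
"""
import os
import sys

# Directory mounts from atp.yml, relative to the base directory.
DIRECTORIES = [
    *[f"mysql/{role}/{sub}" for role in ("master", "slave") for sub in ("data", "conf", "logs")],
    *[f"zk/zk{i}/{sub}" for i in (1, 2, 3) for sub in ("data", "logs")],
    *[f"kafka/kafka{i}" for i in (1, 2, 3)],
]

# Single-file mounts: these must exist as real files before `up`, otherwise
# Docker creates an empty directory at the source path instead.
FILES = [
    *[f"redis/sentinel{i}.conf" for i in (1, 2, 3)],
    "efak/conf/system-config.properties",
]


def prepare_host_paths(base_dir: str = "/usr/local/bin/atp") -> list:
    """Create every directory mount; return the file mounts that are still missing."""
    for rel in DIRECTORIES:
        os.makedirs(os.path.join(base_dir, rel), exist_ok=True)
    missing = []
    for rel in FILES:
        path = os.path.join(base_dir, rel)
        # Create the parent directory so the config file can simply be dropped in.
        os.makedirs(os.path.dirname(path), exist_ok=True)
        if not os.path.isfile(path):
            missing.append(path)
    return missing


if __name__ == "__main__":
    base = sys.argv[1] if len(sys.argv) > 1 else "/usr/local/bin/atp"
    for path in prepare_host_paths(base):
        print(f"missing config file, create it before 'up': {path}")
```

Running it a second time is safe, because `exist_ok=True` leaves existing directories untouched.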

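For the details of the Redis sentinel configuration files and the configuration file mounted into EFAK (the Kafka management tool), please refer to my earlier posts in this series:

- Installing a highly available Redis cluster with docker-compose in a Docker environment (one master, two replicas, three sentinels), 北溟's blog, CSDN

- Building a Kafka cluster and the Kafka management tool EFAK in one step with docker-compose in a Docker environment, 北溟's blog, CSDN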
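The linked posts contain the author's actual sentinel files. As a rough illustration only, the Python sketch below writes three `sentinel.conf` files that line up with `atp.yml`. Each sentinel announces the host IP `192.168.56.10` and its own published port (26379 to 26381), and monitors the master on port 6379 with password `root`. The master name `mymaster`, the quorum of 2 and the timeout values are assumptions that mirror Redis' sample `sentinel.conf`. They are not values taken from the author's files.

```python
"""Generate sentinel1.conf .. sentinel3.conf for the three sentinels (sketch).

Assumptions (not from the original post): the master name "mymaster",
quorum 2, and the sample timeouts below. IP, ports and password match atp.yml.
"""
import os

HOST_IP = "192.168.56.10"   # host IP used by --replica-announce-ip in atp.yml
MASTER_PORT = 6379          # host port published by redis-master
PASSWORD = "root"           # --requirepass / --masterauth in atp.yml
MASTER_NAME = "mymaster"    # hypothetical master name


def sentinel_conf(index: int) -> str:
    """Return the config text for sentinel number `index` (1-based)."""
    announce_port = 26378 + index  # 26379, 26380, 26381 as published in atp.yml
    lines = [
        "port 26379",  # port inside the container
        f"sentinel announce-ip {HOST_IP}",
        f"sentinel announce-port {announce_port}",
        # The master must be declared before any other per-master option.
        f"sentinel monitor {MASTER_NAME} {HOST_IP} {MASTER_PORT} 2",
        f"sentinel auth-pass {MASTER_NAME} {PASSWORD}",
        f"sentinel down-after-milliseconds {MASTER_NAME} 30000",
        f"sentinel parallel-syncs {MASTER_NAME} 1",
        f"sentinel failover-timeout {MASTER_NAME} 180000",
    ]
    return "\n".join(lines) + "\n"


def write_sentinel_confs(redis_dir: str) -> None:
    """Write sentinel1.conf .. sentinel3.conf into redis_dir."""
    os.makedirs(redis_dir, exist_ok=True)
    for i in (1, 2, 3):
        with open(os.path.join(redis_dir, f"sentinel{i}.conf"), "w") as f:
            f.write(sentinel_conf(i))


if __name__ == "__main__":
    write_sentinel_confs("/usr/local/bin/atp/redis")
```

Sentinel rewrites its own config file at runtime, so the generated files should stay writable by the container.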

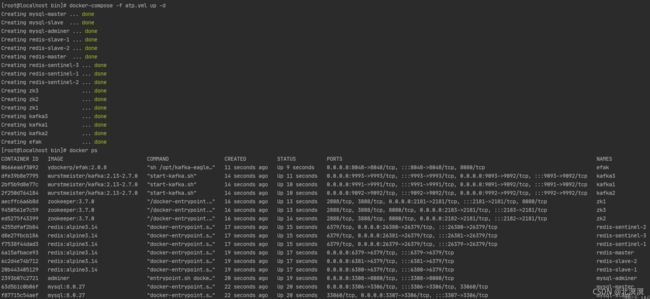

- Start the ATP application services

Command: `docker-compose -f atp.yml up -d` (`-d` starts the containers in the background)
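`up -d` returns as soon as the containers are created, but MySQL, ZooKeeper and Kafka may need longer before they start listening. Below is a minimal Python sketch that polls every host port published in `atp.yml` until it accepts TCP connections. It assumes the Docker host is reachable at `192.168.56.10`, the IP already used in the Redis and Kafka settings. An open port only shows that something is listening, not that the service has finished initialising.

```python
"""Wait until the host ports published by atp.yml accept TCP connections (sketch).

Assumption: the Docker host is 192.168.56.10, as in atp.yml; change HOST if not.
"""
import socket
import time

HOST = "192.168.56.10"
PORTS = {
    "mysql-master": 3306, "mysql-slave": 3307, "mysql-adminer": 3308,
    "redis-master": 6379, "redis-slave-1": 6380, "redis-slave-2": 6381,
    "redis-sentinel-1": 26379, "redis-sentinel-2": 26380, "redis-sentinel-3": 26381,
    "zk1": 2181, "zk2": 2182, "zk3": 2183,
    "kafka1": 9091, "kafka2": 9092, "kafka3": 9093,
    "efak": 8048,
}


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def wait_for_ports(host: str, ports: dict, deadline_s: float = 120.0, interval_s: float = 2.0) -> list:
    """Poll until every port is open or the deadline passes; return the names still closed."""
    pending = dict(ports)
    end = time.monotonic() + deadline_s
    while pending and time.monotonic() < end:
        pending = {name: p for name, p in pending.items() if not port_open(host, p)}
        if pending:
            time.sleep(interval_s)
    return sorted(pending)


if __name__ == "__main__":
    closed = wait_for_ports(HOST, PORTS)
    print("all ports open" if not closed else f"still closed: {closed}")
```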

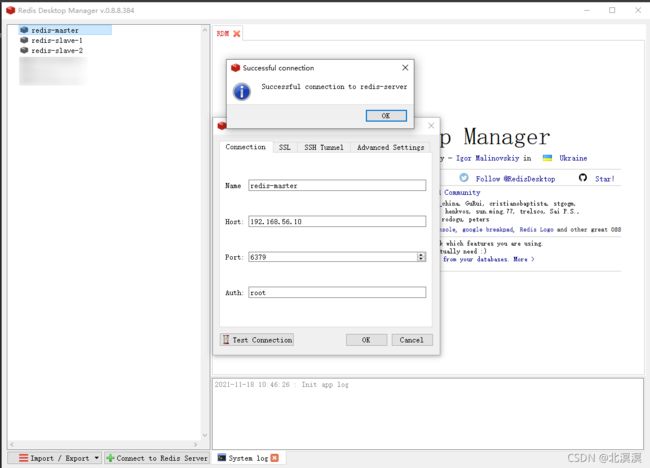

- Service verification

① MySQL database verification
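One lightweight first check can be done in Python with only the standard library. When a client connects, a MySQL server immediately sends an initial handshake packet. That packet has a 4-byte header (3-byte little-endian length plus a sequence id), followed by protocol version 10 and the NUL-terminated server version string. Reading it from ports 3306 and 3307 confirms that both `mysql-master` and `mysql-slave` answer and report the expected version. The check does not log in and says nothing about replication. The host IP is an assumption carried over from the Redis settings, and the helper names are hypothetical.

```python
"""Read the server version from the MySQL handshake packet (sketch, stdlib only).

Assumption: the host is 192.168.56.10 as in atp.yml. No login is performed.
"""
import socket

HOST = "192.168.56.10"


def parse_handshake_version(packet: bytes) -> str:
    """Extract the server version string from a raw initial handshake packet."""
    payload_len = int.from_bytes(packet[0:3], "little")
    payload = packet[4:4 + payload_len]
    if not payload:
        raise ValueError("empty handshake packet")
    if payload[0] == 0xFF:  # ERR packet, e.g. when the host is blocked
        raise ValueError("server returned an error packet")
    if payload[0] != 10:
        raise ValueError(f"unexpected protocol version {payload[0]}")
    end = payload.index(b"\x00", 1)  # version string is NUL-terminated
    return payload[1:end].decode("ascii")


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or raise if the peer closes early."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed early")
        buf += chunk
    return buf


def mysql_version(host: str, port: int, timeout: float = 3.0) -> str:
    """Connect, read one handshake packet and return the server version."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        header = _recv_exact(sock, 4)
        payload = _recv_exact(sock, int.from_bytes(header[0:3], "little"))
        return parse_handshake_version(header + payload)


if __name__ == "__main__":
    for name, port in (("mysql-master", 3306), ("mysql-slave", 3307)):
        print(name, mysql_version(HOST, port))
```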

② Redis verification
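To confirm that the one-master, two-replica layout came up, each Redis node can be asked for `INFO replication` after `AUTH`. The Python sketch below speaks the RESP protocol directly over a socket, so it needs no client library. It only handles the reply types these two commands return. It prints `role` and `connected_slaves` for each node. If replication works, the master on 6379 should report `role:master` with 2 connected replicas, and 6380/6381 should report `role:slave`. The host IP and password come from `atp.yml`, and the helper names are hypothetical.

```python
"""Check Redis replication roles over RESP using only the standard library (sketch).

Assumptions: host 192.168.56.10 and password "root", as in atp.yml.
"""
import socket

HOST = "192.168.56.10"
PASSWORD = "root"


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def read_reply(f):
    """Read one simple-string, error, integer or bulk-string reply from a binary file."""
    line = f.readline().rstrip(b"\r\n")
    kind, rest = line[:1], line[1:]
    if kind == b"+":
        return rest.decode()
    if kind == b"-":
        raise RuntimeError(rest.decode())
    if kind == b":":
        return int(rest)
    if kind == b"$":
        n = int(rest)
        if n == -1:
            return None
        return f.read(n + 2)[:-2].decode()  # payload plus trailing CRLF
    raise ValueError(f"unsupported reply type: {line!r}")


def parse_info(text: str) -> dict:
    """Turn INFO output ('key:value' lines, '#' section headers) into a dict."""
    info = {}
    for raw in text.splitlines():
        if raw and not raw.startswith("#") and ":" in raw:
            key, value = raw.split(":", 1)
            info[key] = value
    return info


def replication_info(host: str, port: int, password: str) -> dict:
    """AUTH, then INFO replication; return the parsed fields."""
    with socket.create_connection((host, port), timeout=3) as sock, sock.makefile("rb") as f:
        sock.sendall(encode_command("AUTH", password))
        read_reply(f)  # raises RuntimeError on a wrong password
        sock.sendall(encode_command("INFO", "replication"))
        return parse_info(read_reply(f))


if __name__ == "__main__":
    for port in (6379, 6380, 6381):
        info = replication_info(HOST, port, PASSWORD)
        print(port, info.get("role"), "connected_slaves =", info.get("connected_slaves"))
```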

③ Kafka cluster verification
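The Kafka brokers in `atp.yml` register with the zk1 to zk3 ensemble, so a reasonable first step is to confirm that the ensemble has a quorum. The Python sketch below sends ZooKeeper's `srvr` four-letter command to host ports 2181 to 2183 and reads the `Mode:` line. A healthy three-node ensemble has exactly one `leader` and two `follower`s. The sketch assumes `srvr` is allowed; by default it is the only whitelisted four-letter command in ZooKeeper 3.5 and later. It does not prove that the brokers themselves accept messages, which is easier to see in the EFAK web UI on port 8048. The helper names are hypothetical.

```python
"""Check the ZooKeeper ensemble behind the Kafka cluster via "srvr" (sketch).

Assumptions: host 192.168.56.10 as in atp.yml, and "srvr" in the 4lw whitelist.
"""
import socket

HOST = "192.168.56.10"
ZK_PORTS = {"zk1": 2181, "zk2": 2182, "zk3": 2183}


def parse_mode(srvr_output: str) -> str:
    """Return the value of the 'Mode:' line (leader, follower or standalone)."""
    for line in srvr_output.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    raise ValueError("no Mode line in srvr output (is srvr whitelisted?)")


def zk_mode(host: str, port: int, timeout: float = 3.0) -> str:
    """Send 'srvr' and parse the reply; the server closes the connection after answering."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return parse_mode(b"".join(chunks).decode())


def ensemble_ok(modes: dict) -> bool:
    """True when exactly one node is leader and all others are followers."""
    values = list(modes.values())
    return values.count("leader") == 1 and values.count("follower") == len(values) - 1


if __name__ == "__main__":
    modes = {name: zk_mode(HOST, port) for name, port in ZK_PORTS.items()}
    print(modes, "ensemble OK" if ensemble_ok(modes) else "ensemble NOT healthy")
```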

Conclusion

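OK, that wraps up this tutorial on installing the ATP application test platform's dependent services in one step with docker-compose. See you in the next post...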