Unet+ResNet in practice: a segmentation project with multi-scale training and multi-class segmentation

1. Introduction

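The classic Unet uses a VGG model as its feature-extraction backbone. For an introduction to VGG and a hands-on example, see the earlier post: Building a VGG network with PyTorch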

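VGG's feature extraction is actually fairly strong, but the network is bulky, and as a plain stack of convolutions gets deeper it becomes prone to vanishing or exploding gradients. ResNet's shortcut (residual) connections address this problem; see: Training ResNet on CIFAR10 for image classification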
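To make the shortcut argument concrete, here is a toy sketch that is not part of the original project: ten 3×3 convolutions whose weights are all set to zero, stacked either plainly or with an identity shortcut (`y = x + F(x)`). The zero-weight setup is an artificial worst case chosen so the result is exact; it illustrates the gradient path through the shortcut and does not measure VGG itself. `PlainBlock`, `ResidualBlock` and `input_grad` are hypothetical names.

```python
import torch
import torch.nn as nn


class PlainBlock(nn.Module):
    """One 3x3 conv, no shortcut (a VGG-style plain layer, without BN/ReLU for clarity)."""

    def __init__(self, ch):
        super().__init__()
        self.conv = nn.Conv2d(ch, ch, kernel_size=3, padding=1, bias=False)

    def forward(self, x):
        return self.conv(x)


class ResidualBlock(PlainBlock):
    """Same conv, plus an identity shortcut: y = x + F(x)."""

    def forward(self, x):
        return x + self.conv(x)


def input_grad(block_cls, depth=10, ch=4):
    torch.manual_seed(0)
    blocks = nn.Sequential(*[block_cls(ch) for _ in range(depth)])
    for b in blocks:
        nn.init.zeros_(b.conv.weight)  # worst case: every conv branch outputs zero
    x = torch.randn(1, ch, 8, 8, requires_grad=True)
    blocks(x).sum().backward()
    return x.grad


plain = input_grad(PlainBlock)     # all zeros: no gradient reaches the input
res = input_grad(ResidualBlock)    # all ones: the identity path passes the gradient through
print(plain.abs().max().item(), res.abs().max().item())
```

In a real network the branch gradients are small rather than exactly zero, but the effect is the same in kind: a plain stack multiplies them layer after layer, while each residual block adds an identity term.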

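This post builds on the previous one and only replaces the Unet backbone, swapping VGG for ResNet; the rest of the code is unchanged. See yesterday's post: Unet segmentation project in practice, multi-scale training, multi-class segmentation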

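Project directory:

- Pink boxes: the training data and the data to run inference on

- Yellow boxes: information the code generates during training, including the best weights (.pth file), a plot of the learning-rate decay, and a txt training log containing the hyperparameters and the per-class recall, precision and IoU

- Blue boxes: the code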
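The per-class recall, precision and IoU recorded in the training log can all be derived from a confusion matrix. The sketch below shows one common way to compute them; it is an assumption about how such numbers are typically obtained, not the project's own logging code. `confusion_matrix` and `per_class_metrics` are hypothetical helpers, and the inputs are masks already converted to class indices.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows = ground-truth class, columns = predicted class."""
    idx = target.reshape(-1) * num_classes + pred.reshape(-1)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def per_class_metrics(cm):
    tp = np.diag(cm).astype(float)
    fn = cm.sum(axis=1) - tp  # pixels of this class predicted as something else
    fp = cm.sum(axis=0) - tp  # pixels predicted as this class that belong elsewhere
    with np.errstate(divide="ignore", invalid="ignore"):
        recall = tp / (tp + fn)
        precision = tp / (tp + fp)
        iou = tp / (tp + fp + fn)
    return recall, precision, iou


# Tiny binary example: class 0 = background, class 1 = foreground
target = np.array([[0, 0, 1], [1, 1, 0]])
pred = np.array([[0, 1, 1], [1, 0, 0]])
cm = confusion_matrix(pred, target, num_classes=2)  # [[2, 1], [1, 2]]
recall, precision, iou = per_class_metrics(cm)      # iou -> [0.5, 0.5]
mean_iou = np.nanmean(iou)                          # 0.5, averaged over classes
```

Each returned array is indexed by class, matching the per-class lists in the log; here the mean IoU is assumed to be a plain average over all classes, background included.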

2. Comparison experiments

A comparison of ResNet and VGG as the Unet backbone. In each project only model.py was changed; all other hyperparameters and the data were left untouched.

2.1 DRIVE dataset

DRIVE dataset introduction: UNet image segmentation on the DRIVE dataset

DRIVE is a binary segmentation task.

2.1.1 Loss and mean IoU curves

Top: VGG; bottom: ResNet. There seems to be little difference...

2.1.2 Training logs

Top: VGG; bottom: ResNet.

Each list is indexed by class. A binary mask contains only the pixel values 0 and 255, so each list has exactly two elements.

The difference is indeed small.

2.2 Abdominal multi-organ MRI dataset

Abdominal multi-organ MRI dataset introduction: Abdominal MRI multi-organ segmentation (multi-class segmentation)

The abdominal multi-organ MRI dataset is a 5-class segmentation task. The pixel values in the masks are: background 0, liver 63, right kidney 126, left kidney 189, spleen 252.
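Because the masks store gray values rather than class indices, they need to be mapped to 0..4 before computing the loss, and predictions mapped back to gray values when saving them. A minimal lookup-table sketch, assuming 8-bit single-channel masks; `gray_to_index`, `index_to_gray` and the choice of 255 as an "ignore" index for unexpected gray values are hypothetical, not taken from the project. For DRIVE the same code would work with `GRAY_VALUES = [0, 255]`.

```python
import numpy as np

# Gray values listed above; the position in the array is the class index:
# 0 background, 1 liver, 2 right kidney, 3 left kidney, 4 spleen
GRAY_VALUES = np.array([0, 63, 126, 189, 252])


def gray_to_index(mask):
    """Map a uint8 gray-value mask to class indices via a 256-entry lookup table."""
    lut = np.full(256, 255, dtype=np.uint8)  # unexpected gray values -> 255 ("ignore", an assumption)
    lut[GRAY_VALUES] = np.arange(len(GRAY_VALUES), dtype=np.uint8)
    return lut[mask]


def index_to_gray(index_map):
    """Inverse mapping, e.g. for saving argmax predictions as viewable masks."""
    return GRAY_VALUES.astype(np.uint8)[index_map]


mask = np.array([[0, 63], [189, 252]], dtype=np.uint8)
idx = gray_to_index(mask)      # [[0, 1], [3, 4]]
restored = index_to_gray(idx)  # identical to mask
```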

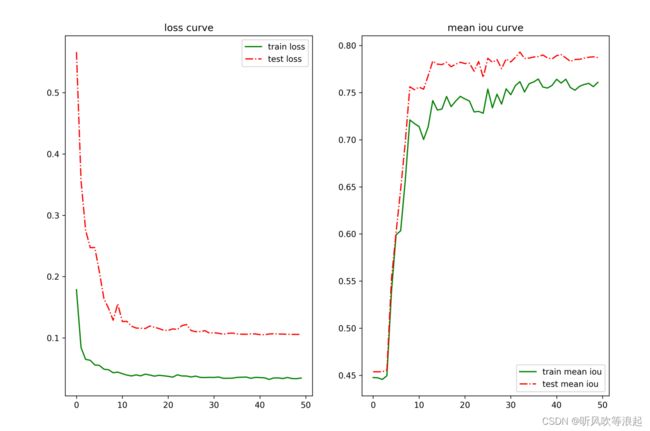

2.2.1 Loss and mean IoU curves

Top: VGG; bottom: ResNet.

Here the improvement is clear: with ResNet as the Unet backbone the mean IoU reaches about 0.84, compared with only about 0.76 with VGG.

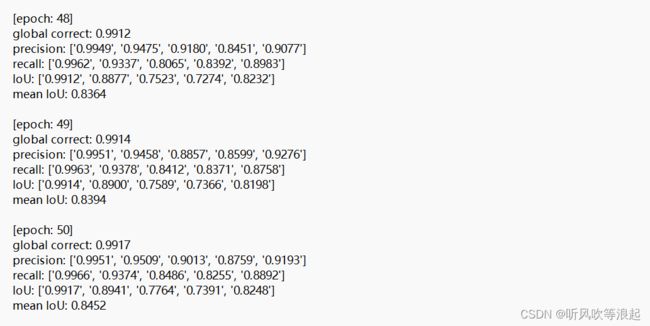

2.2.2 Training logs

Top: VGG; bottom: ResNet.

Each list is indexed by class.

The IoU improves clearly for every class.

3. Code

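model.py is shown below. Note that the first encoder stage (conv0_0) and all decoder stages are still VGG-style double-conv blocks; only the encoder stages conv1_0 to conv4_0 are residual layers.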

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Up(nn.Module):
    """Upsample x1 by 2x, then pad it to the spatial size of x2 (no convolution here)."""

    def __init__(self):
        super().__init__()
        self.up = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)

    def forward(self, x1, x2):
        x1 = self.up(x1)  # upsample the deeper feature map
        # plain Python ints: F.pad expects integer padding amounts
        diffY = x2.size(2) - x1.size(2)
        diffX = x2.size(3) - x1.size(3)
        # pad (left, right, top, bottom) so that x1 has the same size as x2
        x1 = F.pad(x1, [diffX // 2, diffX - diffX // 2,
                        diffY // 2, diffY - diffY // 2])
        return x1


class BasicBlock(nn.Module):
    """ResNet basic block (two 3x3 convs); output channels = out_channels * expansion."""
    expansion = 1

    def __init__(self, in_channels, out_channels, stride=1):
        super().__init__()
        self.residual_function = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(out_channels, out_channels * BasicBlock.expansion, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(out_channels * BasicBlock.expansion),
        )
        # identity shortcut, or a 1x1 projection when the shape changes
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != BasicBlock.expansion * out_channels:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels * BasicBlock.expansion, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(out_channels * BasicBlock.expansion),
            )

    def forward(self, x):
        return F.relu(self.residual_function(x) + self.shortcut(x))


class BottleNeck(nn.Module):
    """ResNet bottleneck block (1x1 -> 3x3 -> 1x1).

    expansion is the channel expansion ratio:
    actual output channels = middle_channels * BottleNeck.expansion
    """
    expansion = 4

    def __init__(self, in_channels, middle_channels, stride=1):
        super().__init__()
        self.residual_function = nn.Sequential(
            nn.Conv2d(in_channels, middle_channels, kernel_size=1, bias=False),
            nn.BatchNorm2d(middle_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(middle_channels, middle_channels, stride=stride, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(middle_channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(middle_channels, middle_channels * BottleNeck.expansion, kernel_size=1, bias=False),
            nn.BatchNorm2d(middle_channels * BottleNeck.expansion),
        )
        self.shortcut = nn.Sequential()
        if stride != 1 or in_channels != middle_channels * BottleNeck.expansion:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channels, middle_channels * BottleNeck.expansion, stride=stride, kernel_size=1,
                          bias=False),
                nn.BatchNorm2d(middle_channels * BottleNeck.expansion),
            )

    def forward(self, x):
        return F.relu(self.residual_function(x) + self.shortcut(x))


class VGGBlock(nn.Module):
    """Two 3x3 conv + BN + ReLU layers, as in the original Unet."""

    def __init__(self, in_channels, middle_channels, out_channels):
        super().__init__()
        self.first = nn.Sequential(
            nn.Conv2d(in_channels, middle_channels, 3, padding=1),
            nn.BatchNorm2d(middle_channels),
            nn.ReLU(),
        )
        self.second = nn.Sequential(
            nn.Conv2d(middle_channels, out_channels, 3, padding=1),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(),
        )

    def forward(self, x):
        out = self.first(x)
        out = self.second(out)
        return out


# Unet + ResNet encoder; `block` and `layers` can be swapped
# (default: BasicBlock with [3, 4, 6, 3], the ResNet-34 layer counts)
class UResnet(nn.Module):
    def __init__(self, num_classes, input_channels=3, block=BasicBlock, layers=(3, 4, 6, 3)):
        super().__init__()
        nb_filter = [64, 128, 256, 512, 1024]
        self.Up = Up()
        self.in_channel = nb_filter[0]  # running input width, updated by _make_layer
        self.pool = nn.MaxPool2d(2, 2)
        self.up = nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True)  # not used in forward()

        # encoder: a VGG block at full resolution, then four residual stages;
        # every stage uses stride 1 because self.pool does the downsampling
        self.conv0_0 = VGGBlock(input_channels, nb_filter[0], nb_filter[0])
        self.conv1_0 = self._make_layer(block, nb_filter[1], layers[0], 1)
        self.conv2_0 = self._make_layer(block, nb_filter[2], layers[1], 1)
        self.conv3_0 = self._make_layer(block, nb_filter[3], layers[2], 1)
        self.conv4_0 = self._make_layer(block, nb_filter[4], layers[3], 1)

        # decoder: concatenate skip connection + upsampled features, then a VGG block
        self.conv3_1 = VGGBlock((nb_filter[3] + nb_filter[4]) * block.expansion, nb_filter[3],
                                nb_filter[3] * block.expansion)
        self.conv2_2 = VGGBlock((nb_filter[2] + nb_filter[3]) * block.expansion, nb_filter[2],
                                nb_filter[2] * block.expansion)
        self.conv1_3 = VGGBlock((nb_filter[1] + nb_filter[2]) * block.expansion, nb_filter[1],
                                nb_filter[1] * block.expansion)
        self.conv0_4 = VGGBlock(nb_filter[0] + nb_filter[1] * block.expansion, nb_filter[0], nb_filter[0])

        self.final = nn.Conv2d(nb_filter[0], num_classes, kernel_size=1)  # per-pixel class logits

    def _make_layer(self, block, middle_channel, num_blocks, stride):
        """
        middle_channel: intermediate width; actual output channels = middle_channel * block.expansion
        num_blocks: number of blocks in this layer
        """
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_channel, middle_channel, stride))
            self.in_channel = middle_channel * block.expansion
        return nn.Sequential(*layers)

    def forward(self, input):
        # encoder
        x0_0 = self.conv0_0(input)
        x1_0 = self.conv1_0(self.pool(x0_0))
        x2_0 = self.conv2_0(self.pool(x1_0))
        x3_0 = self.conv3_0(self.pool(x2_0))
        x4_0 = self.conv4_0(self.pool(x3_0))

        # decoder with skip connections
        x3_1 = self.conv3_1(torch.cat([x3_0, self.Up(x4_0, x3_0)], 1))
        x2_2 = self.conv2_2(torch.cat([x2_0, self.Up(x3_1, x2_0)], 1))
        x1_3 = self.conv1_3(torch.cat([x1_0, self.Up(x2_2, x1_0)], 1))
        x0_4 = self.conv0_4(torch.cat([x0_0, self.Up(x1_3, x0_0)], 1))

        output = self.final(x0_4)
        return output
```
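The `Up` module pads because `nn.MaxPool2d(2, 2)` rounds odd sizes down, so upsampling by 2 can come back one pixel short of the skip connection; this can happen, for example, when multi-scale training feeds inputs whose side length is not divisible by 16. A standalone sketch of just that step, with made-up tensor sizes (a 25×25 feature map is an arbitrary example):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

up = nn.Upsample(scale_factor=2, mode="bilinear", align_corners=True)

skip = torch.randn(1, 64, 25, 25)  # encoder feature map with an odd spatial size
deep = F.max_pool2d(skip, 2)       # 25 -> 12 (floored), as nn.MaxPool2d(2, 2) does
x1 = up(deep)                      # 12 -> 24: one pixel short of the skip connection

diff_y = skip.size(2) - x1.size(2)
diff_x = skip.size(3) - x1.size(3)
# same padding rule as Up.forward: (left, right, top, bottom)
x1 = F.pad(x1, [diff_x // 2, diff_x - diff_x // 2, diff_y // 2, diff_y - diff_y // 2])

merged = torch.cat([skip, x1], dim=1)  # concatenation now works: 64 + 64 channels at 25x25
print(deep.shape, x1.shape, merged.shape)
```

Without the padding, `torch.cat` would fail here because the spatial sizes (25 vs. 24) differ.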

4. Download

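Deep learning Unet+Resnet segmentation project, multi-scale training, multi-class segmentation: abdominal multi-organ 5-class segmentation dataset (CSDN Wenku resource)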

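Deep learning Unet+Resnet segmentation project, multi-scale training, multi-class segmentation: DRIVE optic-nerve segmentation dataset (CSDN Wenku resource)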