手把手教你完成深度学习人脸识别系统

目录

- 前言

- 一、系统总流程设计

- 二、环境安装

-

- 1. 创建虚拟环境

- 2.安装其他库

- 三、模型搭建

-

- 1.采集数据集

- 2. 数据预处理

- 3.构建模型和训练

- 五、摄像头测试

- 六、界面搭建

- 报错了并解决的方法

- 总结

前言

随着人工智能的不断发展,机器学习和深度学习这门技术也越来越重要,一时间成为码农的学习热点。下面将使用深度学习技术开发一个人脸识别系统

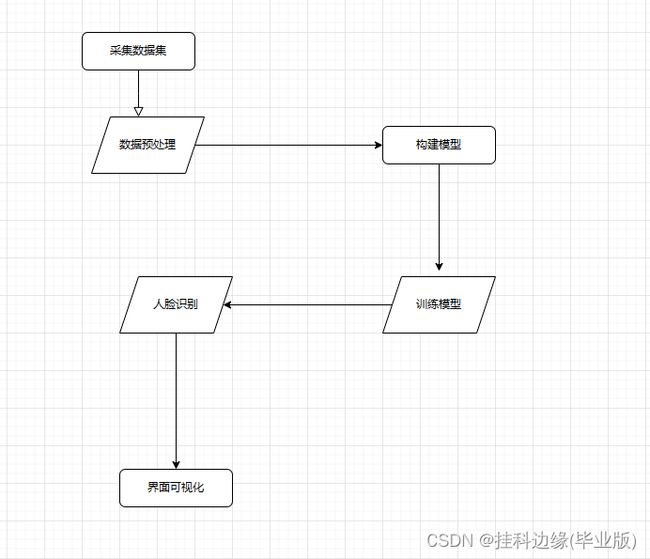

一、系统总流程设计

二、环境安装

手把手教学视频:链接: link

1. 创建虚拟环境

conda create -n py36 python=3.6

激活环境

activate py36

2.安装其他库

单独安装pyqt5

pip install pyqt5

安装requirements.txt配置文件

pip install -r requirements.txt

安装完你已经成功一大把了,看到这里点个赞赞鼓励一下

三、模型搭建

1.采集数据集

使用摄像头进行采集

代码可以直接运行,getdata.py代码如下:

注意:25行 cap = cv2.VideoCapture(1)的改为 cap = cv2.VideoCapture(0),0代表本电脑自带摄像头,1代码其他外接摄像头:

# encoding:utf-8

'''

功能: Python opencv调用摄像头获取个人图片

使用方法:

启动摄像头后需要借助键盘输入操作来完成图片的获取工作

c(change): 生成存储目录

p(photo): 执行截图

q(quit): 退出拍摄

'''

import os

import cv2

def cameraAutoForPictures(saveDir='data/'):

'''

调用电脑摄像头来自动获取图片

'''

if not os.path.exists(saveDir):

os.makedirs(saveDir)

count = 1

cap = cv2.VideoCapture(1)

width, height, w = 640, 480, 360

cap.set(cv2.CAP_PROP_FRAME_WIDTH, width)

cap.set(cv2.CAP_PROP_FRAME_HEIGHT, height)

crop_w_start = (width - w) // 2

crop_h_start = (height - w) // 2

print('width: ', width)

print('height: ', height)

while True:

ret, frame = cap.read()

frame = frame[crop_h_start:crop_h_start + w, crop_w_start:crop_w_start + w]

frame = cv2.flip(frame, 1, dst=None)

cv2.imshow("capture", frame)

action = cv2.waitKey(1) & 0xFF

if action == ord('c'):

saveDir = input(u"请输入新的存储目录:")

if not os.path.exists(saveDir):

os.makedirs(saveDir)

elif action == ord('p'):

cv2.imwrite("%s/%d.jpg" % (saveDir, count), cv2.resize(frame, (224, 224), interpolation=cv2.INTER_AREA))

print(u"%s: %d 张图片" % (saveDir, count))

count += 1

if action == ord('q'):

break

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

# xxx替换为自己的名字

cameraAutoForPictures(saveDir=u'data/1/')

2. 数据预处理

代码可以直接运行,data_preparation.py代码如下:

注意:如果需要处理多人的人脸,需要在if __name__ == '__main__':后面添加多个对应的处理目录

#encoding:utf-8

from __future__ import division

'''

功能: 图像的数据预处理、标准化部分

'''

import os

import cv2

import time

def readAllImg(path,*suffix):

'''

基于后缀读取文件

'''

try:

s=os.listdir(path)

resultArray = []

fileName = os.path.basename(path)

resultArray.append(fileName)

for i in s:

if endwith(i, suffix):

document = os.path.join(path, i)

img = cv2.imread(document)

resultArray.append(img)

except IOError:

print("Error")

else:

print("读取成功")

return resultArray

def endwith(s,*endstring):

'''

对字符串的后续和标签进行匹配

'''

resultArray = map(s.endswith,endstring)

if True in resultArray:

return True

else:

return False

def readPicSaveFace(sourcePath,objectPath,*suffix):

'''

图片标准化与存储

'''

if not os.path.exists(objectPath):

os.makedirs(objectPath)

try:

resultArray=readAllImg(sourcePath,*suffix)

count=1

face_cascade=cv2.CascadeClassifier('config/haarcascade_frontalface_alt.xml')

for i in resultArray:

if type(i)!=str:

gray=cv2.cvtColor(i, cv2.COLOR_BGR2GRAY)

faces=face_cascade.detectMultiScale(gray, 1.3, 5)

for (x,y,w,h) in faces:

listStr=[str(int(time.time())),str(count)]

fileName=''.join(listStr)

f=cv2.resize(gray[y:(y+h),x:(x+w)],(200, 200))

cv2.imwrite(objectPath+os.sep+'%s.jpg' % fileName, f)

count+=1

except Exception as e:

print("Exception: ",e)

else:

print('Read '+str(count-1)+' Faces to Destination '+objectPath)

if __name__ == '__main__':

print('dataProcessing!!!')

readPicSaveFace('data/0/','dataset/0/','.jpg','.JPG','png','PNG','tiff')

readPicSaveFace('data/1/','dataset/1/','.jpg','.JPG','png','PNG','tiff')

#readPicSaveFace('data/2/', 'dataset/2/', '.jpg', '.JPG', 'png', 'PNG', 'tiff'

3.构建模型和训练

代码可以直接运行,train_model.py代码如下:

keras搭建cnn网络模型提取人脸特征

#encoding:utf-8

from __future__ import division

'''

功能: 构建人脸识别模型

'''

import os

import cv2

import random

import numpy as np

from keras.models import Sequential,load_model

from sklearn.model_selection import train_test_split

from keras.layers import Dense,Activation,Convolution2D,MaxPooling2D,Flatten,Dropout

from keras.utils.np_utils import to_categorical

from tensorflow import *

class DataSet(object):

'''

用于存储和格式化读取训练数据的类

'''

def __init__(self,path):

'''

初始化

'''

self.num_classes=None

self.X_train=None

self.X_test=None

self.Y_train=None

self.Y_test=None

self.img_size=128

self.extract_data(path)

def extract_data(self,path):

'''

抽取数据

'''

imgs,labels,counter=read_file(path)

X_train,X_test,y_train,y_test=train_test_split(imgs,labels,test_size=0.2,random_state=random.randint(0, 100))

X_train=X_train.reshape(X_train.shape[0], 1, self.img_size, self.img_size)/255.0

X_test=X_test.reshape(X_test.shape[0], 1, self.img_size, self.img_size)/255.0

X_train=X_train.astype('float32')

X_test=X_test.astype('float32')

Y_train=to_categorical(y_train, num_classes=counter)

Y_test=to_categorical(y_test, num_classes=counter)

self.X_train=X_train

self.X_test=X_test

self.Y_train=Y_train

self.Y_test=Y_test

self.num_classes=counter

def check(self):

'''

校验

'''

print('num of dim:', self.X_test.ndim)

print('shape:', self.X_test.shape)

print('size:', self.X_test.size)

print('num of dim:', self.X_train.ndim)

print('shape:', self.X_train.shape)

print('size:', self.X_train.size)

def endwith(s,*endstring):

'''

对字符串的后续和标签进行匹配

'''

resultArray = map(s.endswith,endstring)

if True in resultArray:

return True

else:

return False

def read_file(path):

'''

图片读取

'''

img_list=[]

label_list=[]

dir_counter=0

IMG_SIZE=128

for child_dir in os.listdir(path):

child_path=os.path.join(path, child_dir)

for dir_image in os.listdir(child_path):

if endwith(dir_image,'jpg'):

img=cv2.imread(os.path.join(child_path, dir_image))

resized_img=cv2.resize(img, (IMG_SIZE, IMG_SIZE))

recolored_img=cv2.cvtColor(resized_img,cv2.COLOR_BGR2GRAY)

img_list.append(recolored_img)

label_list.append(dir_counter)

dir_counter+=1

img_list=np.array(img_list)

return img_list,label_list,dir_counter

def read_name_list(path):

'''

读取训练数据集

'''

name_list=[]

for child_dir in os.listdir(path):

name_list.append(child_dir)

return name_list

class Model(object):

'''

人脸识别模型

'''

FILE_PATH="./models/face.h5"

IMAGE_SIZE=128

def __init__(self):

self.model=None

def read_trainData(self,dataset):

self.dataset=dataset

def build_model(self):

self.model = Sequential()

self.model.add(

Convolution2D(

filters=32,

kernel_size=(5, 5),

padding='same',

dim_ordering='th',

input_shape=self.dataset.X_train.shape[1:]

)

)

self.model.add(Activation('relu'))

self.model.add(

MaxPooling2D(

pool_size=(2, 2),

strides=(2, 2),

padding='same'

)

)

self.model.add(Convolution2D(filters=64, kernel_size=(5,5), padding='same'))

self.model.add(Activation('relu'))

self.model.add(MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'))

self.model.add(Flatten())

self.model.add(Dense(1024))

self.model.add(Activation('relu'))

self.model.add(Dense(self.dataset.num_classes))

self.model.add(Activation('softmax'))

self.model.summary()

def train_model(self):

self.model.compile(

optimizer='sgd',

loss='categorical_crossentropy',

metrics=['accuracy'])

self.model.fit(self.dataset.X_train, self.dataset.Y_train, epochs=8, batch_size=10)

# history = self.model.fit(

# self.dataset.X_train,

# self.dataset.Y_train,

# epochs=2,

# batch_size=10,

# validation_data=(self.dataset.X_test, self.dataset.Y_test))

#

# self.plot_training_history(history)

# def train_model(self):

# self.model.compile(

# optimizer='adam',

# loss='categorical_crossentropy',

# metrics=['accuracy'])

# self.model.fit(self.dataset.X_train, self.dataset.Y_train, epochs=10, batch_size=10)

# 画图备用

# def plot_training_history(self, history):

# # 绘制训练过程中的损失值和准确率

# plt.figure(figsize=(12, 6))

#

# plt.subplot(1, 2, 1)

# plt.plot(history.history['loss'], label='Training Loss')

# plt.plot(history.history['val_loss'], label='Validation Loss')

# plt.xlabel('Epoch')

# plt.ylabel('Loss')

# plt.legend()

#

# plt.subplot(1, 2, 2)

# plt.plot(history.history['acc'], label='Training Accuracy')

# plt.plot(history.history['val_acc'], label='Validation Accuracy')

#

# plt.xlabel('Epoch')

# plt.ylabel('Accuracy')

# plt.legend()

#

# plt.show()

def evaluate_model(self):

print('\nTesting---------------')

loss, accuracy = self.model.evaluate(self.dataset.X_test, self.dataset.Y_test)

print('test loss;', loss)

print('test accuracy:', accuracy)

def save(self, file_path=FILE_PATH):

print('Model Saved Finished!!!')

self.model.save(file_path)

def load(self, file_path=FILE_PATH):

print('Model Loaded Successful!!!')

self.model = load_model(file_path)

def predict(self,img):

img=img.reshape((1, 1, self.IMAGE_SIZE, self.IMAGE_SIZE))

img=img.astype('float32')

img=img/255.0

result=self.model.predict(img)

max_index=np.argmax(result)

return max_index,result[0][max_index]

if __name__ == '__main__':

dataset=DataSet('dataset/')

model=Model()

model.read_trainData(dataset)

model.build_model()

model.train_model()

model.evaluate_model()

model.save()

五、摄像头测试

代码可以直接运行,Demo.py代码如下:

new_names 对应文件夹人脸的顺序

#encoding:utf-8

from __future__ import division

import numpy

'''

功能: 人脸识别摄像头视频流数据实时检测模块

'''

from PIL import Image, ImageDraw, ImageFont

import os

import cv2

from train_model import Model

threshold=0.7 # 如果模型认为概率高于70%则显示为模型中已有的人物

# 新的名字列表

new_names = ["张三", "李四"]

# 解决cv2.putText绘制中文乱码

def cv2ImgAddText(img2, text, left, top, textColor=(0, 0, 255), textSize=20):

if isinstance(img2, numpy.ndarray): # 判断是否OpenCV图片类型

img2 = Image.fromarray(cv2.cvtColor(img2, cv2.COLOR_BGR2RGB))

# 创建一个可以在给定图像上绘图的对象

draw = ImageDraw.Draw(img2)

# 字体的格式

fontStyle = ImageFont.truetype(r"C:\WINDOWS\FONTS\MSYH.TTC", textSize, encoding="utf-8")

# 绘制文本

draw.text((left, top), text, textColor, font=fontStyle)

# 转换回OpenCV格式

return cv2.cvtColor(numpy.asarray(img2), cv2.COLOR_RGB2BGR)

class Camera_reader(object):

def __init__(self):

self.model=Model()

self.model.load()

self.img_size=128

def build_camera(self):

'''

调用摄像头来实时人脸识别

'''

face_cascade = cv2.CascadeClassifier('config/haarcascade_frontalface_alt.xml')

cameraCapture=cv2.VideoCapture(0)

success, frame=cameraCapture.read()

while success and cv2.waitKey(1)==-1:

success,frame=cameraCapture.read()

gray=cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

faces=face_cascade.detectMultiScale(gray, 1.3, 5)

for (x,y,w,h) in faces:

ROI=gray[x:x+w,y:y+h]

ROI=cv2.resize(ROI, (self.img_size, self.img_size),interpolation=cv2.INTER_LINEAR)

label,prob=self.model.predict(ROI)

print(label)

if prob > threshold:

show_name = new_names[label]

else:

show_name = "陌生人"

# cv2.putText(frame, show_name, (x,y-20),cv2.FONT_HERSHEY_SIMPLEX,1,255,2)

# 在图像上绘制中文字符

# 解决cv2.putText绘制中文乱码

frame = cv2ImgAddText(frame, show_name, x + 5, y - 30,)

frame=cv2.rectangle(frame,(x,y), (x+w,y+h),(255,0,0),2)

cv2.imshow("Camera", frame)

else:

cameraCapture.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

camera=Camera_reader()

camera.build_camera()

六、界面搭建

pyqt5搭建可视化界面,实现图片识别和摄像头识别

完整代码如下

注意:126行 cap = cv2.VideoCapture(1)的改为 cap = cv2.VideoCapture(0),0代表本电脑自带摄像头,1代码其他外接摄像头:

import sys

import cv2

import numpy

from PyQt5.QtWidgets import QApplication, QMainWindow, QLabel, QPushButton, QVBoxLayout, QWidget, QFileDialog

from PyQt5.QtGui import QPixmap, QImage

from PyQt5.QtCore import Qt

from PIL import Image, ImageDraw, ImageFont

from Demo import Camera_reader

from train_model import Model

# 解决cv2.putText绘制中文乱码

def cv2ImgAddText(img2, text, left, top, textColor=(0, 0, 255), textSize=20):

if isinstance(img2, numpy.ndarray):

img2 = Image.fromarray(cv2.cvtColor(img2, cv2.COLOR_BGR2RGB))

draw = ImageDraw.Draw(img2)

fontStyle = ImageFont.truetype(r"C:\WINDOWS\FONTS\MSYH.TTC", textSize, encoding="utf-8")

draw.text((left, top), text, textColor, font=fontStyle)

return cv2.cvtColor(numpy.asarray(img2), cv2.COLOR_RGB2BGR)

# 新的名字列表

new_names = ["张国荣", "王祖贤"]

class FaceDetectionApp(QMainWindow):

def __init__(self, parent=None):

super().__init__(parent)

self.setWindowTitle("人脸检测应用")

self.setGeometry(100, 100, 800, 600)

self.central_widget = QWidget()

self.setCentralWidget(self.central_widget)

self.layout = QVBoxLayout()

self.upload_button = QPushButton("图片识别")

self.upload_button.clicked.connect(self.upload_image)

self.upload_button.setFixedSize(779, 50)

self.camera_button = QPushButton("摄像头识别")

self.camera_button.clicked.connect(self.start_camera_detection)

self.camera_button.setFixedSize(779, 50)

self.image_label = QLabel()

self.image_label.setAlignment(Qt.AlignCenter)

self.image_label.setFixedSize(779, 500)

self.result_label = QLabel("识别结果: ")

self.result_label.setAlignment(Qt.AlignCenter)

self.layout.addWidget(self.upload_button)

self.layout.addWidget(self.camera_button)

self.layout.addWidget(self.image_label)

self.layout.addWidget(self.result_label)

self.central_widget.setLayout(self.layout)

self.model = Model()

self.model.load()

def upload_image(self):

options = QFileDialog.Options()

options |= QFileDialog.ReadOnly

file_name, _ = QFileDialog.getOpenFileName(self, "选择图片", "", "Images (*.png *.jpg *.jpeg *.bmp *.gif *.tiff)", options=options)

if file_name:

image = cv2.imread(file_name)

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

face_cascade = cv2.CascadeClassifier('config/haarcascade_frontalface_alt.xml')

faces = face_cascade.detectMultiScale(gray, 1.35, 5)

if len(faces) > 0:

for (x, y, w, h) in faces:

roi = gray[y:y + h, x:x + w]

roi = cv2.resize(roi, (128, 128), interpolation=cv2.INTER_LINEAR)

label, prob = self.model.predict(roi)

if prob > 0.7:

show_name = new_names[label]

res = f"识别为: {show_name}, 概率: {prob:.2f}"

else:

show_name = "陌生人"

res = "抱歉,未识别出该人!请尝试增加数据量来训练模型!"

frame = cv2ImgAddText(image, show_name, x + 5, y - 30)

cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)

cv2.imwrite('prediction.jpg', frame)

self.result = cv2.cvtColor(frame, cv2.COLOR_BGR2BGRA)

self.QtImg = QImage(

self.result.data, self.result.shape[1], self.result.shape[0], QImage.Format_RGB32)

self.image_label.setPixmap(QPixmap.fromImage(self.QtImg))

self.image_label.setScaledContents(True) # 自适应界面大小

self.result_label.setText(res)

else:

self.result_label.setText("未检测到人脸")

def start_camera_detection(self):

self.camera = Camera_reader()

self.camera.build_camera()

class Camera_reader(object):

def __init__(self):

self.model = Model()

self.model.load()

self.img_size = 128

def build_camera(self):

face_cascade = cv2.CascadeClassifier('config/haarcascade_frontalface_alt.xml')

cameraCapture = cv2.VideoCapture(1)

success, frame = cameraCapture.read()

while success and cv2.waitKey(1) == -1:

success, frame = cameraCapture.read()

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

faces = face_cascade.detectMultiScale(gray, 1.3, 5)

for (x, y, w, h) in faces:

ROI = gray[x:x + w, y:y + h]

ROI = cv2.resize(ROI, (self.img_size, self.img_size), interpolation=cv2.INTER_LINEAR)

label, prob = self.model.predict(ROI)

if prob > 0.7:

show_name = new_names[label]

else:

show_name = "陌生人"

frame = cv2ImgAddText(frame, show_name, x + 5, y - 30)

frame = cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)

cv2.imshow("Camera", frame)

else:

cameraCapture.release()

cv2.destroyAllWindows()

if __name__ == "__main__":

app = QApplication(sys.argv)

window = FaceDetectionApp()

window.show()

sys.exit(app.exec_())

报错了并解决的方法

报错:AttributeError: ‘str‘ object has no attribute ‘decode‘

降低h5py版本

解决方法:

pip install h5py==2.10.0

总结

完整源码地址:链接: link

输入四位数:0odf

本文通过opencv+cnn网络模型结合实现人脸识别,opencv实现人脸识别,cnn实现人脸的特征提取,并识别是某个人,cnn模型有待优化,你们可以自己需求更换其它的深度学习模型,增加训练数据集样本,实现更精准的人脸识别模型,有问题评论区留言,谢谢观看

博主熬夜写博客写代码,已经掉一大把头发了,麻烦点个赞赞鼓励一下