Pulsar cluster deployment

Install OpenJDK 11 on Linux

Download OpenJDK 11

Upload the archive to the target directory and extract it

tar -xvf openjdk-11.0.0.2_linux-x64.tar.gz && mv jdk-11.0.0.2/ jdk11

Configure environment variables for the target user

vi ~/.bash_profile

# Append the following to the end of the file (adjust to your JDK directory)

export JAVA_HOME=/yourpath/jdk11

export CLASSPATH=$JAVA_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$PATH

# Reload the profile so the changes take effect

source ~/.bash_profile

# Verify that the JDK is installed

java -version

ZooKeeper cluster deployment

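ZooKeeper provides high performance, high reliability and ordered access. High performance lets it serve large distributed systems; high reliability means that the failure of a single node does not bring the service down; ordered access lets clients implement relatively complex synchronization.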

A ZooKeeper ensemble usually has an odd number of servers. As long as more than half of them are working, ZooKeeper keeps serving clients.
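The majority rule can be made concrete with a little arithmetic. The sketch below is a hypothetical helper and is not part of ZooKeeper. For an ensemble of n servers it prints the quorum size, floor(n/2) + 1, and the number of servers that can fail while a quorum survives. The output shows why an even-sized ensemble gains nothing: 4 servers tolerate the same single failure as 3.

```shell
#!/usr/bin/env bash
# Hypothetical helper: quorum arithmetic for a ZooKeeper ensemble of n servers.
set -euo pipefail

# Smallest majority of n servers: floor(n/2) + 1.
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Servers that may fail while a majority is still alive: n - quorum.
tolerated_failures() {
  echo $(( $1 - $(quorum_size "$1") ))
}

for n in 3 4 5; do
  echo "n=$n quorum=$(quorum_size "$n") tolerated=$(tolerated_failures "$n")"
done
```

Running it prints:

n=3 quorum=2 tolerated=1
n=4 quorum=3 tolerated=1
n=5 quorum=3 tolerated=2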

Download ZooKeeper 3.8.4

Upload the archive to the target directory and extract it

# Extract the ZooKeeper archive

tar -xvf apache-zookeeper-3.8.4-bin.tar.gz

Edit the .cfg configuration file under conf

cd apache-zookeeper-3.8.4-bin/conf/ && cp zoo_sample.cfg zoo.cfg

# Edit zoo.cfg

vim zoo.cfg # the edited content is shown below

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/yourpath/zk/tmp/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# https://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

## Metrics Providers

#

# https://prometheus.io Metrics Exporter

#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

#metricsProvider.httpHost=0.0.0.0

#metricsProvider.httpPort=7000

#metricsProvider.exportJvmInfo=true

server.1=172.17.0.5:2888:3888

server.2=172.17.0.3:2888:3888

server.3=172.17.0.4:2888:3888

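tickTime: the basic heartbeat interval, in milliseconds, between clients and servers and between servers. A heartbeat is sent every tickTime. Heartbeats detect whether machines are alive and also time the communication between followers and the leader (initLimit and syncLimit are counted in ticks). By default the minimum session timeout is twice tickTime.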

initLimit: the maximum number of ticks a follower may take to connect to and initially sync with the leader

syncLimit: the maximum number of ticks allowed between a follower sending a request to the leader and receiving an acknowledgement

dataDir: the data directory; it holds the snapshots, the myid file (this server's unique ID), version information and logs

clientPort: the port on which the server listens for client connections

server.n=127.0.0.1:2888:3888

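n: a number identifying this server

127.0.0.1: the server's IP address

2888: the port for communication between ZooKeeper servers (followers connect to the leader on it)

3888: the port used for leader election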
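initLimit and syncLimit are counted in ticks, so their wall-clock limits are the product with tickTime. The sketch below prints those values, along with ZooKeeper's default minimum session timeout of 2 × tickTime. It uses hypothetical helper and script names and assumes plain key=value lines in zoo.cfg.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the wall-clock limits implied by tickTime,
# initLimit and syncLimit in a zoo.cfg file (assumes plain key=value lines).
set -euo pipefail

# Print the value of key $2 from properties file $1 (last assignment wins).
cfg_value() {
  awk -F'=' -v key="$2" '$1 == key { val = $2 } END { print val }' "$1"
}

# Print the effective limits in milliseconds.
zk_timeouts() {
  local cfg="$1" tick init sync
  tick=$(cfg_value "$cfg" tickTime)
  init=$(cfg_value "$cfg" initLimit)
  sync=$(cfg_value "$cfg" syncLimit)
  echo "initLimit: $(( init * tick )) ms"
  echo "syncLimit: $(( sync * tick )) ms"
  # ZooKeeper's default minSessionTimeout is 2 * tickTime.
  echo "min session timeout: $(( 2 * tick )) ms"
}

if [[ $# -gt 0 ]]; then
  zk_timeouts "$1"
fi
```

The zoo.cfg above sets tickTime=2000, initLimit=10 and syncLimit=5. With that file, `bash zk_timeouts.sh conf/zoo.cfg` would print initLimit: 20000 ms, syncLimit: 10000 ms and min session timeout: 4000 ms.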

Configure myid

# Create a myid file in the `dataDir` directory (mine is /yourpath/zk/tmp/zookeeper/); one node is shown as an example

mkdir -p /yourpath/zk/tmp/zookeeper/

cd /yourpath/zk/tmp/zookeeper/

echo 1 > myid

# The content of myid is the value of n in server.n

more myid # prints "1"

# On the other two nodes, run echo 2 > myid and echo 3 > myid respectively
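myid must equal the n of this node's server.n line, so it can be derived from zoo.cfg instead of typed by hand. A minimal sketch, assuming the server.n entries use IP addresses and that you pass this node's IP explicitly. The script and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive myid from the server.N line in zoo.cfg whose
# host matches this node's IP, then write it to <dataDir>/myid.
set -euo pipefail

# Print N for the server.N entry in config $1 whose host equals $2 (empty if none).
myid_for_ip() {
  awk -F'=' -v ip="$2" '
    $1 ~ /^server\.[0-9]+$/ {
      split($2, parts, ":")            # parts[1] is the host part
      if (parts[1] == ip) { sub(/^server\./, "", $1); print $1; exit }
    }' "$1"
}

# Write the id for IP $2 (looked up in config $1) to directory $3.
write_myid() {
  local id
  id=$(myid_for_ip "$1" "$2")
  if [[ -z "$id" ]]; then
    echo "no server.N entry for $2 in $1" >&2
    return 1
  fi
  mkdir -p "$3"
  echo "$id" > "$3/myid"
}

if [[ $# -eq 3 ]]; then
  write_myid "$@"
fi
```

For example, `bash write_myid.sh conf/zoo.cfg 172.17.0.3 /yourpath/zk/tmp/zookeeper` writes 2 to the myid file, matching the server.2 entry.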

Configure environment variables for the target user

vi ~/.bash_profile

# Append the following to the end of the file (adjust to your ZooKeeper directory):

export ZK_HOME=/yourpath/zk/apache-zookeeper-3.8.4-bin/

export PATH=$PATH:$JAVA_HOME/bin:$ZK_HOME/bin

# Reload the profile so the changes take effect

source ~/.bash_profile

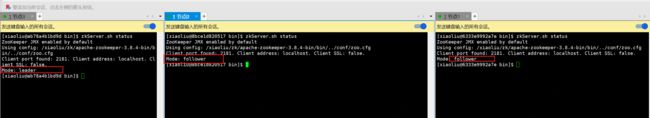

Start the ZooKeeper service (ports 2888 and 3888 must be reachable between the nodes, and 2181 from clients such as Pulsar)

# Start ZooKeeper in the background

cd /yourpath/zk/apache-zookeeper-3.8.4-bin/bin/ && zkServer.sh start

# Check ZooKeeper status (one node should report Mode: leader, the others Mode: follower)

zkServer.sh status

Common ZooKeeper commands

- Start in the foreground (logs go to the console): zkServer.sh start-foreground

- Start in the background: zkServer.sh start

- Stop: zkServer.sh stop

- Check status: zkServer.sh status

- Check port status: netstat -natp | grep -E '(2888|3888)'
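netstat only shows sockets on the local machine. To confirm from one node that the others are reachable (for example through a firewall), a small bash sketch can open TCP connections with bash's built-in /dev/tcp redirection. It assumes bash and coreutils `timeout` are available, and the function and script names are hypothetical. Only the current leader listens on 2888 (followers connect to it), so the sketch checks 2181 and 3888 on every node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that ZooKeeper's client (2181) and election (3888)
# ports accept TCP connections on each host. Port 2888 is skipped because
# only the current leader listens on it.
set -euo pipefail

# Succeed if a TCP connection to host $1, port $2 opens within 2 seconds.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report each host:port pair; return non-zero if any is unreachable.
check_ports() {
  local host port status=0
  for host in "$@"; do
    for port in 2181 3888; do
      if port_open "$host" "$port"; then
        echo "$host:$port open"
      else
        echo "$host:$port unreachable"
        status=1
      fi
    done
  done
  return "$status"
}

if [[ $# -gt 0 ]]; then
  check_ports "$@"
fi
```

Usage: `bash check_zk_ports.sh node1 node2 node3` prints one line per host and port, and exits non-zero if any of them is unreachable.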

Pulsar cluster deployment (version 2.10.6)

Download Pulsar

Edit the Pulsar configuration files

Edit the BookKeeper configuration file

cd /yourpath/pulsar/apache-pulsar-2.10.6/conf

vim bookkeeper.conf

# Line 39

journalDirectory=/yourpath/pulsar/data/bookkeeper/journal

# Line 56: set to this machine's IP

advertisedAddress=172.17.0.4

# Line 416

ledgerDirectories=/yourpath/pulsar/data/bookkeeper/ledgers

# Line 677

zkServers=172.17.0.5:2181,172.17.0.4:2181,172.17.0.3:2181

# Add an instanceId setting to avoid the "instanceId does not match" error

instanceId=bookie-1

Edit the broker configuration file

cd /yourpath/pulsar/apache-pulsar-2.10.6/conf

vim broker.conf

# Line 27

metadataStoreUrl=172.17.0.5:2181,172.17.0.4:2181,172.17.0.3:2181

# Line 30

configurationMetadataStoreUrl=172.17.0.5:2181,172.17.0.4:2181,172.17.0.3:2181

# Line 55

bindAddress=172.17.0.4

# Line 61

advertisedAddress=172.17.0.4

# Line 115: cluster name

clusterName=test-pulsar-cluster

- Copy the files to the other two machines

- In bookkeeper.conf, change the values of `advertisedAddress` and `instanceId`

- In broker.conf, change the values of `bindAddress` and `advertisedAddress`
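Editing the copied files by hand on every node is error-prone. The sketch below is a hypothetical helper that sets key=value in a .conf file. It replaces an existing uncommented line, otherwise it appends one. It then applies the node-specific advertisedAddress, instanceId and bindAddress values. It assumes GNU sed (on macOS, sed -i needs an explicit empty suffix) and values without `|` or `&`. The bookie-2 name in the usage line is only an example naming scheme.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set per-node values in bookkeeper.conf and broker.conf.
set -euo pipefail

# Set key $2 to value $3 in file $1: replace an uncommented "key=" line,
# or append one if the key is absent. Assumes GNU sed.
set_conf() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    # '|' as the sed delimiter so values containing '/' (paths, URLs) work.
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}

# Apply node settings: conf dir $1, node IP $2, bookie instanceId $3.
apply_node_settings() {
  local conf="$1" ip="$2" bookie_id="$3"
  set_conf "$conf/bookkeeper.conf" advertisedAddress "$ip"
  set_conf "$conf/bookkeeper.conf" instanceId "$bookie_id"
  set_conf "$conf/broker.conf" bindAddress "$ip"
  set_conf "$conf/broker.conf" advertisedAddress "$ip"
}

if [[ $# -eq 3 ]]; then
  apply_node_settings "$@"
fi
```

For example, on the second node: `bash set_node_conf.sh /yourpath/pulsar/apache-pulsar-2.10.6/conf 172.17.0.3 bookie-2`.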

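Change the ZooKeeper admin server port

# Since version 3.5, ZooKeeper's embedded AdminServer listens on port 8080 by default,

# which conflicts with the Pulsar broker's web service port (8080) used later, so move it to another port: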

cd /yourpath/zk/apache-zookeeper-3.8.4-bin/conf

vim zoo.cfg

admin.serverPort=8887

# Restart ZooKeeper in the background

cd /yourpath/zk/apache-zookeeper-3.8.4-bin/bin/ && zkServer.sh restart

# Check ZooKeeper status

zkServer.sh status

Initialize the cluster metadata

cd /yourpath/pulsar/apache-pulsar-2.10.6

bin/pulsar initialize-cluster-metadata \
  --cluster test-pulsar-cluster \
  --zookeeper 172.17.0.5:2181,172.17.0.4:2181,172.17.0.3:2181 \
  --configuration-store 172.17.0.5:2181,172.17.0.4:2181,172.17.0.3:2181 \
  --web-service-url http://172.17.0.5:8080,172.17.0.4:8080,172.17.0.3:8080 \
  --web-service-url-tls https://172.17.0.5:8443,172.17.0.4:8443,172.17.0.3:8443 \
  --broker-service-url pulsar://172.17.0.5:6650,172.17.0.4:6650,172.17.0.3:6650 \
  --broker-service-url-tls pulsar+ssl://172.17.0.5:6651,172.17.0.4:6651,172.17.0.3:6651

# Next, initialize the BookKeeper cluster metadata; if prompted for Y/N, enter Y

bin/bookkeeper shell metaformat

# Log in to ZooKeeper to inspect the data

cd /yourpath/zk/apache-zookeeper-3.8.4-bin/bin/ && sh zkCli.sh

# List the root znodes with ls /

ls /

[admin, bookies, ledgers, pulsar, stream, zookeeper] # seeing these znodes means initialization succeeded
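The URL flags of the initialize-cluster-metadata command above repeat the same host list with different schemes and ports, which makes typos easy. The hedged sketch below builds the comma-separated lists from one host list and prints the command for review instead of running it. The helper names are hypothetical, and the ports are the defaults used in the command (2181, 8080, 8443, 6650, 6651).

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the host lists for initialize-cluster-metadata
# from a single list of hosts and print (not run) the resulting command.
set -euo pipefail

# join_hosts PORT HOST... -> "host1:port,host2:port,..."
join_hosts() {
  local port="$1" out="" h
  shift
  for h in "$@"; do
    out+="${out:+,}${h}:${port}"     # add a comma before every entry but the first
  done
  echo "$out"
}

# print_init_cmd CLUSTER HOST... -> the full command on one line
print_init_cmd() {
  local cluster="$1" zk
  shift
  zk=$(join_hosts 2181 "$@")
  echo "bin/pulsar initialize-cluster-metadata" \
    "--cluster $cluster" \
    "--zookeeper $zk" \
    "--configuration-store $zk" \
    "--web-service-url http://$(join_hosts 8080 "$@")" \
    "--web-service-url-tls https://$(join_hosts 8443 "$@")" \
    "--broker-service-url pulsar://$(join_hosts 6650 "$@")" \
    "--broker-service-url-tls pulsar+ssl://$(join_hosts 6651 "$@")"
}

print_init_cmd test-pulsar-cluster 172.17.0.5 172.17.0.4 172.17.0.3
```

Printing rather than executing keeps this a review step: compare the output with the command above before running the real initialization, which only needs to happen once per cluster.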

Start the BookKeeper and broker services

cd /yourpath/pulsar/apache-pulsar-2.10.6

# Start the bookie in the background

bin/pulsar-daemon start bookie

# Verify that the bookie is running (can be checked on all three nodes)

bin/bookkeeper shell bookiesanity

# The following output means the bookie started successfully:

Bookie sanity test succeeded

cd /yourpath/pulsar/apache-pulsar-2.10.6

bin/pulsar-daemon start broker

# Note: start the broker on each of the three nodes in turn

# Check whether the brokers are running:

bin/pulsar-admin brokers list test-pulsar-cluster
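The bookie and broker checks above can fail for a few seconds while the services are still starting. The sketch below is a small retry helper (hypothetical, not part of Pulsar) that re-runs a command until it succeeds or the attempts run out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: re-run a command until it succeeds or attempts run out.
set -euo pipefail

# retry ATTEMPTS DELAY_SECONDS CMD [ARGS...]
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed: $*" >&2
    # Sleep only between attempts, not after the last one.
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

After sourcing the file, you could run `retry 10 5 bin/bookkeeper shell bookiesanity` before starting the broker. Afterwards, `retry 10 5 bin/pulsar-admin brokers list test-pulsar-cluster` waits for the brokers to answer.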

Data verification

# Produce a message

bin/pulsar-client produce persistent://public/default/test --messages "hello-pulsar"

# Short form (a bare topic name resolves to persistent://public/default/<topic>)

bin/pulsar-client produce test --messages "hello-pulsar"

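# Consume messages (start the consumer before producing: a new subscription begins at the latest message by default)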

bin/pulsar-client consume persistent://public/default/test -s "consumer-test"

# Short form

bin/pulsar-client consume test -s "consumer-test"

Visualization tool

Download the Pulsar Manager 0.3.0 archive

Install and configure Pulsar Manager

cd /yourpath/pulsar-manager

tar -zxvf apache-pulsar-manager-0.3.0-bin.tar.gz

cd pulsar-manager

tar -xvf pulsar-manager.tar

cd pulsar-manager

cp -r ../dist ui

# Start pulsar-manager in the background

nohup bin/pulsar-manager > pulsar-manager.log 2>&1 &

# Create an administrator (superuser) account

CSRF_TOKEN=$(curl http://localhost:7750/pulsar-manager/csrf-token)

curl \
  -H "X-XSRF-TOKEN:$CSRF_TOKEN" \
  -H "Cookie: XSRF-TOKEN=$CSRF_TOKEN;" \
  -H 'Content-Type: application/json' \
  -X PUT http://localhost:7750/pulsar-manager/users/superuser \
  -d '{"name":"pulsar","password":"pulsar","description":"test","email":"user@example.com"}'

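- Web UI address: http://localhost:7750/ui/index.html

- Settings for a new Environment:

  - Environment Name: a name for the environment; it does not have to match the cluster name, e.g. pulsar-cluster

  - Service URL: the broker webServicePort address (default 8080); enter a single node, e.g. http://nodeIp:8080

  - Bookie URL: the bookie httpServerPort address (default 8000); enter a single node, e.g. http://nodeIp:8000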

Deploying with the proxy

Edit the configuration file

cd /yourpath/pulsar/apache-pulsar-2.10.6

vim conf/proxy.conf

# Line 26

metadataStoreUrl=node1:2181,node2:2181,node3:2181

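# Line 57

advertisedAddress=<this machine's IP>

# Line 63: change the default port 6650 to avoid a conflict with the broker

servicePort=7650

# Line 68: change the default port 8080 to avoid a conflict with the broker

webServicePort=8086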

# Line 311

clusterName=<cluster name>

# Line 326

zookeeperServers=node1:2181,node2:2181,node3:2181

Start the service

cd /yourpath/pulsar/apache-pulsar-2.10.6

bin/pulsar-daemon start proxy

Parameter tuning

- To be added