LLM Series: Building a Local Knowledge Base with FastGPT and Ollama


-

-

- 1. 安装fastgpt,oneapi

- 2. 安装ollama运行大模型

-

- 2.1. 安装ollama

- 2.2. ollama下载模型

- 3. 安装开源的文本向量模型

- 小技巧

-

- 阿里云部署fastgpt oneapi,并且在本机映射autodl的ollama端口

- docker 运行m3e

- 错误解决

-

- 1. docker-compose up -d后oneapi不能启动

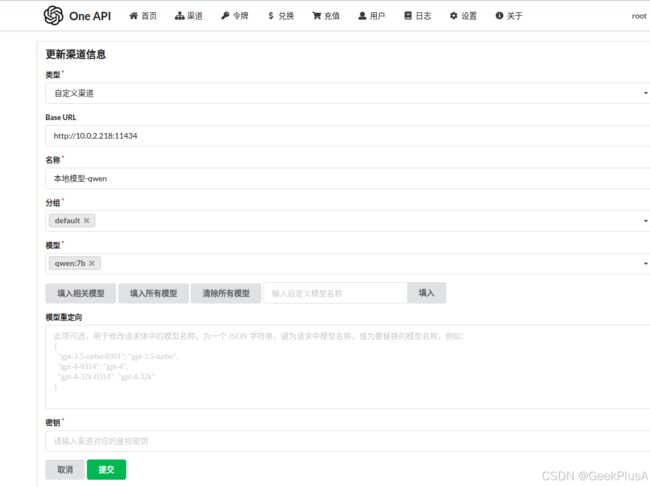

- 2. oneapi配置大模型后,测试报错

-

1. Install FastGPT and OneAPI

- Install with docker-compose

- Download docker-compose.yml and config.json

mkdir fastgpt
cd fastgpt
curl -O https://raw.githubusercontent.com/labring/FastGPT/main/projects/app/data/config.json

# pgvector version (recommended for testing; quick and simple)
curl -o docker-compose.yml https://raw.githubusercontent.com/labring/FastGPT/main/files/docker/docker-compose-pgvector.yml

# milvus version
# curl -o docker-compose.yml https://raw.githubusercontent.com/labring/FastGPT/main/files/docker/docker-compose-milvus.yml

# zilliz version
# curl -o docker-compose.yml https://raw.githubusercontent.com/labring/FastGPT/main/files/docker/docker-compose-zilliz.yml

- Edit the contents of docker-compose.yml (the file shown below is based on the Milvus version)

# The default database username and password only take effect on the first run.
# If you change them, update both the database and the project connection parameters, not just one of them.
# This file is meant for quick start and testing only. For production, be sure to change the usernames and passwords and tune the knowledge base parameters, shared memory, and so on.
# If you cannot access Docker Hub or GitHub, use the Alibaba Cloud images (Alibaba Cloud has no ARM images).
version: '3.3'
services:
  minio:
    container_name: minio
    image: minio/minio:RELEASE.2023-03-20T20-16-18Z
    environment:
      MINIO_ACCESS_KEY: minioadmin
      MINIO_SECRET_KEY: minioadmin
    ports:
      - '9001:9001'
      - '9000:9000'
    networks:
      - fastgpt
    volumes:
      - ./minio:/minio_data
    command: minio server /minio_data --console-address ":9001"
    healthcheck:
      test: ['CMD', 'curl', '-f', 'http://localhost:9000/minio/health/live']
      interval: 30s
      timeout: 20s
      retries: 3
  # milvus
  milvusEtcd:
    container_name: milvusEtcd
    image: quay.io/coreos/etcd:v3.5.5
    environment:
      - ETCD_AUTO_COMPACTION_MODE=revision
      - ETCD_AUTO_COMPACTION_RETENTION=1000
      - ETCD_QUOTA_BACKEND_BYTES=4294967296
      - ETCD_SNAPSHOT_COUNT=50000
    networks:
      - fastgpt
    volumes:
      - ./milvus/etcd:/etcd
    command: etcd -advertise-client-urls=http://127.0.0.1:2379 -listen-client-urls http://0.0.0.0:2379 --data-dir /etcd
    healthcheck:
      test: ['CMD', 'etcdctl', 'endpoint', 'health']
      interval: 30s
      timeout: 20s
      retries: 3
  milvusStandalone:
    container_name: milvusStandalone
    image: milvusdb/milvus:v2.4.3
    command: ['milvus', 'run', 'standalone']
    security_opt:
      - seccomp:unconfined
    environment:
      ETCD_ENDPOINTS: milvusEtcd:2379
      MINIO_ADDRESS: minio:9000
    networks:
      - fastgpt
    volumes:
      - ./milvus/data:/var/lib/milvus
    healthcheck:
      test: ['CMD', 'curl', '-f', 'http://localhost:9091/healthz']
      interval: 30s
      start_period: 90s
      timeout: 20s
      retries: 3
    depends_on:
      - 'milvusEtcd'
      - 'minio'
  mongo:
    image: registry.cn-hangzhou.aliyuncs.com/fastgpt/mongo:5.0.18 # Alibaba Cloud
    container_name: mongo
    #restart: always
    ports:
      - 27017:27017
    networks:
      - fastgpt
    command: mongod --keyFile /data/mongodb.key --replSet rs0
    environment:
      - MONGO_INITDB_ROOT_USERNAME=myusername
      - MONGO_INITDB_ROOT_PASSWORD=mypassword
    volumes:
      - ./mongo/data:/data/db
    entrypoint:
      - bash
      - -c
      - |
        openssl rand -base64 128 > /data/mongodb.key
        chmod 400 /data/mongodb.key
        chown 999:999 /data/mongodb.key
        echo 'const isInited = rs.status().ok === 1
        if(!isInited){
          rs.initiate({
              _id: "rs0",
              members: [
                  { _id: 0, host: "mongo:27017" }
              ]
          })
        }' > /data/initReplicaSet.js
        # Start the MongoDB service
        exec docker-entrypoint.sh "$$@" &

        # Wait for the MongoDB service to start
        until mongo -u myusername -p mypassword --authenticationDatabase admin --eval "print('waited for connection')" > /dev/null 2>&1; do
          echo "Waiting for MongoDB to start..."
          sleep 2
        done

        # Run the script that initializes the replica set
        mongo -u myusername -p mypassword --authenticationDatabase admin /data/initReplicaSet.js

        # Wait for the MongoDB process started by docker-entrypoint.sh
        wait $$!
  # fastgpt
  sandbox:
    container_name: sandbox
    image: ghcr.io/labring/fastgpt-sandbox:v4.8.15 # git
    # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt-sandbox:v4.8.15 # Alibaba Cloud
    networks:
      - fastgpt
    #restart: always
  fastgpt:
    container_name: fastgpt
    image: ghcr.io/labring/fastgpt:v4.8.15-fix2 # git
    # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt:v4.8.15-fix2 # Alibaba Cloud
    ports:
      - 3000:3000
    networks:
      - fastgpt
    depends_on:
      - mongo
      - milvusStandalone
      - sandbox
    #restart: always
    environment:
      # Front-end access address: http://localhost:3000
      - FE_DOMAIN=http://localhost:3000
      # Root password; the username is root. To change the root password, edit this variable and restart.
      - DEFAULT_ROOT_PSW=1234
      # API base URL of the AI model. Be sure to append /v1. Defaults to the OneAPI address.
      - OPENAI_BASE_URL=http://oneapi:3000/v1
      # API key of the AI model. (Defaults to OneAPI's quick-start key; change it promptly once testing succeeds.)
      - CHAT_API_KEY=sk-fastgpt
      # Maximum number of database connections
      - DB_MAX_LINK=30
      # Secret for login tokens
      - TOKEN_KEY=any
      # Root key, commonly used for initialization requests during upgrades
      - ROOT_KEY=root_key
      # Secret for file read tokens
      - FILE_TOKEN_KEY=filetoken
      # MongoDB connection parameters. Username myusername, password mypassword.
      - MONGODB_URI=mongodb://myusername:mypassword@mongo:27017/fastgpt?authSource=admin
      # Milvus connection parameters (the same variables are used for Zilliz)
      - MILVUS_ADDRESS=http://milvusStandalone:19530
      - MILVUS_TOKEN=none
      # Sandbox address
      - SANDBOX_URL=http://sandbox:3000
      # Log level: debug, info, warn, error
      - LOG_LEVEL=info
      - STORE_LOG_LEVEL=warn
    volumes:
      - ./config.json:/app/data/config.json
  # oneapi
  oneapi:
    container_name: oneapi
    image: ghcr.io/songquanpeng/one-api:v0.6.7
    #image: justsong/one-api:latest
    # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/one-api:v0.6.6 # Alibaba Cloud
    ports:
      - 3001:3000
    #depends_on:
    #  - mysql_my
    networks:
      - fastgpt
    #restart: always
    environment:
      # MySQL connection parameters
      #- SQL_DSN=root:oneapimmysql@tcp(mysql:3306)/oneapi1
      - SQL_DSN=root:root@tcp(10.0.2.218:3306)/oneapi
      #- SQL_DSN=root:root@tcp(host.docker.internal:3306)/oneapi
      # Secret used to encrypt login sessions
      - SESSION_SECRET=oneapikey
      # In-memory cache
      - MEMORY_CACHE_ENABLED=true
      # Enable batched updates to reduce the number of database round trips
      - BATCH_UPDATE_ENABLED=true
      # Batch update interval
      - BATCH_UPDATE_INTERVAL=10
      # Initial root token (change it after deployment, otherwise it is easily leaked)
      - INITIAL_ROOT_TOKEN=fastgpt
    volumes:
      - ./oneapi:/data
networks:
  fastgpt:
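The comments at the top of the file warn that credentials must be changed in more than one place. As a rough illustration, the sketch below derives the MONGODB_URI value from the same username and password given to the mongo service, so the two stay in sync. The helper name `build_mongodb_uri` is hypothetical and not part of FastGPT; note that the mongo entrypoint script in the file above also hardcodes the credentials and would need the same change.

```python
from urllib.parse import quote_plus


def build_mongodb_uri(username: str, password: str,
                      host: str = "mongo", port: int = 27017,
                      database: str = "fastgpt") -> str:
    """Build a MONGODB_URI string from the credentials used by the mongo service.

    Hypothetical helper for illustration; defaults mirror the compose file above.
    """
    # Percent-encode the credentials so characters such as '@' or ':' do not break the URI.
    user = quote_plus(username)
    pwd = quote_plus(password)
    return f"mongodb://{user}:{pwd}@{host}:{port}/{database}?authSource=admin"


# With the default credentials this reproduces the value used in docker-compose.yml.
print(build_mongodb_uri("myusername", "mypassword"))
```

If the password contains reserved characters, the encoded form is what should go into the environment variable.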

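- Start the containers

docker-compose up -d

- Log in to OneAPI

OneAPI is available at ip:3001. The default username is root and the default password is 123456.

- Log in to FastGPT

FastGPT is available at ip:3000. The default username is root, and the password is the value of DEFAULT_ROOT_PSW set in the environment section of docker-compose.yml.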
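Before logging in, it can help to confirm that both web interfaces respond. The following is a minimal sketch using only the standard library; the function names `service_urls` and `is_up` are made up for this example, and the ports are the ones mapped in the compose file (3000 for FastGPT, 3001 for OneAPI).

```python
import urllib.error
import urllib.request


def service_urls(host: str) -> dict:
    """Return the URLs of the two web interfaces for a given host or IP."""
    return {
        "FastGPT": f"http://{host}:3000",
        "OneAPI": f"http://{host}:3001",
    }


def is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP server answers at url, whatever the status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status, so it is running.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, and similar.
        return False


# Example usage (replace 127.0.0.1 with the server's IP):
# for name, url in service_urls("127.0.0.1").items():
#     print(name, url, "up" if is_up(url) else "down")
```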

2. Install Ollama and run an LLM

Reference: Ollama Chinese documentation

2.1. Install Ollama

docker pull ollama/ollama:latest

docker run -d --gpus=all -v /media/geekplusa/GeekPlusA1/ai/models/llm/models/ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

# Alternatively, run this instead of the command above to keep the model loaded in memory (VRAM)

docker run -d --gpus=all -e OLLAMA_KEEP_ALIVE=-1 -v /media/geekplusa/GeekPlusA1/ai/models/llm/models/ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

2.2. Download a model with Ollama

- Run the qwen:7b model

docker exec -it ollama ollama run qwen:7b

- Test

curl http://localhost:11434/api/chat -d '{
  "model": "qwen:7b",
  "messages": [
    {
      "role": "user",
      "content": "Who are you?"
    }
  ]
}'
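The same test can be issued from Python. This is a minimal sketch with the standard library only; `build_chat_request` and `chat` are names chosen for this example. It sets "stream" to false so that Ollama returns one JSON object instead of a stream of chunks, and it assumes Ollama is listening on localhost:11434 as in the docker run command above.

```python
import json
import urllib.request


def build_chat_request(prompt: str, model: str = "qwen:7b",
                       base_url: str = "http://localhost:11434") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response rather than a stream
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=120) as resp:
        return json.loads(resp.read())["message"]["content"]


# Example usage (requires a running Ollama container with qwen:7b pulled):
# print(chat("Who are you?"))
```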

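3. Install an open-source text embedding model

Because the M3E model cannot be used commercially, it is replaced here with a commercially usable BGE model. Note that the download step below fetches bge-small-zh-v1.5, while the serving script loads "bge-m3"; make the name in the script match the model you actually downloaded.

- Download the bge-small-zh-v1.5 model

git clone https://www.modelscope.cn/Xorbits/bge-small-zh-v1.5.git
cd bge-small-zh-v1.5
wget https://www.modelscope.cn/models/Xorbits/bge-small-zh-v1.5/resolve/master/pytorch_model.bin

- Start the model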

from sentence_transformers import SentenceTransformer
import torch
from flask import Flask, request, jsonify
import os
import threading

# Name or local path of the embedding model to load
m3e = SentenceTransformer("bge-m3")
if torch.cuda.is_available():
    m3e = m3e.to("cuda")
    print("Running the M3 model in GPU mode")
else:
    print("Running the M3 model in CPU mode")

os.environ["FLASK_ENV"] = "development"
app = Flask(__name__)


# Define Flask routes
@app.route("/")
def index():
    return "This is the BGE-M3 API. Send a POST request to /v1/embeddings"


@app.route('/v1/embeddings', methods=['POST'])
def embeddings():
    data = request.json
    input_text = data.get('input')
    print(f"/v1/embeddings received a request, input text: {input_text}")
    model = data.get('model')
    if model is None:
        model = "BAAI/bge-m3"
    if input_text is None:
        return jsonify(error="No input text provided"), 400
    # The input can be a single string or a list of strings
    sentences = input_text if isinstance(input_text, list) else [input_text]
    vectors = m3e.encode(sentences, convert_to_tensor=True, show_progress_bar=True)
    vectors = vectors.tolist()
    data = [{"object": "embedding", "embedding": x, "index": i} for i, x in enumerate(vectors)]
    # Mimic the response structure of the OpenAI API
    response = {
        "object": "list",
        "data": data,
        "model": model,
        "usage": {
            "prompt_tokens": 0,
            "total_tokens": 0
        }
    }
    return jsonify(response)


if __name__ == '__main__':
    # Start the Flask server in a new thread (Flask's default port is 5000)
    threading.Thread(target=app.run, kwargs={"use_reloader": False, "debug": True, "host": "0.0.0.0"}).start()
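Once the server is running, the endpoint can be exercised with a small client. The sketch below is an illustration, not part of the original setup: it assumes the server listens on Flask's default port 5000 (the script above does not pass a port), and the helper names `embed` and `cosine_similarity` are made up for this example. Comparing two embeddings by cosine similarity is a quick sanity check that the vectors are meaningful.

```python
import json
import math
import urllib.request


def embed(texts, base_url: str = "http://localhost:5000", model: str = "BAAI/bge-m3"):
    """POST texts to /v1/embeddings and return one vector per input text."""
    req = urllib.request.Request(
        f"{base_url}/v1/embeddings",
        data=json.dumps({"input": texts, "model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.loads(resp.read())
    return [item["embedding"] for item in body["data"]]


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Example usage (requires the embedding server to be running):
# v1, v2 = embed(["local knowledge base", "private document search"])
# print(cosine_similarity(v1, v2))
```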

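Tips

Deploy FastGPT and OneAPI on Alibaba Cloud and forward the AutoDL Ollama port to the local machine

- Run in the background

# 17001 is the local port; 127.0.0.1:11434 is the Ollama port on the AutoDL machine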

ssh -CNg -f -N -L 17001:127.0.0.1:11434 [email protected] -p 35040
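Because the tunnel runs in the background, a failure is easy to miss. A minimal sketch for checking that the forwarded port accepts connections is shown here; `port_open` is a name chosen for this example, and 17001 is the local port from the ssh command above.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


# Example usage: check the local end of the tunnel.
# print(port_open("127.0.0.1", 17001))
```

A successful connection only shows that the local end of the tunnel is listening; an actual request to Ollama through the port is still the definitive test.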

Run m3e with Docker

docker run -d --name m3e -p 17002:6008 --gpus all -e sk-key=123321 registry.cn-hangzhou.aliyuncs.com/fastgpt_docker/m3e-large-api

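Troubleshooting

1. OneAPI fails to start after docker-compose up -d

OneAPI depends on MySQL, and the failure was caused by MySQL. Starting a separate MySQL container and pointing OneAPI at it resolved the problem.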
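When diagnosing this, it is useful to know exactly which MySQL host and port OneAPI is configured to use. The sketch below splits a SQL_DSN value of the form used in the compose file into its parts; the helper `parse_sql_dsn` and its regular expression are written for this example and only cover the simple `user:password@tcp(host:port)/database` form.

```python
import re

# Matches DSNs such as root:root@tcp(10.0.2.218:3306)/oneapi
DSN_PATTERN = re.compile(
    r"^(?P<user>[^:@]+):(?P<password>[^@]*)"
    r"@tcp\((?P<host>[^:)]+):(?P<port>\d+)\)"
    r"/(?P<database>\w+)$"
)


def parse_sql_dsn(dsn: str) -> dict:
    """Split a simple MySQL DSN into user, password, host, port, and database."""
    match = DSN_PATTERN.match(dsn)
    if match is None:
        raise ValueError(f"unsupported DSN format: {dsn!r}")
    parts = match.groupdict()
    parts["port"] = int(parts["port"])
    return parts


# The value from the compose file in this article.
print(parse_sql_dsn("root:root@tcp(10.0.2.218:3306)/oneapi"))
```

The extracted host and port are the ones that must be reachable from inside the oneapi container.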

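2. Test fails after configuring a model in OneAPI

{
  "message": "do request failed: Post \"http://localhost:11434/api/chat\": dial tcp [::1]:11434: connect: connection refused",
  "success": false,
  "time": 0
}

OneAPI runs inside a container, where localhost refers to the container itself rather than the host running Ollama. When configuring the channel, enter the machine's real IP address instead of localhost or 127.0.0.1. The correct configuration is shown in the figure.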
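This kind of mistake can be caught before saving the configuration. The sketch below flags base URLs that point at a loopback address and rewrites them to a given host IP; the function names are hypothetical, and 10.0.2.218 is only reused from the SQL_DSN in this article as an example address.

```python
import ipaddress
from urllib.parse import urlparse


def is_loopback_url(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host.lower() == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal, so it is an ordinary hostname.
        return False


def use_host_ip(url: str, host_ip: str) -> str:
    """Replace a loopback host in url with host_ip, keeping the port."""
    if not is_loopback_url(url):
        return url
    parts = urlparse(url)
    netloc = host_ip if parts.port is None else f"{host_ip}:{parts.port}"
    return parts._replace(netloc=netloc).geturl()


print(use_host_ip("http://localhost:11434", "10.0.2.218"))
```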