MapReduce Data Flow and Execution Flow

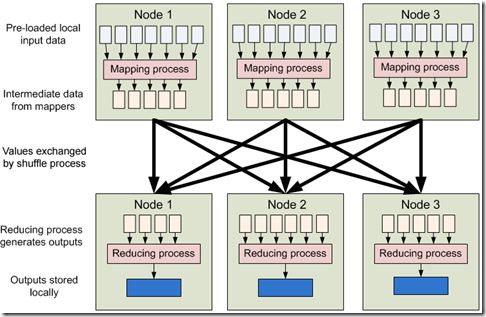

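MapReduce data flow:

- Input files are loaded from local storage up front.

- The map phase processes them and produces intermediate results.

- The shuffle step sends intermediate results that share a key to the same node for processing.

- The reduce phase processes them and produces the output.

- The output is saved to HDFS.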
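
The five steps above can be sketched in memory with plain Java. This is only an illustration under simplifying assumptions: it uses no Hadoop classes, runs in a single process, and the class and method names (`MiniMapReduce`, `map`, `shuffle`, `reduce`, `run`) are made up for this sketch. It counts words to show how intermediate pairs with the same key end up being reduced together.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the data flow; hypothetical names, no Hadoop involved.
class MiniMapReduce {

    // Map: turn one input line into intermediate (word, 1) pairs.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(Map.entry(word, 1));
            }
        }
        return out;
    }

    // Shuffle: collect all values that share a key, with keys kept in sorted order.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // Reduce: collapse the values of one key into a single result.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Run map, shuffle and reduce over the given input lines.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> intermediate = new ArrayList<>();
        for (String line : lines) {
            intermediate.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        shuffle(intermediate).forEach((key, values) -> result.put(key, reduce(values)));
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(List.of("a b a", "b c"))); // prints {a=2, b=2, c=1}
    }
}
```

In a real job the shuffle moves data between nodes and the result is written to HDFS; here both are replaced by in-memory maps.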

MAP

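In the map phase, the InputFormat set with job.setInputFormatClass divides the input data set into small chunks called splits, and also supplies a RecordReader implementation. The default is TextInputFormat, whose RecordReader uses the byte offset of each line as the key and the text of that line as the value. This is why the input of a custom Mapper is `<LongWritable, Text>`.

The framework then calls the map method of the custom Mapper once for each `<LongWritable, Text>` pair. The result is a list of `<MapOutputKeyClass, MapOutputValueClass>` pairs, typed according to the output key class and output value class of the custom Mapper.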
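
To make the (offset, line) records concrete, here is a small plain-Java sketch of how such pairs could be derived from a text file. It is not the TextInputFormat implementation: the class `LineOffsets` and its `records` method are hypothetical, and the sketch assumes UTF-8 text with `\n` line endings and ignores split boundaries.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists "offset<TAB>line" records the way a line-oriented reader would see them.
class LineOffsets {

    static List<String> records(String fileContent) {
        List<String> out = new ArrayList<>();
        long offset = 0; // byte offset of the current line's first byte
        for (String line : fileContent.split("\n")) {
            out.add(offset + "\t" + line);
            // Advance past the line's bytes plus one byte for the '\n' terminator.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return out;
    }

    public static void main(String[] args) {
        // Each record is what a map call would receive as (key, value).
        for (String record : records("1 2\n3 4\n10 20\n")) {
            System.out.println(record);
        }
    }
}
```

For the input in `main`, the keys are 0, 4 and 8, and the values are the three lines of text.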

Partitioner

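At the end of the map phase, the class set with job.setPartitionerClass partitions this list, and each partition maps to one reducer. Within each partition, the pairs are then sorted with the key comparator class set with job.setSortComparatorClass.

As you can see, this is in itself a secondary sort: the data is ordered first by partition and then by key within each partition.

If no key comparator class is set with job.setSortComparatorClass, the comparator registered for the key class is used; if none is registered, the key's own compareTo method is used.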
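
The partition-then-sort behaviour can be sketched with plain Java collections. This is an illustration only, not Hadoop code: `PartitionAndSort` and its methods are hypothetical, keys are modelled as `int[]{first, second}`, and the partition rule (`first` modulo the partition count) is an assumption chosen so that equal `first` values always land in the same partition.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch: partition pairs by their first field, then sort each partition by the full key.
class PartitionAndSort {

    // Partition rule (assumed for this sketch): depends only on the first field.
    static int partition(int first, int numPartitions) {
        return Math.floorMod(first, numPartitions);
    }

    static List<List<int[]>> partitionAndSort(List<int[]> pairs, int numPartitions) {
        List<List<int[]>> partitions = new ArrayList<>();
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<>());
        }
        for (int[] p : pairs) {
            partitions.get(partition(p[0], numPartitions)).add(p);
        }
        // Plays the role of the sort comparator: order by first, then by second.
        Comparator<int[]> byFirstThenSecond =
                Comparator.<int[]>comparingInt(p -> p[0]).thenComparingInt(p -> p[1]);
        for (List<int[]> part : partitions) {
            part.sort(byFirstThenSecond);
        }
        return partitions;
    }

    static String format(List<List<int[]>> partitions) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < partitions.size(); i++) {
            if (i > 0) sb.append(' ');
            sb.append(i).append("=[");
            List<int[]> part = partitions.get(i);
            for (int j = 0; j < part.size(); j++) {
                if (j > 0) sb.append(' ');
                sb.append('(').append(part.get(j)[0]).append(',').append(part.get(j)[1]).append(')');
            }
            sb.append(']');
        }
        return sb.toString();
    }

    static String demo() {
        List<int[]> pairs = List.of(new int[]{3, 9}, new int[]{2, 5}, new int[]{3, 1},
                new int[]{2, 1}, new int[]{4, 3});
        return format(partitionAndSort(pairs, 2));
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints 0=[(2,1) (2,5) (4,3)] 1=[(3,1) (3,9)]
    }
}
```

Each partition is what one reducer would eventually receive, already ordered by the full key.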

Shuffle:

Each partition is transferred to the reducer it was assigned to by the partitioner.

Sort

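In the reduce phase, once a reducer has received all the map output assigned to it, it again sorts all the key/value pairs with the key comparator class set with job.setSortComparatorClass.

It then builds a value iterator for each key. This is where grouping comes in, using the grouping comparator class set with job.setGroupingComparatorClass. Any two keys that this comparator considers equal belong to the same group, and their values are placed in the same value iterator.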
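
The grouping step can be sketched as a single pass over keys that are already sorted. This is a plain-Java illustration, not the framework's code: `GroupingSketch` is a hypothetical name, keys are modelled as `int[]{first, second}`, and each key's value is assumed to be its `second` field, as in the secondary sort example later in this post.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical sketch: consecutive sorted keys that the grouping comparator treats as equal share one value list.
class GroupingSketch {

    static List<String> group(List<int[]> sortedKeys, Comparator<int[]> grouping) {
        List<String> groups = new ArrayList<>();
        int[] groupKey = null;                      // first key of the current group
        StringBuilder values = new StringBuilder(); // values collected for the current group
        for (int[] key : sortedKeys) {
            if (groupKey != null && grouping.compare(groupKey, key) != 0) {
                // The comparator sees a new group: emit the finished one and start over.
                groups.add(groupKey[0] + " -> [" + values + "]");
                values.setLength(0);
                groupKey = null;
            }
            if (groupKey == null) {
                groupKey = key;
            }
            if (values.length() > 0) {
                values.append(", ");
            }
            values.append(key[1]); // the value paired with this key
        }
        if (groupKey != null) {
            groups.add(groupKey[0] + " -> [" + values + "]");
        }
        return groups;
    }

    static List<String> demo() {
        List<int[]> sorted = List.of(new int[]{2, 1}, new int[]{2, 5}, new int[]{3, 1},
                new int[]{3, 9}, new int[]{4, 3});
        // Grouping comparator: compare the first field only.
        return group(sorted, Comparator.comparingInt(k -> k[0]));
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [2 -> [1, 5], 3 -> [1, 9], 4 -> [3]]
    }
}
```

Because the keys were sorted by (first, second) beforehand, the values inside each group come out in ascending order, which is the point of a secondary sort.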

Reduce

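Finally, the reduce method of the Reducer is called, once for each key together with its value iterator.

As with the Mapper, the input and output types must match those declared by the custom Reducer.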

A concrete example:

This is the secondary sort example from the Hadoop MapReduce examples, slightly modified and with comments added.

    import java.io.DataInput;
    import java.io.DataOutput;
    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.RawComparator;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.io.WritableComparable;
    import org.apache.hadoop.io.WritableComparator;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Partitioner;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.util.GenericOptionsParser;

    /**
     * This is an example Hadoop Map/Reduce application.
     * It reads text input files that must contain two integers per line.
     * The output is sorted by the first and second number and grouped on the
     * first number.
     *
     * To run: bin/hadoop jar build/hadoop-examples.jar secondarysort
     * <i>in-dir</i> <i>out-dir</i>
     */
    public class SecondarySort {

        /**
         * Define a pair of integers that are writable.
         * They are serialized in a byte comparable format.
         */
        public static class IntPair implements WritableComparable<IntPair> {
            private int first = 0;
            private int second = 0;

            /**
             * Set the left and right values.
             */
            public void set(int left, int right) {
                first = left;
                second = right;
            }

            public int getFirst() {
                return first;
            }

            public int getSecond() {
                return second;
            }

            /**
             * Read the two integers.
             * Encoded as: MIN_VALUE -> 0, 0 -> -MIN_VALUE, MAX_VALUE -> -1
             */
            @Override
            public void readFields(DataInput in) throws IOException {
                first = in.readInt() + Integer.MIN_VALUE;
                second = in.readInt() + Integer.MIN_VALUE;
            }

            @Override
            public void write(DataOutput out) throws IOException {
                out.writeInt(first - Integer.MIN_VALUE);
                out.writeInt(second - Integer.MIN_VALUE);
            }

            // The hashCode() method is used by the HashPartitioner (the default
            // partitioner in MapReduce)
            @Override
            public int hashCode() {
                return first * 157 + second;
            }

            @Override
            public boolean equals(Object right) {
                if (right instanceof IntPair) {
                    IntPair r = (IntPair) right;
                    return r.first == first && r.second == second;
                } else {
                    return false;
                }
            }

            /** A Comparator that compares serialized IntPair. */
            public static class Comparator extends WritableComparator {
                public Comparator() {
                    super(IntPair.class);
                }

                // Compares serialized keys; called many times during sorting
                public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2,
                        int l2) {
                    return compareBytes(b1, s1, l1, b2, s2, l2);
                }
            }

            static {
                // Note: without this registration, keys are compared with
                // key.compareTo instead
                // register this comparator
                WritableComparator.define(IntPair.class, new Comparator());
            }

            // Used to compare keys when no WritableComparator is registered
            @Override
            public int compareTo(IntPair o) {
                if (first != o.first) {
                    return first < o.first ? -1 : 1;
                } else if (second != o.second) {
                    return second < o.second ? -1 : 1;
                } else {
                    return 0;
                }
            }
        }

        /**
         * Partition based on the first part of the pair.
         */
        public static class FirstPartitioner extends
                Partitioner<IntPair, IntWritable> {
            @Override
            public int getPartition(IntPair key, IntWritable value,
                    int numPartitions) {
                return Math.abs(key.getFirst() * 127) % numPartitions;
            }
        }

        /**
         * Compare only the first part of the pair, so that reduce is called once
         * for each value of the first part.
         */
        public static class FirstGroupingComparator implements
                RawComparator<IntPair> {

            // Compares serialized keys; called many times. Only the first
            // Integer.SIZE / 8 = 4 bytes (the "first" field) are compared.
            @Override
            public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) {
                return WritableComparator.compareBytes(b1, s1, Integer.SIZE / 8,
                        b2, s2, Integer.SIZE / 8);
            }

            // Never observed being called in this job; it must still be
            // implemented because RawComparator extends java.util.Comparator.
            @Override
            public int compare(IntPair o1, IntPair o2) {
                int l = o1.getFirst();
                int r = o2.getFirst();
                return l == r ? 0 : (l < r ? -1 : 1);
            }
        }

        /**
         * Read two integers from each line and generate a key, value pair
         * as ((left, right), right).
         */
        public static class MapClass extends
                Mapper<LongWritable, Text, IntPair, IntWritable> {

            private final IntPair key = new IntPair();
            private final IntWritable value = new IntWritable();

            @Override
            public void map(LongWritable inKey, Text inValue, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(inValue.toString());
                int left = 0;
                int right = 0;
                if (itr.hasMoreTokens()) {
                    left = Integer.parseInt(itr.nextToken());
                    if (itr.hasMoreTokens()) {
                        right = Integer.parseInt(itr.nextToken());
                    }
                    key.set(left, right);
                    value.set(right);
                    context.write(key, value);
                }
            }
        }

        /**
         * A reducer class that emits a separator line for each group, followed
         * by one (first, value) pair for every value in the group.
         */
        public static class Reduce extends
                Reducer<IntPair, IntWritable, Text, IntWritable> {
            private static final Text SEPARATOR = new Text(
                    "------------------------------------------------");
            private final Text first = new Text();

            @Override
            public void reduce(IntPair key, Iterable<IntWritable> values,
                    Context context) throws IOException, InterruptedException {
                context.write(SEPARATOR, null);
                first.set(Integer.toString(key.getFirst()));
                for (IntWritable value : values) {
                    context.write(first, value);
                }
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            String[] ars = new String[] { "hdfs://data2.kt:8020/test/input",
                    "hdfs://data2.kt:8020/test/output" };
            conf.set("fs.default.name", "hdfs://data2.kt:8020/");

            String[] otherArgs = new GenericOptionsParser(conf, ars)
                    .getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: secondarysort <in> <out>");
                System.exit(2);
            }
            Job job = new Job(conf, "secondary sort");
            job.setJarByClass(SecondarySort.class);
            job.setMapperClass(MapClass.class);

            // No combiner: Reduce outputs <Text, IntWritable>, which does not
            // match the reducer's input type <IntPair, IntWritable>
            // job.setCombinerClass(Reduce.class);
            // Reducer class
            job.setReducerClass(Reduce.class);
            // Partitioner
            job.setPartitionerClass(FirstPartitioner.class);
            // Sort comparator: after partitioning, each partition is sorted with
            // the key comparator class set by job.setSortComparatorClass.
            // The reducer also uses it to sort all pairs once it has received
            // every map output assigned to it.
            // job.setSortComparatorClass(GroupingComparator2.class);

            // Grouping comparator
            job.setGroupingComparatorClass(FirstGroupingComparator.class);

            // the map output is IntPair, IntWritable
            // A custom key type requires setting MapOutputKeyClass explicitly
            job.setMapOutputKeyClass(IntPair.class);
            // job.setMapOutputValueClass(IntWritable.class);

            // the reduce output is Text, IntWritable
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));
            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }

    }
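
The example's `write` method stores `value - Integer.MIN_VALUE` rather than the raw int, which is what lets the raw comparator order keys by comparing bytes. The following plain-Java sketch shows why; it uses no Hadoop classes, `SignFlipEncoding` is a hypothetical name, and it assumes the byte comparison is an unsigned, left-to-right one over big-endian bytes.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

// Hypothetical sketch: subtracting Integer.MIN_VALUE flips the sign bit, so
// unsigned byte order of the encoded value matches signed integer order.
class SignFlipEncoding {

    // Big-endian bytes of (value - Integer.MIN_VALUE); ByteBuffer is big-endian by default.
    static byte[] encode(int value) {
        return ByteBuffer.allocate(4).putInt(value - Integer.MIN_VALUE).array();
    }

    // Unsigned lexicographic comparison of the encoded bytes, normalised to -1, 0 or 1.
    static int compareEncoded(int a, int b) {
        return Integer.signum(Arrays.compareUnsigned(encode(a), encode(b)));
    }

    // Same comparison on the raw, unencoded bytes, for contrast.
    static int compareUnencoded(int a, int b) {
        byte[] rawA = ByteBuffer.allocate(4).putInt(a).array();
        byte[] rawB = ByteBuffer.allocate(4).putInt(b).array();
        return Integer.signum(Arrays.compareUnsigned(rawA, rawB));
    }

    public static void main(String[] args) {
        System.out.println(compareEncoded(-5, 3));   // prints -1: -5 sorts before 3
        System.out.println(compareUnencoded(-5, 3)); // prints 1: raw bytes would put -5 after 3
    }
}
```

Without the encoding, negative numbers would sort after positive ones, because their leading byte has the high bit set.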

Reposted from: http://www.open-open.com/lib/view/open1385602212718.html