Oracle 10g RAC on VMware Workstation 9.0 and RHEL 5.8: installation guide and troubleshooting summary

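(1) Virtual machines: add three network adapters, eth0, eth1, and eth2, used for the internal network, the heartbeat (private interconnect), and the external network respectively.

RAC1: internal 192.168.1.10/24, heartbeat 192.168.2.10/24, VIP 192.168.1.100, external DHCP

RAC2: internal 192.168.1.11/24, heartbeat 192.168.2.11/24, VIP 192.168.1.101, external DHCP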

(2) Disk size: 20 GB. Use LVM for the disk when installing the system, so that it can be extended later if space runs short.

Operating system: RHEL 5.8

(3) Shared disks

OCR disk: 100 MB

Voting disk: 100 MB

Two disks for ASM:

asmdisk1: 2 GB

asmdisk2: 2 GB

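II. Configuration

1. Primary node configuration

(1) Network settings

Edit the network configuration files under /etc/sysconfig/network-scripts so that eth0, eth1, and eth2 match the addresses listed above.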
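The guide does not show the interface files themselves. The sketch below prints a minimal static configuration for one interface; the key names are the usual RHEL ifcfg keys, and the function name render_ifcfg and the 255.255.255.0 netmask (derived from the /24 above) are assumptions made for illustration. eth2 is assumed to stay on DHCP.

```shell
#!/bin/bash
# Print an ifcfg-style file for a statically configured interface.
# Usage: render_ifcfg DEVICE IPADDR NETMASK
render_ifcfg() {
    local device="$1" ipaddr="$2" netmask="$3"
    cat <<EOF
DEVICE=${device}
BOOTPROTO=static
ONBOOT=yes
IPADDR=${ipaddr}
NETMASK=${netmask}
EOF
}

# Example for node 1 (run as root, then restart networking):
#   render_ifcfg eth0 192.168.1.10 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth0
#   render_ifcfg eth1 192.168.2.10 255.255.255.0 > /etc/sysconfig/network-scripts/ifcfg-eth1
#   service network restart
```

Printing to standard output first makes it easy to review the result before overwriting a real interface file.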

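(2) Firewall and SELinux

Stop the firewall (run chkconfig iptables off as well if it should stay off after a reboot):

service iptables stop

Disable SELinux (requires a reboot):

Edit /etc/selinux/config

and set: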

SELINUX=disabled
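The same edit can be scripted. This is a minimal sketch, not part of the original procedure; the function name disable_selinux is hypothetical, and the file path is a parameter so the function can be tried on a copy first.

```shell
#!/bin/bash
# Set SELINUX=disabled in an SELinux config file (default: /etc/selinux/config).
# Usage: disable_selinux [FILE]
disable_selinux() {
    local cfg="${1:-/etc/selinux/config}" tmp
    tmp="$(mktemp)" || return 1
    # Rewrite only the active SELINUX= line; comments and SELINUXTYPE= are left alone.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
    rm -f "$tmp"
    # Succeed only if the setting is now present.
    grep -q '^SELINUX=disabled$' "$cfg"
}
```

The change in the file only takes effect after the reboot; setenforce 0 switches the running system to permissive mode in the meantime.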

(3) Set the hostname

Edit /etc/sysconfig/network

and set:

HOSTNAME=db10a

(4) Edit /etc/hosts

Add:

##Public Network - (eth0)
192.168.1.10 db10a
192.168.1.11 db10b
##Private Interconnect - (eth1)
192.168.2.10 db10a-priv
192.168.2.11 db10b-priv
##Public Virtual IP (VIP) addresses - (eth0)
192.168.1.100 db10a-vip
192.168.1.101 db10b-vip
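Because every later step depends on these six names resolving on both nodes, a quick check can save time. The sketch below is an illustration only; check_hosts is a hypothetical helper, and it assumes the names are exactly the ones listed above.

```shell
#!/bin/bash
# Report which of the expected RAC host names are missing from a hosts file.
# Usage: check_hosts [FILE]   (default: /etc/hosts)
check_hosts() {
    local file="${1:-/etc/hosts}" name missing=0
    for name in db10a db10b db10a-priv db10b-priv db10a-vip db10b-vip; do
        # Look for the name as a whole field (after the address) on a non-comment line.
        if ! awk -v n="$name" '
                !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

Running check_hosts with no argument on each node inspects /etc/hosts; a non-zero exit status means at least one entry is missing.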

(5) Create the required groups and user

groupadd -g 500 oinstall
groupadd -g 501 dba
useradd -m -u 500 -g oinstall -G dba -d /home/oracle -s /bin/bash -c "Oracle Software Owner" oracle

Set the password:

passwd oracle

(6) Create the required directories and set their ownership and permissions (media holds the Oracle installation software)

mkdir -p /oracle/media
chown -R oracle:oinstall /oracle/media/
chmod -R 775 /oracle/media/
mkdir -p /u01/crs1020
chown -R oracle:oinstall /u01/crs1020
chmod -R 775 /u01/crs1020/
mkdir -p /u01/app/oracle
chown -R oracle:oinstall /u01/app/oracle/
chmod -R 775 /u01/app/oracle/
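The nine commands repeat the same three operations for three paths, so they can be folded into a loop. This is a sketch under assumptions: make_oracle_dirs and its optional PREFIX argument are hypothetical, added so the loop can be tried in a scratch directory, and the chown is skipped when not running as root or when the oracle user does not exist yet.

```shell
#!/bin/bash
# Create the three directories from this step, optionally under a prefix.
# Usage: make_oracle_dirs [PREFIX]   (no PREFIX means the real paths)
make_oracle_dirs() {
    local prefix="${1:-}" dir
    for dir in /oracle/media /u01/crs1020 /u01/app/oracle; do
        mkdir -p "${prefix}${dir}" || return 1
        chmod -R 775 "${prefix}${dir}" || return 1
        # chown needs root and an existing oracle user; skip it otherwise.
        if [ "$(id -u)" -eq 0 ] && id oracle >/dev/null 2>&1; then
            chown -R oracle:oinstall "${prefix}${dir}" || return 1
        fi
    done
}
```

On the real nodes it would be called as root with no argument, after the user and groups from step (5) exist.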

(7) Edit the environment file

su - oracle
vi .bash_profile

Replace the contents of the file with:

# .bash_profile

# Get the aliases and functions
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi

# User specific environment and startup programs
PATH=$PATH:$HOME/bin
export PATH

##################################################
# User specific environment and startup programs
##################################################
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1
export CRS_HOME=/u01/crs1020
export ORA_CRS_HOME=/u01/crs1020
export ORACLE_PATH=$ORACLE_BASE/common/oracle/sql:.:$ORACLE_HOME/rdbms/admin

##################################################
# Each RAC node must have a unique ORACLE_SID. (i.e. orcl1, orcl2,...)
##################################################
export ORACLE_SID=testdb101
export PATH=$ORA_CRS_HOME/bin:.:${JAVA_HOME}/bin:${PATH}:$HOME/bin:$ORACLE_HOME/bin
export PATH=${PATH}:/usr/bin:/bin:/usr/bin/X11:/usr/local/bin
export PATH=${PATH}:$ORACLE_BASE/common/oracle/bin:$ORA_CRS_HOME/bin:/sbin
export ORACLE_TERM=xterm
export TNS_ADMIN=$ORACLE_HOME/network/admin
export ORA_NLS10=$ORACLE_HOME/nls/data
export LD_LIBRARY_PATH=$ORACLE_HOME/lib
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib
export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/lib:/usr/lib:/usr/local/lib
export CLASSPATH=$ORACLE_HOME/JRE
export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib
export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib
export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib
export THREADS_FLAG=native
export TEMP=/tmp
export TMPDIR=/tmp

##################################################
# set NLS_LANG to avoid garbled characters in SQLPLUS
##################################################
export NLS_LANG=AMERICAN_AMERICA.WE8ISO8859P1

##################################################
# Shell setting.
##################################################
umask 022
set -o vi
export PS1="\${ORACLE_SID}@`hostname` \${PWD}$ "

##################################################
# Oracle Alias
##################################################
alias ls="ls -FA"
alias vi=vim
alias base='cd $ORACLE_BASE'
alias home='cd $ORACLE_HOME'
alias alert='tail -200f $ORACLE_BASE/admin/RACDB/bdump/alert_$ORACLE_SID.log'
alias tnsnames='vi $ORACLE_HOME/network/admin/tnsnames.ora'
alias listener='vi $ORACLE_HOME/network/admin/listener.ora'

(8) Set the kernel parameters

vi /etc/sysctl.conf

Add:

kernel.shmmax = 4294967295
kernel.shmmni = 4096
kernel.sem = 250 32000 100 128
fs.file-max = 65536
net.ipv4.ip_local_port_range = 1024 65000
net.core.rmem_default = 1048576
net.core.rmem_max = 1048576
net.core.wmem_default = 262144
net.core.wmem_max = 262144

Apply the changes:

sysctl -p
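If this step is repeated (for example on a cloned node), appending the same lines again leaves duplicate entries in /etc/sysctl.conf. A minimal sketch of an idempotent append follows; ensure_sysctl is a hypothetical helper, not something the original guide uses.

```shell
#!/bin/bash
# Append "key = value" to a sysctl file only if the key is not already set there.
# Usage: ensure_sysctl FILE KEY VALUE...
ensure_sysctl() {
    local file="$1" key="$2"
    shift 2
    # Escape the dots in the key so they match literally in the regular expression.
    local pattern="^[[:space:]]*${key//./\\.}[[:space:]]*="
    if grep -Eq "$pattern" "$file" 2>/dev/null; then
        echo "already set: $key"
    else
        echo "$key = $*" >> "$file"
        echo "added: $key"
    fi
}

# Example (as root):
#   ensure_sysctl /etc/sysctl.conf kernel.shmmni 4096
#   ensure_sysctl /etc/sysctl.conf kernel.sem 250 32000 100 128
#   sysctl -p
```

Note that the helper only checks for the presence of a key; it does not change a value that is already set to something else.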

(9) Resource limits

Edit /etc/security/limits.conf

Add:

oracle soft nproc 2047
oracle hard nproc 16384
oracle soft nofile 1024
oracle hard nofile 65536

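Add the following to /etc/pam.d/login:

session required /lib/security/pam_limits.so

For example (on a 64-bit system the module normally lives under /lib64/security; writing just pam_limits.so lets PAM locate it):

cat >> /etc/pam.d/login << EOF
session required /lib/security/pam_limits.so
EOF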

(10) Configure the hangcheck timer

cat >> /etc/rc.local << EOF
modprobe hangcheck-timer hangcheck_tick=30 hangcheck_margin=180
EOF

(11) Check for and install the required RPM packages

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' binutils \
compat-libstdc++-33 \
elfutils-libelf \
elfutils-libelf-devel \
elfutils-libelf-devel-static \
gcc \
gcc-c++ \
glibc \
glibc-common \
glibc-devel \
glibc-headers \
kernel-headers \
ksh \
libaio \
libaio-devel \
libgcc \
libgomp \
libstdc++ \
libstdc++-devel \
make \
sysstat \
unixODBC \
unixODBC-devel \
libXp

A local yum repository can be set up from the installation DVD:

a. mount /dev/cdrom /mnt
b. cat >> /etc/yum.repos.d/dvd.repo<<EOF
[dvd]
name=install dvd
baseurl=file:///mnt/Server
enabled=1
gpgcheck=0
EOF
c. yum clean all
d. yum list

Once the repository works, install the missing packages.
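The rpm query prints a line of the form "package NAME is not installed" for each missing package, so the list of packages to install can be extracted from its output. The helper below is a small sketch; the name missing_packages is hypothetical.

```shell
#!/bin/bash
# Read `rpm -q ...` output on stdin and print the names of packages
# that rpm reported as not installed, one per line.
missing_packages() {
    sed -n 's/^package \(.*\) is not installed$/\1/p'
}

# Example (as root, with the DVD repository from this step enabled):
#   rpm -q binutils gcc libaio-devel sysstat unixODBC-devel \
#       | missing_packages | xargs -r yum -y install
```

The filter only reads text, so it can be tried safely on saved rpm output before wiring it to yum.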

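(12) Configure and start the NTP service

Check that ntp is installed, add the restrict line below to /etc/ntp.conf, then start the service and enable it at boot:

rpm -qa | grep ntp
vi /etc/ntp.conf
restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap
service ntpd start
chkconfig ntpd on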

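(13) Shut down the virtual machine and add the shared disks (this is best done at the very beginning; otherwise it costs an extra reboot)

Add four disks:

OCR disk: 100 MB

Voting disk: 100 MB

ASM1: 2 GB

ASM2: 2 GB

The four added disks must not be placed on the same bus as the local disk.

Add the following to the .vmx file of node 1 before powering it on (node 2 needs the same parameters; see the troubleshooting section):

disk.locking="false"
diskLib.dataCacheMaxSize = "0"
diskLib.dataCacheMaxReadAheadSize = "0"
diskLib.DataCacheMinReadAheadSize = "0"
diskLib.dataCachePageSize = "4096"
diskLib.maxUnsyncedWrites = "0"
scsi1:0.deviceType = "disk"
scsi1:1.deviceType = "disk"
scsi1:2.deviceType = "disk"
scsi1:3.deviceType = "disk"
disk.EnableUUID ="true"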

Partition the four disks:

fdisk /dev/sdb

The resulting partitions are sdb1, sdc1, sdd1, and sde1.

Bind the raw devices with udev

Edit /etc/udev/rules.d/60-raw.rules

Add:

ACTION=="add", KERNEL=="sdb", RUN+="/bin/raw /dev/raw/raw1 %N"
ACTION=="add", KERNEL=="sdc", RUN+="/bin/raw /dev/raw/raw2 %N"
ACTION=="add", KERNEL=="sdd", RUN+="/bin/raw /dev/raw/raw3 %N"
ACTION=="add", KERNEL=="sde", RUN+="/bin/raw /dev/raw/raw4 %N"
KERNEL=="raw[1-4]", OWNER="oracle", GROUP="oinstall", MODE="660"

Restart udev:

start_udev

Verify:

[root@db10a ~]# raw -qa
/dev/raw/raw1: bound to major 8, minor 16
/dev/raw/raw2: bound to major 8, minor 32
/dev/raw/raw3: bound to major 8, minor 48
/dev/raw/raw4: bound to major 8, minor 64
[root@db10a ~]# ls -l /dev/raw
total 0
crw-rw---- 1 oracle oinstall 162, 1 Sep 9 09:46 raw1
crw-rw---- 1 oracle oinstall 162, 2 Sep 9 09:46 raw2
crw-rw---- 1 oracle oinstall 162, 3 Sep 9 09:46 raw3
crw-rw---- 1 oracle oinstall 162, 4 Sep 9 09:46 raw4

Note the permissions and the owner and group.

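With the udev binding above, the installer reported that the disks were not shared,

so the rawdevices method was used for the binding instead.

Add the following to /etc/sysconfig/rawdevices:

/dev/raw/raw1 /dev/sdb1
/dev/raw/raw2 /dev/sdc1
/dev/raw/raw3 /dev/sdd1
/dev/raw/raw4 /dev/sde1

Add the permission commands to /etc/rc.d/rc.local so that the raw devices get the correct ownership and mode after every reboot:

chown -R oracle:oinstall /dev/raw/raw*
chmod 660 /dev/raw/raw*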
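The mapping lines follow a fixed pattern (raw device N bound to the Nth partition), so they can be generated rather than typed. This is only a sketch: rawdevices_map is a hypothetical helper, and it assumes the partitions are passed in the order they should be numbered.

```shell
#!/bin/bash
# Print /etc/sysconfig/rawdevices lines, numbering the raw devices from 1
# in the order the block devices are given.
# Usage: rawdevices_map /dev/sdb1 /dev/sdc1 ...
rawdevices_map() {
    local n=1 dev
    for dev in "$@"; do
        echo "/dev/raw/raw${n} ${dev}"
        n=$((n + 1))
    done
}

# Example (as root):
#   rawdevices_map /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 >> /etc/sysconfig/rawdevices
#   service rawdevices restart
#   raw -qa
```

Both nodes need identical mappings, so generating the lines from the same argument list on each node avoids typing mistakes.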

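2. Secondary node configuration

(1) Copy or clone node 1

(2) Then change the following:

IP addresses

Hostname

The instance name (ORACLE_SID) in the oracle user's profile (testdb102 here)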

3. Configure SSH user equivalence

On the primary node (node 1):

su - oracle
mkdir ~/.ssh
ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/oracle/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/oracle/.ssh/id_rsa.
Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
The key fingerprint is:
df:1c:ee:d1:98:88:fb:b8:a4:f6:c5:46:c6:98:01:20 oracle@rac1
ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/oracle/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/oracle/.ssh/id_dsa.
Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.
The key fingerprint is:
79:7f:a4:2f:63:79:0e:3f:c6:5b:6a:f4:28:68:cb:8e oracle@rac1

Repeat the same steps on the secondary node so that the two nodes can reach each other:

su - oracle
mkdir ~/.ssh
ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/oracle/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/oracle/.ssh/id_rsa.
Your public key has been saved in /home/oracle/.ssh/id_rsa.pub.
The key fingerprint is:
7f:1f:55:db:40:e9:da:95:f5:f4:7c:8c:3d:3f:c9:43 oracle@rac2
ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/oracle/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/oracle/.ssh/id_dsa.
Your public key has been saved in /home/oracle/.ssh/id_dsa.pub.
The key fingerprint is:
04:b8:c7:7a:6c:b3:09:ca:ab:27:47:db:89:a4:8d:1d oracle@rac2

On the primary node rac1, run the following as the oracle user:

cat ~/.ssh/id_rsa.pub >> ./.ssh/authorized_keys
cat ~/.ssh/id_dsa.pub >> ./.ssh/authorized_keys
ssh db10b cat ~/.ssh/id_rsa.pub >> ./.ssh/authorized_keys
The authenticity of host 'db10b (192.168.1.11)' can't be established.
RSA key fingerprint is 2a:e9:a7:db:dc:7a:cf:c9:7c:2d:ca:fa:d8:77:95:4f.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'db10b,192.168.1.11' (RSA) to the list of known hosts.
oracle@db10b's password:
ssh db10b cat ~/.ssh/id_dsa.pub >> ./.ssh/authorized_keys
oracle@db10b's password:
scp ~/.ssh/authorized_keys db10b:~/.ssh/authorized_keys
oracle@rac2's password:
authorized_keys 100% 1988 1.9KB/s 00:00
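If key-based login still asks for a password after this step, one common cause is that sshd (with its default StrictModes setting) rejects keys when ~/.ssh or authorized_keys is writable by other users. The sketch below tightens the permissions; fix_ssh_perms is a hypothetical helper and is a precaution rather than a step from the original guide.

```shell
#!/bin/bash
# Restrict an SSH directory to its owner and make authorized_keys owner-only.
# Usage: fix_ssh_perms [DIR]   (default: ~/.ssh)
fix_ssh_perms() {
    local dir="${1:-$HOME/.ssh}"
    chmod 700 "$dir" || return 1
    if [ -f "$dir/authorized_keys" ]; then
        chmod 600 "$dir/authorized_keys" || return 1
    fi
}
```

It would be run as the oracle user on both nodes, with no argument.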

Verify from the primary node (in the captured output, the host names rac1 and rac2 appear to correspond to db10a and db10b):

RACDB1@rac1 /home/oracle$ vi ssh.sh
ssh db10a date
ssh db10b date
ssh db10a-priv date
ssh db10b-priv date
ssh db10b
RACDB1@rac1 /home/oracle$ sh ssh.sh
The authenticity of host 'rac1 (192.168.1.103)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac1,192.168.1.103' (RSA) to the list of known hosts.
Mon May 13 15:09:04 CST 2013
Mon May 13 15:09:06 CST 2013
The authenticity of host 'rac1-priv (192.168.2.101)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac1-priv,192.168.2.101' (RSA) to the list of known hosts.
Mon May 13 15:09:07 CST 2013
The authenticity of host 'rac2-priv (192.168.2.102)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac2-priv,192.168.2.102' (RSA) to the list of known hosts.
Mon May 13 15:09:10 CST 2013
RACDB2@rac2 /home/oracle$

At this point the session has moved to rac2 over ssh.

Run the same check on the secondary node rac2:

RACDB2@rac2 /home/oracle$ vi ssh.sh
ssh rac1 date
ssh rac2 date
ssh rac1-priv date
ssh rac2-priv date
ssh rac1
RACDB2@rac2 /home/oracle$ sh ssh.sh
The authenticity of host 'rac1 (192.168.1.103)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac1,192.168.1.103' (RSA) to the list of known hosts.
Mon May 13 15:11:11 CST 2013
The authenticity of host 'rac2 (192.168.1.102)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac2,192.168.1.102' (RSA) to the list of known hosts.
Mon May 13 15:11:14 CST 2013
The authenticity of host 'rac1-priv (192.168.2.101)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac1-priv,192.168.2.101' (RSA) to the list of known hosts.
Mon May 13 15:11:14 CST 2013
The authenticity of host 'rac2-priv (192.168.2.102)' can't be established.
RSA key fingerprint is 94:ca:c8:ef:47:81:d7:f2:f6:1a:34:43:2f:5e:68:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'rac2-priv,192.168.2.102' (RSA) to the list of known hosts.
Mon May 13 15:11:17 CST 2013
RACDB1@rac1 /home/oracle$

The check is complete and the session is back on rac1.

Run the test once more to confirm that no manual input is needed:

RACDB1@rac1 /home/oracle$ sh ssh.sh
Mon May 13 15:12:17 CST 2013
Mon May 13 15:12:18 CST 2013
Mon May 13 15:12:17 CST 2013
Mon May 13 15:12:18 CST 2013
Last login: Mon May 13 15:09:10 2013 from rac1
RACDB2@rac2 /home/oracle$ sh ssh.sh
Mon May 13 15:12:23 CST 2013
Mon May 13 15:12:24 CST 2013
Mon May 13 15:12:23 CST 2013
Mon May 13 15:12:25 CST 2013
Last login: Mon May 13 15:11:16 2013 from rac2

Both directions now work without any manual input, so SSH equivalence between the two nodes is fully configured.
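The manual check can also be written as a loop that fails instead of prompting, which makes a leftover password or host-key prompt easy to spot. This is a sketch under assumptions: check_equivalence is a hypothetical helper, and SSH_CMD is a hypothetical override hook added only so the loop can be exercised without real hosts. BatchMode=yes is a standard OpenSSH client option that disables interactive prompts.

```shell
#!/bin/bash
# Run `date` on each host without allowing any interactive prompt.
# Usage: check_equivalence HOST...
# SSH_CMD (hypothetical hook) replaces the ssh command, e.g. for dry runs.
check_equivalence() {
    local host failed=0
    for host in "$@"; do
        if ${SSH_CMD:-ssh -o BatchMode=yes} "$host" date >/dev/null 2>&1; then
            echo "ok: $host"
        else
            echo "FAILED: $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example (as oracle, on each node):
#   check_equivalence db10a db10b db10a-priv db10b-priv
```

A host reports FAILED until its key has been accepted into known_hosts once, so the first interactive pass shown above is still needed.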

PS: To keep the clocks of the two nodes in sync, stop the ntpd service on node 2 and deploy the script below, which synchronizes the time once per second. Note that ntpd must not be running on the client while this script is in use.

[root@rac2 ~]# cat ntpdate_to_rac1.sh
while :; do ntpdate db10a; sleep 1; done

If that is still not enough, several copies of the script can be run in the background at the same time. Start it in the background as follows.

[root@rac2 ~]# nohup sh ntpdate_to_rac1.sh >> ntpdate_to_rac1.log&
[root@db10b ~]# tail -f ntpdate_to_rac1.log
9 Sep 22:51:20 ntpdate[9892]: adjust time server 192.168.1.10 offset -0.000113 sec
9 Sep 22:51:21 ntpdate[9894]: adjust time server 192.168.1.10 offset 0.000029 sec
9 Sep 22:51:22 ntpdate[9896]: adjust time server 192.168.1.10 offset -0.000058 sec
9 Sep 22:51:23 ntpdate[9898]: adjust time server 192.168.1.10 offset 0.000010 sec
9 Sep 22:51:24 ntpdate[9900]: adjust time server 192.168.1.10 offset -0.000062 sec
9 Sep 22:51:25 ntpdate[9902]: adjust time server 192.168.1.10 offset -0.000022 sec
9 Sep 22:51:26 ntpdate[9904]: adjust time server 192.168.1.10 offset -0.000017 sec
.....

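III. Installation

1. Install the Clusterware software

Unpack:

gzip -d 10201_clusterware_linux_x86_64.cpio.gz
gzip -d 10201_database_linux_x86_64.cpio.gz
cpio -idmv < 10201_clusterware_linux_x86_64.cpio
cpio -idmv < 10201_database_linux_x86_64.cpio

Check the installation environment:

cd /oracle/media/clusterware/
./runcluvfy.sh stage -pre crsinst -n db10a,db10b -verbose

The check reports:

Could not find a suitable set of interfaces for VIPs.

This message is commonly seen when the public network uses a private address range such as 192.168.x.x. Ignore it and continue with the installation.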

At the end of the Clusterware installation, two scripts have to be run as root. Run the first one on both nodes:

[root@rac1 ~]# /u01/app/oracle/oraInventory/orainstRoot.sh
Changing permissions of /u01/app/oracle/oraInventory to 770.
Changing groupname of /u01/app/oracle/oraInventory to oinstall.
The execution of the script is complete
[root@rac2 ~]# /u01/app/oracle/oraInventory/orainstRoot.sh
Changing permissions of /u01/app/oracle/oraInventory to 770.
Changing groupname of /u01/app/oracle/oraInventory to oinstall.
The execution of the script is complete

Run the second script on the first node, rac1 (this takes a while):

[root@rac1 ~]# /u01/crs1020/root.sh

Before running this script on the second node, rac2, complete the following.

[root@rac2 ~]# cd /u01/crs1020/bin/

Edit vipca:

[root@rac2 bin]# vi vipca

After the block

if [ "$arch" = "i686" -o "$arch" = "ia64" ]
then
    LD_ASSUME_KERNEL=2.4.19
    export LD_ASSUME_KERNEL
fi

add:

unset LD_ASSUME_KERNEL

Edit srvctl:

[root@rac2 bin]# vi srvctl

After the lines

LD_ASSUME_KERNEL=2.4.19
export LD_ASSUME_KERNEL

add:

unset LD_ASSUME_KERNEL
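The two manual edits can be approximated with a small script. This is a sketch, not the author's method: add_unset is a hypothetical helper that inserts the unset line directly after every export LD_ASSUME_KERNEL line and keeps a .orig backup. For vipca that places the line inside the if block rather than after fi, which should have the same effect provided that block is the only place the variable is set (an assumption worth checking in the file).

```shell
#!/bin/bash
# Insert "unset LD_ASSUME_KERNEL" after every "export LD_ASSUME_KERNEL" line.
# Usage: add_unset FILE
add_unset() {
    local file="$1" tmp
    # Do nothing if the file already contains the unset line.
    if grep -q '^[[:space:]]*unset LD_ASSUME_KERNEL' "$file"; then
        echo "already patched: $file"
        return 0
    fi
    cp -p "$file" "$file.orig" || return 1
    tmp="$(mktemp)" || return 1
    if awk '{ print }
            /^[[:space:]]*export LD_ASSUME_KERNEL/ { print "unset LD_ASSUME_KERNEL" }' \
            "$file" > "$tmp" && cat "$tmp" > "$file"; then
        rm -f "$tmp"
        echo "patched: $file"
    else
        rm -f "$tmp"
        return 1
    fi
}

# Example (as root on rac2):
#   add_unset /u01/crs1020/bin/vipca
#   add_unset /u01/crs1020/bin/srvctl
```

Writing back with cat rather than moving the temporary file keeps the original owner and execute permission of the script.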

Now run the script on rac2:

[root@rac2 ~]# /u01/crs1020/root.sh

It still ends with an error:

Running vipca(silent) for configuring nodeapps
Error 0(Native: listNetInterfaces:[3])
[Error 0(Native: listNetInterfaces:[3])]

Fix:

[root@rac2 bin]# ./oifcfg getif
[root@rac2 bin]# ./oifcfg iflist
eth0 192.168.1.0
eth1 192.168.2.0
eth2 192.168.0.0
[root@rac2 bin]# ./oifcfg setif -global eth0/192.168.1.0:public
[root@rac2 bin]# ./oifcfg setif -global eth1/192.168.2.0:cluster_interconnect
[root@rac2 bin]# ./oifcfg getif
eth0 192.168.1.0 global public
eth1 192.168.2.0 global cluster_interconnect

Then start the vipca GUI on rac2:

[root@rac2 ~]# cd /u01/crs1020/bin/

[root@rac2 bin]# ./vipca

Click Next.

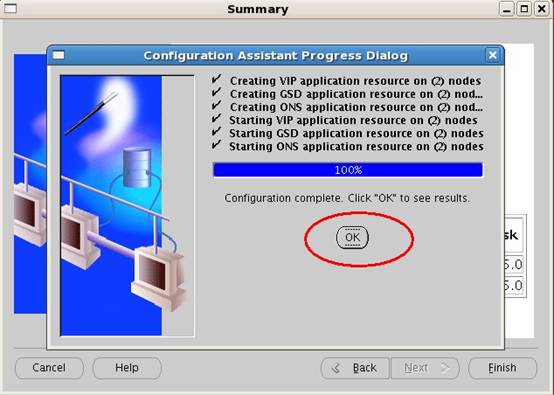

Once the VIP configuration completes successfully, continue with the next step.

2. Install the database software

During installation, choose to install the software only.

3. Configure the listener

Configure it manually or with netca (netca is recommended).

When this is done, check the resources with crs_stat:

[root@db10b ~]# /u01/crs1020/bin/crs_stat -t -v
Name           Type           R/RA   F/FT   Target    State     Host
----------------------------------------------------------------------
ora....0A.lsnr application    0/5    0/0    ONLINE    ONLINE    db10a
ora.db10a.gsd  application    0/5    0/0    ONLINE    ONLINE    db10a
ora.db10a.ons  application    0/3    0/0    ONLINE    ONLINE    db10a
ora.db10a.vip  application    0/0    0/0    ONLINE    ONLINE    db10a
ora....0B.lsnr application    0/5    0/0    ONLINE    ONLINE    db10b
ora.db10b.gsd  application    0/5    0/0    ONLINE    ONLINE    db10b
ora.db10b.ons  application    0/3    0/0    ONLINE    ONLINE    db10b
ora.db10b.vip  application    0/0    0/0    ONLINE    ONLINE    db10b

4. Create the ASM instances and the database

As the last step, create the database with dbca, then check the services:

testdb101@db10a /home/oracle$ crs_stat -t -v
Name           Type           R/RA   F/FT   Target    State     Host
----------------------------------------------------------------------
ora....SM1.asm application    0/5    0/0    ONLINE    ONLINE    db10a
ora....0A.lsnr application    0/5    0/0    ONLINE    ONLINE    db10a
ora.db10a.gsd  application    0/5    0/0    ONLINE    ONLINE    db10a
ora.db10a.ons  application    0/3    0/0    ONLINE    ONLINE    db10a
ora.db10a.vip  application    0/0    0/0    ONLINE    ONLINE    db10a
ora....SM2.asm application    0/5    0/0    ONLINE    ONLINE    db10b
ora....0B.lsnr application    0/5    0/0    ONLINE    ONLINE    db10b
ora.db10b.gsd  application    0/5    0/0    ONLINE    ONLINE    db10b
ora.db10b.ons  application    0/3    0/0    ONLINE    ONLINE    db10b
ora.db10b.vip  application    0/0    0/0    ONLINE    ONLINE    db10b
ora.....DSG.cs application    0/1    0/1    ONLINE    ONLINE    db10a
ora....101.srv application    0/1    0/0    ONLINE    ONLINE    db10a
ora....db10.db application    0/1    0/1    ONLINE    ONLINE    db10b
ora....01.inst application    0/5    0/0    ONLINE    ONLINE    db10a
ora....02.inst application    0/5    0/0    ONLINE    ONLINE    db10b
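With fifteen resources it is easy to overlook one that is not ONLINE. The filter below is a small sketch that reads crs_stat -t -v output and prints only the resources whose State column differs from ONLINE; not_online is a hypothetical helper, and it assumes the seven-column layout shown above.

```shell
#!/bin/bash
# Read `crs_stat -t -v` output on stdin and print "name state" for every
# resource whose State (6th column) is not ONLINE.
not_online() {
    awk '$1 != "Name" && $1 !~ /^-+$/ && NF >= 6 && $6 != "ONLINE" { print $1, $6 }'
}

# Example:
#   /u01/crs1020/bin/crs_stat -t -v | not_online
```

Empty output means every listed resource is ONLINE.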

IV. Troubleshooting summary

This RAC installation ran into more problems than any I have done before. They are summarized one by one below.

First, of the two scripts run at the end of the installation, the first ran cleanly on both nodes and the second, root.sh, ran cleanly on the primary node. On the secondary node, however, after a very long wait it failed with the following error:

[root@db10b bin]# /u01/crs1020/root.sh
Checking to see if Oracle CRS stack is already configured
Setting the permissions on OCR backup directory
Setting up NS directories
Oracle Cluster Registry configuration upgraded successfully
Successfully accumulated necessary OCR keys.
Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
node <nodenumber>: <nodename> <private interconnect name> <hostname>
node 1: db10a db10a-priv db10a
node 2: db10b db10b-priv db10b
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
Now formatting voting device: /dev/raw/raw2
Format of 1 voting devices complete.
Startup will be queued to init within 90 seconds.
Adding daemons to inittab
Expecting the CRS daemons to be up within 600 seconds.
Failure at final check of Oracle CRS stack.
10

Check the logs.

Note: the log is under the log directory of the CRS home, in a subdirectory named after the host, for example alertdb10b.log under /u01/crs1020/log/db10b.

[client(20498)]CRS-1006:The OCR location /dev/raw/raw1 is inaccessible. Details in /u01/crs1020/log/db10a/client/ocrconfig_20498.log.
2014-09-10 11:57:38.503
[client(20498)]CRS-1006:The OCR location /dev/raw/raw1 is inaccessible. Details in /u01/crs1020/log/db10a/client/ocrconfig_20498.log.
2014-09-10 11:57:38.533
[client(20498)]CRS-1006:The OCR location /dev/raw/raw1 is inaccessible. Details in /u01/crs1020/log/db10a/client/ocrconfig_20498.log.
2014-09-10 11:57:38.616
[client(20498)]CRS-1001:The OCR was formatted using version 2.

CRS crsd.log:
Oracle Database 10g CRS Release 10.2.0.1.0 Production Copyright 1996, 2005 Oracle. All rights reserved.
2008-06-25 13:44:35.663: [ default][3086907072][ENTER]0
Oracle Database 10g CRS Release 10.2.0.1.0 Production Copyright 1996, 2004, Oracle. All rights reserved
2008-06-25 13:44:35.663: [ default][3086907072]0CRS Daemon Starting
2008-06-25 13:44:35.664: [ CRSMAIN][3086907072]0Checking the OCR device
2008-06-25 13:44:35.669: [ CRSMAIN][3086907072]0Connecting to the CSS Daemon
2008-06-25 13:44:35.743: [ COMMCRS][132295584]clsc_connect: (0x86592a8) no listener at (ADDRESS=(PROTOCOL=ipc)(KEY=OCSSD_LL_rac2_crs))
2008-06-25 13:44:35.743: [ CSSCLNT][3086907072]clsssInitNative: connect failed, rc 9
2008-06-25 13:44:35.743: [ CRSRTI][3086907072]0CSS is not ready. Received status 3 from CSS. Waiting for good status ..
2008-06-25 13:44:36.753: [ COMMCRS][132295584]clsc_connect: (0x86050a8) no listener at (ADDRESS=(PROTOCOL=ipc)(KEY=OCSSD_LL_rac2_crs))
2008-06-25 13:44:36.753: [ CSSCLNT][3086907072]clsssInitNative: connect failed, rc 9
2008-06-25 13:44:36.753: [ CRSRTI][3086907072]0CSS is not ready. Received status 3 from CSS. Waiting for good status ..
...
(the same error messages keep repeating)

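Solution:

There are three possible explanations:

1. The firewall (ruled out).

2. CRS-1006: The OCR location /dev/raw/raw4 is inaccessible. Zero /dev/raw/raw4 by hand:

dd if=/dev/zero of=/dev/raw/raw4 bs=1M

then run rm -fr /etc/oracle on all nodes, and then run $CRS_HOME/root.sh on each node.

3. My case was most likely the following, because the .vmx file of my second node did not contain these parameters:

diskLib.dataCacheMaxSize = "0"
diskLib.dataCacheMaxReadAheadSize = "0"
diskLib.DataCacheMinReadAheadSize = "0"
diskLib.dataCachePageSize = "4096"
diskLib.maxUnsyncedWrites = "0"

Also, the later fdisk step only needs to be done on rac1. After a reboot, run cat /proc/partitions to confirm that both virtual machines show the same layout.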
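Since the root cause here was a parameter missing from one node's .vmx file, it may help to compare each file against the list before powering the machines on. The sketch below is an illustration; missing_vmx_keys is a hypothetical helper, it checks only the disk.locking and diskLib keys from this guide, and the .vmx paths in the example are placeholders.

```shell
#!/bin/bash
# Print the shared-disk keys from this guide that a given .vmx file lacks.
# Usage: missing_vmx_keys FILE
missing_vmx_keys() {
    local file="$1" key
    for key in disk.locking \
               diskLib.dataCacheMaxSize \
               diskLib.dataCacheMaxReadAheadSize \
               diskLib.DataCacheMinReadAheadSize \
               diskLib.dataCachePageSize \
               diskLib.maxUnsyncedWrites; do
        # -F matches the dots literally; -i ignores case because the guide itself
        # mixes dataCache and DataCache. This is a loose substring check.
        grep -Fiq "$key" "$file" || echo "$key"
    done
}

# Example (on the VMware host; both paths are placeholders):
#   missing_vmx_keys /path/to/rac1.vmx
#   missing_vmx_keys /path/to/rac2.vmx
```

Empty output for both files means neither node is missing any of the listed keys; the values themselves still need a visual check.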

For reference, here is how to clean up CRS completely and uninstall RAC:

dd if=/dev/zero of=/dev/raw/raw1 bs=1M count=300
dd if=/dev/zero of=/dev/raw/raw2 bs=1M count=300
dd if=/dev/zero of=/dev/raw/raw3 bs=1M count=300
dd if=/dev/zero of=/dev/raw/raw4 bs=1M count=300
rm -rf /u01/app/oracle/*
rm -rf /u01/crs1020/*
rm -rf /etc/rc.d/rc5.d/S96init.crs
rm -rf /etc/rc.d/init.d/init.crs
rm -rf /etc/rc.d/rc4.d/K96init.crs
rm -rf /etc/rc.d/rc6.d/K96init.crs
rm -rf /etc/rc.d/rc1.d/K96init.crs
rm -rf /etc/rc.d/rc0.d/K96init.crs
rm -rf /etc/rc.d/rc2.d/K96init.crs
rm -rf /etc/rc.d/rc3.d/S96init.crs
rm -rf /etc/oracle/*
rm -rf /etc/oraInst.loc
rm -rf /etc/oratab
rm -rf /usr/local/bin/coraenv
rm -rf /usr/local/bin/dbhome
rm -rf /usr/local/bin/oraenv
rm -f /etc/init.d/init.cssd
rm -f /etc/init.d/init.crs
rm -f /etc/init.d/init.crsd
rm -f /etc/init.d/init.evmd
rm -f /etc/rc2.d/K96init.crs
rm -f /etc/rc2.d/S96init.crs
rm -f /etc/rc3.d/K96init.crs
rm -f /etc/rc3.d/S96init.crs
rm -f /etc/rc5.d/K96init.crs
rm -f /etc/rc5.d/S96init.crs
rm -f /etc/inittab.crs
vi /etc/inittab

In /etc/inittab, delete the entries related to crs and css.

That resolved the error above, but running root.sh again on the secondary node produced the following error:

[root@db10b u01]# /u01/crs1020/root.sh
Checking to see if Oracle CRS stack is already configured
Setting the permissions on OCR backup directory
Setting up NS directories
Oracle Cluster Registry configuration upgraded successfully
clscfg: EXISTING configuration version 3 detected.
clscfg: version 3 is 10G Release 2.
Successfully accumulated necessary OCR keys.
Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
node <nodenumber>: <nodename> <private interconnect name> <hostname>
node 1: db10a db10a-priv db10a
node 2: db10b db10b-priv db10b
clscfg: Arguments check out successfully.
NO KEYS WERE WRITTEN. Supply -force parameter to override.
-force is destructive and will destroy any previous cluster configuration.
Oracle Cluster Registry for cluster has already been initialized
Startup will be queued to init within 90 seconds.
Adding daemons to inittab
Expecting the CRS daemons to be up within 600 seconds.
CSS is active on these nodes.
db10a
db10b
CSS is active on all nodes.
Waiting for the Oracle CRSD and EVMD to start
Oracle CRS stack installed and running under init(1M)
Running vipca(silent) for configuring nodeapps
PRKH-1010 : Unable to communicate with CRS services.
[PRKH-1000 : Unable to load the SRVM HAS shared library
[PRKN-1008 : Unable to load the shared library "srvmhas10" or a dependent library, from LD_LIBRARY_PATH="/u01/crs1020/jdk/jre/lib/i386/client:/u01/crs1020/jdk/jre/lib/i386:/u01/crs1020/jdk/jre/../lib/i386:/u01/crs1020/lib32:/u01/crs1020/srvm/lib32:/u01/crs1020/lib:/u01/crs1020/srvm/lib:/u01/crs1020/lib"
[java.lang.UnsatisfiedLinkError: /u01/crs1020/lib32/libsrvmhas10.so: libclntsh.so.10.1: wrong ELF class: ELFCLASS64]]]
(the same PRKH-1010 block is printed two more times)

Fix: with unset LD_ASSUME_KERNEL added to vipca and srvctl as described in section III, rerunning root.sh gets past this error:

[root@db10b u01]# /u01/crs1020/root.sh
Checking to see if Oracle CRS stack is already configured
Setting the permissions on OCR backup directory
Setting up NS directories
Oracle Cluster Registry configuration upgraded successfully
clscfg: EXISTING configuration version 3 detected.
clscfg: version 3 is 10G Release 2.
Successfully accumulated necessary OCR keys.
Using ports: CSS=49895 CRS=49896 EVMC=49898 and EVMR=49897.
node <nodenumber>: <nodename> <private interconnect name> <hostname>
node 1: db10a db10a-priv db10a
node 2: db10b db10b-priv db10b
clscfg: Arguments check out successfully.
NO KEYS WERE WRITTEN. Supply -force parameter to override.
-force is destructive and will destroy any previous cluster configuration.
Oracle Cluster Registry for cluster has already been initialized
Startup will be queued to init within 90 seconds.
Adding daemons to inittab
Expecting the CRS daemons to be up within 600 seconds.
CSS is active on these nodes.
db10a
db10b
CSS is active on all nodes.
Oracle CRS stack installed and running under init(1M)
Running vipca(silent) for configuring nodeapps
Error 0(Native: listNetInterfaces:[3])
[Error 0(Native: listNetInterfaces:[3])]

Fix:

[root@rac2 bin]# ./oifcfg getif
[root@rac2 bin]# ./oifcfg iflist
eth0 192.168.1.0
eth1 192.168.2.0
eth2 192.168.0.0
[root@rac2 bin]# ./oifcfg setif -global eth0/192.168.1.0:public
[root@rac2 bin]# ./oifcfg setif -global eth1/192.168.2.0:cluster_interconnect
[root@rac2 bin]# ./oifcfg getif
eth0 192.168.1.0 global public
eth1 192.168.2.0 global cluster_interconnect

Then start vipca as root and configure the VIPs.