Hindsight Experience Replay in Reinforcement Learning

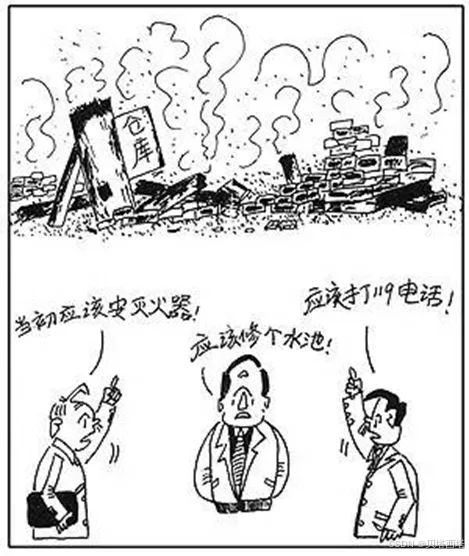

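Hindsight is not so useless after all: how OpenAI uses "hindsight" to ease the sparse-reward problem in reinforcement learning

Hindsight Experience Replay (HER): How It Works

Hindsight Experience Replay (HER) is a key technique in reinforcement learning for tackling the **sparse reward** problem. Through **goal relabeling**, it turns failed experience into useful training data and substantially improves sample efficiency. Its core principles and implementation details are as follows: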

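1. Core Idea: Learning from Failure

- Background: in goal-conditioned tasks (e.g., robotic grasping, maze navigation), the agent receives a success reward only when it reaches the goal, and a zero or negative reward at every other step. Such sparse rewards make learning slow and can prevent convergence altogether.

- Key insight: even if the agent fails to reach the original goal, its trajectory may still contain "successful experience" for other goals. For example, if the goal was to reach point A but the agent actually reached point B, redefining the goal as B turns the trajectory into a successful one.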

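2. How It Works

Step 1: Standard experience replay

The agent interacts with the environment and stores experience tuples (s, a, r, s', g), where:

- s: current state
- a: action taken
- r: reward computed with respect to the original goal g
- s': next state
- g: original goal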
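As a minimal sketch of Step 1, the tuple can be represented as a small `NamedTuple`. The class name `Transition`, the helper `sparse_reward`, and its tolerance `tol` are illustrative assumptions, not part of any library; "reaching" a goal is assumed to mean being within `tol` in Euclidean distance.

```python
from typing import NamedTuple

import numpy as np


class Transition(NamedTuple):
    """One experience tuple (s, a, r, s', g) as stored in Step 1."""
    s: np.ndarray       # current state
    a: int              # action taken
    r: float            # reward computed against the original goal g
    s_next: np.ndarray  # next state s'
    g: np.ndarray       # original goal


def sparse_reward(s_next: np.ndarray, g: np.ndarray, tol: float = 0.05) -> float:
    """Hypothetical success test: s' 'reaches' g if it lies within tol of g."""
    return 0.0 if np.linalg.norm(s_next - g) <= tol else -1.0


# Store one transition from a toy 2-D episode whose goal is far away
g = np.array([1.0, 1.0])
s, s_next = np.array([0.0, 0.0]), np.array([0.1, 0.0])
tr = Transition(s=s, a=0, r=sparse_reward(s_next, g), s_next=s_next, g=g)
print(tr.r)  # -1.0: the original goal was not reached
```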

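Step 2: Goal relabeling

For each stored transition, generate additional transitions by replacing the original goal g with a substitute goal g' and recomputing the reward r'. Common strategies for choosing substitute goals:

- Final: use the state reached at the end of the episode as g'.
- Future: randomly pick a state reached after the current step as g'.
- Episode: randomly pick any state from the whole episode as g'.
- Random: randomly generate plausible goals.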
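The four strategies differ only in where g' comes from. The sketch below assumes the states reached during the episode are stored in an array `achieved`, with `achieved[i]` being s' of step i. The function name `sample_relabel_goals` and the uniform box used for `"random"` are assumptions; the original HER paper's "random" variant instead draws from states encountered earlier in training.

```python
import numpy as np


def sample_relabel_goals(achieved, t, strategy, k, rng, low=-1.0, high=1.0):
    """Pick k substitute goals g' for the transition at step t.

    achieved: array of shape (T, goal_dim); achieved[i] is the state s' reached at step i.
    rng:      a numpy Generator, e.g. np.random.default_rng(0).
    low/high: bounds of an assumed box-shaped goal space (used only by "random").
    """
    T = len(achieved)
    if strategy == "final":    # the state at the end of the episode
        return np.repeat(achieved[-1:], k, axis=0)
    if strategy == "future":   # a state reached at step t or later
        return achieved[rng.integers(t, T, size=k)]
    if strategy == "episode":  # any state from the same episode
        return achieved[rng.integers(0, T, size=k)]
    if strategy == "random":   # assumption: uniform over the goal box
        return rng.uniform(low, high, size=(k, achieved.shape[1]))
    raise ValueError(f"unknown strategy: {strategy!r}")


rng = np.random.default_rng(0)
achieved = np.arange(10, dtype=float).reshape(5, 2)  # toy episode, T = 5
print(sample_relabel_goals(achieved, t=3, strategy="final", k=2, rng=rng))
# [[8. 9.]
#  [8. 9.]]
```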

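Step 3: Mixed training

Train on the original and the newly generated experience together. The agent thus learns a multi-goal policy, and the sparse-reward problem is eased at the same time.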
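Step 3 can be sketched for a single episode as follows. Each real transition is kept with its original goal and paired with `k` relabeled copies whose goals come from the "future" strategy. `her_augment`, the tolerance `tol`, and the layout of `states` (`states[t]` is s_t, `states[t + 1]` is s'_t) are assumptions for illustration.

```python
import numpy as np


def her_augment(states, actions, goal, k=4, tol=0.05, seed=0):
    """Return original + HER-relabeled transitions (s, a, r, s', g) for one episode.

    states has shape (T + 1, dim): states[t] is s_t and states[t + 1] is s'_t.
    """
    rng = np.random.default_rng(seed)

    def reward(s_next, g):
        # Sparse reward: 0 if s' is within tol of g, otherwise -1
        return 0.0 if np.linalg.norm(s_next - g) <= tol else -1.0

    T = len(actions)
    out = []
    for t in range(T):
        s, a, s_next = states[t], actions[t], states[t + 1]
        out.append((s, a, reward(s_next, goal), s_next, goal))  # original goal
        for i in rng.integers(t, T, size=k):  # "future" strategy: steps t..T-1
            g_new = states[i + 1]             # a state that was actually reached
            out.append((s, a, reward(s_next, g_new), s_next, g_new))
    return out


states = np.array([[0.0, 0.0], [0.1, 0.0], [0.2, 0.0], [0.3, 0.0]])
goal = np.array([1.0, 1.0])  # never reached in this episode
data = her_augment(states, actions=[0, 0, 0], goal=goal)
print(len(data))  # 15 = 3 steps * (1 original + 4 relabeled)
print(sum(r == 0.0 for _, _, r, _, _ in data) >= 4)  # True
```

Every transition with the original goal has reward -1, yet the relabeled copies include successes. That is the extra learning signal HER provides.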

3. Mathematical Formulation

- Reward function: if the task requires reaching goal $g$, the reward function is usually designed as:

$$
r(s, a, g) = \begin{cases} 0 & \text{if } s' \text{ reaches } g \\ -1 & \text{otherwise} \end{cases}
$$

- Substitute-goal reward: when the goal is relabeled as $g'$, the reward becomes:

$$
r'(s, a, g') = \begin{cases} 0 & \text{if } s' \text{ reaches } g' \\ -1 & \text{otherwise} \end{cases}
$$
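A batched version of this reward is short to write. The sketch assumes "s' reaches g" means a Euclidean distance of at most `tol`; the function name and threshold are hypothetical. Because r' has the same form as r, relabeling only requires calling the same function with g' in place of g.

```python
import numpy as np


def compute_sparse_reward(achieved_goal, desired_goal, tol=0.05):
    """Batched r(s, a, g): 0 where s' reaches g, -1 elsewhere.

    achieved_goal: s' (or the part of s' the goal refers to), shape (..., dim)
    desired_goal:  g or a relabeled g', broadcastable to achieved_goal
    tol:           assumed distance threshold for "reaches"
    """
    d = np.linalg.norm(achieved_goal - desired_goal, axis=-1)
    return np.where(d <= tol, 0.0, -1.0).astype(np.float32)


s_next = np.array([[0.0, 0.0], [0.5, 0.5]])
g = np.array([0.0, 0.0])
r = compute_sparse_reward(s_next, g)              # original goal: 0 and -1
r_prime = compute_sparse_reward(s_next, s_next)   # relabeled with g' = s': all 0
```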

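4. Key Advantages

- Data augmentation: constructing virtual successful experience increases the number of samples that carry a success reward.

- Sparse-reward mitigation: even when the original goal is not reached, the agent still gets a learning signal from the substitute goals.

- Multi-goal generalization: the agent learns to adjust its policy to different goals, which improves adaptability.

- Better sample efficiency: compared with training on the original goals alone, learning typically converges considerably faster.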

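5. Application Example

Task: a robotic arm moves an object to a target position

- Original goal: move the object to position A.

- Actual outcome: the object ends up at position B.

- HER processing:

  - Relabel the goal as B and set the reward to 0 (success).

  - Store the experience (s, a, r=0, s', g'=B) for training.

- Effect: the agent learns how to get from state s to arbitrary positions (such as B), not only to A.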
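The arm example can be traced numerically. The positions of A and B, the 5 cm threshold `TOL`, the action vector, and the use of the object position as the achieved goal are all hypothetical values chosen for illustration.

```python
import numpy as np

TOL = 0.05  # assumed success threshold in metres


def reward(obj_pos: np.ndarray, goal: np.ndarray) -> float:
    """Sparse reward: 0 if the object is within TOL of the goal, else -1."""
    return 0.0 if np.linalg.norm(obj_pos - goal) <= TOL else -1.0


A = np.array([0.40, 0.10, 0.42])   # hypothetical original target position
B = np.array([0.25, -0.05, 0.42])  # hypothetical position the object actually reached

# Last step of the episode: the object moves from near B onto B
s = np.array([0.24, -0.04, 0.42])  # object position before the step (part of s)
a = np.array([0.0, -0.5, 0.0])     # hypothetical end-effector command
s_next = B

original = (s, a, reward(s_next, A), s_next, A)   # failure w.r.t. A
relabeled = (s, a, reward(s_next, B), s_next, B)  # success w.r.t. g' = B
print(original[2], relabeled[2])  # -1.0 0.0
```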

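6. Implementation Details

- Combination with off-policy algorithms: HER is commonly combined with off-policy algorithms such as DDPG and DQN, which already use experience replay. Off-policy learning is required because relabeled transitions were generated while pursuing a different goal.

- Ratio of substitute goals: original and substitute goals are usually mixed (e.g., 50% original, 50% substitute).

- Goal-space design: substitute goals must be meaningful for the task (e.g., reachable positions).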
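The original/substitute mix can be expressed through `k`, the number of relabeled copies per real transition. A fraction k/(1+k) of the data then carries a substitute goal, so k = 1 gives the 50/50 split above and k = 4 gives 80% relabeled. An alternative to storing the copies is to relabel at sampling time. The sketch assumes one stored episode and the "future" strategy; `relabel_probability` and `sample_batch` are hypothetical helpers.

```python
import numpy as np


def relabel_probability(k: int) -> float:
    """Share of samples with a substitute goal when each real transition
    is matched by k relabeled ones: k / (1 + k)."""
    return 1.0 - 1.0 / (1.0 + k)


def sample_batch(ep_states, ep_goal, batch_size, k, rng):
    """Sample step indices and goals from one stored episode, relabeling on the fly.

    ep_states has shape (T + 1, dim): s_t = ep_states[t], s'_t = ep_states[t + 1].
    Returns (t, goals, relabeled_mask).
    """
    T = len(ep_states) - 1
    t = rng.integers(0, T, size=batch_size)                  # which transition
    goals = np.repeat(ep_goal[None, :], batch_size, axis=0)  # start from the original goal
    relabel = rng.random(batch_size) < relabel_probability(k)
    future = rng.integers(t, T)                              # "future" index in [t, T)
    goals[relabel] = ep_states[future[relabel] + 1]          # g' = a state reached later
    return t, goals, relabel


rng = np.random.default_rng(0)
states = np.arange(12, dtype=float).reshape(6, 2)  # toy episode with T = 5
t, goals, mask = sample_batch(states, np.array([9.0, 9.0]), 8, k=1, rng=rng)
print(relabel_probability(1), relabel_probability(4))  # 0.5 0.8
```

Relabeling at sampling time keeps the buffer at one entry per real step and lets the ratio be changed without refilling it; storing copies (as in a store-time scheme) is simpler but multiplies memory by 1 + k.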

7. Code Demo

```python
import random
from collections import deque

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim


# Goal-conditioned DQN network (DQN serves as the example off-policy algorithm)
class DQN(nn.Module):
    def __init__(self, state_dim, goal_dim, action_dim, hidden_dim=256):
        super().__init__()
        # The state and the goal are concatenated to form the input
        self.net = nn.Sequential(
            nn.Linear(state_dim + goal_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, action_dim)
        )

    def forward(self, state, goal):
        # Concatenate state and goal along the feature dimension
        x = torch.cat([state, goal], dim=1)
        return self.net(x)


# Replay buffer
class ReplayBuffer:
    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def add(self, transition):
        """Add a single transition."""
        self.buffer.append(transition)

    def add_batch(self, transitions):
        """Add several transitions at once."""
        self.buffer.extend(transitions)

    def sample(self, batch_size):
        """Sample a random minibatch."""
        return random.sample(self.buffer, batch_size)

    def __len__(self):
        return len(self.buffer)


# HER parameters
HER_SAMPLE_N = 4  # number of extra goals sampled per transition
FUTURE_STEP = 4   # future-goal sampling step (defined but not used in this simplified demo)

# Initialize components
state_dim = 3   # state dimension of the toy environment
goal_dim = 3    # goal dimension (usually the same as the state dimension)
action_dim = 2  # size of the discrete action space
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
dqn = DQN(state_dim, goal_dim, action_dim).to(device)
target_dqn = DQN(state_dim, goal_dim, action_dim).to(device)
target_dqn.load_state_dict(dqn.state_dict())
optimizer = optim.Adam(dqn.parameters(), lr=1e-3)
buffer = ReplayBuffer(100000)  # replay buffer capacity


# Example reward function (adapt to the actual problem)
def compute_reward(state, action, goal):
    """Example: negative distance to the goal as a dense reward.

    dim=-1 handles both single 1-D states and batches; the result has a
    trailing dimension of size 1 so rewards stack to shape (batch, 1).
    """
    distance = torch.norm(state - goal, dim=-1)
    return -distance.unsqueeze(-1)


# Training loop
num_episodes = 1000
for episode in range(num_episodes):
    # Sample an initial state and a goal
    current_state = torch.randn(state_dim).to(device)  # toy random initialization
    goal = torch.randn(goal_dim).to(device)            # toy random goal
    episode_transitions = []

    # Collect a trajectory
    for t in range(10):  # toy episode length of 10
        # Behavior policy: epsilon-greedy with epsilon = 0.3
        if np.random.rand() < 0.3:
            action = torch.randint(0, action_dim, (1,)).item()
        else:
            with torch.no_grad():
                q_values = dqn(current_state.unsqueeze(0), goal.unsqueeze(0))
            action = q_values.argmax().item()

        # "Environment" step: a random walk that ignores the action simulates the dynamics
        next_state = current_state + torch.randn_like(current_state) * 0.1

        # Store the raw transition (s, a, s', g); rewards are computed during HER processing
        episode_transitions.append((
            current_state.clone(),
            torch.tensor([action]),
            next_state.clone(),
            goal.clone()
        ))
        current_state = next_state

    # Hindsight experience replay processing
    for t in range(len(episode_transitions)):
        state, action, next_state, original_goal = episode_transitions[t]

        # Add the transition with the original goal
        reward = compute_reward(next_state, action, original_goal)
        buffer.add((
            torch.cat([state, original_goal]),
            action,
            reward,
            torch.cat([next_state, original_goal])
        ))

        # HER core: sample additional goals with the "future" strategy,
        # i.e. states reached at or after step t
        future_indices = np.random.choice(
            np.arange(t, len(episode_transitions)),
            size=HER_SAMPLE_N,
            replace=True
        )
        additional_goals = [episode_transitions[idx][2] for idx in future_indices]

        # Generate one transition per additional goal
        for g in additional_goals:
            new_reward = compute_reward(next_state, action, g)
            buffer.add((
                torch.cat([state, g]),
                action,
                new_reward,
                torch.cat([next_state, g])
            ))

    # Optimization step
    if len(buffer) >= 512:  # start training once the buffer holds enough samples
        batch = buffer.sample(512)
        state_batch = torch.stack([x[0] for x in batch]).to(device)
        action_batch = torch.stack([x[1] for x in batch]).to(device)
        reward_batch = torch.stack([x[2] for x in batch]).to(device)
        next_state_batch = torch.stack([x[3] for x in batch]).to(device)

        # DQN update
        current_q = dqn(state_batch[:, :state_dim], state_batch[:, state_dim:]).gather(1, action_batch)
        with torch.no_grad():
            max_next_q = target_dqn(
                next_state_batch[:, :state_dim],  # next state s'
                next_state_batch[:, state_dim:]   # goal
            ).max(1)[0].unsqueeze(1)
        target_q = reward_batch + 0.99 * max_next_q
        loss = nn.MSELoss()(current_q, target_q)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Periodically sync the target network
    if episode % 10 == 0:
        target_dqn.load_state_dict(dqn.state_dict())

# Note: this is a simplified implementation; for a real application, adapt:
# 1. the environment interaction logic
# 2. the reward function
# 3. the goal sampling strategy (currently "future")
# 4. the exploration strategy (currently simple epsilon-greedy)
# 5. the network architecture and hyperparameters
```

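Key implementation details:

- Two-network architecture: follows DQN's standard design of an online network plus a target network to stabilize training.

- State-goal concatenation: the state and goal are always concatenated before being fed to the network:

```python
torch.cat([state, goal], dim=1)
```

- HER core implementation:

  - Post-processing happens after the trajectory has been collected (the second `for t` loop inside each episode).

  - Goals are sampled with the future strategy: states reached at or after the current step are picked at random as new goals:

```python
future_indices = np.random.choice(
    np.arange(t, len(episode_transitions)),
    size=HER_SAMPLE_N,
    replace=True
)
```

  - Reward recomputation: the reward is recomputed for every new goal:

```python
new_reward = compute_reward(next_state, action, g)
```

  - Data storage: transitions for both the original goal and the new goals are stored:

```python
buffer.add((
    torch.cat([state, g]),
    action,
    new_reward,
    torch.cat([next_state, g])
))
```

- Training: the standard DQN update rule is used, but the input includes the goal:

```python
current_q = dqn(state_batch[:, :state_dim],
                state_batch[:, state_dim:]).gather(1, action_batch)
```

In practice, adapt the following parts to the specific task:

- the environment interaction interface

- the reward function (the example uses a simple distance-based reward)

- the goal sampling strategy (currently the future strategy)

- the network architecture and hyperparameters

- the exploration strategy (currently simple epsilon-greedy)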

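8. Limitations and Improvements

- Limitations:

  - Substitute goals must be consistent with the task logic (e.g., physically unreachable positions must not be chosen).

  - Irrelevant goals may be introduced, pulling the policy away from the original task.

- Directions for improvement:

  - Curriculum learning: gradually increase goal difficulty.

  - Prioritized experience replay: emphasize the most important substitute goals.

  - Hierarchical HER: combine high-level goal planning with low-level control.

9. Summary

By reconstructing the goals and rewards of failed experience, HER turns a sparse-reward problem into one with a much denser learning signal, making it an efficient way to tackle hard exploration problems. Its core idea is that "even when the original goal is not reached, there is still something valuable to learn", and it is widely applied in areas such as robot control and game AI.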