Strategies, Approaches, and Methodologies

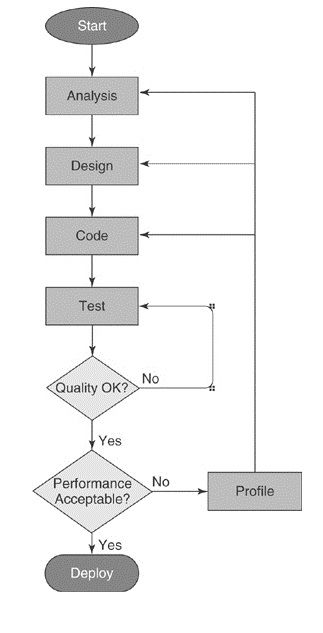

1. The diagram above shows the software development process, including the performance phase.

2. The performance requirements defined during the analysis phase should answer the following types of questions:

a) What is the expected throughput of the application?

b) What is the expected latency between a stimulus and a response to that stimulus?

c) How many concurrent users or concurrent tasks shall the application support?

d) What is the accepted throughput and latency at the maximum number of concurrent users or concurrent tasks?

e) What is the maximum (worst-case) latency?

f) How frequently can garbage-collection-induced latencies occur and still be tolerated?
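Several of these questions (a, b and e) can be checked mechanically once a load test has produced per-request latencies. The sketch below summarizes a run into throughput, a nearest-rank 99th-percentile latency and the maximum latency, then compares them with targets. The class and record names, the choice of the 99th percentile, the sample data and the threshold values are all hypothetical; the real targets come from the analysis phase.

```java
import java.util.Arrays;

public class RequirementsCheck {

    // Hypothetical targets for questions a), b) and e); real values come from the analysis phase.
    record Requirements(double minThroughputPerSec, long p99LatencyMillis, long maxLatencyMillis) {}

    // Summary of one measured load-test run.
    record Measured(double throughputPerSec, long p99LatencyMillis, long maxLatencyMillis) {}

    // Nearest-rank percentile computed on a sorted copy (the caller's array is left untouched).
    static long percentile(long[] samples, double pct) {
        long[] sorted = samples.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(pct / 100.0 * sorted.length);
        return sorted[Math.max(0, rank - 1)];
    }

    // Throughput = completed requests / elapsed wall-clock seconds.
    static Measured summarize(long[] latenciesMillis, double elapsedSeconds) {
        double throughput = latenciesMillis.length / elapsedSeconds;
        long p99 = percentile(latenciesMillis, 99.0);
        long max = Arrays.stream(latenciesMillis).max().orElse(0L);
        return new Measured(throughput, p99, max);
    }

    // True only if every measured value is within its target.
    static boolean meets(Measured m, Requirements r) {
        return m.throughputPerSec() >= r.minThroughputPerSec()
                && m.p99LatencyMillis() <= r.p99LatencyMillis()
                && m.maxLatencyMillis() <= r.maxLatencyMillis();
    }

    public static void main(String[] args) {
        // Made-up latencies (ms) for ten requests completed in 0.5 s, for illustration only.
        long[] latencies = {12, 15, 11, 40, 13, 14, 18, 90, 16, 12};
        Measured m = summarize(latencies, 0.5);
        Requirements r = new Requirements(15.0, 100, 200);
        System.out.println(m + " meets=" + meets(m, r));
    }
}
```

With the sample data this reports a throughput of 20 requests per second and a 99th-percentile and maximum latency of 90 ms, which satisfies the hypothetical targets. Questions c), d) and f) need the same kind of summary taken at a given concurrency level or alongside garbage collection logs.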

3. There are two commonly accepted approaches to performance analysis: top down and bottom up. Top down, as the term implies, starts at the top level of the application and drills down the software stack looking for problem areas and optimization opportunities. In contrast, bottom up begins at the lowest level of the software stack, the CPU, looking at statistics such as CPU cache misses and inefficient use of CPU instructions, and then works up the software stack to examine the constructs and idioms the application uses. The top down approach is most commonly used by application developers. The bottom up approach is commonly used by performance specialists when the task involves identifying performance differences in an application across different platform architectures, operating systems, or, in the case of Java, different implementations of the Java Virtual Machine.

4. Whatever triggers the performance tuning activity, the first step in a top down approach is to monitor the application while it runs under a load of particular interest. This monitoring may include observing operating system statistics, Java Virtual Machine (JVM) statistics, Java EE container statistics, and/or application performance instrumentation statistics. Based on what the monitoring information suggests, you then choose the next step, such as tuning the JVM’s garbage collectors, tuning the JVM’s command line options, tuning the operating system, or profiling the application.
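The JVM-level part of this monitoring can start from statistics the JVM already exposes. A minimal sketch using the standard `java.lang.management` API follows; it samples the processor count and system load average, heap usage, thread counts, and per-collector garbage collection counts and times from inside the process. The class name and the one-second sampling interval are illustrative assumptions; in practice the same data is often read from outside the process with JDK tools such as `jstat` or JConsole.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import java.lang.management.OperatingSystemMXBean;
import java.lang.management.ThreadMXBean;

public class JvmSnapshot {

    // Collects a few JVM-level statistics of the kind a top down analysis starts from.
    static String snapshot() {
        StringBuilder sb = new StringBuilder();

        OperatingSystemMXBean os = ManagementFactory.getOperatingSystemMXBean();
        // getSystemLoadAverage() returns a negative value on platforms where it is unavailable.
        sb.append(String.format("processors=%d loadAverage=%.2f%n",
                os.getAvailableProcessors(), os.getSystemLoadAverage()));

        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        sb.append(String.format("heapUsed=%dKB heapCommitted=%dKB%n",
                heap.getUsed() / 1024, heap.getCommitted() / 1024));

        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        sb.append(String.format("liveThreads=%d peakThreads=%d%n",
                threads.getThreadCount(), threads.getPeakThreadCount()));

        // One entry per collector (typically young and old generation);
        // counts and times are cumulative since JVM start.
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            sb.append(String.format("gc[%s] count=%d timeMs=%d%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return sb.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        // Sample periodically while the application runs under the load of interest.
        for (int i = 0; i < 3; i++) {
            System.out.print(snapshot());
            Thread.sleep(1000);
        }
    }
}
```

Because the collector counters are cumulative, the useful signal is the difference between successive snapshots, for example how much `count` and `timeMs` grow per interval under load.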

5. The bottom up approach is also frequently used when it is not possible to change the application’s source code. In the bottom up approach, gathering performance statistics and monitoring begin at the lowest level, the CPU. The number of CPU instructions executed, often referred to as path length, and the number of CPU cache misses tend to be the most commonly observed statistics in a bottom up approach. The focus of the bottom up approach is usually to improve CPU utilization without making changes to the application.

6. In cases where the application can be modified, the bottom up approach may result in changes to the application. These may include source code changes such as placing frequently accessed data close together in memory so that it can be loaded on the same CPU cache line, avoiding a wait while the data is fetched from memory.
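The data-placement change described in item 6 can be illustrated with a hypothetical `Order` class. Summing a field through a list of objects dereferences a separate heap object per element, whereas keeping the frequently read field in its own `double[]` stores the values contiguously, so each cache line fetched holds several of them. This is a sketch of the idea only: the JVM, not the programmer, decides where objects are placed on the heap, so the actual difference has to be measured (for example with a benchmark harness such as JMH) rather than assumed.

```java
import java.util.ArrayList;
import java.util.List;

public class LocalityExample {

    // Layout A: each order is a separate heap object. The hot field (price) is reached
    // through a reference, so consecutive prices may sit on different cache lines.
    static final class Order {
        final long id;
        final String customer;   // cold data, not read in the hot loop
        final double price;      // hot data, read on every iteration

        Order(long id, String customer, double price) {
            this.id = id;
            this.customer = customer;
            this.price = price;
        }
    }

    static double totalFromObjects(List<Order> orders) {
        double total = 0.0;
        for (Order o : orders) {
            total += o.price;    // one reference dereference per element
        }
        return total;
    }

    // Layout B: the hot field is kept in its own primitive array, so prices are
    // adjacent in memory and several arrive with each cache line fetched.
    static double totalFromArray(double[] prices) {
        double total = 0.0;
        for (double p : prices) {
            total += p;
        }
        return total;
    }

    public static void main(String[] args) {
        int n = 1_000_000;
        List<Order> orders = new ArrayList<>(n);
        double[] prices = new double[n];
        for (int i = 0; i < n; i++) {
            double price = i % 100;
            orders.add(new Order(i, "customer-" + i, price));
            prices[i] = price;
        }
        // Both layouts compute the same total; only the memory access pattern differs.
        System.out.println(totalFromObjects(orders) + " " + totalFromArray(prices));
    }
}
```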

7. On a CPU with multiple hardware threads per core, the operating system views each hardware thread as a processor. An important distinction of this type of CPU is that only one of a core's hardware threads executes on a given clock cycle. When a long latency event occurs, such as a CPU cache miss, and there is another runnable hardware thread in the same core, that hardware thread executes on the next clock cycle. In contrast, other modern CPUs with a single hardware thread per core, or even hyperthreaded cores, will block on long latency events such as CPU cache misses and may waste clock cycles waiting for the event to be satisfied. On those CPUs, if another application thread is ready to run and no other hardware thread is available, a thread context switch must occur before that thread can execute. Thread context switches generally take hundreds of clock cycles to complete. Hence, for a highly threaded application with many threads ready to execute, processors with multiple hardware threads per core can execute the application faster, because they can switch to another runnable thread within a core on the next clock cycle. This capability comes at the expense of a slower clock rate.
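From Java, the operating system's view of hardware threads as processors shows up in `Runtime.availableProcessors()`, which reports the logical processors available to the JVM rather than physical cores (and may be further limited by the OS or a container). The sketch below sizes a fixed thread pool to that count and splits a CPU-bound sum across it, so a highly threaded workload has one runnable application thread per hardware thread. The class and method names are hypothetical, and sizing the pool to the processor count is a common heuristic for CPU-bound work rather than a rule; workloads that block on I/O typically use more threads.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class HardwareThreadPool {

    // Sums the integers in [from, to); stands in for a CPU-bound unit of work.
    static long sumRange(long from, long to) {
        long s = 0;
        for (long i = from; i < to; i++) s += i;
        return s;
    }

    // Splits [0, n) into one chunk per worker and sums the chunks in parallel.
    static long parallelSum(long n, int workers) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            long chunk = (n + workers - 1) / workers;   // ceiling division
            for (int w = 0; w < workers; w++) {
                long from = Math.min(n, w * chunk);
                long to = Math.min(n, from + chunk);
                parts.add(pool.submit(() -> sumRange(from, to)));
            }
            long total = 0;
            for (Future<Long> f : parts) total += f.get();
            return total;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        // Logical processors visible to the JVM: on CPUs with several hardware threads
        // per core this counts hardware threads, not cores.
        int hw = Runtime.getRuntime().availableProcessors();
        System.out.println("hardware threads visible to the JVM: " + hw);
        System.out.println("sum = " + parallelSum(100_000_000L, hw));
    }
}
```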

8. When choosing a computing system, if the target application is expected to run a large number of application threads concurrently, it will likely perform and scale better on a processor with more hardware threads per core than on one with fewer. In contrast, an application expected to have a small number of application threads will likely perform better on a processor with a higher clock rate and fewer hardware threads per core than on a slower-clocked processor with more hardware threads per core.