Lucene学习总结之七:Lucene搜索过程解析(3)

2.3、QueryParser解析查询语句生成查询对象

代码为:

| QueryParser parser = new QueryParser(Version.LUCENE_CURRENT, "contents", new StandardAnalyzer(Version.LUCENE_CURRENT)); Query query = parser.parse("+(+apple* -boy) (cat* dog) -(eat~ foods)"); |

此过程相对复杂,涉及JavaCC,QueryParser,分词器,查询语法等,本章不会详细论述,会在后面的章节中一一说明。

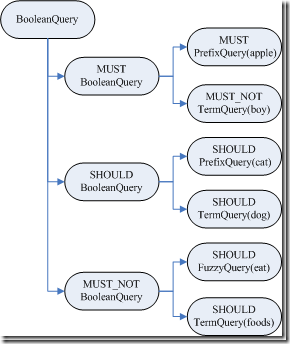

此处唯一要说明的是,根据查询语句生成的是一个Query树,这棵树很重要,并且会生成其他的树,一直贯穿整个索引过程。

| query BooleanQuery (id=96) |

对于Query对象有以下说明:

- BooleanQuery即所有的子语句按照布尔关系合并

- +也即MUST表示必须满足的语句

- SHOULD表示可以满足的,minNrShouldMatch表示在SHOULD中必须满足的最小语句个数,默认是0,也即既然是SHOULD,也即或的关系,可以一个也不满足(当然没有MUST的时候除外)。

- -也即MUST_NOT表示必须不能满足的语句

- 树的叶子节点中:

- 最基本的是TermQuery,也即表示一个词

- 当然也可以是PrefixQuery和FuzzyQuery,这些查询语句由于特殊的语法,可能对应的不是一个词,而是多个词,因而他们都有rewriteMethod对象指向MultiTermQuery的Inner Class,表示对应多个词,在查询过程中会得到特殊处理。

2.4、搜索查询对象

代码为:

TopDocs docs = searcher.search(query, 50);

其最终调用search(createWeight(query), filter, n);

索引过程包含以下子过程:

- 创建weight树,计算term weight

- 创建scorer及SumScorer树,为合并倒排表做准备

- 用SumScorer进行倒排表合并

- 收集文档结果集合及计算打分

2.4.1、创建Weight对象树,计算Term Weight

IndexSearcher(Searcher).createWeight(Query) 代码如下:

| protected Weight createWeight(Query query) throws IOException { return query.weight(this); } |

| BooleanQuery(Query).weight(Searcher) 代码为: public Weight weight(Searcher searcher) throws IOException { //重写Query对象树 Query query = searcher.rewrite(this); //创建Weight对象树 Weight weight = query.createWeight(searcher); //计算Term Weight分数 float sum = weight.sumOfSquaredWeights(); float norm = getSimilarity(searcher).queryNorm(sum); weight.normalize(norm); return weight; } |

此过程又包含以下过程:

- 重写Query对象树

- 创建Weight对象树

- 计算Term Weight分数

2.4.1.1、重写Query对象树

从BooleanQuery的rewrite函数我们可以看出,重写过程也是一个递归的过程,一直到Query对象树的叶子节点。

| BooleanQuery.rewrite(IndexReader) 代码如下: BooleanQuery clone = null; for (int i = 0 ; i < clauses.size(); i++) { BooleanClause c = clauses.get(i); //对每一个子语句的Query对象进行重写 Query query = c.getQuery().rewrite(reader); if (query != c.getQuery()) { if (clone == null) clone = (BooleanQuery)this.clone(); //重写后的Query对象加入复制的新Query对象树 clone.clauses.set(i, new BooleanClause(query, c.getOccur())); } } if (clone != null) { return clone; //如果有子语句被重写,则返回复制的新Query对象树。 } else return this; //否则将老的Query对象树返回。 |

让我们把目光聚集到叶子节点上,叶子节点基本是两种,或是TermQuery,或是MultiTermQuery,从Lucene的源码可以看出TermQuery的rewrite函数就是返回对象本身,也即真正需要重写的是MultiTermQuery,也即一个Query代表多个Term参与查询,如本例子中的PrefixQuery及FuzzyQuery。

对此类的Query,Lucene不能够直接进行查询,必须进行重写处理:

- 首先,要从索引文件的词典中,把多个Term都找出来,比如"appl*",我们在索引文件的词典中可以找到如下Term:"apple","apples","apply",这些Term都要参与查询过程,而非原来的"appl*"参与查询过程,因为词典中根本就没有"appl*"。

- 然后,将取出的多个Term重新组织成新的Query对象进行查询,基本有两种方式:

- 方式一:将多个Term看成一个Term,将包含它们的文档号取出来放在一起(DocId Set),作为一个统一的倒排表来参与倒排表的合并。

- 方式二:将多个Term组成一个BooleanQuery,它们之间是OR的关系。

从上面的Query对象树中,我们可以看到,MultiTermQuery都有一个RewriteMethod成员变量,就是用来重写Query对象的,有以下几种:

- ConstantScoreFilterRewrite采取的是方式一,其rewrite函数实现如下:

| public Query rewrite(IndexReader reader, MultiTermQuery query) { Query result = new ConstantScoreQuery(new MultiTermQueryWrapperFilter<MultiTermQuery>(query)); result.setBoost(query.getBoost()); return result; } |

| MultiTermQueryWrapperFilter中的getDocIdSet函数实现如下:

public DocIdSet getDocIdSet(IndexReader reader) throws IOException { //得到MultiTermQuery的Term枚举器 final TermEnum enumerator = query.getEnum(reader); try { if (enumerator.term() == null) return DocIdSet.EMPTY_DOCIDSET; //创建包含多个Term的文档号集合 final OpenBitSet bitSet = new OpenBitSet(reader.maxDoc()); final int[] docs = new int[32]; final int[] freqs = new int[32]; TermDocs termDocs = reader.termDocs(); try { int termCount = 0; //一个循环,取出对应MultiTermQuery的所有的Term,取出他们的文档号,加入集合 do { Term term = enumerator.term(); if (term == null) break; termCount++; termDocs.seek(term); while (true) { final int count = termDocs.read(docs, freqs); if (count != 0) { for(int i=0;i<count;i++) { bitSet.set(docs[i]); } } else { break; } } } while (enumerator.next()); query.incTotalNumberOfTerms(termCount); } finally { termDocs.close(); } return bitSet; } finally { enumerator.close(); } } |

- ScoringBooleanQueryRewrite及其子类ConstantScoreBooleanQueryRewrite采取方式二,其rewrite函数代码如下:

|

public Query rewrite(IndexReader reader, MultiTermQuery query) throws IOException { //得到MultiTermQuery的Term枚举器 FilteredTermEnum enumerator = query.getEnum(reader); BooleanQuery result = new BooleanQuery(true); int count = 0; try { //一个循环,取出对应MultiTermQuery的所有的Term,加入BooleanQuery do { Term t = enumerator.term(); if (t != null) { TermQuery tq = new TermQuery(t); tq.setBoost(query.getBoost() * enumerator.difference()); result.add(tq, BooleanClause.Occur.SHOULD); count++; } } while (enumerator.next()); } finally { enumerator.close(); } query.incTotalNumberOfTerms(count); return result; } |

- 以上两种方式各有优劣:

- 方式一使得MultiTermQuery对应的所有的Term看成一个Term,组成一个docid set,作为统一的倒排表参与倒排表的合并,这样无论这样的Term在索引中有多少,都只会有一个倒排表参与合并,不会产生TooManyClauses异常,也使得性能得到提高。但是多个Term之间的tf, idf等差别将被忽略,所以采用方式二的RewriteMethod为ConstantScoreXXX,也即除了用户指定的Query boost,其他的打分计算全部忽略。

- 方式二使得整个Query对象树被展开,叶子节点都为TermQuery,MultiTermQuery中的多个Term可根据在索引中的tf, idf等参与打分计算,然而我们事先并不知道索引中和MultiTermQuery相对应的Term到底有多少个,因而会出现TooManyClauses异常,也即一个BooleanQuery中的子查询太多。这样会造成要合并的倒排表非常多,从而影响性能。

- Lucene认为对于MultiTermQuery这种查询,打分计算忽略是很合理的,因为当用户输入"appl*"的时候,他并不知道索引中有什么与此相关,也并不偏爱其中之一,因而计算这些词之间的差别对用户来讲是没有意义的。从而Lucene对方式二也提供了ConstantScoreXXX,来提高搜索过程的性能,从后面的例子来看,会影响文档打分,在实际的系统应用中,还是存在问题的。

- 为了兼顾上述两种方式,Lucene提供了ConstantScoreAutoRewrite,来根据不同的情况,选择不同的方式。

| ConstantScoreAutoRewrite.rewrite代码如下: public Query rewrite(IndexReader reader, MultiTermQuery query) throws IOException { final Collection<Term> pendingTerms = new ArrayList<Term>(); //计算文档数目限制,docCountPercent默认为0.1,也即索引文档总数的0.1% final int docCountCutoff = (int) ((docCountPercent / 100.) * reader.maxDoc()); //计算Term数目限制,默认为350 final int termCountLimit = Math.min(BooleanQuery.getMaxClauseCount(), termCountCutoff); int docVisitCount = 0; FilteredTermEnum enumerator = query.getEnum(reader); try { //一个循环,取出与MultiTermQuery相关的所有的Term。 while(true) { Term t = enumerator.term(); if (t != null) { pendingTerms.add(t); docVisitCount += reader.docFreq(t); } //如果Term数目超限,或者文档数目超限,则可能非常影响倒排表合并的性能,因而选用方式一,也即ConstantScoreFilterRewrite的方式 if (pendingTerms.size() >= termCountLimit || docVisitCount >= docCountCutoff) { Query result = new ConstantScoreQuery(new MultiTermQueryWrapperFilter<MultiTermQuery>(query)); result.setBoost(query.getBoost()); return result; } else if (!enumerator.next()) { //如果Term数目不太多,而且文档数目也不太多,不会影响倒排表合并的性能,因而选用方式二,也即ConstantScoreBooleanQueryRewrite的方式。 BooleanQuery bq = new BooleanQuery(true); for (final Term term: pendingTerms) { TermQuery tq = new TermQuery(term); bq.add(tq, BooleanClause.Occur.SHOULD); } Query result = new ConstantScoreQuery(new QueryWrapperFilter(bq)); result.setBoost(query.getBoost()); query.incTotalNumberOfTerms(pendingTerms.size()); return result; } } } finally { enumerator.close(); } } |

从上面的叙述中,我们知道,在重写Query对象树的时候,从MultiTermQuery得到的TermEnum很重要,能够得到对应MultiTermQuery的所有的Term,这是怎么做的的呢?

MultiTermQuery的getEnum返回的是FilteredTermEnum,它有两个成员变量,其中TermEnum actualEnum是用来枚举索引中所有的Term的,而Term currentTerm指向的是当前满足条件的Term,FilteredTermEnum的next()函数如下:

| public boolean next() throws IOException { if (actualEnum == null) return false; currentTerm = null; //不断得到下一个索引中的Term while (currentTerm == null) { if (endEnum()) return false; if (actualEnum.next()) { Term term = actualEnum.term(); //如果当前索引中的Term满足条件,则赋值为当前的Term if (termCompare(term)) { currentTerm = term; return true; } } else return false; } currentTerm = null; return false; } |

| 不同的MultiTermQuery的termCompare不同:

protected boolean termCompare(Term term) { //只要前缀相同,就满足条件 if (term.field() == prefix.field() && term.text().startsWith(prefix.text())){ return true; } endEnum = true; return false; }

protected final boolean termCompare(Term term) { //对于FuzzyQuery,其prefix设为空"",也即这一条件一定满足,只要计算的是similarity if (field == term.field() && term.text().startsWith(prefix)) { final String target = term.text().substring(prefix.length()); this.similarity = similarity(target); return (similarity > minimumSimilarity); } endEnum = true; return false; } //计算Levenshtein distance 也即 edit distance,对于两个字符串,从一个转换成为另一个所需要的最少基本操作(添加,删除,替换)数。

private synchronized final float similarity(final String target) { final int m = target.length(); final int n = text.length(); // init matrix d for (int i = 0; i<=n; ++i) { p[i] = i; } // start computing edit distance for (int j = 1; j<=m; ++j) { // iterates through target int bestPossibleEditDistance = m; final char t_j = target.charAt(j-1); // jth character of t d[0] = j; for (int i=1; i<=n; ++i) { // iterates through text // minimum of cell to the left+1, to the top+1, diagonally left and up +(0|1) if (t_j != text.charAt(i-1)) { d[i] = Math.min(Math.min(d[i-1], p[i]), p[i-1]) + 1; } else { d[i] = Math.min(Math.min(d[i-1]+1, p[i]+1), p[i-1]); } bestPossibleEditDistance = Math.min(bestPossibleEditDistance, d[i]); } // copy current distance counts to 'previous row' distance counts: swap p and d int _d[] = p; p = d; d = _d; } return 1.0f - ((float)p[n] / (float) (Math.min(n, m))); } |

| 有关edit distance的算法详见http://www.merriampark.com/ld.htm 计算两个字符串s和t的edit distance算法如下: Step 1: Step 2: Step 3: Step 4: Step 5: Step 6: Step 7: 举例说明其过程如下: 比较的两个字符串为:“GUMBO” 和 "GAMBOL". |

下面做一个试验,来说明ConstantScoreXXX对评分的影响:

| 在索引中,添加了以下四篇文档: file01.txt : apple other other other other file02.txt : apple apple other other other file03.txt : apple apple apple other other file04.txt : apple apple apple other other 搜索"apple"结果如下: docid : 3 score : 0.67974937 文档按照包含"apple"的多少排序。 而搜索"apple*"结果如下: docid : 0 score : 1.0 也即Lucene放弃了对score的计算。 |

经过rewrite,得到的新Query对象树如下:

| query BooleanQuery (id=89) | | //"apple*"被用方式一重写为ConstantScoreQuery | | //"cat*"被用方式一重写为ConstantScoreQuery | | //"eat~"作为FuzzyQuery,被重写成BooleanQuery, |