Linux + Apache + Tomcat Clustering and Load Balancing


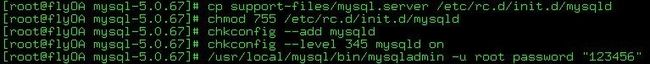

Add to startup and set the MySQL password

Servers:

- 192.168.14.30 (Apache load balancer)
- 10.0.30.67 (Tomcat server)
- 10.0.30.151 (Tomcat server)

Software environment:

- CentOS-5.5-x86_64
- Apache 2.2.3 (bundled with the CentOS installation)
- jdk-6u26-linux-x64-rpm.bin
- apache-tomcat-6.0.32.tar.gz

1. First, configure the Tomcat servers

On each Tomcat server, install the JDK and then configure the Java path. The self-extracting RPM installer puts the JDK in /usr/java/jdk1.6.0_26:

```
[root@cloud download]# chmod +x jdk-6u26-linux-x64-rpm.bin
[root@cloud download]# ./jdk-6u26-linux-x64-rpm.bin
[root@cloud download]# vim /etc/profile

JAVA_HOME=/usr/java/jdk1.6.0_26
PATH=$PATH:$JAVA_HOME/bin
CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export JAVA_HOME
export PATH
export CLASSPATH

[root@cloud download]# source /etc/profile
[root@cloud download]# java -version
java version "1.6.0_26"
Java(TM) SE Runtime Environment (build 1.6.0_26-b03)
Java HotSpot(TM) 64-Bit Server VM (build 20.1-b02, mixed mode)
```
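You can confirm that the profile settings took effect with a short check. The sketch below uses two hypothetical helper names, `check_java_home` and `path_contains`; they are not part of the JDK or CentOS.

```shell
#!/usr/bin/env bash
# check_java_home DIR: succeed (and print OK) only if DIR/bin/java is an executable file.
check_java_home() {
    local dir=$1
    if [ -n "$dir" ] && [ -x "$dir/bin/java" ]; then
        echo "OK: $dir/bin/java"
    else
        echo "MISSING: $dir/bin/java" >&2
        return 1
    fi
}

# path_contains DIR: succeed if DIR is one of the colon-separated entries of $PATH.
path_contains() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}
```

On a correctly configured node, `check_java_home "$JAVA_HOME" && path_contains "$JAVA_HOME/bin"` prints `OK: /usr/java/jdk1.6.0_26/bin/java` and exits with status 0.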

Install Tomcat:

```
[root@cloud download]# tar zxvf apache-tomcat-6.0.32.tar.gz
[root@cloud download]# cp -r apache-tomcat-6.0.32 /usr/tomcat6
```

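Edit the server.xml configuration file.

Uncomment the Engine element that carries the jvmRoute attribute, and comment out the original one. jvmRoute is used for routing, and Apache uses its value later. Name this server jvm1 and the other one jvm2:

```xml
<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">
<!--<Engine name="Catalina" defaultHost="localhost">-->
```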
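The two server.xml files differ only in this attribute, so the edit can be scripted. The sketch below is a hypothetical helper. It assumes the stock Tomcat 6 line `<Engine name="Catalina" defaultHost="localhost">` is still present verbatim, and it keeps a `.bak` copy of the original file.

```shell
#!/usr/bin/env bash
# set_jvm_route FILE ROUTE  (hypothetical helper)
# Adds jvmRoute="ROUTE" to the stock Tomcat 6 Engine element in FILE and keeps FILE.bak.
# The pattern requires '">' right after "localhost", so an Engine line that already
# has a jvmRoute attribute (such as the commented-out example) is left untouched.
set_jvm_route() {
    local file=$1 route=$2
    if [ ! -f "$file" ] || [ -z "$route" ]; then
        echo "usage: set_jvm_route FILE ROUTE" >&2
        return 2
    fi
    sed -i.bak \
        "s|<Engine name=\"Catalina\" defaultHost=\"localhost\">|<Engine name=\"Catalina\" defaultHost=\"localhost\" jvmRoute=\"$route\">|" \
        "$file"
}
```

Run `set_jvm_route /usr/tomcat6/conf/server.xml jvm1` on 10.0.30.67 and `set_jvm_route /usr/tomcat6/conf/server.xml jvm2` on 10.0.30.151.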

In server.xml, replace `<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>` (inside the Engine element) with:

```xml
<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"
         channelSendOptions="8">

  <Manager className="org.apache.catalina.ha.session.DeltaManager"
           expireSessionsOnShutdown="false"
           notifyListenersOnReplication="true"/>

  <Channel className="org.apache.catalina.tribes.group.GroupChannel">
    <Membership className="org.apache.catalina.tribes.membership.McastService"
                address="228.0.0.4"
                port="45564"
                frequency="500"
                dropTime="3000"/>
    <Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver"
              address="auto"
              port="4000"
              autoBind="100"
              selectorTimeout="5000"
              maxThreads="6"/>
    <Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">
      <Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>
    </Sender>
    <Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>
    <Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>
  </Channel>

  <Valve className="org.apache.catalina.ha.tcp.ReplicationValve"
         filter=""/>
  <Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

  <Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer"
            tempDir="/tmp/war-temp/"
            deployDir="/tmp/war-deploy/"
            watchDir="/tmp/war-listen/"
            watchEnabled="false"/>

  <ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>
  <ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>
</Cluster>
```
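The Cluster element above needs two kinds of traffic between the Tomcat nodes:

- UDP multicast heartbeats to 228.0.0.4:45564 (Membership).
- TCP replication connections to the Receiver, which takes the first free port in the 4000-4100 range.

If the default CentOS iptables firewall is enabled, the sketch below prints rules that would open both. The helper is hypothetical and only prints the commands, so review the output before running it as root.

```shell
#!/usr/bin/env bash
# cluster_fw_rules PEER...  (hypothetical helper)
# Prints, but does not apply, iptables rules for the Cluster configuration above:
# multicast heartbeats on 228.0.0.4:45564/udp and replication on TCP 4000-4100
# from each peer Tomcat node, followed by the CentOS command that saves the rules.
cluster_fw_rules() {
    local peer
    echo "iptables -I INPUT -p udp -d 228.0.0.4 --dport 45564 -j ACCEPT"
    for peer in "$@"; do
        echo "iptables -I INPUT -p tcp -s $peer --dport 4000:4100 -j ACCEPT"
    done
    echo "service iptables save"
}
```

On 10.0.30.67, run `cluster_fw_rules 10.0.30.151`, and do the reverse on the other node.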

Notes on the configuration above (from the Tomcat 6 Clustering/Session Replication HOW-TO):

Using the above configuration will enable all-to-all session replication, using the DeltaManager to replicate session deltas. By all-to-all we mean that the session gets replicated to all the other nodes in the cluster. This works great for smaller clusters, but we don't recommend it for larger clusters (a lot of Tomcat nodes). Also, when using the DeltaManager, it will replicate to all nodes, even nodes that don't have the application deployed.

To get around this problem, you'll want to use the BackupManager. This manager only replicates the session data to one backup node, and only to nodes that have the application deployed. Downside of the BackupManager: not quite as battle tested as the delta manager.

Here are some of the important default values:

1. Multicast address is 228.0.0.4

2. Multicast port is 45564 (the port and the address together determine cluster membership).

3. The IP broadcasted is java.net.InetAddress.getLocalHost().getHostAddress() (make sure you don't broadcast 127.0.0.1, this is a common error)

4. The TCP port listening for replication messages is the first available server socket in range 4000-4100

5. Two listeners are configured: ClusterSessionListener and JvmRouteSessionIDBinderListener

6. Two interceptors are configured: TcpFailureDetector and MessageDispatch15Interceptor
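Default 3 is the one that most often goes wrong. If the machine's host name maps to 127.0.0.1 in /etc/hosts, the node announces a loopback address and its peer cannot replicate to it.

The sketch below is a minimal check. It assumes that `getent hosts "$(hostname)"` returns roughly the address Java's `InetAddress.getLocalHost()` would pick, since both go through the system resolver. `warn_if_loopback` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# warn_if_loopback IP  (hypothetical helper)
# Fails with a warning when IP is empty (the name did not resolve) or in 127.0.0.0/8,
# i.e. when a Tomcat node would advertise an address its peers cannot reach.
warn_if_loopback() {
    local ip=$1
    case "$ip" in
        "")
            echo "WARNING: the host name does not resolve to any address" >&2
            return 1 ;;
        127.*)
            echo "WARNING: $ip is a loopback address; map the host name to the LAN IP in /etc/hosts" >&2
            return 1 ;;
        *)
            return 0 ;;
    esac
}
```

Usage on a Tomcat node: `warn_if_loopback "$(getent hosts "$(hostname)" | awk '{ print $1; exit }')"`.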

The Cluster block shown earlier is this default cluster configuration, written out explicitly.

To run session replication in your Tomcat 6.0 container, the following steps should be completed:

- All your session attributes must implement java.io.Serializable
- Uncomment the Cluster element in server.xml
- If you have defined custom cluster valves, make sure you have the ReplicationValve defined as well under the Cluster element in server.xml
- If your Tomcat instances are running on the same machine, make sure the tcpListenPort attribute is unique for each instance; in most cases Tomcat is smart enough to resolve this on its own by autodetecting available ports in the range 4000-4100
- Make sure your web.xml has the `<distributable/>` element
- If you are using mod_jk, make sure that the jvmRoute attribute is set at your Engine `<Engine name="Catalina" jvmRoute="node01" >` and that the jvmRoute attribute value matches your worker name in workers.properties
- Make sure that all nodes have the same time and sync with NTP service!
- Make sure that your load balancer is configured for sticky session mode.
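Two items on this checklist can be verified from the shell: the Cluster element must be active rather than commented out, and the Engine must carry a jvmRoute. With mod_proxy_balancer, as used in this article, the counterpart of the mod_jk worker name is the `route=` value on each BalancerMember.

The sketch below uses hypothetical helper names and works at the text level, not as a real XML parser. It strips `<!-- ... -->` comments before searching, because the stock server.xml ships the Cluster element inside a comment.

```shell
#!/usr/bin/env bash
# strip_xml_comments FILE: print FILE with every <!-- ... --> removed,
# including comments that span several lines (XML comments cannot nest).
strip_xml_comments() {
    awk '
    {
        line = $0; out = ""
        while (line != "") {
            if (in_comment) {
                i = index(line, "-->")
                if (i == 0) { line = "" }
                else { line = substr(line, i + 3); in_comment = 0 }
            } else {
                i = index(line, "<!--")
                if (i == 0) { out = out line; line = "" }
                else { out = out substr(line, 1, i - 1); line = substr(line, i + 4); in_comment = 1 }
            }
        }
        print out
    }' "$1"
}

# check_server_xml FILE: print each missing prerequisite; fail if any is missing.
check_server_xml() {
    local xml status=0
    xml=$(strip_xml_comments "$1")
    if ! printf '%s\n' "$xml" | grep -Eq '<Cluster([[:space:]]|>|$)'; then
        echo "Cluster element is missing or commented out"
        status=1
    fi
    if ! printf '%s\n' "$xml" | grep -Eq '<Engine[^>]*jvmRoute="[^"]+"'; then
        echo "Engine has no jvmRoute attribute"
        status=1
    fi
    return $status
}
```

Usage: `check_server_xml /usr/tomcat6/conf/server.xml && echo "server.xml looks ready"`.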

Configure web.xml by adding the `<distributable/>` element, which tells Tomcat to replicate this application's sessions across the cluster:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<web-app version="2.4" xmlns="http://java.sun.com/xml/ns/j2ee" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://java.sun.com/xml/ns/j2ee http://java.sun.com/xml/ns/j2ee/web-app_2_4.xsd">
    <display-name>cncloud_gr</display-name>
    <distributable/>
    <!-- ... the application's other elements ... -->
</web-app>
```
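A small hypothetical helper can confirm that the packaged application really carries the element. It is a plain text match that accepts both `<distributable/>` and `<distributable>`; it does not skip commented-out elements.

```shell
#!/usr/bin/env bash
# has_distributable FILE  (hypothetical helper)
# Succeeds if FILE (a web.xml) contains <distributable/> or <distributable>.
# Plain text match: a commented-out element would also count.
has_distributable() {
    grep -Eq '<distributable[[:space:]]*(/>|>)' "$1"
}
```

To check inside the WAR, run `unzip -p /usr/tomcat6/webapps/cncloud.war WEB-INF/web.xml > /tmp/web.xml && has_distributable /tmp/web.xml && echo distributable`. The file name cncloud.war is an assumption based on `docBase="cncloud"`.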

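Configure the web application's access path. This is a Context element inside the Host element of server.xml; `path=""` serves cncloud as the root application:

```xml
<Context path="" docBase="cncloud" debug="0" />
```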

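Configure the web application's HTTP port on the Tomcat Connector in server.xml. Port 80 matches the BalancerMember URLs configured in Apache, which carry no port number:

```xml
<Connector port="80" protocol="HTTP/1.1"
           connectionTimeout="20000"
           redirectPort="8443"
           URIEncoding="UTF-8"
           useBodyEncodingForURI="true" />
```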

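Package the web project as a WAR file and put it in Tomcat's webapps directory (/usr/tomcat6/webapps).

Start Tomcat, then check that each server can be reached on its own:

```
cd /usr/tomcat6/bin
./startup.sh
```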

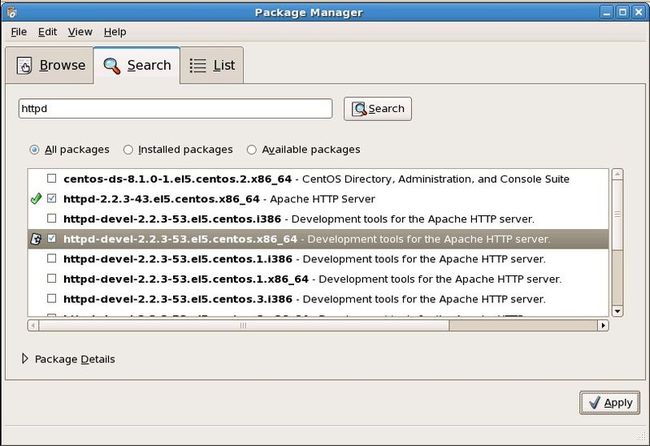

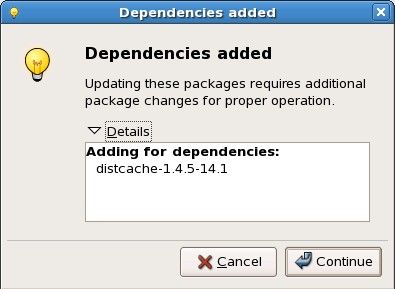
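Besides checking in a browser, you can probe the nodes from the command line. The sketch below assumes curl is installed. `check_nodes` is a hypothetical helper that treats any status other than HTTP 200, including a redirect, as a failure. curl reports `000` when it cannot connect at all.

```shell
#!/usr/bin/env bash
# check_nodes URL...  (hypothetical helper; needs curl)
# Prints "URL -> STATUS" for each node and fails if any node did not answer 200.
check_nodes() {
    local url code failed=0
    for url in "$@"; do
        # -w prints only the status code; --max-time bounds the wait for a dead node
        code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$url")
        echo "$url -> $code"
        [ "$code" = "200" ] || failed=1
    done
    return $failed
}
```

Usage: `check_nodes http://10.0.30.67/ http://10.0.30.151/`.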

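2. Install the Apache server

Any of the following three methods works:

- Command line: `yum install httpd httpd-devel mod_ssl`
- Compile Apache from source; a source build installs to /usr/local/apache2 by default
- Graphical interface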

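Check that Apache is installed by opening http://192.168.14.30/

Default Apache configuration file: /etc/httpd/conf/httpd.conf

Control script: /usr/sbin/apachectl (the loadable modules live in /etc/httpd/modules)

Web root: /var/www/html

Start Apache: service httpd start

If httpd warns about the server name when it starts, the host name has not been configured. Map it in /etc/hosts: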

```
[root@cloud download]# vim /etc/hosts
# Do not remove the following line, or various programs
# that require network functionality will fail.
127.0.0.1 localhost.localdomain localhost
::1 localhost6.localdomain6 localhost6
192.168.14.30 cloud_test.com cloud_test
```
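To confirm the mapping, the sketch below uses `getent`, which queries the system resolver and therefore consults /etc/hosts under the default nsswitch.conf. `resolves` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# resolves NAME  (hypothetical helper)
# Succeeds if NAME resolves through the system resolver (/etc/hosts, then DNS).
resolves() {
    getent hosts "$1" >/dev/null
}
```

Usage: `resolves "$(hostname)" && echo ok || echo "add $(hostname) to /etc/hosts"`.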

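Edit the Apache configuration file:

```
vim /etc/httpd/conf/httpd.conf
```

Set the web server's document root:

```apache
DocumentRoot "/var/www/html"
```

Set the directory index pages by changing

```apache
DirectoryIndex index.html index.html.var
```

to

```apache
DirectoryIndex index.html index.jsp index.htm
```

Set the server name:

```apache
ServerName http://i-dbank.com:80
```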

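Append the following to the end of the file:

```apache
<Location /server-status>
    SetHandler server-status
    Order Deny,Allow
    Deny from all
    # Under Order Deny,Allow the Allow line is evaluated last, so "Allow from all"
    # opens this page to every client; narrow it to an admin network in production
    Allow from all
</Location>

<Location /balancer-manager>
    SetHandler balancer-manager
    Order Deny,Allow
    Deny from all
    Allow from all
</Location>
```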
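With /server-status enabled, mod_status also serves a machine-readable variant at /server-status?auto, with one `Key: value` pair per line (for example `BusyWorkers` and `IdleWorkers`). The sketch below pulls out a single field; the helper name `status_field` is hypothetical.

```shell
#!/usr/bin/env bash
# status_field KEY  (hypothetical helper)
# Reads mod_status "?auto" output on stdin and prints the value of KEY.
status_field() {
    awk -F': ' -v key="$1" '$1 == key { print $2 }'
}
```

Usage: `curl -s 'http://192.168.14.30/server-status?auto' | status_field BusyWorkers`.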

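Also append the reverse-proxy and load-balancer settings:

```apache
ProxyPass /images !
ProxyPass /css !
ProxyPass /js !
ProxyRequests Off
# The sticky session name is matched case-sensitively; Tomcat's session cookie is JSESSIONID
ProxyPass / balancer://tomcatcluster/ stickysession=JSESSIONID nofailover=On
# ProxyPass rules are checked in order and the first match wins, so "/" above
# already captures /temp; move this line above it if /temp should be proxied here
ProxyPass /temp http://192.168.14.30/temp/
#ProxyPassReverse / balancer://tomcatcluster/
#ProxyPassReverse /ccas balancer://tomcatcluster/ccas/
#ProxyPassReverse /clpay balancer://tomcatcluster/clpay/

<Proxy balancer://tomcatcluster>
    #BalancerMember http://192.168.14.30:8080 loadfactor=1
    BalancerMember http://10.0.30.67 route=jvm1 loadfactor=1
    # 10.0.30.151 has loadfactor=2, so it receives about twice as many requests as 10.0.30.67
    BalancerMember http://10.0.30.151 route=jvm2 loadfactor=2
</Proxy>
```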
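Under the default `byrequests` method, mod_proxy_balancer splits requests in proportion to each member's loadfactor. The sketch below is illustrative arithmetic only, not a real simulation of the balancer; `lb_share` is a hypothetical helper. It shows the split the two BalancerMember lines above imply.

```shell
#!/usr/bin/env bash
# lb_share NAME=LOADFACTOR...  (hypothetical helper)
# Prints each member's expected share of requests as an integer percent,
# assuming traffic is divided in proportion to loadfactor.
lb_share() {
    local pair total=0
    for pair in "$@"; do
        total=$(( total + ${pair#*=} ))
    done
    [ "$total" -gt 0 ] || return 1
    for pair in "$@"; do
        echo "${pair%%=*} $(( ${pair#*=} * 100 / total ))%"
    done
}
```

`lb_share jvm1=1 jvm2=2` prints `jvm1 33%` and `jvm2 66%`: 10.0.30.151 gets about two requests for every one sent to 10.0.30.67. The shares apply only to new sessions, because sticky sessions keep an existing session on its node.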

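Check which modules are compiled into Apache:

```
[root@cloud_test tomcat-connectors-1.2.32-src]# /usr/sbin/apachectl -l
Compiled in modules:
  core.c
  prefork.c
  http_core.c
  mod_so.c
```

Only statically compiled modules are listed here. mod_so lets httpd load mod_proxy, mod_proxy_http and mod_proxy_balancer as shared objects through LoadModule lines in httpd.conf.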
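`httpd -M` lists the static and shared modules that are actually loaded. It prints lines such as ` proxy_module (shared)`. The sketch below reads that output and reports which modules this setup relies on are absent. `missing_modules` is a hypothetical helper, and the module list is an assumption drawn from the directives used above.

```shell
#!/usr/bin/env bash
# missing_modules  (hypothetical helper)
# Reads `httpd -M` output on stdin and prints each required module that is not loaded;
# prints nothing when everything is present.
missing_modules() {
    local loaded m
    loaded=$(cat)
    for m in proxy_module proxy_http_module proxy_balancer_module status_module; do
        printf '%s\n' "$loaded" | grep -Eq "^[[:space:]]*$m[[:space:]]" || echo "$m"
    done
}
```

Usage: `httpd -M 2>&1 | missing_modules`.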

Restart Apache: service httpd restart
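Once jvmRoute is set, Tomcat appends it to each session ID (for example `...C1.jvm1`), and the balancer uses that suffix to pick the BalancerMember with the matching `route=`. The sketch below checks stickiness from the command line. It assumes curl is installed and that the page at `/` creates a session, which JSPs do by default. `route_of` and `probe_route` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# route_of SESSION_ID: print the jvmRoute suffix of a Tomcat session ID,
# e.g. 5F3A9C0D.jvm1 -> jvm1; prints nothing when the ID has no suffix.
route_of() {
    case "$1" in
        *.*) echo "${1##*.}" ;;
    esac
}

# probe_route URL JAR  (hypothetical helper; needs curl)
# Requests URL while reusing the cookies in JAR, then prints the route of the
# JSESSIONID cookie stored there (Netscape cookie file: field 6 = name, 7 = value).
probe_route() {
    local url=$1 jar=$2 sid
    touch "$jar"
    curl -s -b "$jar" -c "$jar" -o /dev/null "$url"
    sid=$(awk '$6 == "JSESSIONID" { print $7 }' "$jar")
    route_of "$sid"
}
```

Run `probe_route http://192.168.14.30/ /tmp/cookies.txt` several times. If sticky sessions work, every call prints the same route (jvm1 or jvm2). Deleting /tmp/cookies.txt starts a new session, which may land on the other node.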