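Lucene implements near-real-time (NRT) search through the NRTManager class. Near-real-time search means that when the index changes, a background thread tracks the change and makes it visible to the application's searches within a short time.

NRTManager manages an IndexWriter and exposes its write methods (add, delete, update), such as addDocument and deleteDocuments, to the caller. These changes are buffered in memory and are not committed. However many of these operations you perform, the committed index on disk does not change until you call IndexWriter's commit method. So remember to commit after updating the index. Only then are the changes written to disk and the on-disk index brought in sync.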
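To make the difference between "visible to searches" and "committed to disk" concrete, here is a toy model in plain Java. `ToyWriter`, `openCommittedView` and `openNrtView` are hypothetical names invented for illustration, not Lucene APIs. Buffered documents show up in the near-real-time view immediately but reach the committed view only after `commit()`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical toy model, NOT Lucene's API: pending changes stay in memory
// until commit() moves them into the "on-disk" committed list.
class ToyWriter {
    private final List<String> committed = new ArrayList<>(); // stands in for the committed on-disk index
    private final List<String> pending = new ArrayList<>();   // stands in for the writer's in-memory buffer

    synchronized void addDocument(String doc) {
        pending.add(doc);
    }

    // Like a reader opened on the Directory: sees committed documents only.
    synchronized List<String> openCommittedView() {
        return new ArrayList<>(committed);
    }

    // Like a near-real-time reader opened from the writer: sees committed + pending documents.
    synchronized List<String> openNrtView() {
        List<String> all = new ArrayList<>(committed);
        all.addAll(pending);
        return all;
    }

    // Like IndexWriter.commit(): makes the pending changes durable.
    synchronized void commit() {
        committed.addAll(pending);
        pending.clear();
    }
}

class ToyWriterDemo {
    public static void main(String[] args) {
        ToyWriter w = new ToyWriter();
        w.addDocument("doc-1");
        // prints "committed=0 nrt=1"
        System.out.println("committed=" + w.openCommittedView().size() + " nrt=" + w.openNrtView().size());
        w.commit();
        // prints "committed=1 nrt=1"
        System.out.println("committed=" + w.openCommittedView().size() + " nrt=" + w.openNrtView().size());
    }
}
```

The model deliberately ignores segment flushing: in real Lucene some buffered data may already be written to files before a commit, but no new commit point exists until `commit()` runs.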

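There are two ways for the application to obtain an IndexSearcher over the latest index.

The first uses an NRTManagerReopenThread. This thread tracks changes to the in-memory index and periodically calls maybeReopen, so that a new IndexSearcher is opened on the latest generation of the index. The application obtains that IndexSearcher by calling NRTManager's getSearcherManager method to get a SearcherManager and then acquiring an IndexSearcher from the SearcherManager. When it has finished with the searcher, it calls the SearcherManager's release method to give it back. Finally, remember to close the NRTManagerReopenThread.

The second way does not use an NRTManagerReopenThread. Instead, the application calls NRTManager's maybeReopen method itself to bring the searcher up to date before acquiring the latest IndexSearcher.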
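The acquire/use/release cycle can be sketched without Lucene. Below, `Snapshot` stands in for an IndexSearcher and `SnapshotManager` for SearcherManager; both names, and the integer "version" used to detect changes, are hypothetical. The point is the reference count: `maybeReopen` swaps in a new snapshot, but the old one is only closed when its last user calls `release`.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntSupplier;

// Hypothetical stand-in for an IndexSearcher, with a reference count (not Lucene code).
final class Snapshot {
    final int version;
    private final AtomicInteger refCount = new AtomicInteger(1); // 1 = the manager's own reference
    private volatile boolean closed = false;

    Snapshot(int version) { this.version = version; }

    // Takes a reference unless the snapshot has already been closed.
    boolean tryIncRef() {
        while (true) {
            int c = refCount.get();
            if (c <= 0) return false;
            if (refCount.compareAndSet(c, c + 1)) return true;
        }
    }

    // The last reference to go away "closes" the snapshot (frees its resources).
    void decRef() {
        if (refCount.decrementAndGet() == 0) closed = true;
    }

    boolean isClosed() { return closed; }
}

// Hypothetical stand-in for SearcherManager's acquire/release/maybeReopen cycle.
final class SnapshotManager {
    private final IntSupplier latestVersion; // e.g. "generation of the newest index state"
    private volatile Snapshot current;

    SnapshotManager(IntSupplier latestVersion) {
        this.latestVersion = latestVersion;
        this.current = new Snapshot(latestVersion.getAsInt());
    }

    Snapshot acquire() {
        while (true) {
            Snapshot s = current;
            if (s.tryIncRef()) return s; // retry if s was closed by a concurrent reopen
        }
    }

    void release(Snapshot s) { s.decRef(); }

    // Opens a new snapshot only if the index changed; returns true if it did.
    synchronized boolean maybeReopen() {
        int v = latestVersion.getAsInt();
        if (v == current.version) return false;
        Snapshot old = current;
        current = new Snapshot(v);
        old.decRef(); // drop the manager's reference; in-flight users keep the old one alive
        return true;
    }
}
```

This is why the listings release a borrowed searcher in a `finally` block: every `acquire()` must be paired with exactly one `release()`, otherwise old snapshots are never freed.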

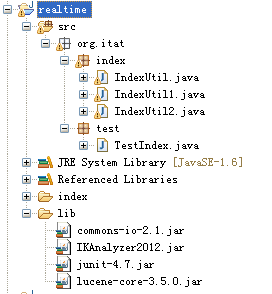

1、工程目录

2. Searching with SearcherManager only, without NRTManager

```java
package org.itat.index;

import java.io.File;
import java.io.IOException;
import java.util.concurrent.Executors;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.NumericField;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.SearcherManager;
import org.apache.lucene.search.SearcherWarmer;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;

/**
 * Uses SearcherManager only, without NRTManager.
 *
 * SearcherManager.maybeReopen() checks automatically whether the searcher needs to be reopened.
 * For example, run search02() several times and delete a document in between: the delete must be
 * committed by the writer before it takes effect in the on-disk index, after which maybeReopen()
 * detects the change and the next search reflects it.
 *
 * However, SearcherManager alone cannot give real-time search. Why?
 * It only detects changes after writer.commit(), and commit is very expensive, so we cannot commit
 * often. Instead, adds, updates and deletes should take effect in memory, searches should use that
 * in-memory index state, and writer.commit() should run only after enough changes have accumulated.
 */
public class IndexUtil1 {
    private String[] ids = {"1", "2", "3", "4", "5", "6"};
    private String[] emails = {"aa@itat.org", "bb@itat.org", "cc@cc.org", "dd@sina.org", "ee@zttc.edu", "ff@itat.org"};
    private String[] contents = {
            "welcome to visited the space,I like book",
            "hello boy, I like pingpeng ball",
            "my name is cc I like game",
            "I like football",
            "I like football and I like basketball too",
            "I like movie and swim"
    };
    private int[] attachs = {2, 3, 1, 4, 5, 5};
    private String[] names = {"zhangsan", "lisi", "john", "jetty", "mike", "jake"};
    private Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_35);
    private SearcherManager mgr = null; // thread-safe
    private Directory directory = null;

    public IndexUtil1() {
        try {
            directory = FSDirectory.open(new File("D:\\Workspaces\\realtime\\index"));
            // Opening a SearcherManager on a Directory requires an index to exist there already
            mgr = new SearcherManager(
                    directory,
                    new SearcherWarmer() {
                        /**
                         * Called whenever a new searcher is obtained after the index changes,
                         * i.e. during maybeReopen(); a place to warm caches or manage resources.
                         */
                        @Override
                        public void warm(IndexSearcher search) throws IOException {
                            System.out.println("has change");
                        }
                    },
                    Executors.newCachedThreadPool());
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Deletes a document. It is not physically removed right away; it is only marked as
     * deleted (a kind of "recycle bin") until its segment is merged.
     */
    public void delete(String id) {
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory,
                    new IndexWriterConfig(Version.LUCENE_35, analyzer));
            // The argument can be a Query or a Term; a Term matches an exact value.
            // The document is only marked as deleted and can still be recovered before a merge.
            // Afterwards a deletions file such as _0_1.del appears in the index directory.
            writer.deleteDocuments(new Term("id", id));
            // SearcherManager only sees committed changes, so commit here
            writer.commit();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (writer != null) writer.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public void query() {
        try {
            IndexReader reader = IndexReader.open(directory);
            // The reader reports document counts cheaply
            System.out.println("numDocs:" + reader.numDocs());    // live documents, excluding deleted ones
            System.out.println("maxDocs:" + reader.maxDoc());     // all documents, including deleted ones
            System.out.println("deleteDocs:" + reader.numDeletedDocs());
            reader.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Index file extensions (Lucene 3.x format):
     * .fnm       field names and field info
     * .fdt/.fdx  stored field values (Store.YES) and their index
     * .frq       which documents each term occurs in, and how often
     * .nrm       norms (length normalization used in scoring)
     * .prx       term positions
     * .tii/.tis  the term dictionary (term index and term infos)
     */
    public void index() {
        IndexWriter writer = null;
        try {
            // Since Lucene 2.9 a Version constant is passed so behavior stays compatible across releases
            writer = new IndexWriter(directory, new IndexWriterConfig(Version.LUCENE_35, analyzer));
            writer.deleteAll(); // clear the index
            for (int i = 0; i < ids.length; i++) {
                Document doc = new Document();
                doc.add(new Field("id", ids[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                doc.add(new Field("email", emails[i], Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("email", "test" + i + "@test.com", Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("content", contents[i], Field.Store.NO, Field.Index.ANALYZED));
                doc.add(new Field("name", names[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                // Store a number; NumberTools.stringToLong("") is deprecated, NumericField replaces it
                doc.add(new NumericField("attach", Field.Store.YES, true).setIntValue(attachs[i]));
                writer.addDocument(doc);
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (writer != null) writer.close(); // close() also commits
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public void search02() {
        IndexSearcher searcher = mgr.acquire(); // borrow a searcher
        try {
            /*
             * maybeReopen() checks automatically whether the index has changed.
             * E.g. run search02() several times and delete a document in between:
             * once the delete is committed, maybeReopen() detects that the on-disk index
             * changed, and the deleted document disappears from the next search
             * (this call still uses the searcher acquired above).
             */
            mgr.maybeReopen();
            TermQuery query = new TermQuery(new Term("content", "like"));
            TopDocs tds = searcher.search(query, 10);
            for (ScoreDoc sd : tds.scoreDocs) {
                Document doc = searcher.doc(sd.doc);
                System.out.println(doc.get("id") + "---->" +
                        doc.get("name") + "[" + doc.get("email") + "]-->" + doc.get("id") + "," +
                        doc.get("attach") + "," + doc.getValues("email")[1]);
            }
            // Do not close a searcher obtained from SearcherManager; release it instead
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                mgr.release(searcher); // give the searcher back
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
}
```
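The numDocs/maxDocs/deleteDocs lines printed by query() follow from how deletes are stored. The toy model below is a hypothetical sketch, not Lucene code: it assumes, as in the Lucene 3.x format the comments describe, that a segment keeps deleted documents in place and only marks them in a bit vector (the `_N_M.del` file) until a merge drops them. Lucene 3.x exposes the "restore from the recycle bin" step as `IndexReader.undeleteAll()`.

```java
import java.util.BitSet;

// Hypothetical toy segment (not Lucene code): deleted documents keep their slot
// and are only flagged in a bit vector, the in-memory analogue of a .del file.
final class ToySegment {
    private final int maxDoc;                    // every slot ever written, deleted or not
    private final BitSet deleted = new BitSet(); // bit i set = document i is deleted

    ToySegment(int maxDoc) { this.maxDoc = maxDoc; }

    void delete(int docId) {
        if (docId < 0 || docId >= maxDoc) throw new IllegalArgumentException("no such doc: " + docId);
        deleted.set(docId);
    }

    // Before a merge the flags can simply be cleared ("restore from the recycle bin").
    void undeleteAll() { deleted.clear(); }

    int maxDoc() { return maxDoc; }
    int numDeletedDocs() { return deleted.cardinality(); }
    int numDocs() { return maxDoc - numDeletedDocs(); }

    // A merge copies only live documents, so deleted ones are gone for good afterwards.
    ToySegment merge() { return new ToySegment(numDocs()); }
}

class ToySegmentDemo {
    public static void main(String[] args) {
        ToySegment seg = new ToySegment(6); // six documents, as built by index()
        seg.delete(0);                      // like delete("1")
        // prints "numDocs:5 maxDocs:6 deleteDocs:1"
        System.out.println("numDocs:" + seg.numDocs() + " maxDocs:" + seg.maxDoc()
                + " deleteDocs:" + seg.numDeletedDocs());
    }
}
```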

3. Using SearcherManager together with NRTManager

```java
package org.itat.index;

import java.io.File;
import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.NumericField;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.NRTManager;
import org.apache.lucene.search.NRTManagerReopenThread;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.SearcherManager;
import org.apache.lucene.search.SearcherWarmer;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;

/**
 * Uses SearcherManager together with NRTManager.
 *
 * SearcherManager.maybeReopen() checks automatically whether the searcher needs to be reopened.
 * For example, run search02() several times and delete a document in between: the delete must be
 * committed by the writer before it takes effect in the on-disk index, after which maybeReopen()
 * detects the change and the next search reflects it.
 *
 * However, SearcherManager alone cannot give real-time search. Why?
 * It only detects changes after writer.commit(), and commit is very expensive, so we cannot commit
 * often. Instead, adds, updates and deletes should take effect in memory, searches should use that
 * in-memory index state, and writer.commit() should run only after enough changes have accumulated.
 * NRTManager provides exactly this: updates are kept in memory yet are still visible to searches,
 * and only writer.commit() writes them to the index on disk.
 */
public class IndexUtil2 {
    private String[] ids = {"1", "2", "3", "4", "5", "6"};
    private String[] emails = {"aa@itat.org", "bb@itat.org", "cc@cc.org", "dd@sina.org", "ee@zttc.edu", "ff@itat.org"};
    private String[] contents = {
            "welcome to visited the space,I like book",
            "hello boy, I like pingpeng ball",
            "my name is cc I like game",
            "I like football",
            "I like football and I like basketball too",
            "I like movie and swim"
    };
    private int[] attachs = {2, 3, 1, 4, 5, 5};
    private String[] names = {"zhangsan", "lisi", "john", "jetty", "mike", "jake"};
    private Analyzer analyzer = new StandardAnalyzer(Version.LUCENE_35);
    private SearcherManager mgr = null; // thread-safe
    private NRTManager nrtMgr = null; // near-real-time search manager
    private NRTManagerReopenThread reopen = null;
    private Directory directory = null;
    private IndexWriter writer = null; // the single writer shared by all operations

    public IndexUtil2() {
        try {
            directory = FSDirectory.open(new File("D:\\Workspaces\\realtime\\index"));
            writer = new IndexWriter(directory, new IndexWriterConfig(Version.LUCENE_35, analyzer));
            nrtMgr = new NRTManager(writer,
                    new SearcherWarmer() {
                        /**
                         * Called whenever a new searcher is opened after the index changes,
                         * i.e. during a reopen; a place to warm caches or manage resources.
                         */
                        @Override
                        public void warm(IndexSearcher search) throws IOException {
                            System.out.println("has open");
                        }
                    });
            // Start the NRTManager reopen thread. It reopens the searcher (not the writer):
            // targetMaxStaleSec = 5.0 means a reopen happens at least every 5 seconds;
            // targetMinStaleSec = 0.025 means that while a caller waits for a specific change,
            // reopens may happen as often as every 25 ms.
            reopen = new NRTManagerReopenThread(nrtMgr, 5.0, 0.025);
            reopen.setDaemon(true); // background thread
            reopen.setName("NrtManager Reopen Thread");
            reopen.start();
            mgr = nrtMgr.getSearcherManager(true); // true = reopened searchers apply all deletes
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Deletes a document. It is only marked as deleted until its segment is merged.
     */
    public void delete(String id) {
        try {
            // Delete through nrtMgr: the next reopened searcher sees the delete without a commit
            nrtMgr.deleteDocuments(new Term("id", id));
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Writes pending changes to disk; call this periodically, not after every update. */
    public void commit() {
        try {
            writer.commit();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Stops the reopen thread, then closes the NRTManager and the writer. */
    public void close() {
        try {
            reopen.close();
            nrtMgr.close();
            writer.close(); // close() also commits
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void query() {
        try {
            // A reader opened on the Directory only reflects committed changes
            IndexReader reader = IndexReader.open(directory);
            System.out.println("numDocs:" + reader.numDocs());    // live documents, excluding deleted ones
            System.out.println("maxDocs:" + reader.maxDoc());     // all documents, including deleted ones
            System.out.println("deleteDocs:" + reader.numDeletedDocs());
            reader.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Index file extensions (Lucene 3.x format):
     * .fnm       field names and field info
     * .fdt/.fdx  stored field values (Store.YES) and their index
     * .frq       which documents each term occurs in, and how often
     * .nrm       norms (length normalization used in scoring)
     * .prx       term positions
     * .tii/.tis  the term dictionary (term index and term infos)
     */
    public void index() {
        try {
            // Reuse the shared writer: a second IndexWriter on the same directory would fail with
            // LockObtainFailedException, because this.writer already holds the write lock
            writer.deleteAll(); // clear the index
            for (int i = 0; i < ids.length; i++) {
                Document doc = new Document();
                doc.add(new Field("id", ids[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                doc.add(new Field("email", emails[i], Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("email", "test" + i + "@test.com", Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("content", contents[i], Field.Store.NO, Field.Index.ANALYZED));
                doc.add(new Field("name", names[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                // Store a number; NumberTools.stringToLong("") is deprecated, NumericField replaces it
                doc.add(new NumericField("attach", Field.Store.YES, true).setIntValue(attachs[i]));
                nrtMgr.addDocument(doc); // add through nrtMgr so the change is tracked for reopening
            }
            writer.commit(); // persist the freshly built index
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    public void search02() {
        IndexSearcher searcher = mgr.acquire(); // borrow a searcher
        try {
            // No maybeReopen() call here: the reopen thread keeps the searcher current,
            // including uncommitted changes made through nrtMgr
            TermQuery query = new TermQuery(new Term("content", "like"));
            TopDocs tds = searcher.search(query, 10);
            for (ScoreDoc sd : tds.scoreDocs) {
                Document doc = searcher.doc(sd.doc);
                System.out.println(doc.get("id") + "---->" +
                        doc.get("name") + "[" + doc.get("email") + "]-->" + doc.get("id") + "," +
                        doc.get("attach") + "," + doc.getValues("email")[1]);
            }
            // Do not close a searcher obtained from SearcherManager; release it instead
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                mgr.release(searcher); // give the searcher back
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
}
```
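The two numbers passed to NRTManagerReopenThread are easier to read with a model of generations. The sketch below is hypothetical and greatly simplified; it is not NRTManager's source, and `GenerationTracker`, `ToyReopenThread` and their methods are invented names. Every update is stamped with the current indexing generation, a reopen makes everything up to that generation searchable, and the reopen thread normally waits maxStale between reopens but drops to minStale while some caller is blocked waiting for a generation.

```java
// Hypothetical model of NRT generation tracking (not Lucene's implementation).
final class GenerationTracker {
    private long indexingGen = 1;  // generation that new updates belong to
    private long searchingGen = 0; // newest generation visible to searches
    private int waiters = 0;

    // Record an update; it becomes searchable once searchingGen >= the returned value.
    synchronized long update() { return indexingGen; }

    // A reopen publishes everything recorded so far and starts a new generation.
    synchronized void reopen() {
        searchingGen = indexingGen++;
        notifyAll(); // wake threads blocked in waitForGeneration
    }

    synchronized boolean hasWaiters() { return waiters > 0; }

    synchronized long searchingGen() { return searchingGen; }

    // Block until the given generation is searchable.
    synchronized void waitForGeneration(long gen) throws InterruptedException {
        waiters++;
        notifyAll(); // tell the reopen thread to switch to the short interval
        try {
            while (searchingGen < gen) wait();
        } finally {
            waiters--;
        }
    }
}

// Hypothetical reopen loop: waits maxStale between reopens, minStale while someone waits.
final class ToyReopenThread extends Thread {
    private final GenerationTracker tracker;
    private final long maxStaleNanos;
    private final long minStaleNanos;
    private volatile boolean finished = false;

    ToyReopenThread(GenerationTracker tracker, double maxStaleSec, double minStaleSec) {
        this.tracker = tracker;
        this.maxStaleNanos = (long) (maxStaleSec * 1_000_000_000L);
        this.minStaleNanos = (long) (minStaleSec * 1_000_000_000L);
        setDaemon(true);
    }

    @Override
    public void run() {
        long lastReopen = System.nanoTime();
        try {
            while (!finished) {
                synchronized (tracker) {
                    while (!finished) {
                        long target = tracker.hasWaiters() ? minStaleNanos : maxStaleNanos;
                        long remaining = lastReopen + target - System.nanoTime();
                        if (remaining <= 0) break;
                        // woken early when a caller starts waiting for a generation
                        tracker.wait(Math.max(1L, remaining / 1_000_000L));
                    }
                }
                if (finished) break;
                tracker.reopen();
                lastReopen = System.nanoTime();
            }
        } catch (InterruptedException e) {
            // close() interrupts the thread to stop it
        }
    }

    void close() {
        finished = true;
        interrupt();
    }
}
```

With the listing's values (5.0, 0.025), an update nobody waits for becomes searchable within about 5 seconds in this model, while a caller that blocks on its generation is served after roughly 25 ms.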

4. Using neither SearcherManager nor NRTManager

```java
package org.itat.index;

import java.io.File;
import java.io.IOException;

import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.NumericField;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;

/**
 * Uses neither SearcherManager nor NRTManager.
 * @author user
 */
public class IndexUtil {
    private String[] ids = {"1", "2", "3", "4", "5", "6"};
    private String[] emails = {"aa@itat.org", "bb@itat.org", "cc@cc.org", "dd@sina.org", "ee@zttc.edu", "ff@itat.org"};
    private String[] contents = {
            "welcome to visited the space,I like book",
            "hello boy, I like pingpeng ball",
            "my name is cc I like game",
            "I like football",
            "I like football and I like basketball too",
            "I like movie and swim"
    };
    private int[] attachs = {2, 3, 1, 4, 5, 5};
    private String[] names = {"zhangsan", "lisi", "john", "jetty", "mike", "jake"};
    private Directory directory = null;
    private static IndexReader reader = null; // one shared reader for the whole program

    public IndexUtil() {
        try {
            directory = FSDirectory.open(new File("D:\\Workspaces\\realtime\\index"));
            // directory = new RAMDirectory();
            // index();
            reader = IndexReader.open(directory, false);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Opening an IndexReader with IndexReader.open is expensive, so a program usually opens a single
     * IndexReader for its whole lifetime and creates IndexSearchers from it. With such a singleton:
     * 1. Changes made through an IndexWriter are not picked up automatically, so
     *    IndexReader.openIfChanged must be used to refresh the reader.
     * If the IndexWriter stays open after it is created, its changes become visible only after commit().
     * @return a searcher over the latest committed index, or null if opening failed
     */
    public IndexSearcher getSearcher() {
        try {
            if (reader == null) {
                reader = IndexReader.open(directory, false);
            } else {
                IndexReader tr = IndexReader.openIfChanged(reader);
                // openIfChanged returns null if the index has not changed,
                // otherwise a new reader over the updated index
                if (tr != null) {
                    // Caution: another thread may still be searching the old reader
                    reader.close();
                    reader = tr;
                }
            }
            return new IndexSearcher(reader);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return null;
    }

    /**
     * Deletes a document. It is not physically removed right away; it is only marked as
     * deleted (a kind of "recycle bin") until its segment is merged.
     */
    public void delete() {
        IndexWriter writer = null;
        try {
            writer = new IndexWriter(directory,
                    new IndexWriterConfig(Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            // The argument can be a Query or a Term; a Term matches an exact value.
            // The document is only marked as deleted and can still be recovered before a merge.
            // Afterwards a deletions file such as _0_1.del appears in the index directory.
            writer.deleteDocuments(new Term("id", "1"));
            writer.commit();
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (writer != null) writer.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public void query() {
        try {
            IndexReader reader = IndexReader.open(directory);
            // The reader reports document counts cheaply
            System.out.println("numDocs:" + reader.numDocs());    // live documents, excluding deleted ones
            System.out.println("maxDocs:" + reader.maxDoc());     // all documents, including deleted ones
            System.out.println("deleteDocs:" + reader.numDeletedDocs());
            reader.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Index file extensions (Lucene 3.x format):
     * .fnm       field names and field info
     * .fdt/.fdx  stored field values (Store.YES) and their index
     * .frq       which documents each term occurs in, and how often
     * .nrm       norms (length normalization used in scoring)
     * .prx       term positions
     * .tii/.tis  the term dictionary (term index and term infos)
     */
    public void index() {
        IndexWriter writer = null;
        try {
            // Since Lucene 2.9 a Version constant is passed so behavior stays compatible across releases
            writer = new IndexWriter(directory, new IndexWriterConfig(Version.LUCENE_35, new StandardAnalyzer(Version.LUCENE_35)));
            writer.deleteAll(); // clear the index
            for (int i = 0; i < ids.length; i++) {
                Document doc = new Document();
                doc.add(new Field("id", ids[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                doc.add(new Field("email", emails[i], Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("email", "test" + i + "@test.com", Field.Store.YES, Field.Index.NOT_ANALYZED));
                doc.add(new Field("content", contents[i], Field.Store.NO, Field.Index.ANALYZED));
                doc.add(new Field("name", names[i], Field.Store.YES, Field.Index.NOT_ANALYZED_NO_NORMS));
                // Store a number; NumberTools.stringToLong("") is deprecated, NumericField replaces it
                doc.add(new NumericField("attach", Field.Store.YES, true).setIntValue(attachs[i]));
                writer.addDocument(doc);
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            try {
                if (writer != null) writer.close(); // close() also commits
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    public void search02() {
        IndexSearcher searcher = getSearcher();
        if (searcher == null) return; // opening the reader failed
        try {
            TermQuery query = new TermQuery(new Term("content", "like"));
            TopDocs tds = searcher.search(query, 10);
            for (ScoreDoc sd : tds.scoreDocs) {
                Document doc = searcher.doc(sd.doc);
                System.out.println(doc.get("id") + "---->" +
                        doc.get("name") + "[" + doc.get("email") + "]-->" + doc.get("id") + "," +
                        doc.get("attach") + "," + doc.getValues("email")[1]);
            }
            searcher.close(); // closes only the searcher; the shared reader stays open
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```
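getSearcher() above closes the old reader as soon as openIfChanged returns a newer one. The plain-Java model below shows the consequence; `ToyReader` and `SharedReaderHolder` are hypothetical names, and only the null-if-unchanged contract is borrowed from IndexReader.openIfChanged. A caller that obtained the old reader earlier is left holding a closed one. That is harmless in a single-threaded demo, but under concurrent searches it is the situation SearcherManager's acquire/release reference counting is designed to avoid.

```java
// Hypothetical stand-in for an IndexReader (not Lucene code).
final class ToyReader {
    final long version;
    private boolean closed = false;

    ToyReader(long version) { this.version = version; }

    // Mirrors the openIfChanged contract: null if nothing changed, else a new reader.
    ToyReader openIfChanged(long latestVersion) {
        return latestVersion == version ? null : new ToyReader(latestVersion);
    }

    void close() { closed = true; }
    boolean isClosed() { return closed; }
}

// Hypothetical model of IndexUtil's single shared reader.
final class SharedReaderHolder {
    private ToyReader reader;

    SharedReaderHolder(long initialVersion) { reader = new ToyReader(initialVersion); }

    synchronized ToyReader get(long latestVersion) {
        ToyReader newer = reader.openIfChanged(latestVersion);
        if (newer != null) {
            reader.close(); // closed even if another caller is still searching it
            reader = newer;
        }
        return reader;
    }
}

class SharedReaderDemo {
    public static void main(String[] args) {
        SharedReaderHolder holder = new SharedReaderHolder(1);
        ToyReader inUse = holder.get(1); // a search starts on version 1
        holder.get(2);                   // the index changes and another caller refreshes
        // prints "old reader closed while in use: true"
        System.out.println("old reader closed while in use: " + inUse.isClosed());
    }
}
```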

Project download: http://download.csdn.net/detail/wxwzy738/5332972

http://my.oschina.net/tianshibuzuoai/blog/65271

http://blog.csdn.net/wxwzy738/article/details/8886920

SearcherManager plays a role similar to a JDBC connection pool: acquire a searcher, use it, then release it.