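Hadoop 2.3 configuration files

Hadoop cluster configuration (the most comprehensive summary): http://blog.csdn.net/hguisu/article/details/7237395

Download CDH Hadoop: http://archive-primary.cloudera.com/cdh5/cdh/5/

The configuration process in detail

The Apache Hadoop 2.3.0 package that you download from the official site ships native libraries compiled on a 32-bit operating system, and these do not work with a 64-bit JDK. The Hadoop build I deploy below was recompiled on my own 64-bit machine. Production servers are all 64-bit, so this setup tries to mirror a real environment as closely as possible. If you only want to practise, a 32-bit operating system is fine.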

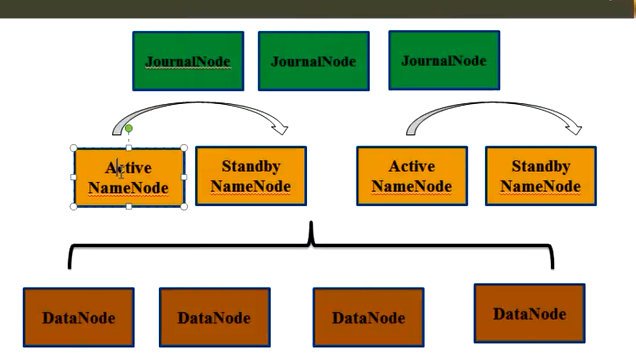

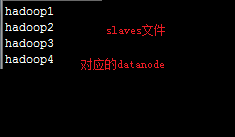

Here we use 4 machines for the demonstration. The role of each machine is shown in the table below.

| | hadoop1 | hadoop2 | hadoop3 | hadoop4 |
| --- | --- | --- | --- | --- |
| NameNode? | Yes, in cluster c1 | Yes, in cluster c1 | Yes, in cluster c2 | Yes, in cluster c2 |
| DataNode? | Yes | Yes | Yes | Yes |
| JournalNode? | Yes | Yes | Yes | No |
| ZooKeeper? | Yes | Yes | Yes | No |
| ZKFC? | Yes | Yes | Yes | Yes |
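The role table above can also be written down as data, which makes it easy to derive the host lists that the configuration files need. This is a minimal Python sketch, not part of the original setup: the dictionary layout and the helper name `hosts_with` are illustrative assumptions, and the ports 2181 and 8485 are the ones that appear in the configuration values later in this article.

```python
# Role assignment copied from the table (a role is listed if the node runs it).
ROLES = {
    "hadoop1": {"NameNode", "DataNode", "JournalNode", "ZooKeeper", "ZKFC"},
    "hadoop2": {"NameNode", "DataNode", "JournalNode", "ZooKeeper", "ZKFC"},
    "hadoop3": {"NameNode", "DataNode", "JournalNode", "ZooKeeper", "ZKFC"},
    "hadoop4": {"NameNode", "DataNode", "ZKFC"},
}


def hosts_with(role):
    """Return the hosts that run the given role, in table order."""
    return [host for host, roles in ROLES.items() if role in roles]


# Comma-separated list in the style of ha.zookeeper.quorum.
zk_quorum = ",".join(f"{host}:2181" for host in hosts_with("ZooKeeper"))
# Semicolon-separated list in the style of the qjournal:// URI.
journal_hosts = ";".join(f"{host}:8485" for host in hosts_with("JournalNode"))

print(zk_quorum)
print(journal_hosts)
```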

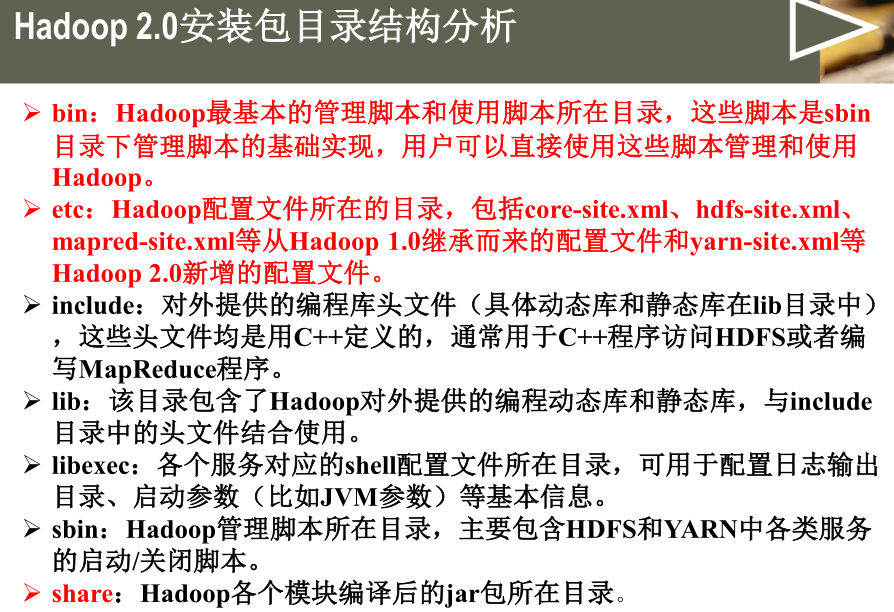

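There are six configuration files in total: hadoop-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml and slaves. Apart from hdfs-site.xml, which differs between the two clusters, the files are identical on all four nodes and can simply be copied.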

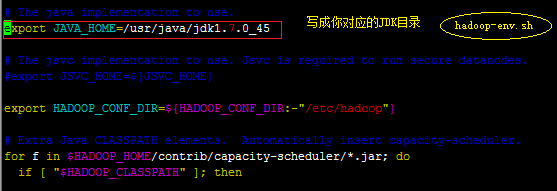

The hadoop-env.sh file

Only one line needs to be changed. After the change it reads:

export JAVA_HOME=/usr/java/jdk1.7.0_45

【JAVA_HOME is the JDK installation path. If yours is different, use your own path.】

The core-site.xml file

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://cluster1</value>

</property>

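【This is the default HDFS path. When several HDFS clusters run side by side and a user does not name a cluster, this setting decides which one is used. The value must be one of the nameservice names defined in hdfs-site.xml.】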

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop1:2181,hadoop2:2181,hadoop3:2181</value>

</property>

【The addresses and ports of the ZooKeeper ensemble. Use an odd number of nodes, and at least three.】

</configuration>
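Why an odd number of ZooKeeper nodes is preferred can be checked with a little arithmetic: an ensemble stays available only while a strict majority of its nodes is up. The following Python sketch illustrates this; the function names are illustrative and not part of any Hadoop or ZooKeeper API.

```python
def majority(n):
    """Smallest number of nodes that forms a strict majority of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """How many nodes may fail while a majority is still alive."""
    return n - majority(n)


# The quorum string used in core-site.xml in this article.
quorum = "hadoop1:2181,hadoop2:2181,hadoop3:2181"
nodes = quorum.split(",")
print(len(nodes), "nodes tolerate", tolerated_failures(len(nodes)), "failure(s)")

# Compare a few ensemble sizes: size, majority, tolerated failures.
for n in range(3, 7):
    print(n, majority(n), tolerated_failures(n))
```

Four nodes tolerate no more failures than three, which is why adding a single node to an odd-sized ensemble usually brings no benefit.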

The hdfs-site.xml file

The version of the file shown here is configured only on hadoop1 and hadoop2.

<configuration>

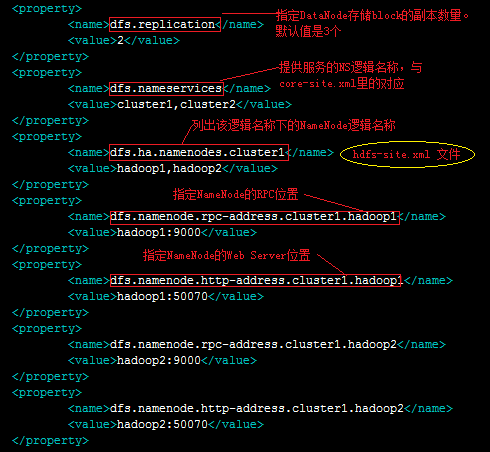

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

【The number of replicas of each block stored on the DataNodes. The default is 3. We have 4 DataNodes, so any value up to 4 will do.】
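As a quick sanity check of the replication setting: the factor cannot usefully exceed the number of DataNodes, and every block consumes that many times its size in raw storage. A minimal Python sketch follows; the function name and the figure of 1000 GB per node are hypothetical and chosen only for illustration.

```python
def usable_capacity(raw_per_node_gb, datanodes, replication):
    """Approximate HDFS capacity after replication, ignoring non-DFS overhead."""
    if not 1 <= replication <= datanodes:
        raise ValueError("replication must be between 1 and the number of DataNodes")
    return raw_per_node_gb * datanodes / replication


# 4 DataNodes and dfs.replication = 2 as in this article; 1000 GB per node is made up.
print(usable_capacity(1000, datanodes=4, replication=2))
```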

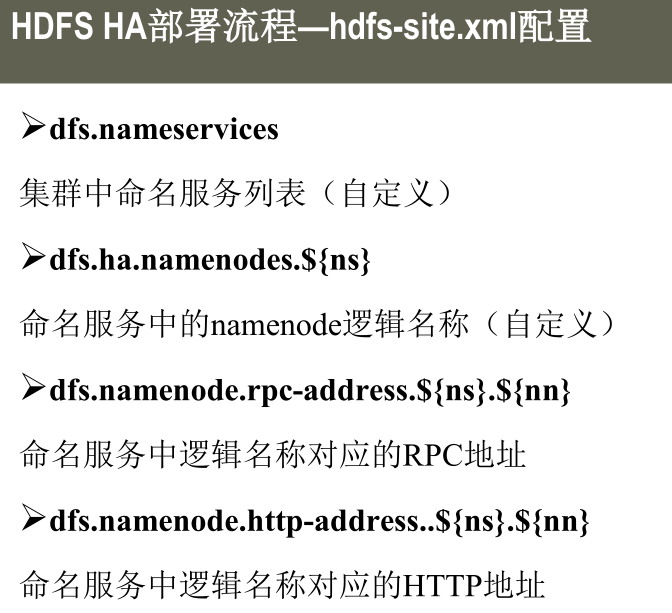

<property>

<name>dfs.nameservices</name>

<value>cluster1,cluster2</value>

</property>

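【Federation is used here with two HDFS clusters. The two nameservices declared here are in effect aliases for those two clusters. The names are arbitrary as long as they are distinct.】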

<property>

<name>dfs.ha.namenodes.cluster1</name>

<value>hadoop1,hadoop2</value>

</property>

【The NameNodes that belong to nameservice cluster1. These values are logical names too: pick anything, as long as the names are distinct.】

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop1</name>

<value>hadoop1:9000</value>

</property>

【The RPC address of hadoop1.】

<property>

<name>dfs.namenode.http-address.cluster1.hadoop1</name>

<value>hadoop1:50070</value>

</property>

【The HTTP address of hadoop1.】

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop2</name>

<value>hadoop2:9000</value>

</property>

【The RPC address of hadoop2.】

<property>

<name>dfs.namenode.http-address.cluster1.hadoop2</name>

<value>hadoop2:50070</value>

</property>

【The HTTP address of hadoop2.】
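The NameNode IDs listed in `dfs.ha.namenodes.<nameservice>` are expected to reappear as the last component of the per-NameNode address keys, so a mismatched suffix leaves a NameNode without an address. The Python sketch below checks this with the standard library only. The embedded XML is a trimmed copy of the cluster1 settings, `load_properties` and `missing_addresses` are hypothetical helper names, and requiring both `rpc-address` and `http-address` is a choice made for this sketch.

```python
import xml.etree.ElementTree as ET

HDFS_SITE = """
<configuration>
  <property><name>dfs.nameservices</name><value>cluster1</value></property>
  <property><name>dfs.ha.namenodes.cluster1</name><value>hadoop1,hadoop2</value></property>
  <property><name>dfs.namenode.rpc-address.cluster1.hadoop1</name><value>hadoop1:9000</value></property>
  <property><name>dfs.namenode.http-address.cluster1.hadoop1</name><value>hadoop1:50070</value></property>
  <property><name>dfs.namenode.rpc-address.cluster1.hadoop2</name><value>hadoop2:9000</value></property>
  <property><name>dfs.namenode.http-address.cluster1.hadoop2</name><value>hadoop2:50070</value></property>
</configuration>
"""


def load_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name").strip(): p.findtext("value").strip()
            for p in root.iter("property")}


def missing_addresses(props):
    """Return the address keys implied by the NameNode IDs but absent from props."""
    missing = []
    for ns in props["dfs.nameservices"].split(","):
        for nn in props[f"dfs.ha.namenodes.{ns}"].split(","):
            for kind in ("rpc-address", "http-address"):
                key = f"dfs.namenode.{kind}.{ns}.{nn}"
                if key not in props:
                    missing.append(key)
    return missing


props = load_properties(HDFS_SITE)
print(missing_addresses(props))
```

An empty list means every declared NameNode ID has both addresses; any key that is printed names exactly the property that still has to be added or renamed.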

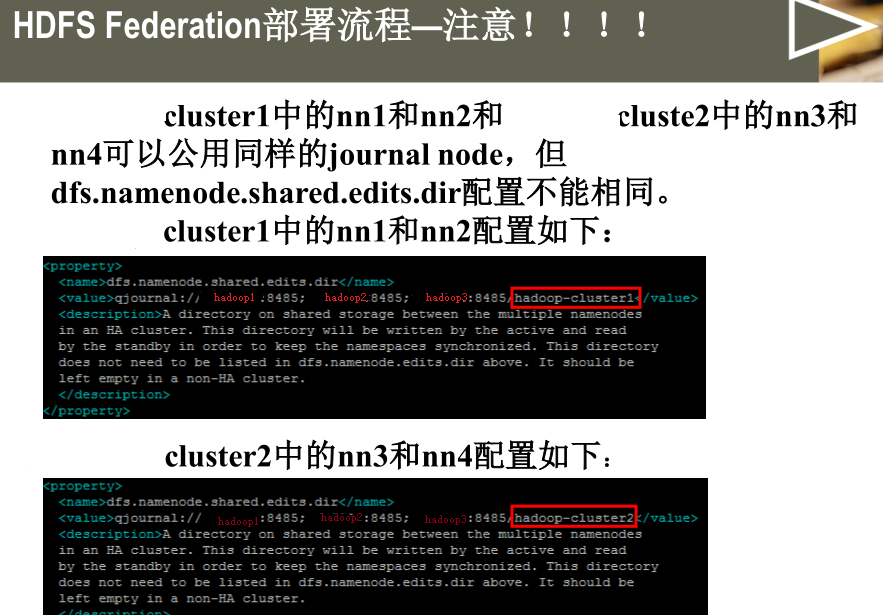

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop1:8485;hadoop2:8485;hadoop3:8485/cluster1</value>

</property>

【The JournalNode cluster through which the two NameNodes of cluster1 share their edits.】
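The `qjournal://` value packs two things into one string: the semicolon-separated JournalNode addresses and, after the final slash, the journal ID (here the nameservice name). A small Python sketch that takes the value apart; `parse_qjournal` is a hypothetical helper, not a Hadoop API.

```python
def parse_qjournal(uri):
    """Split a qjournal URI into ([(host, port), ...], journal_id)."""
    prefix = "qjournal://"
    if not uri.startswith(prefix):
        raise ValueError("not a qjournal URI: " + uri)
    # Everything before the first slash is the node list, the rest is the journal ID.
    authority, _, journal_id = uri[len(prefix):].partition("/")
    nodes = []
    for item in authority.split(";"):
        host, _, port = item.partition(":")
        nodes.append((host, int(port)))
    return nodes, journal_id


nodes, journal_id = parse_qjournal(
    "qjournal://hadoop1:8485;hadoop2:8485;hadoop3:8485/cluster1")
print(nodes)
print(journal_id)
```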

<property>

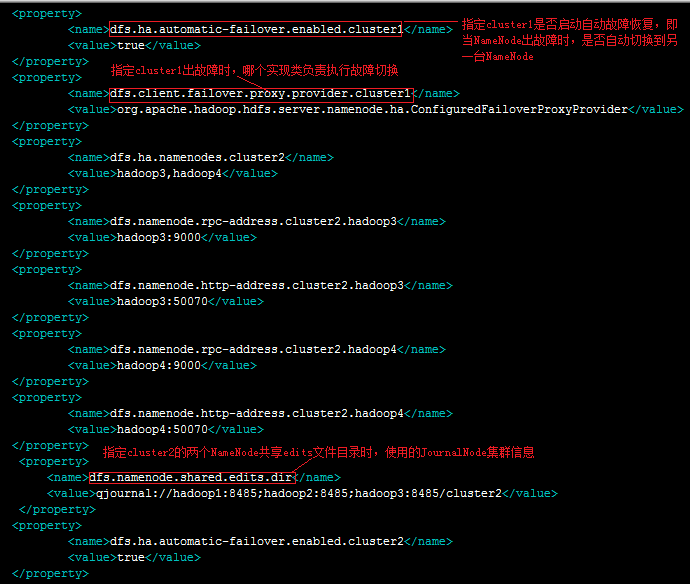

<name>dfs.ha.automatic-failover.enabled.cluster1</name>

<value>true</value>

</property>

【Whether cluster1 uses automatic failover, that is, whether the cluster switches to the other NameNode automatically when the active NameNode fails.】

<property>

<name>dfs.client.failover.proxy.provider.cluster1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

【The implementation class that handles failover for cluster1 when a NameNode fails.】

<property>

<name>dfs.ha.namenodes.cluster2</name>

<value>hadoop3,hadoop4</value>

</property>

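【The two NameNodes of nameservice cluster2. These are logical names and only need to be distinct. The remaining settings closely mirror those of cluster1, so they are not annotated again.】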

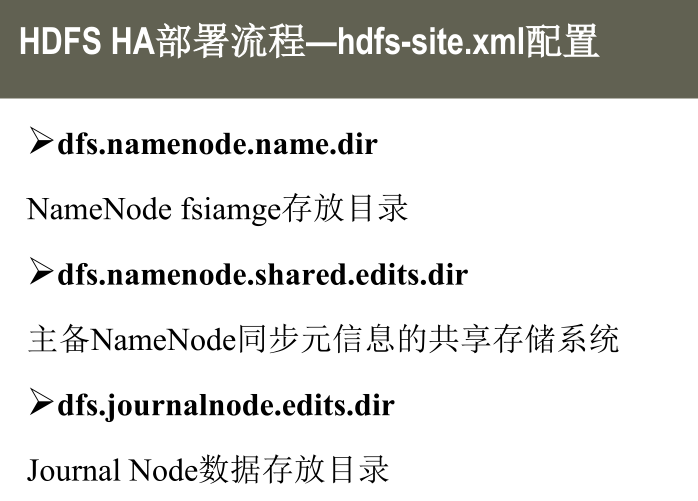

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/tmp/journal</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/grid/hadoop/hdfs/name</value>

</property>

Automatic failover

<property>

<name>dfs.ha.automatic-failover.enabled.cluster2</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/grid/.ssh/id_rsa</value>

</property>

</configuration>

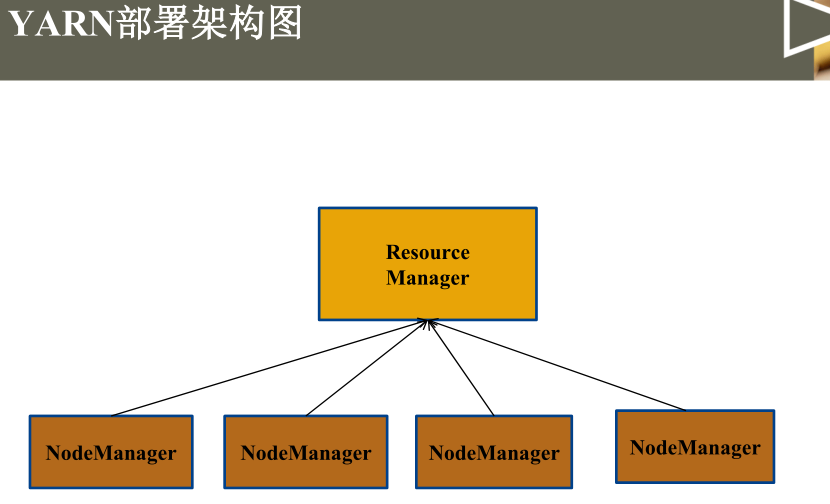

The yarn-site.xml file

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop1</value>

</property>

<property>

<description>The address of the applications manager interface in the RM.</description>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<description>The address of the scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>The https address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<description>The address of the RM admin interface.</description>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<description>fair-scheduler conf location</description>

<name>yarn.scheduler.fair.allocation.file</name>

<value>${yarn.home.dir}/etc/hadoop/fairscheduler.xml</value>

</property>

<property>

<description>List of directories to store localized files in. An application's localized file directory will be found in:

${yarn.nodemanager.local-dirs}/usercache/${user}/appcache/application_${appid}.

Individual containers' work directories, called container_${contid}, will

be subdirectories of this.

</description>

<name>yarn.nodemanager.local-dirs</name>

<value>/home/dongxicheng/hadoop/yarn/local</value>

</property>

<property>

<description>Whether to enable log aggregation</description>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<description>Where to aggregate logs to.</description>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/tmp/logs</value>

</property>

<property>

<description>Amount of physical memory, in MB, that can be allocated for containers.</description>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>30720</value>

</property>

<property>

<description>Number of CPU cores that can be allocated for containers.</description>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>12</value>

</property>

<property>

<description>the valid service name should only contain a-zA-Z0-9_ and can not start with numbers</description>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

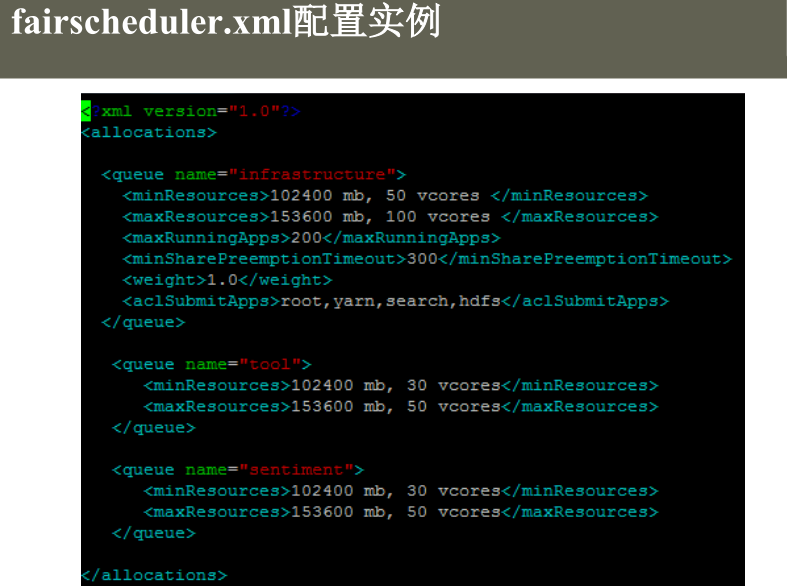
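Values such as `${yarn.resourcemanager.hostname}:8032` in yarn-site.xml rely on Hadoop's variable expansion: a `${name}` reference is replaced by the value of the property with that name. The Python sketch below imitates that substitution for illustration only. It is not Hadoop's implementation, it ignores system properties and other fallbacks, and `expand` is a hypothetical helper name.

```python
import re

VAR = re.compile(r"\$\{([^}]+)\}")


def expand(value, props, depth=20):
    """Replace ${name} references with values from props, up to a bounded depth."""
    for _ in range(depth):
        # Unknown names are left exactly as written.
        expanded = VAR.sub(lambda m: props.get(m.group(1), m.group(0)), value)
        if expanded == value:
            break
        value = expanded
    return value


props = {
    "yarn.resourcemanager.hostname": "hadoop1",
    "yarn.resourcemanager.address": "${yarn.resourcemanager.hostname}:8032",
    "yarn.resourcemanager.webapp.address": "${yarn.resourcemanager.hostname}:8088",
}

for name in ("yarn.resourcemanager.address", "yarn.resourcemanager.webapp.address"):
    print(name, "=", expand(props[name], props))
```

The practical consequence is that moving the ResourceManager to another machine only requires changing `yarn.resourcemanager.hostname`; the address properties that reference it follow along.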

The fairscheduler.xml file

<?xml version="1.0"?>

<allocations>

<queue name="infrastructure">

<minResources>102400 mb, 50 vcores </minResources>

<maxResources>153600 mb, 100 vcores </maxResources>

<maxRunningApps>200</maxRunningApps>

<minSharePreemptionTimeout>300</minSharePreemptionTimeout>

<weight>1.0</weight>

<aclSubmitApps>root,yarn,search,hdfs</aclSubmitApps>

</queue>

<queue name="tool">

<minResources>102400 mb, 30 vcores</minResources>

<maxResources>153600 mb, 50 vcores</maxResources>

</queue>

<queue name="sentiment">

<minResources>102400 mb, 30 vcores</minResources>

<maxResources>153600 mb, 50 vcores</maxResources>

</queue>

</allocations>
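It can be worth comparing the queues' `minResources` with what the NodeManagers actually offer. The Python sketch below sums the minimum shares from the allocation file and compares them with the cluster total. It assumes (the article does not say so explicitly) that all four nodes run a NodeManager with the `yarn.nodemanager.resource.memory-mb` and `yarn.nodemanager.resource.cpu-vcores` values from yarn-site.xml. The helper names are hypothetical, and the embedded XML keeps only the `minResources` elements.

```python
import re
import xml.etree.ElementTree as ET

ALLOCATIONS = """
<allocations>
  <queue name="infrastructure"><minResources>102400 mb, 50 vcores </minResources></queue>
  <queue name="tool"><minResources>102400 mb, 30 vcores</minResources></queue>
  <queue name="sentiment"><minResources>102400 mb, 30 vcores</minResources></queue>
</allocations>
"""

RESOURCE = re.compile(r"\s*(\d+)\s*mb\s*,\s*(\d+)\s*vcores\s*")


def parse_resources(text):
    """Parse 'X mb, Y vcores' into (memory_mb, vcores)."""
    match = RESOURCE.fullmatch(text)
    if match is None:
        raise ValueError("unexpected resource string: " + repr(text))
    return int(match.group(1)), int(match.group(2))


def total_min_resources(xml_text):
    """Sum minResources over all queues in an allocation file."""
    memory = vcores = 0
    for queue in ET.fromstring(xml_text).iter("queue"):
        mb, cores = parse_resources(queue.findtext("minResources"))
        memory += mb
        vcores += cores
    return memory, vcores


NODE_MANAGERS = 4  # assumption: every node in this article runs a NodeManager
cluster = (NODE_MANAGERS * 30720, NODE_MANAGERS * 12)
requested = total_min_resources(ALLOCATIONS)

print("cluster  :", cluster)
print("requested:", requested)
print("fits     :", requested[0] <= cluster[0] and requested[1] <= cluster[1])
```

Under that assumption the minimum shares add up to more than the four nodes provide, so either the queue values or the per-node resources would need adjusting for a cluster of this size.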

The hdfs-site.xml file

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>cluster1,cluster2</value>

</property>

<property>

<name>dfs.ha.namenodes.cluster1</name>

<value>hadoop1,hadoop2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop1</name>

<value>hadoop1:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster1.hadoop1</name>

<value>hadoop1:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop2</name>

<value>hadoop2:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster1.hadoop2</name>

<value>hadoop2:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop1:8485;hadoop2:8485;hadoop3:8485/cluster1</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled.cluster1</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.namenodes.cluster2</name>

<value>hadoop3,hadoop4</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop3</name>

<value>hadoop3:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop3</name>

<value>hadoop3:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop4</name>

<value>hadoop4:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop4</name>

<value>hadoop4:50070</value>

</property>

<!--

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop1:8485;hadoop2:8485;hadoop3:8485/cluster2</value>

</property>

-->

<property>

<name>dfs.ha.automatic-failover.enabled.cluster2</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/tmp/journal</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/grid/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/grid/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/grid/hadoop/hdfs/data</value>

</property>

The core-site.xml file

<property>

<name>fs.defaultFS</name>

<value>hdfs://cluster1</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop1:2181,hadoop2:2181,hadoop3:2181</value>

</property>

This article comes from the “陈生龙” blog. Reproduction is not permitted!