CDH4 Installation and Deployment Series, Part 4: Installing and Deploying Highly Available CDH4

1.1 CDH4 Server Plan

| No. | VM IP | Services |
| --- | --- | --- |
| 1 | 10.255.0.120 | Namenode1, ResourceManager, zkfc |
| 2 | 10.255.0.145 | Namenode2, zkfc |
| 3 | 10.255.0.146 | Journalnode1, datanode1, NodeManager, MapReduce, Zookeeper |
| 4 | 10.255.0.149 | Journalnode2, datanode2, NodeManager, MapReduce, Zookeeper |
| 5 | 10.255.0.150 | Journalnode3, datanode3, NodeManager, MapReduce, Zookeeper |

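Note: Because only a limited number of servers were available, the ResourceManager was installed on the same server as the NameNode. This is not recommended in production. For a production CDH4 server plan, see CDH4 Installation and Deployment Planning.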

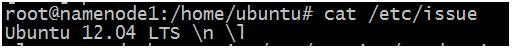

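1.2 Prepare Ubuntu 12.04 on All Cluster Nodes

Install Ubuntu 12.04 on every planned cluster node. The installation itself is not covered here; this step only confirms the operating system version.

Run the following commands on each node to check the OS version: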

# cat /etc/issue

#lsb_release -c

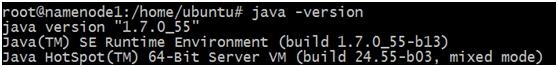
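The codename check can be scripted when many nodes are involved. A minimal sketch, assuming `lsb_release -c` prints a line of the form `Codename:` followed by whitespace and the codename; `is_precise` is a hypothetical helper name, not part of Ubuntu:

```shell
# Hypothetical helper: succeed only if the given `lsb_release -c` output
# names Ubuntu 12.04, whose codename is "precise".
is_precise() {
    # Keep the last whitespace-separated field of the "Codename:" line.
    codename=$(printf '%s\n' "$1" | awk '/^Codename:/ { print $NF }')
    [ "$codename" = "precise" ]
}

# On a real node:
# is_precise "$(lsb_release -c)" && echo "OK: Ubuntu 12.04" || echo "Not Ubuntu 12.04"
```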

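1.3 Prepare the JDK 1.7 Environment on All Cluster Nodes

Install JDK 1.7 on every node in the cluster. The installation process is not described here; this step only confirms the JDK version.

On every node, run the following command to check that JDK 1.7 is installed: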

#java -version

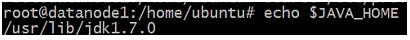
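Because `java -version` writes to stderr, capturing its output in a script needs `2>&1`. The sketch below assumes the first line has the usual `java version "1.7.0_NN"` form; `java_version` and `is_jdk17` are hypothetical helper names:

```shell
# Hypothetical helper: print the quoted version string from `java -version`
# output, e.g. 1.7.0_25 from the line: java version "1.7.0_25"
java_version() {
    printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Hypothetical helper: succeed if the output reports a 1.7.x JVM.
is_jdk17() {
    case "$(java_version "$1")" in
        1.7.*) return 0 ;;
        *) return 1 ;;
    esac
}

# java -version writes to stderr, so redirect it when capturing:
# is_jdk17 "$(java -version 2>&1)" && echo "JDK 1.7 found"
```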

On every node in the cluster, set the JDK environment variables in /etc/profile:

export JAVA_HOME=/usr/lib/jdk1.7.0

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

Test the environment variables:

#source /etc/profile

#echo $JAVA_HOME
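`echo $JAVA_HOME` only shows that the variable is set. A slightly stronger check, sketched below, confirms that `$JAVA_HOME/bin/java` exists and is executable; `check_java_home` is a hypothetical helper, and the path it receives is whatever /etc/profile exported:

```shell
# Hypothetical helper: verify that a JAVA_HOME value points at a usable JDK.
check_java_home() {
    if [ -z "$1" ]; then
        echo "JAVA_HOME is not set"
        return 1
    fi
    if [ ! -x "$1/bin/java" ]; then
        echo "No executable java at $1/bin/java"
        return 1
    fi
    echo "JAVA_HOME OK: $1"
}

# After `source /etc/profile`:
# check_java_home "$JAVA_HOME"
```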

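1.4 Configure Package Sources on All Cluster Nodes

Find the repository for Ubuntu Precise (12.04) on the Cloudera website, as shown in the figure, and add the CDH4 repository to the company's mirror server (IT helped with this step, so it is omitted here).

Cloudera website: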

http://www.cloudera.com/content/cloudera-content/cloudera-docs/CDH4/latest/CDH4-Installation-Guide/CDH4-Installation-Guide.html#../CDH4-Installation-Guide/../CDH4-Installation-Guide/cdh4ig_topic_4_4.html

Configure the CDH4 sources on every node in the cluster:

#vim /etc/apt/sources.list

deb [arch=amd64] http://10.155.0.66/cdh precise-cdh4 contrib

deb [arch=amd64] http://10.155.0.66/cm precise-cm4 contrib

deb http://10.155.0.66/ubuntu/ precise main universe restricted multiverse

deb http://10.155.0.66/ubuntu/ precise-security universe main multiverse restricted

deb http://10.155.0.66/ubuntu/ precise-updates universe main multiverse restricted

deb http://10.155.0.66/ubuntu/ precise-proposed universe main multiverse restricted

deb http://10.155.0.66/ubuntu/ precise-backports universe main multiverse restricted

deb [arch=amd64] http://10.155.0.66/search/ubuntu/precise/amd64/search precise-search1.2.0 contrib

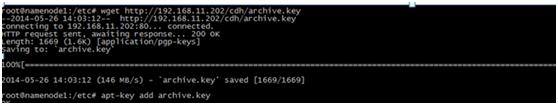
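A missing space, as in `[arch=amd64]http://...`, is easy to overlook in sources.list. The sketch below is a rough syntax check of a single `deb` line, assuming the usual `deb [options] URI suite component...` layout; `check_deb_line` is a hypothetical helper, and `apt-get update` remains the authoritative test:

```shell
# Hypothetical helper: rough syntax check of one sources.list "deb" line,
# expected form: deb [options] URI suite component...
check_deb_line() {
    rest=${1#deb }
    case "$rest" in
        "["*"] "*) rest=${rest#*] } ;;   # drop an options block such as "[arch=amd64] "
        "["*) echo "options block must be followed by a space"; return 1 ;;
    esac
    # Split the remainder into URI, suite and components.
    set -- $rest
    if [ "$#" -lt 3 ]; then
        echo "expected URI, suite and at least one component"
        return 1
    fi
    case "$1" in
        http://*|https://*|ftp://*) ;;
        *) echo "unexpected URI: $1"; return 1 ;;
    esac
}

# grep '^deb' /etc/apt/sources.list | while read -r line; do
#     check_deb_line "$line" || echo "  in: $line"
# done
```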

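On every node in the cluster, add the repository's signing key so that the correct CDH4 packages are downloaded during installation: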

#wget http://10.155.0.66/cdh/archive.key

#apt-key add archive.key

Update the package lists on every node in the cluster:

#apt-get update

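1.5 Configure the Network and Hostname of Each Node

1.5.1 Configure the IP Address on All Nodes

Edit /etc/network/interfaces on every node and set that node's IP address. Note that the address must be configured on the eth0 interface. The example below is for 10.255.0.120:

#vim /etc/network/interfaces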

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto eth0

iface eth0 inet static

address 10.255.0.120

netmask 255.255.255.0

gateway 10.255.0.254

Restart networking so that the configuration takes effect:

#/etc/init.d/networking restart
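Since only the address differs between nodes, the file can be generated per node. A minimal sketch, assuming every node uses eth0, netmask 255.255.255.0 and gateway 10.255.0.254 as in the example above; `make_interfaces` is a hypothetical helper:

```shell
# Hypothetical helper: print /etc/network/interfaces content for one node.
# Usage: make_interfaces IP [GATEWAY] [NETMASK]; defaults follow the example above.
make_interfaces() {
    addr=$1
    gw=${2:-10.255.0.254}
    mask=${3:-255.255.255.0}
    cat <<EOF
# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet static
address $addr
netmask $mask
gateway $gw
EOF
}

# Preview for Namenode2, then write it after backing up the old file:
# make_interfaces 10.255.0.145
# cp /etc/network/interfaces /etc/network/interfaces.bak
# make_interfaces 10.255.0.145 > /etc/network/interfaces
```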

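1.5.2 Configure Hostnames on All Nodes

1. Edit /etc/hosts on every node so that it contains each IP address and the corresponding fully qualified domain name (FQDN):

#vim /etc/hosts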

10.255.0.120 namenode1.uc.com

10.255.0.145 namenode2.uc.com

10.255.0.146 datanode1.uc.com

10.255.0.149 datanode2.uc.com

10.255.0.150 datanode3.uc.com

2. Edit /etc/hostname on every node to set that node's own hostname.

For example, on 10.255.0.120, set the hostname:

# vim /etc/hostname

namenode1.uc.com

3. Reboot so that the new hostname takes effect:

#reboot

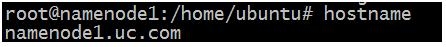
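The hosts file and hostname can both be derived from one list of nodes. In the sketch below, `CLUSTER_HOSTS`, `hosts_entries` and `fqdn_for_ip` are hypothetical names. It also appends a short alias (for example `namenode2`) after each FQDN. That alias is an assumption, not part of the original hosts file; it is included because later steps such as `scp ... namenode2:/etc/hadoop/conf` and `Namenode1:8031` in yarn-site.xml use short names:

```shell
# IP and FQDN of every node, as planned in sections 1.1 and 1.5.2.
CLUSTER_HOSTS="10.255.0.120 namenode1.uc.com
10.255.0.145 namenode2.uc.com
10.255.0.146 datanode1.uc.com
10.255.0.149 datanode2.uc.com
10.255.0.150 datanode3.uc.com"

# Hypothetical helper: print "IP FQDN shortname" lines for /etc/hosts.
# The short alias is an assumed addition so that names like "namenode2" resolve.
hosts_entries() {
    printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip fqdn; do
        [ -n "$ip" ] || continue
        printf '%s %s %s\n' "$ip" "$fqdn" "${fqdn%%.*}"
    done
}

# Hypothetical helper: the FQDN that /etc/hostname should hold for an IP.
fqdn_for_ip() {
    printf '%s\n' "$CLUSTER_HOSTS" | awk -v ip="$1" '$1 == ip { print $2 }'
}

# hosts_entries >> /etc/hosts                # review the file afterwards
# fqdn_for_ip 10.255.0.120 > /etc/hostname
```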

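1.5.3 Confirm That Each Host Is Recognized on the Network

1. Run uname -a and check that the hostname it prints matches the output of the hostname command. For example, on 10.255.0.120, run:

#uname -a

#hostname

2. Run ifconfig and confirm that the inet addr of eth0 is the configured IP address.

For example, on 10.255.0.120, run: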

#ifconfig

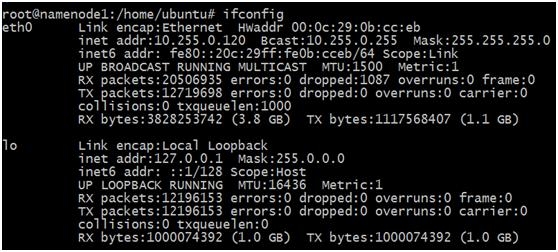
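Both checks can be combined into one function that compares the hostname and the eth0 address against the expected pair. A sketch, assuming the net-tools `ifconfig` output format of Ubuntu 12.04 (`inet addr:10.255.0.120  Bcast:...`); `inet_addr` and `check_identity` are hypothetical names:

```shell
# Hypothetical helper: print the IPv4 address from `ifconfig eth0` output
# in the net-tools format, e.g. "inet addr:10.255.0.120  Bcast:...".
inet_addr() {
    printf '%s\n' "$1" | sed -n 's/.*inet addr:\([0-9.]*\).*/\1/p' | head -n 1
}

# Hypothetical helper: compare a node's hostname and eth0 address with
# the expected FQDN and IP; prints OK or the first mismatch.
check_identity() {
    actual_host=$1 ifconfig_out=$2 want_host=$3 want_ip=$4
    if [ "$actual_host" != "$want_host" ]; then
        echo "hostname is $actual_host, expected $want_host"
        return 1
    fi
    got_ip=$(inet_addr "$ifconfig_out")
    if [ "$got_ip" != "$want_ip" ]; then
        echo "eth0 address is $got_ip, expected $want_ip"
        return 1
    fi
    echo "OK: $want_host $want_ip"
}

# On 10.255.0.120:
# check_identity "$(hostname)" "$(ifconfig eth0)" namenode1.uc.com 10.255.0.120
```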

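1.6 Configure Passwordless SSH from Namenode1 to the Other Nodes

Passwordless SSH lets you log in from Namenode1 to the other nodes in the cluster, and copy files from Namenode1 to them, without entering a password. The steps are as follows: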

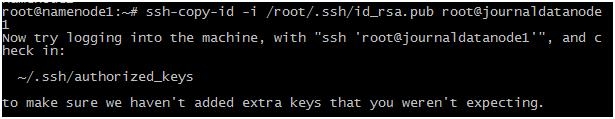

1. On Namenode1, run:

#ssh-keygen

Press Enter at each prompt. The generated private key is saved in .ssh/id_rsa, as shown in the figure.

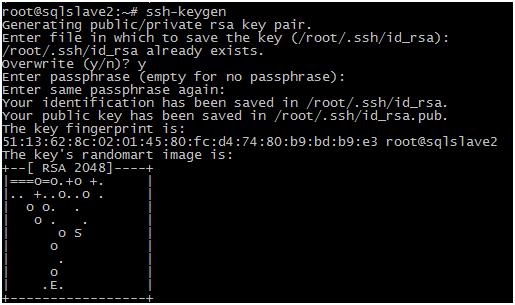

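2. On Namenode1, copy the public key to the other nodes in the cluster so that Namenode1 can log in to them without a password.

For example, to copy the key to Namenode2: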

#ssh-copy-id -i /root/.ssh/id_rsa.pub root@namenode2.uc.com

Repeat this for every other node in the cluster; only the hostname changes.
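With four target nodes, a loop saves typing. A minimal sketch, assuming the root account (matching the /root/.ssh path above) and the FQDNs from /etc/hosts; `OTHER_NODES` and `copy_keys` are hypothetical names, and `DRY_RUN=1` only prints the commands:

```shell
# The other nodes, from /etc/hosts in section 1.5.2.
OTHER_NODES="namenode2.uc.com datanode1.uc.com datanode2.uc.com datanode3.uc.com"

# Hypothetical helper: copy root's public key to every other node.
# With DRY_RUN=1 it only prints the commands.
copy_keys() {
    for host in $OTHER_NODES; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh-copy-id -i /root/.ssh/id_rsa.pub root@$host"
        else
            ssh-copy-id -i /root/.ssh/id_rsa.pub "root@$host"
        fi
    done
}

# Afterwards, BatchMode makes ssh fail instead of prompting for a password:
# for h in $OTHER_NODES; do ssh -o BatchMode=yes "root@$h" hostname; done
```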

1.7 Install Highly Available CDH4

Install the corresponding services on the following nodes:

| No. | VM IP | Services |
| --- | --- | --- |
| 1 | 10.255.0.120 | Namenode1, ResourceManager, zkfc |
| 2 | 10.255.0.145 | Namenode2, zkfc |
| 3 | 10.255.0.146 | Journalnode1, datanode1, NodeManager, MapReduce, Zookeeper |
| 4 | 10.255.0.149 | Journalnode2, datanode2, NodeManager, MapReduce, Zookeeper |
| 5 | 10.255.0.150 | Journalnode3, datanode3, NodeManager, MapReduce, Zookeeper |

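1. Install and deploy ZooKeeper

Cloudera advises that if you are deploying a highly available (NameNode HA) cluster, you must install and deploy a ZooKeeper ensemble first. For ZooKeeper installation and deployment details, see ZooKeeper Installation.

2. Install and deploy ZKFC

For a highly available (NameNode HA) cluster, install and deploy ZKFC, the ZooKeeper client that drives failover, after the ZooKeeper ensemble is in place. For ZKFC installation and deployment details, see ZKFC Installation.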

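3. Install the NameNode (on Namenode1 and Namenode2):

# apt-get install hadoop-hdfs-namenode

Verify that the NameNode package is installed:

#dpkg -l|grep namenode

4. Install the JournalNode (on datanode1, datanode2 and datanode3):

#apt-get install hadoop-hdfs-journalnode

Verify that the JournalNode package is installed:

#dpkg -l|grep journalnode

5. Install the DataNode (on datanode1, datanode2 and datanode3):

#apt-get install hadoop-hdfs-datanode

Verify that the DataNode package is installed:

#dpkg -l|grep datanode

6. Install the YARN ResourceManager (on Namenode1):

#apt-get install hadoop-yarn-resourcemanager

Verify that the ResourceManager package is installed:

#dpkg -l|grep resourcemanager

7. Install the YARN NodeManager (on datanode1, datanode2 and datanode3):

# apt-get install hadoop-yarn-nodemanager

Verify that the NodeManager package is installed:

#dpkg -l|grep nodemanager

8. Install hadoop-mapreduce (on datanode1, datanode2 and datanode3):

#apt-get install hadoop-mapreduce

Verify that the MapReduce package is installed:

#dpkg -l|grep mapreduce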
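Which of these packages goes on which host follows the table at the start of this section. The sketch below encodes that mapping; `packages_for` is a hypothetical helper, and the `hadoop-hdfs-zkfc` and `zookeeper-server` names for ZKFC and ZooKeeper (installed per the separate guides referenced above) are assumptions based on CDH4 packaging, so confirm them against your repository with `apt-cache search` first:

```shell
# Hypothetical helper: print the CDH4 packages a host needs, following the
# plan in this section. hadoop-hdfs-zkfc and zookeeper-server are assumed
# package names for the ZKFC and ZooKeeper steps covered in separate guides.
packages_for() {
    case "$1" in
        namenode1*)
            echo "hadoop-hdfs-namenode hadoop-hdfs-zkfc hadoop-yarn-resourcemanager" ;;
        namenode2*)
            echo "hadoop-hdfs-namenode hadoop-hdfs-zkfc" ;;
        datanode[123]*)
            echo "zookeeper-server hadoop-hdfs-journalnode hadoop-hdfs-datanode hadoop-yarn-nodemanager hadoop-mapreduce" ;;
        *)
            return 1 ;;
    esac
}

# On each node (review the list before installing):
# apt-get install $(packages_for "$(hostname)")
```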

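1.8 Deploy HDFS

Check the default HDFS configuration directories

The default HDFS configuration files are in /etc/hadoop/conf.dist and /etc/hadoop/conf, and the two directories have identical contents. To configure our own HDFS, edit the files under /etc/hadoop/conf.

Customize the HDFS configuration files on Namenode1

1. #vim /etc/hadoop/conf/core-site.xml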

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>Namenode1.uc.com:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>Namenode2.uc.com:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>Namenode1.uc.com:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>Namenode2.uc.com:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://datanode1.uc.com:8485;datanode2.uc.com:8485;datanode3.uc.com:8485/mycluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data1/1/dfs/jn</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence(hdfs)</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/var/lib/hadoop-hdfs/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>5000</value>

<description>

SSH connection timeout, in milliseconds, to use with the builtin

sshfence fencer.

</description>

</property>

<property>

<name>ha.zookeeper.quorum.mycluster</name>

<value>datanode1.uc.com:2181,datanode2.uc.com:2181,datanode3.uc.com:2181</value>

</property>

</configuration>

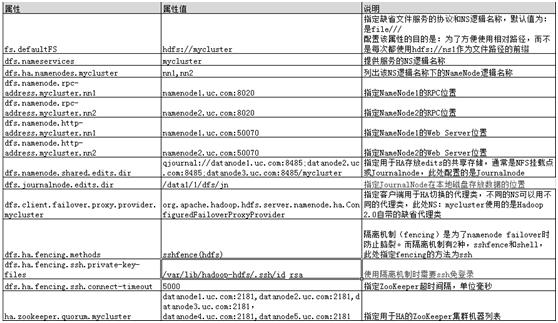

Notes on the configuration:

2. #vim /etc/hadoop/conf/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data1/1/dfs/nn</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data1/1/dfs/dn,/data1/2/dfs/dn,/data1/3/dfs/dn</value>

</property>

</configuration>

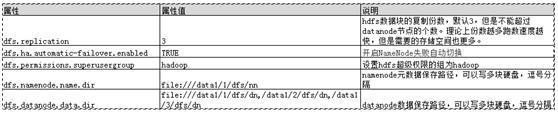

Notes on the configuration:

3. #vim /etc/hadoop/conf/slaves

datanode1.uc.com

datanode2.uc.com

datanode3.uc.com

Notes on the configuration:

The slaves file lists the domain names of all DataNodes.

4. Configure environment variables in /etc/profile

#vim /etc/profile

export JAVA_HOME=/usr/lib/jdk1.7.0

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

export CLASSPATH=$CLASSPATH:.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:/usr/lib/hive/lib/:/usr/lib/hadoop/

#source /etc/profile

5. Configure environment variables in hadoop-env.sh

#vim /etc/hadoop/conf/hadoop-env.sh

# Set Hadoop-specific environment variables here.

# Forcing YARN-based mapreduce implementation.

# Make sure to comment out if you want to go back to the default or

# if you want this to be tweakable on a per-user basis

export HADOOP_MAPRED_HOME=/usr/lib/hadoop-mapreduce

6. Copy the configuration files from Namenode1 to all other nodes in the cluster (run these commands in /etc/hadoop/conf):

#scp hdfs-site.xml core-site.xml slaves namenode2:/etc/hadoop/conf

#scp hdfs-site.xml core-site.xml slaves datanode1:/etc/hadoop/conf

#scp hdfs-site.xml core-site.xml slaves datanode2:/etc/hadoop/conf

#scp hdfs-site.xml core-site.xml slaves datanode3:/etc/hadoop/conf

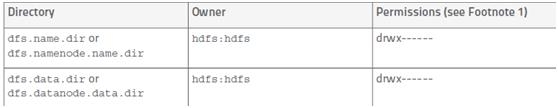
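Hadoop property names are case-sensitive, so a misspelled name such as `dfs.ha.Namenodes.mycluster` is silently ignored rather than rejected. Before starting any daemon, a quick scan for capital letters in `<name>` elements can catch such typos. A sketch with a hypothetical `mixed_case_names` helper; it assumes each `<name>...</name>` pair sits on a single line, as in the files above:

```shell
# Hypothetical helper: list <name> values that contain upper-case letters.
# Hadoop property names are case-sensitive and almost all are lower-case,
# so each hit deserves a second look (a few real keys, such as
# fs.AbstractFileSystem.hdfs.impl, do contain capitals).
mixed_case_names() {
    sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$1" | grep '[[:upper:]]'
}

# for f in /etc/hadoop/conf/*-site.xml; do echo "== $f"; mixed_case_names "$f"; done
```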

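Create the local storage directories specified in the configuration files and set their permissions

The table below, from the official Hadoop documentation, lists the correct owner, group, and permissions for the local storage directories: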

1. Per dfs.namenode.name.dir=file:///data1/1/dfs/nn in hdfs-site.xml, create the NameNode metadata directory on every NameNode host and restrict its permissions:

#mkdir -p /data1/1/dfs/nn

#chown -R hdfs:hdfs /data1/1/dfs/nn

#chmod 700 /data1/1/dfs/nn

2. Per dfs.journalnode.edits.dir=/data1/1/dfs/jn in core-site.xml, create the directory on every JournalNode and set its ownership:

#sudo mkdir -p /data1/1/dfs/jn

#sudo chown -R hdfs:hdfs /data1/1/dfs/jn

3. Per dfs.datanode.data.dir in hdfs-site.xml, create the directories on every DataNode and set their ownership:

#mkdir -p /data1/1/dfs/dn /data1/2/dfs/dn /data1/3/dfs/dn

#chown -R hdfs:hdfs /data1/1/dfs/dn /data1/2/dfs/dn /data1/3/dfs/dn
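The three steps above follow the same pattern: take a comma-separated directory list from the configuration, strip any `file://` prefix, create each directory and change its owner. A sketch of that pattern; `dir_list_to_paths` and `make_dirs` are hypothetical helpers, `DRY_RUN=1` prints the commands instead of running them (a real run needs root for `chown`), and the `chmod 700` on the NameNode directory still has to be done separately:

```shell
# Hypothetical helper: turn a comma-separated Hadoop directory list
# (file:// URIs or plain paths) into one local path per line.
dir_list_to_paths() {
    printf '%s\n' "$1" | tr ',' '\n' | sed -e 's/^[[:space:]]*//' -e 's|^file://||'
}

# Hypothetical helper: create every directory in the list and give it to
# OWNER (e.g. hdfs:hdfs). With DRY_RUN=1 the commands are only printed.
make_dirs() {
    owner=$1 list=$2
    dir_list_to_paths "$list" | while read -r d; do
        [ -n "$d" ] || continue
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "mkdir -p $d && chown -R $owner $d"
        else
            mkdir -p "$d" && chown -R "$owner" "$d"
        fi
    done
}

# On each DataNode, using the dfs.datanode.data.dir value from hdfs-site.xml:
# make_dirs hdfs:hdfs "file:///data1/1/dfs/dn,/data1/2/dfs/dn,/data1/3/dfs/dn"
```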

1.9 Start HDFS

Start HDFS strictly in the following order; otherwise errors may occur.

1. Start all JournalNodes

# service hadoop-hdfs-journalnode start

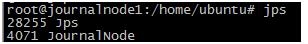

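Verify that the JournalNodes started.

For example, to verify JournalNode1, run jps and press Enter. If a JournalNode process appears, as in the figure, it started successfully.

2. Format Namenode1

#sudo -u hdfs hadoop namenode -format

Notes:

1) Run the format as the hdfs user, because the directories created under /data1/1/dfs/nn are owned by hdfs.

2) Format Namenode1 only on the first startup and never again afterwards. Formatting it later erases the metadata already stored on Namenode1, and the DataNodes can no longer find matching metadata.

3. On Namenode1 (the NameNode that was just formatted, since this command copies its local edits), initialize the shared edits directory on the JournalNodes

# sudo -u hdfs hdfs namenode -initializeSharedEdits

Note: run this only on the first startup. Running it again formats all metadata stored on the JournalNodes, after which Namenode1 and Namenode2 fail to synchronize and report errors.

4. Start Namenode1

# sudo service hadoop-hdfs-namenode start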

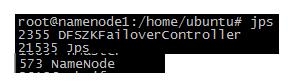

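Verify that the NameNode started. For example, to verify Namenode1, run jps; a NameNode process indicates a successful start.

5. On Namenode2, copy Namenode1's metadata, then start Namenode2

# sudo -u hdfs hdfs namenode -bootstrapStandby

Note: this is only needed on the first startup; afterwards the metadata is synchronized automatically through the JournalNodes.

# sudo service hadoop-hdfs-namenode start

Verify that the NameNode started. For example, to verify Namenode2, run jps; a NameNode process indicates a successful start.

6. Start all DataNodes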

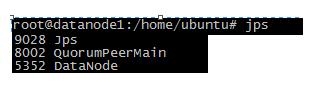

# /etc/init.d/hadoop-hdfs-datanode start

Verify that each DataNode started. For example, on datanode1, run jps and press Enter; a DataNode process indicates a successful start.
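Checking `jps` on five hosts by eye is error-prone. The sketch below compares `jps` output against the HDFS daemons the plan in section 1.1 puts on each host; `jps_has`, `expected_daemons` and `check_daemons` are hypothetical helpers, and ZooKeeper and ZKFC are deliberately left out because their setup is covered in separate guides:

```shell
# Hypothetical helper: succeed if `jps` output lists a JVM named exactly $1.
jps_has() {
    printf '%s\n' "$2" | awk -v want="$1" '$2 == want { found = 1 } END { exit !found }'
}

# HDFS daemons expected on each host at this stage (ZooKeeper/ZKFC omitted).
expected_daemons() {
    case "$1" in
        namenode*) echo "NameNode" ;;
        datanode*) echo "JournalNode DataNode" ;;
    esac
}

# Report each expected daemon and fail if any is missing.
check_daemons() {
    rc=0
    for p in $(expected_daemons "$1"); do
        if jps_has "$p" "$2"; then
            echo "running: $p"
        else
            echo "MISSING: $p"
            rc=1
        fi
    done
    return $rc
}

# As root on each node:
# check_daemons "$(hostname)" "$(jps)"
```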

1.10 Verify the HDFS File Structure

On any node, create the /input directory and set its permissions:

#sudo -u hdfs hadoop fs -mkdir /input

#sudo -u hdfs hadoop fs -chmod -R 1755 /input

On any node, create the /output directory and set its permissions:

#sudo -u hdfs hadoop fs -mkdir /output

#sudo -u hdfs hadoop fs -chmod -R 1755 /output

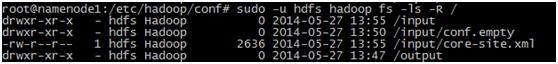

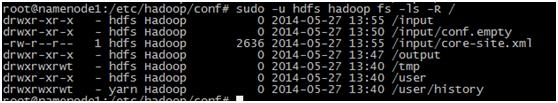

View the HDFS file structure:

#sudo -u hdfs hadoop fs -ls -R /

Output like that in the figure shows that HDFS has started successfully and is usable.

1.11 Verify That Automatic HA Failover Works

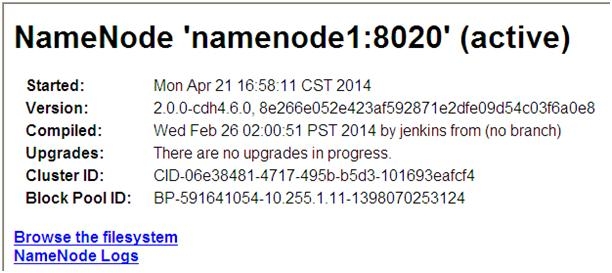

1. Open http://10.255.0.120:50070 in a browser. Because Namenode1 was started first, Namenode1 is active.

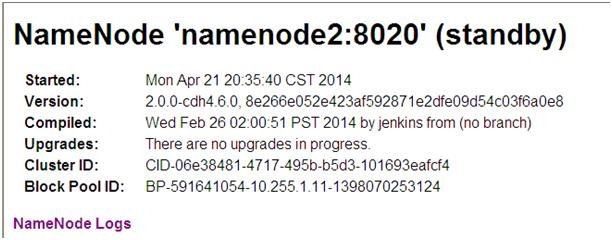

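2. Open http://10.255.0.145:50070 in a browser. Namenode2 is in standby state.

3. Stop Namenode1:

# service hadoop-hdfs-namenode stop

About 5 seconds later, open http://10.255.0.145:50070 again: Namenode2 has automatically switched to the active state. (The 5000 ms value of dfs.ha.fencing.ssh.connect-timeout in core-site.xml is the SSH connection timeout of the sshfence fencer, not a ZooKeeper timeout, so it bounds only the fencing step of the failover.)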
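The web UI is one way to see the HA state; `hdfs haadmin -getServiceState <id>` reports it from the command line. A sketch, assuming the NameNode IDs `nn1` and `nn2` configured in dfs.ha.namenodes.mycluster; `ha_states` and `exactly_one_active` are hypothetical helpers:

```shell
# Hypothetical helper: print "ID state" for each NameNode ID given, using
# `hdfs haadmin -getServiceState`. Run it as the hdfs user.
ha_states() {
    for id in "$@"; do
        echo "$id $(hdfs haadmin -getServiceState "$id" 2>&1)"
    done
}

# Succeed if exactly one line of ha_states output reports "active".
exactly_one_active() {
    [ "$(printf '%s\n' "$1" | awk '$2 == "active"' | wc -l | tr -d ' ')" = 1 ]
}

# Before and after stopping Namenode1:
# states=$(ha_states nn1 nn2); echo "$states"; exactly_one_active "$states"
```

Source the functions in a shell running as the hdfs user (for example one started with `sudo -u hdfs bash`). After Namenode1 is stopped, its line shows a connection error instead of a state, while nn2 should read active.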

1.12 Deploy YARN

Customize the YARN configuration files on Namenode1

1. #vim /etc/hadoop/conf/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>Namenode1:8031</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>Namenode1:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>Namenode1:8030</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>Namenode1:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>Namenode1:8088</value>

</property>

<property>

<description>Classpath for typical applications.</description>

<name>yarn.application.classpath</name>

<value>

$HADOOP_CONF_DIR,

$HADOOP_COMMON_HOME/*,$HADOOP_COMMON_HOME/lib/*,

$HADOOP_HDFS_HOME/*,$HADOOP_HDFS_HOME/lib/*,

$HADOOP_MAPRED_HOME/*,$HADOOP_MAPRED_HOME/lib/*,

$YARN_HOME/*,$YARN_HOME/lib/*

</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce.shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.local-dirs</name>

<value>file:///data1/1/yarn/local,file:///data1/2/yarn/local,file:///data1/3/yarn/local</value>

</property>

<property>

<name>yarn.nodemanager.log-dirs</name>

<value>file:///data1/1/yarn/logs,file:///data1/2/yarn/logs,file:///data1/3/yarn/logs</value>

</property>

<property>

<description>Where to aggregate logs</description>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/var/log/hadoop-yarn/apps</value>

</property>

<property>

<name>yarn.web-proxy.address</name>

<value>datanode3:54315</value>

</property>

</configuration>

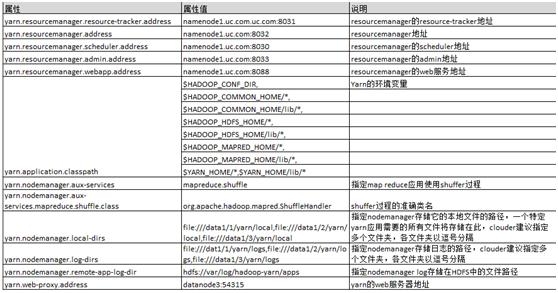

Notes on the configuration:

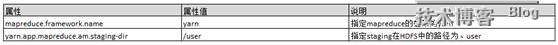

2. #vim /etc/hadoop/conf/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.staging-dir</name>

<value>/user</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>Journalnode1.uc.com:10020</value>

<description>The address of the JobHistory Server host:port</description>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>Journalnode1.uc.com:19888</value>

<description>The address of the JobHistory Server web application host:port</description>

</property>

</configuration>

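Notes on the configuration:

Create the local directories specified in the configuration files and set their permissions

The table below, from the official Hadoop documentation, lists the correct owner, group, and permissions for these local directories:

| Directory | Owner | Permissions |
| --- | --- | --- |
| yarn.nodemanager.local-dirs | yarn:yarn | drwxr-xr-x |
| yarn.nodemanager.log-dirs | yarn:yarn | drwxr-xr-x |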

Per yarn.nodemanager.local-dirs=file:///data1/1/yarn/local,file:///data1/2/yarn/local,file:///data1/3/yarn/local in yarn-site.xml, create the directories on every DataNode and set their ownership:

# mkdir -p /data1/1/yarn/local /data1/2/yarn/local /data1/3/yarn/local

#chown -R yarn:yarn /data1/1/yarn/local /data1/2/yarn/local /data1/3/yarn/local

Per yarn.nodemanager.log-dirs=file:///data1/1/yarn/logs,file:///data1/2/yarn/logs,file:///data1/3/yarn/logs in yarn-site.xml, create the directories on every DataNode and set their ownership:

#mkdir -p /data1/1/yarn/logs /data1/2/yarn/logs /data1/3/yarn/logs

#chown -R yarn:yarn /data1/1/yarn/logs /data1/2/yarn/logs /data1/3/yarn/logs

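Create the HDFS directories specified in the configuration files

Per yarn.nodemanager.remote-app-log-dir in yarn-site.xml (/var/log/hadoop-yarn/apps in HDFS), create /var/log/hadoop-yarn in HDFS and set the correct ownership:

#sudo -u hdfs hadoop fs -mkdir /var/log/hadoop-yarn

#sudo -u hdfs hadoop fs -chown yarn:mapred /var/log/hadoop-yarn

Note: this directory must be created because it is the parent of /var/log/hadoop-yarn/apps, the location configured by that yarn-site.xml property.

Per yarn.app.mapreduce.am.staging-dir=/user in mapred-site.xml, create the /user directory in HDFS and set the correct permissions: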

#sudo -u hdfs hadoop fs -mkdir /user

#sudo -u hdfs hadoop fs -chmod -R 1755 /user

#sudo -u hdfs hadoop fs -chown yarn /user

With HDFS running, create the /user subdirectory /user/history and set the correct permissions:

#sudo -u hdfs hadoop fs -mkdir /user/history

#sudo -u hdfs hadoop fs -chmod -R 1777 /user/history

#sudo -u hdfs hadoop fs -chown yarn /user/history

On any node, create the /tmp directory and set the correct permissions. If /tmp does not exist or lacks the correct permissions, CDH components may run into problems later.

#sudo -u hdfs hadoop fs -mkdir /tmp

#sudo -u hdfs hadoop fs -chmod -R 1777 /tmp
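Each HDFS directory above is created with the same mkdir, chmod and chown sequence. A minimal sketch of a wrapper; `run` and `hdfs_dir` are hypothetical helpers, `DRY_RUN=1` prints the commands instead of executing them, and unlike the commands above it applies the mode non-recursively, which makes no difference for a freshly created empty directory:

```shell
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: create an HDFS directory as the hdfs superuser,
# then set its mode and, if given, its owner.
hdfs_dir() {
    dir=$1 mode=$2 owner=${3:-}
    run sudo -u hdfs hadoop fs -mkdir "$dir"
    run sudo -u hdfs hadoop fs -chmod "$mode" "$dir"
    if [ -n "$owner" ]; then
        run sudo -u hdfs hadoop fs -chown "$owner" "$dir"
    fi
}

# The directories created above:
# hdfs_dir /user 1755 yarn
# hdfs_dir /user/history 1777 yarn
# hdfs_dir /tmp 1777
```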

Verify the HDFS file structure:

# sudo -u hdfs hadoop fs -ls -R /

You should see output like that in the figure.

Copy the configuration files from Namenode1 to all other nodes in the cluster (run these commands in /etc/hadoop/conf):

#scp yarn-site.xml mapred-site.xml hadoop-env.sh namenode2:/etc/hadoop/conf

#scp yarn-site.xml mapred-site.xml hadoop-env.sh datanode1:/etc/hadoop/conf

#scp yarn-site.xml mapred-site.xml hadoop-env.sh datanode2:/etc/hadoop/conf

#scp yarn-site.xml mapred-site.xml hadoop-env.sh datanode3:/etc/hadoop/conf

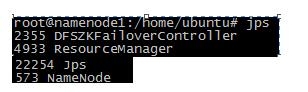
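The same four-host copy is needed twice in this article (the HDFS files in section 1.8 and the YARN files here). A sketch of a reusable loop; `push_conf` is a hypothetical helper, and the short hostnames assume they resolve on Namenode1, as the original scp commands already require:

```shell
# Hypothetical helper: copy files from /etc/hadoop/conf on Namenode1 to the
# same directory on every other node. DRY_RUN=1 prints the scp commands.
push_conf() {
    for host in namenode2 datanode1 datanode2 datanode3; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp $* $host:/etc/hadoop/conf"
        else
            (cd /etc/hadoop/conf && scp "$@" "$host:/etc/hadoop/conf")
        fi
    done
}

# push_conf hdfs-site.xml core-site.xml slaves
# push_conf yarn-site.xml mapred-site.xml hadoop-env.sh
```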

1.13 Start YARN

1. Start the ResourceManager (on Namenode1)

# /etc/init.d/hadoop-yarn-resourcemanager start

Verify that the ResourceManager started: run jps and press Enter; a ResourceManager process indicates a successful start.

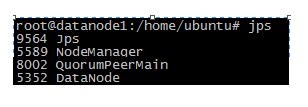

2. Start all NodeManagers

# /etc/init.d/hadoop-yarn-nodemanager start

Verify that each NodeManager started. For example, on datanode1, run jps and press Enter; a NodeManager process indicates a successful start.

1.14 Verify YARN

Perform the following steps on any node:

1. Create the input and output directories in HDFS (skip this if they already exist):

# sudo -u hdfs hadoop fs -mkdir /input

# sudo -u hdfs hadoop fs -mkdir /output

2. Copy /etc/hadoop/conf/core-site.xml into /input:

# sudo -u hdfs hadoop fs -put /etc/hadoop/conf/core-site.xml /input

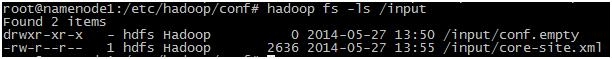

3. Check that the file was copied:

# sudo -u hdfs hadoop fs -ls /input

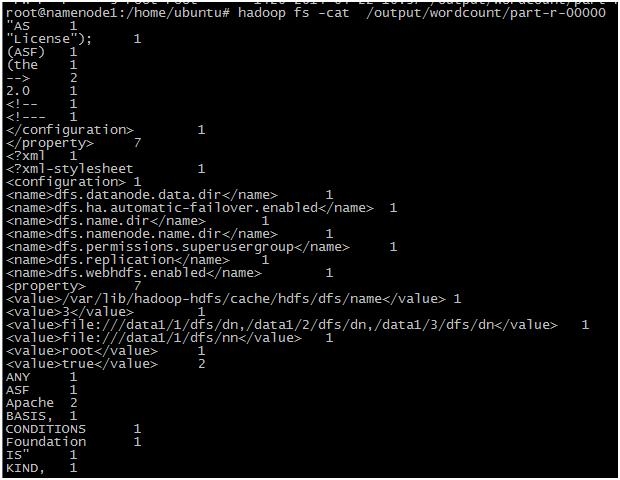

4. On any node where MapReduce is installed, run the Hadoop wordcount job, which counts the words in /input and writes the result to /output/wordcount:

# sudo -u hdfs hadoop jar /usr/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar wordcount /input/core-site.xml /output/wordcount

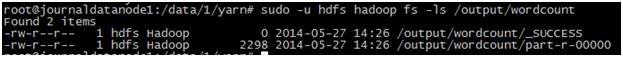

5. View the contents of the output directory:

# sudo -u hdfs hadoop fs -ls /output/wordcount

#sudo -u hdfs hadoop fs -cat /output/wordcount/part-r-00000

If the output looks like the figure, the MapReduce job succeeded and YARN is working.
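For a quick numeric summary instead of reading the whole file, the wordcount output (one `word<TAB>count` line per word) can be post-processed with awk and sort. A sketch with hypothetical helpers `total_words` and `top_words`:

```shell
# Hypothetical helper: read wordcount output ("word<TAB>count" per line) on
# stdin and print the total number of words counted.
total_words() {
    awk -F '\t' '{ total += $2 } END { print total + 0 }'
}

# Hypothetical helper: print the N most frequent words (default 10).
top_words() {
    sort -t "$(printf '\t')" -k2,2nr | head -n "${1:-10}"
}

# sudo -u hdfs hadoop fs -cat /output/wordcount/part-r-00000 | total_words
# sudo -u hdfs hadoop fs -cat /output/wordcount/part-r-00000 | top_words 5
```

MapReduce refuses to write into an output directory that already exists, so delete /output/wordcount before rerunning the job.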