Using Keepalived

Introduction to Keepalived

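As its name suggests, keepalived keeps a service alive; in networking terms, it keeps the service online. This is what is usually called high availability or hot standby, and its purpose is to prevent a single point of failure (a failure at one point that makes the whole system architecture unavailable).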

How Keepalived Works

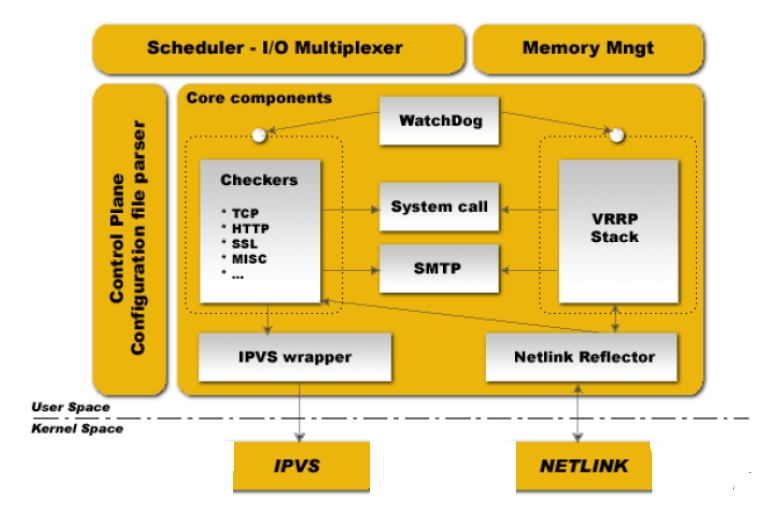

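keepalived has a modular design, with each module responsible for a different function:

core: the core of keepalived, responsible for starting and maintaining the main process and for loading and parsing the global configuration file

check: responsible for health checking (healthchecker); it includes the various health-check methods and the parsing of their configuration, including the LVS configuration

vrrp: the VRRPD child process, which implements the VRRP protocol

libipfwc: the iptables (ipchains) library, used when configuring LVS

libipvs*: used when configuring LVS

Note that keepalived and LVS are two entirely separate things; each does its own job and they simply work together.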

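After startup, keepalived runs three processes:

Parent process: memory management, child-process management, and so on

Child process: the VRRP child process

Child process: the healthchecker child process

As the figure shows, both child processes are supervised by the system WatchDog, and each handles its own job. The healthchecker child process checks the health of the servers, for example HTTP, LVS, and so on. If the healthchecker child process finds that a service on the MASTER is unavailable, it notifies its sibling VRRP child process on the same machine, which stops sending advertisements, removes the virtual IP, and switches to the BACKUP state.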

Lab:

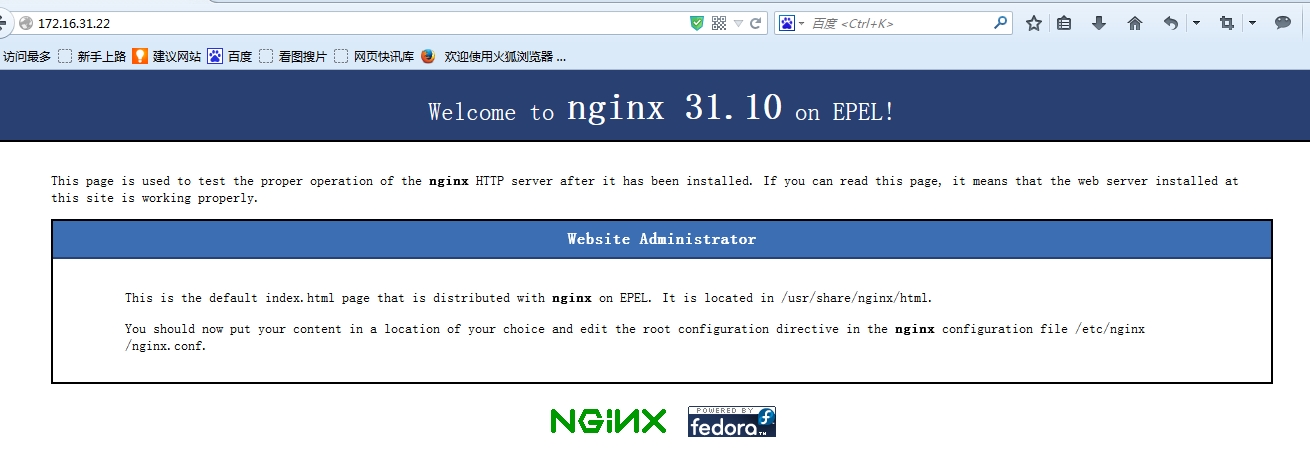

Host 1: 172.16.31.10

Host 2: 172.16.31.30

1. Download the RPM package locally and install it on both host 1 and host 2. This lab uses keepalived-1.2.13-1.el6.x86_64.rpm.

# yum install -y keepalived-1.2.13-1.el6.x86_64.rpm

2. Edit the configuration file /etc/keepalived/keepalived.conf. It is a good idea to back the file up before changing it (run the copy from /etc/keepalived):

# cp keepalived.conf keepalived.conf.bak
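
A slightly more defensive variant of the backup step could look like the sketch below. The function name `backup_conf` is made up for this illustration; it refuses to overwrite an existing .bak file, so running it a second time does not replace the pristine copy with an already edited one.

```shell
#!/bin/sh
# backup_conf FILE
# Copy FILE to FILE.bak (keeping mode and timestamps) unless a backup already exists.
backup_conf() {
    conf=$1
    if [ ! -f "$conf" ]; then
        echo "no such file: $conf" >&2
        return 1
    fi
    if [ -e "$conf.bak" ]; then
        echo "backup already exists, leaving it untouched: $conf.bak" >&2
        return 0
    fi
    cp -p "$conf" "$conf.bak"
}

# Example (path taken from the step above):
#   backup_conf /etc/keepalived/keepalived.conf
```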

! Configuration File for keepalived (lines beginning with an exclamation mark are comments)

global_defs { # global settings

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

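vrrp_instance VI_1 { # VRRP instance 1

state MASTER # initial state: master

interface eth0 # interface this instance runs on

virtual_router_id 51 # virtual router ID; must be the same on master and backup

priority 100 # priority; the node with the highest priority becomes master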

advert_int 1

authentication {

auth_type PASS # password-based authentication

auth_pass 1111 # authentication password

}

virtual_ipaddress { # list of virtual IP addresses

172.16.31.22

}

}

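3. Configure the backup node in /etc/keepalived/keepalived.conf. The virtual_router_id and auth_pass values must be identical on the master and the backup. The master listing in step 2 shows 51 and 1111, while this listing and the master listing in step 9 use 101 and 123456; whichever pair is chosen, set the same values on both nodes.

vrrp_instance VI_1 {

state BACKUP # initial state: backup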

interface eth0

virtual_router_id 101

priority 99 # only needs to be lower than the master's priority

advert_int 1

authentication {

auth_type PASS

auth_pass 123456 # must match the master

}

virtual_ipaddress {

172.16.31.22

}

}
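
Both nodes must use the same virtual_router_id and auth_pass for a given instance (the comment on auth_pass above says as much), so comparing the two files before starting the service can catch a mismatch early. The sketch below is one way to do that with awk. The function names `vrrp_value` and `check_pair` and the file names in the usage line are assumptions for illustration, and the parsing is deliberately simple: it does not track braces, it only looks for keywords between one `vrrp_instance` line and the next.

```shell
#!/bin/sh
# vrrp_value FILE INSTANCE KEY
# Print the value of KEY found inside "vrrp_instance INSTANCE { ... }" in FILE.
vrrp_value() {
    awk -v inst="$2" -v key="$3" '
        { sub(/[#!].*/, "") }                                    # drop comments
        $1 == "vrrp_instance" && $2 == inst { inside = 1; next } # our instance starts
        inside && $1 == "vrrp_instance"     { inside = 0 }       # another instance starts
        inside && $1 == key                 { print $2; exit }
    ' "$1"
}

# check_pair MASTER_FILE BACKUP_FILE INSTANCE
# Report every setting that has to agree on both nodes but does not.
check_pair() {
    master=$1 backup=$2 inst=$3 rc=0
    for key in virtual_router_id auth_pass advert_int; do
        m=$(vrrp_value "$master" "$inst" "$key")
        b=$(vrrp_value "$backup" "$inst" "$key")
        if [ "$m" != "$b" ]; then
            echo "MISMATCH $key: master=$m backup=$b"
            rc=1
        fi
    done
    return $rc
}

# Example (hypothetical copies of the two nodes' files):
#   check_pair node1-keepalived.conf node2-keepalived.conf VI_1
```

Run against the step 2 and step 3 listings as printed, this would report virtual_router_id and auth_pass as mismatched.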

4. Start the keepalived service on host 1

# service keepalived start

Starting keepalived: [ OK ]

On host 1: # ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a7:90:c5 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.10/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.22/32 scope global eth0 (the virtual IP has been added)

inet6 fe80::20c:29ff:fea7:90c5/64 scope link

valid_lft forever preferred_lft forever
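
To check for the virtual IP from a script rather than by eye, the address listing can be filtered. The helper below is a minimal sketch; the name `has_vip` is made up here, and it reads the listing from standard input so that it can be tried on saved output as well as on a live system.

```shell
#!/bin/sh
# has_vip ADDRESS
# Succeed if the "ip addr show" output on stdin lists ADDRESS as an inet address.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" {
            split($2, parts, "/")          # "172.16.31.22/32" -> "172.16.31.22"
            if (parts[1] == vip) found = 1
        }
        END { exit !found }
    '
}

# Example, using the interface and address from this lab:
#   ip addr show dev eth0 | has_vip 172.16.31.22 && echo "VIP is on this host"
```

The comparison is an exact string match on the address, so 172.16.31.222 is not mistaken for 172.16.31.22.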

5. Then stop the service on host 1 and check the log file

# service keepalived stop

Oct 10 17:00:49 localhost Keepalived[2791]: Stopping Keepalived v1.2.13 (09/14,2014)

Oct 10 17:00:49 localhost Keepalived_vrrp[2793]: VRRP_Instance(VI_1) removing protocol VIPs.

On host 1: # ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a7:90:c5 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.10/16 brd 172.16.255.255 scope global eth0

inet6 fe80::20c:29ff:fea7:90c5/64 scope link

valid_lft forever preferred_lft forever

At this point 172.16.31.22 is gone.

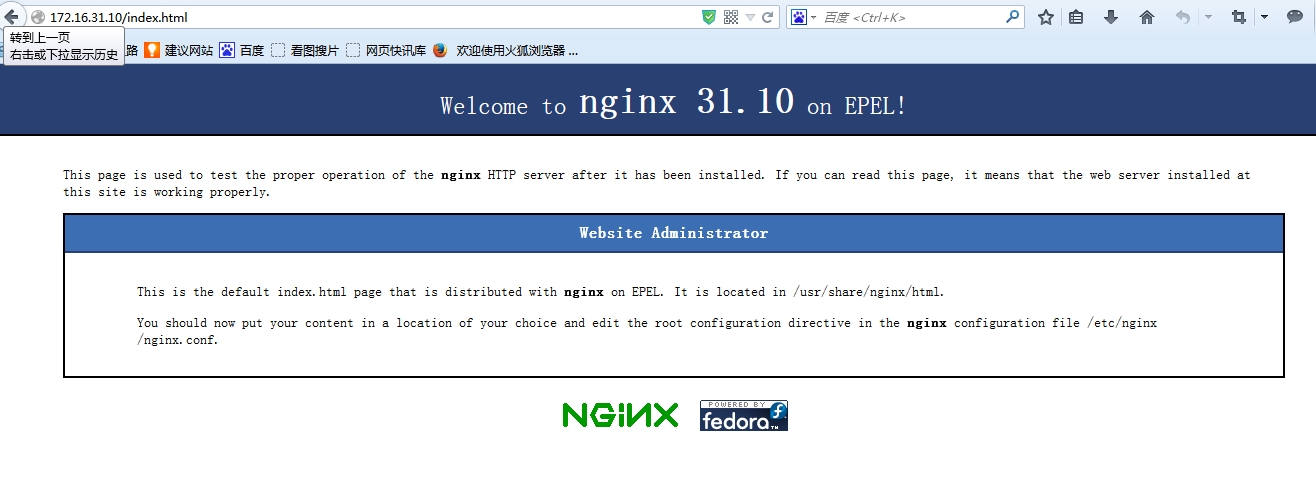

6. Now start the keepalived service on node 2 and check the log file

# service keepalived start

Sep 25 08:15:21 localhost Keepalived_vrrp[3954]: VRRP_Instance(VI_1) Transition to MASTER STATE

Sep 25 08:15:22 localhost Keepalived_vrrp[3954]: VRRP_Instance(VI_1) Entering MASTER STATE

Sep 25 08:15:22 localhost Keepalived_vrrp[3954]: VRRP_Instance(VI_1) setting protocol VIPs.

Since no other node is competing with host 2, host 2 is promoted to master.

# ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:c8:8f:e1 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.30/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.22/32 scope global eth0 (the virtual IP is now on host 2)

inet6 fe80::20c:29ff:fec8:8fe1/64 scope link

valid_lft forever preferred_lft forever

7. Stop the service on node 2 and then start it again; the log on node 1 shows:

Oct 10 17:40:59 localhost Keepalived_vrrp[2941]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election

(Node 1 received an advertisement with a lower priority than its own, so it forced a new election and became master.)

Oct 10 17:41:00 localhost Keepalived_vrrp[2941]: VRRP_Instance(VI_1) Entering MASTER STATE

Oct 10 17:41:00 localhost Keepalived_vrrp[2941]: VRRP_Instance(VI_1) setting protocol VIPs.

Oct 10 17:41:00 localhost Keepalived_healthcheckers[2940]: Netlink reflector reports IP 172.16.31.22 added
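
When following a failover across several restarts, it can help to reduce the log to just the state changes. The filter below is a sketch that assumes the syslog line format shown in this lab; the function name `vrrp_transitions` is made up, and the log path in the usage line is an assumption (the text above does not name the file).

```shell
#!/bin/sh
# vrrp_transitions
# Read syslog lines on stdin and print "<timestamp> <instance> <state>"
# for every "Entering <STATE> STATE" message from the VRRP child process.
vrrp_transitions() {
    sed -n 's/^\([A-Z][a-z]* *[0-9]* [0-9:]*\) .*VRRP_Instance(\([^)]*\)) *Entering \([A-Z]*\) STATE.*/\1 \2 \3/p'
}

# Example (log location is an assumption; adjust to the local syslog setup):
#   vrrp_transitions < /var/log/messages
```

Lines such as "setting protocol VIPs." or "Transition to MASTER STATE" are dropped, so each completed state change appears once.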

8. Next, configure the dual-master model by adding one more instance on each of nodes 1 and 2. On node 1:

vrrp_instance VI_2 {

state BACKUP

interface eth0

virtual_router_id 200

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 123

}

virtual_ipaddress {

172.16.31.222

}

}

On node 2:

vrrp_instance VI_2 {

state MASTER

interface eth0

virtual_router_id 200

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123

}

virtual_ipaddress {

172.16.31.222

}

}

Then on node 1: # ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:a7:90:c5 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.10/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.22/32 scope global eth0

inet6 fe80::20c:29ff:fea7:90c5/64 scope link

valid_lft forever preferred_lft forever

On node 2:

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:c8:8f:e1 brd ff:ff:ff:ff:ff:ff

inet 172.16.31.30/16 brd 172.16.255.255 scope global eth0

inet 172.16.31.222/32 scope global eth0

inet6 fe80::20c:29ff:fec8:8fe1/64 scope link

valid_lft forever preferred_lft forever

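9. A simple example of using keepalived notifications to control nginx

First install nginx on nodes 1 and 2, then add the following to the configuration file keepalived.conf:

vrrp_instance VI_1 {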

state MASTER

interface eth0

virtual_router_id 101

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

172.16.31.22

}

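notify_master "/etc/rc.d/init.d/nginx start" # script to run when this node becomes master

notify_backup "/etc/rc.d/init.d/nginx stop" # script to run when this node becomes backup

notify_fault "/etc/rc.d/init.d/nginx stop" # script to run when this node enters the fault state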

}
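
The three hooks above can also be expressed as a single script driven by the new state. The sketch below assumes keepalived's generic `notify` hook, which calls the script with the type (GROUP or INSTANCE), the name, and the target state as its first three arguments; the function names are made up for this illustration, and the command is printed instead of executed so the mapping can be tried safely.

```shell
#!/bin/sh
# nginx_action_for STATE
# Map a VRRP state to the nginx init-script action used in the hooks above.
nginx_action_for() {
    case "$1" in
        MASTER)       echo start ;;
        BACKUP|FAULT) echo stop ;;
        *)            return 1 ;;
    esac
}

# handle_notify TYPE NAME STATE
# Same argument order the generic notify hook is assumed to use.
handle_notify() {
    action=$(nginx_action_for "$3") || {
        echo "unknown state: $3" >&2
        return 1
    }
    # Printed rather than executed in this sketch.
    echo "/etc/rc.d/init.d/nginx $action"
}

# Example:
#   handle_notify INSTANCE VI_1 MASTER
```

Keeping the state-to-action mapping in one function means the start and stop behavior for all three states is defined in a single place.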

Apply the same notify configuration on node 2, then restart keepalived on both nodes 1 and 2:

# service keepalived restart

LISTEN 0 128 *:80 *:*

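Building on this, a shell command can be used as a health check:

vrrp_script chk_nx {

script "killall -0 nginx"

interval 1 # interval between script runs, in seconds

weight -5 # priority adjustment driven by the script result: -5 lowers the priority by 5, +10 would raise it by 10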

fall 2

rise 1

}
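
The check relies on signal 0: it is never delivered to the process, the call only reports whether the target exists and may be signalled, and the exit status is what keepalived evaluates. The sketch below shows the same idea with the shell's own `kill`; the function name `pid_alive` is made up for illustration.

```shell
#!/bin/sh
# pid_alive PID
# Succeed if a process with this PID exists and we are allowed to signal it.
# Signal 0 performs only that check; nothing is sent to the process.
pid_alive() {
    kill -0 "$1" 2>/dev/null
}

# The current shell is certainly running, so this branch is taken.
if pid_alive "$$"; then
    echo "shell $$ is alive"
fi
```

`killall -0 nginx` applies the same test by process name: it exits 0 while at least one nginx process exists and non-zero otherwise.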

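Then add the following at the end of the vrrp_instance block in the configuration file:

track_script {

chk_nx # references the script defined above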

}

Do the same on node 2, then start the service on node 2.

Then start node 1; because node 1 has a higher priority than node 2, it becomes the master.
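
The effect of `weight` on the election can be worked through with a little arithmetic. The sketch below assumes the usual behavior of a tracked script: a negative weight is subtracted while the check fails, and a positive weight is added while the check succeeds. The function name `effective_priority` is made up for this illustration.

```shell
#!/bin/sh
# effective_priority BASE WEIGHT OK
# BASE   configured priority
# WEIGHT weight of the tracked script
# OK     1 if the check currently passes, 0 if it fails
effective_priority() {
    base=$1 weight=$2 ok=$3
    if [ "$weight" -lt 0 ] && [ "$ok" -eq 0 ]; then
        echo $((base + weight))   # negative weight: lowered while the check fails
    elif [ "$weight" -gt 0 ] && [ "$ok" -eq 1 ]; then
        echo $((base + weight))   # positive weight: raised while the check passes
    else
        echo "$base"
    fi
}

# Values from this lab: priorities 100 and 99, weight -5
echo "node 1, nginx running: $(effective_priority 100 -5 1)"
echo "node 1, nginx stopped: $(effective_priority 100 -5 0)"
echo "node 2, nginx running: $(effective_priority 99 -5 1)"
```

With nginx stopped on node 1, its priority falls to 95, below the 99 of a node 2 whose check passes. A node on which nginx is not running (for example after the notify_backup hook has stopped it) would fail the check as well and fall to 94, so the comparison only favors node 2 when nginx is running there.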