Cloudera Manager Daily Operations

HDFS URI: hdfs://active-namenode[:8020]/ or hdfs://nameservice-name/

Web Interface:

hadoop NameNode: 50070

hadoop SecondaryNameNode: 50090

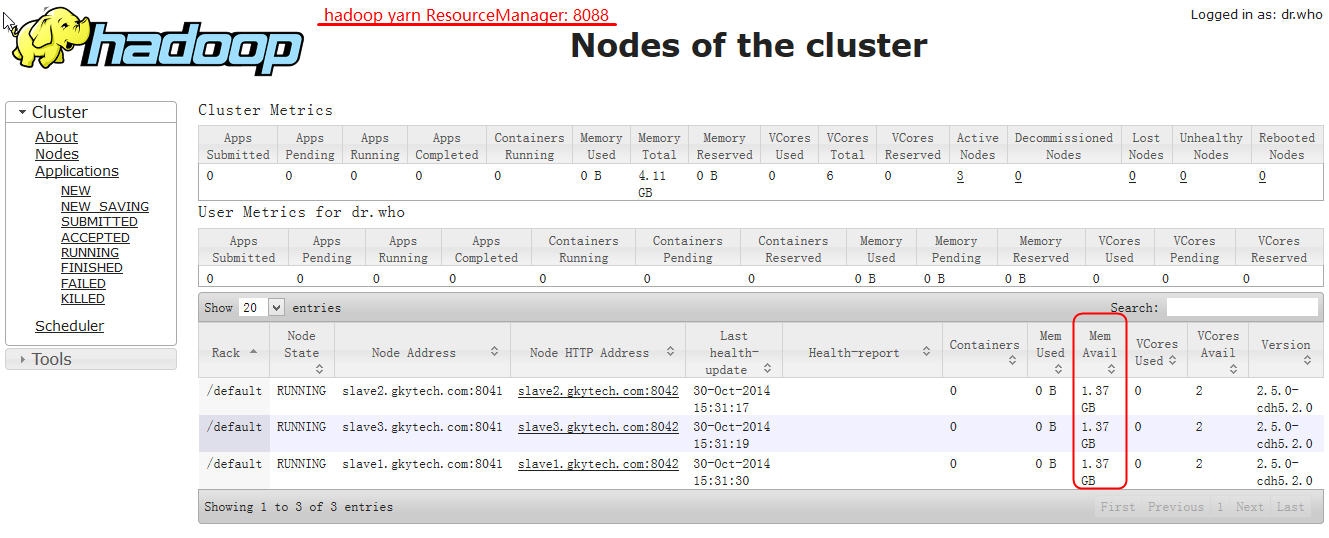

hadoop yarn ResourceManager: 8088

hadoop yarn NodeManager: 8042

hadoop MapReduce JobHistory Server: 19888
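As a quick reference, the default ports listed above can be turned into URLs with a small shell helper. This is only a sketch: the function name `web_ui_url` and the host `nn1.example.com` are hypothetical, and the ports are the defaults from this list, which a given cluster may override.

```shell
# Hypothetical helper: map a role name to its default web UI URL.
# Ports are the defaults listed above; adjust them if your cluster differs.
web_ui_url() {
    role=$1
    host=$2
    case "$role" in
        namenode)          port=50070 ;;
        secondarynamenode) port=50090 ;;
        resourcemanager)   port=8088 ;;
        nodemanager)       port=8042 ;;
        jobhistory)        port=19888 ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    echo "http://$host:$port/"
}

# Example host name is a placeholder.
web_ui_url namenode nn1.example.com
```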

Tune parameters to optimize performance:

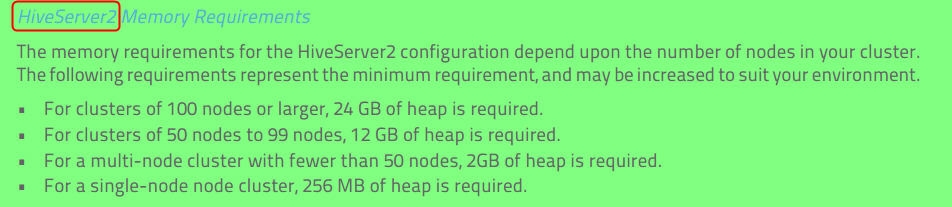

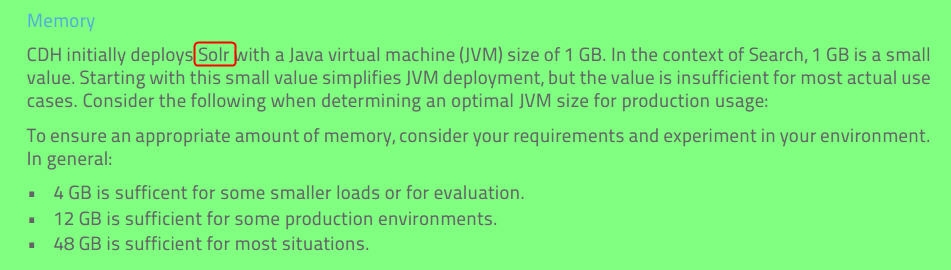

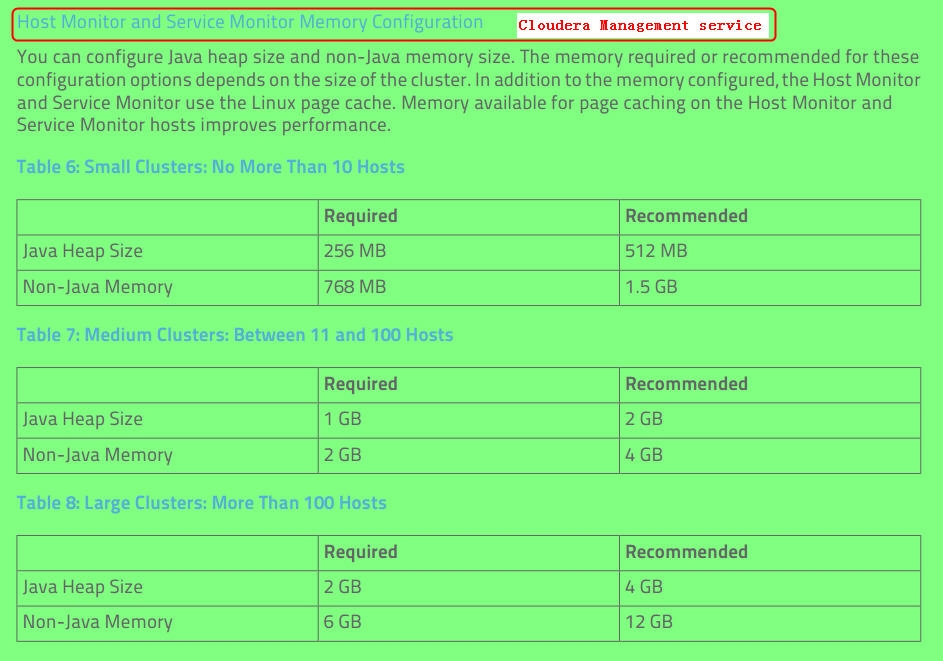

Search for "heap", "memory", and "non-java" in the Configuration tab of every service, then tune the values appropriately.

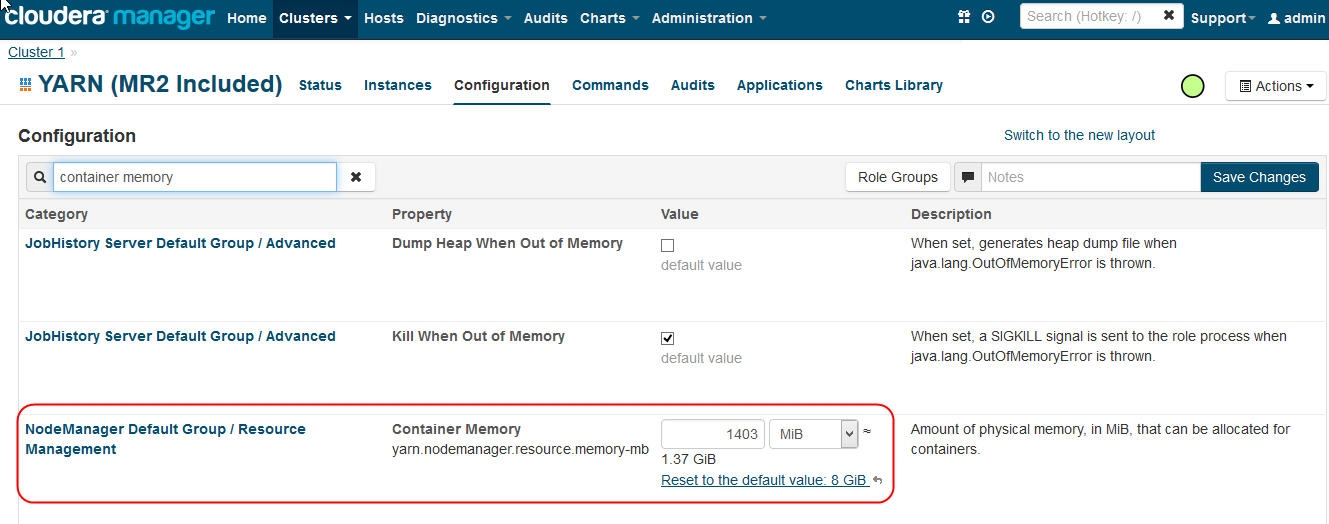

Change Container Memory used by ResourceManager and NodeManager when running jobs:

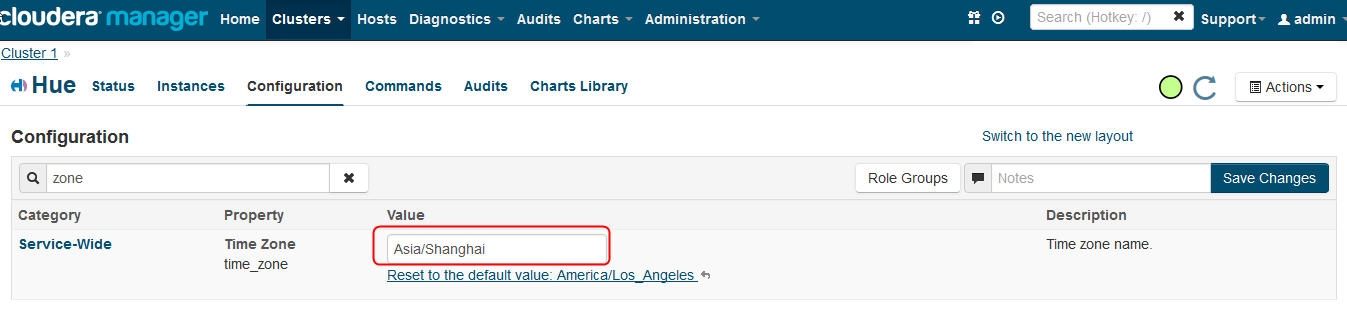

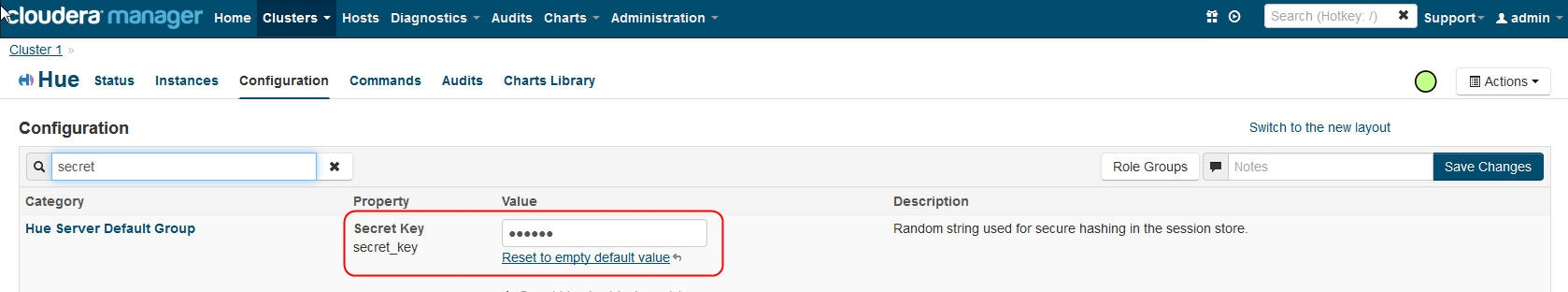

Change Time Zone and set secret key for HUE:

restart HUE service

Enable LZO functionality on the cluster (make sure the lzo package is installed on every node and the GPLEXTRAS parcel is activated in CM5):

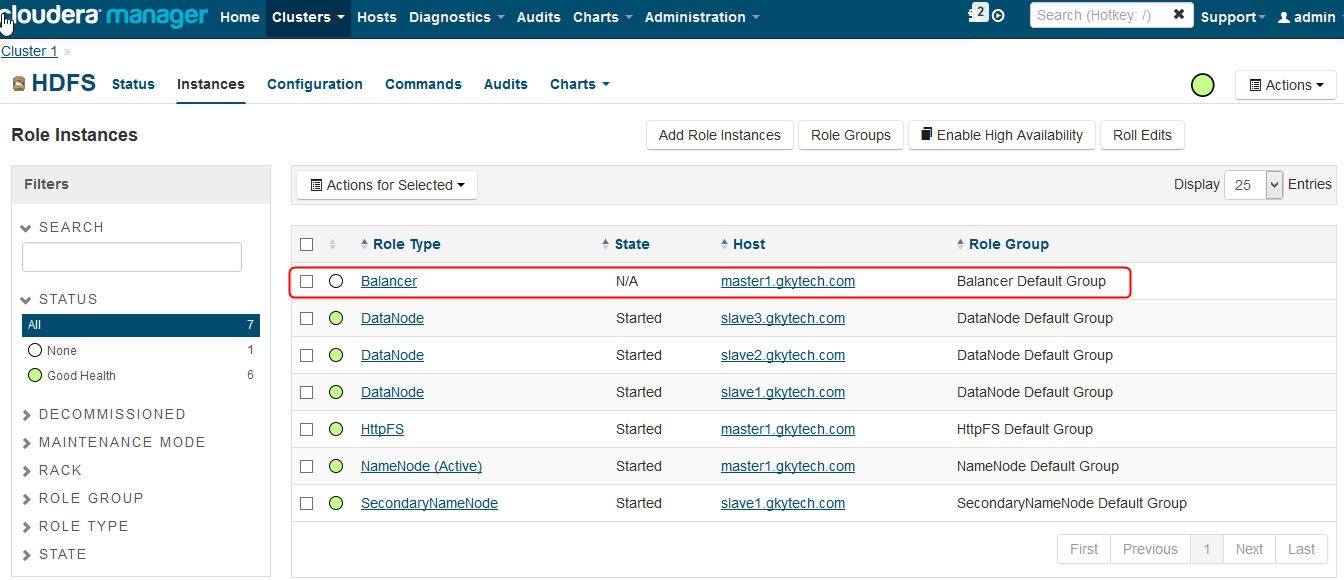

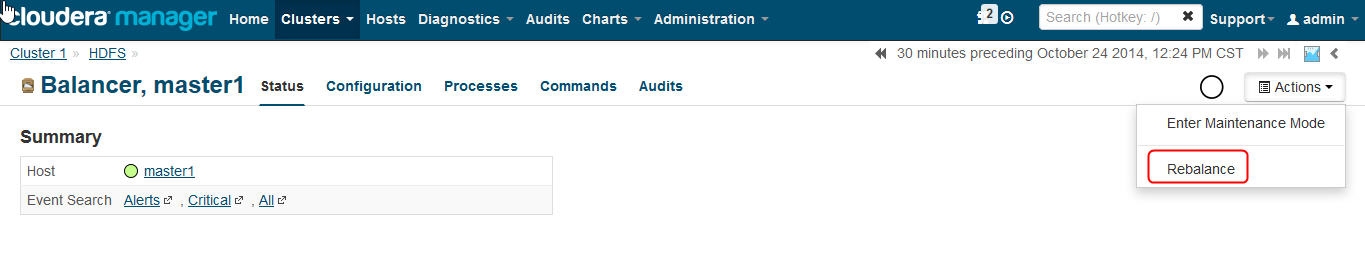

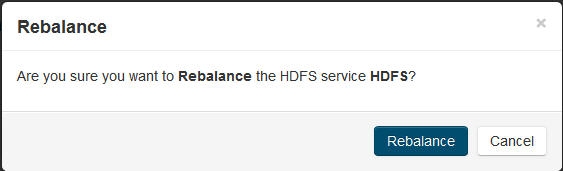

HDFS

1. Go to the HDFS service.

2. Click the Configuration tab.

3. Search for the io.compression.codecs property.

4. In the Compression Codecs property, click in the field, then click the + sign to open a new value field.

5. Add the following two codecs:

com.hadoop.compression.lzo.LzoCodec

com.hadoop.compression.lzo.LzopCodec

6. Save your configuration changes.
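After the change is saved and the client configuration redeployed, the codec list can be sanity-checked from the shell. The sketch below assumes a hypothetical helper named `has_lzo_codecs` that takes the comma-separated codec list as its argument. On a gateway node the list could come from `hdfs getconf -confKey io.compression.codecs`. The sample list in the last line is illustrative only.

```shell
# Hypothetical check: succeed only if both LZO codecs appear in the
# comma-separated codec list passed as the first argument.
has_lzo_codecs() {
    # Strip spaces and wrap in commas so each codec can be matched exactly.
    codecs=",$(printf '%s' "$1" | tr -d ' '),"
    for c in com.hadoop.compression.lzo.LzoCodec com.hadoop.compression.lzo.LzopCodec; do
        case "$codecs" in
            *",$c,"*) ;;
            *) echo "missing codec: $c" >&2; return 1 ;;
        esac
    done
    return 0
}

# On a node with deployed client configuration, one way to feed it:
#   has_lzo_codecs "$(hdfs getconf -confKey io.compression.codecs)"
has_lzo_codecs "org.apache.hadoop.io.compress.DefaultCodec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec" \
    && echo "LZO codecs configured"
```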

Oozie

1. Go to /var/lib/oozie on each Oozie server and, even if the LZO JAR is already present, symlink the Hadoop LZO JAR:

ln -s /opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/hadoop-lzo.jar /var/lib/oozie/

CDH 5 JAR location: /opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/hadoop-lzo.jar
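Because the symlink has to be created on every Oozie server, it may help to wrap the command so that it can be re-run safely. The following is a sketch, not the documented procedure: `link_jar` is a hypothetical helper, and it uses `ln -sf`, which replaces an existing file or link of the same name in the target directory.

```shell
# Hypothetical helper: symlink a JAR into a target directory, replacing any
# existing file or link of the same name (ln -sf).
link_jar() {
    jar=$1
    dest_dir=$2
    if [ ! -f "$jar" ]; then
        echo "JAR not found: $jar" >&2
        return 1
    fi
    ln -sf "$jar" "$dest_dir/"
}

# Paths taken from the step above; run as root on each Oozie server.
link_jar /opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/hadoop-lzo.jar /var/lib/oozie \
    || echo "skipped: GPLEXTRAS parcel JAR not found on this host"
```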

Sqoop 2

1. Add the following entries to the Sqoop Service Environment Advanced Configuration Snippet:

HADOOP_CLASSPATH=$HADOOP_CLASSPATH:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/*

JAVA_LIBRARY_PATH=$JAVA_LIBRARY_PATH:/opt/cloudera/parcels/GPLEXTRAS/lib/hadoop/lib/native

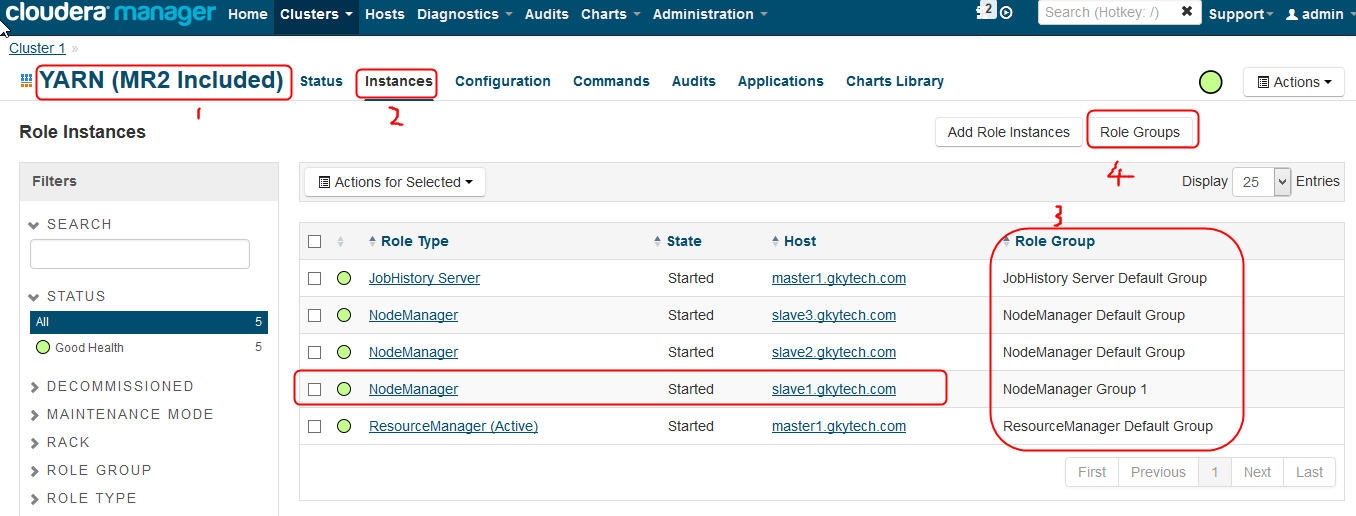

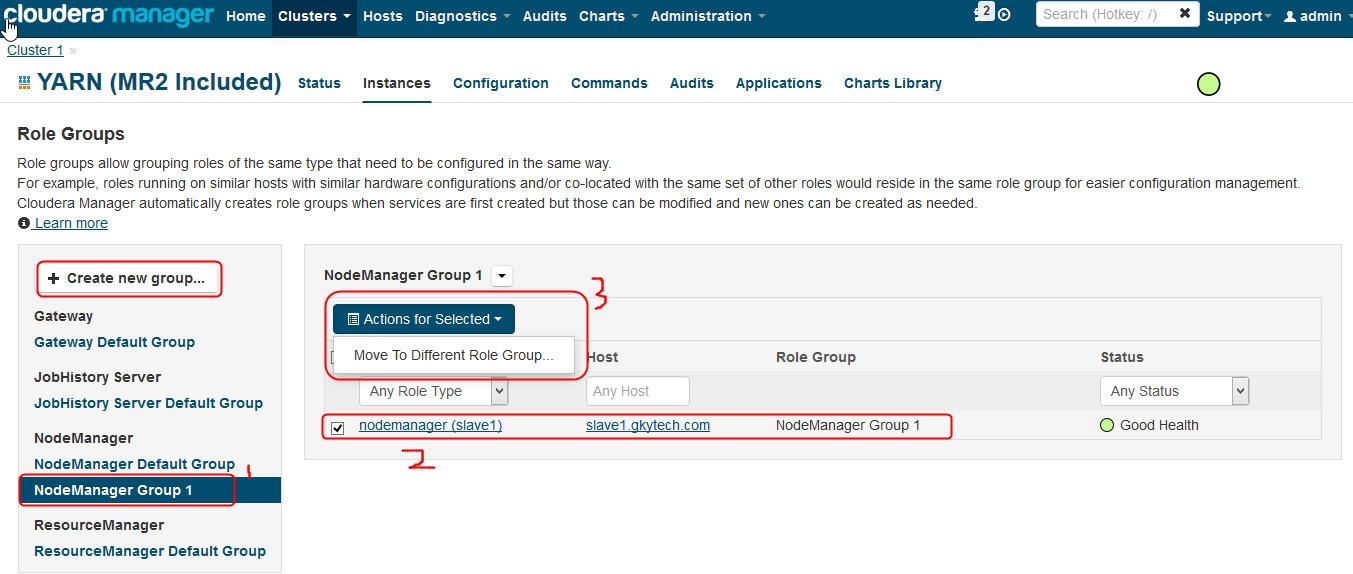

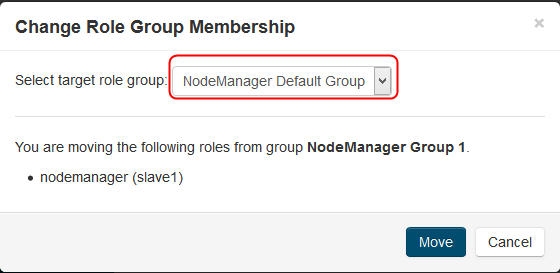

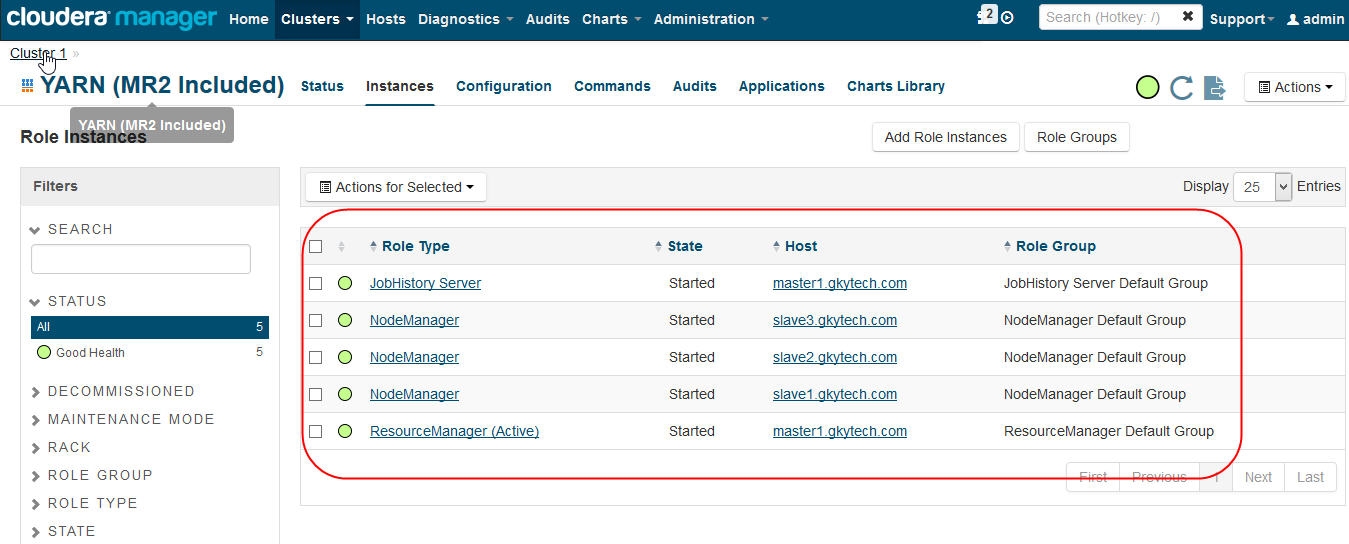

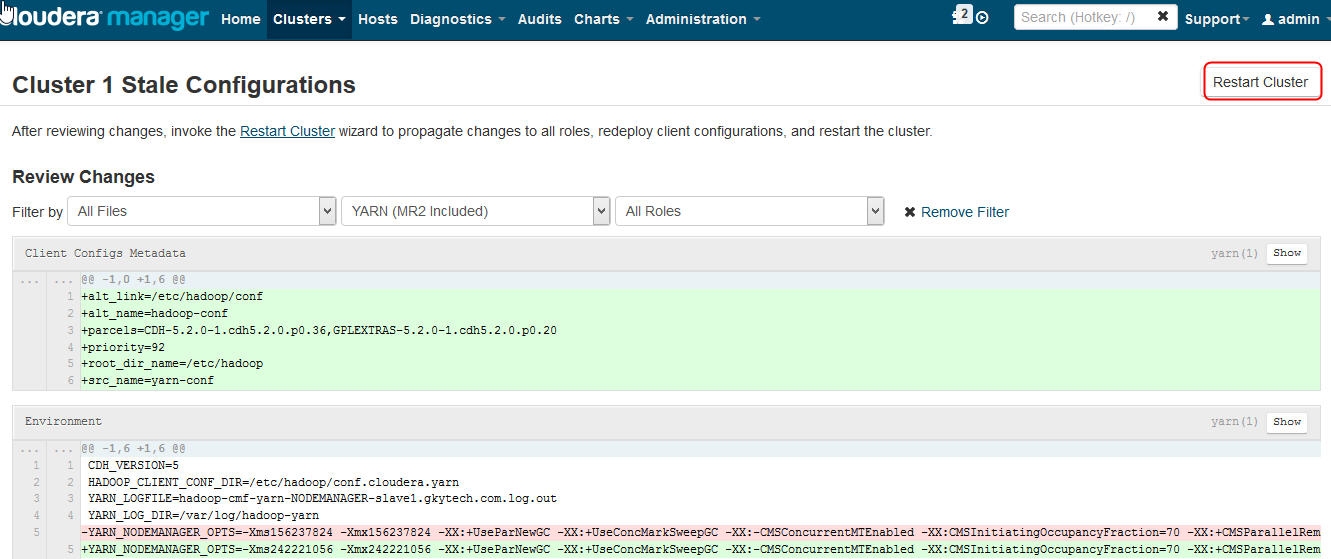

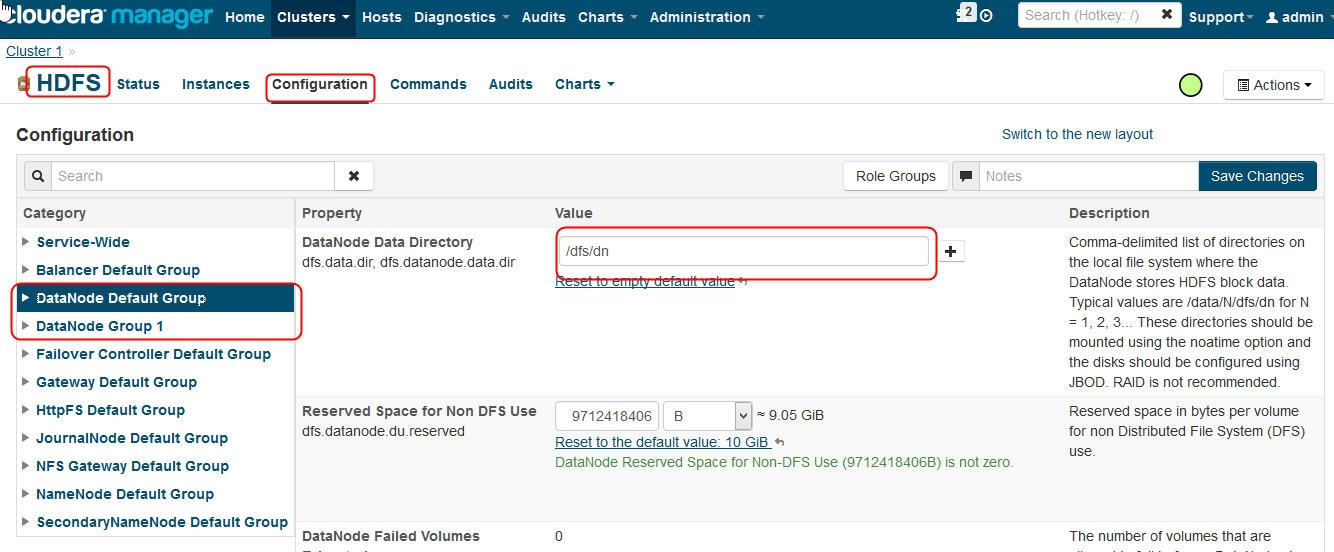

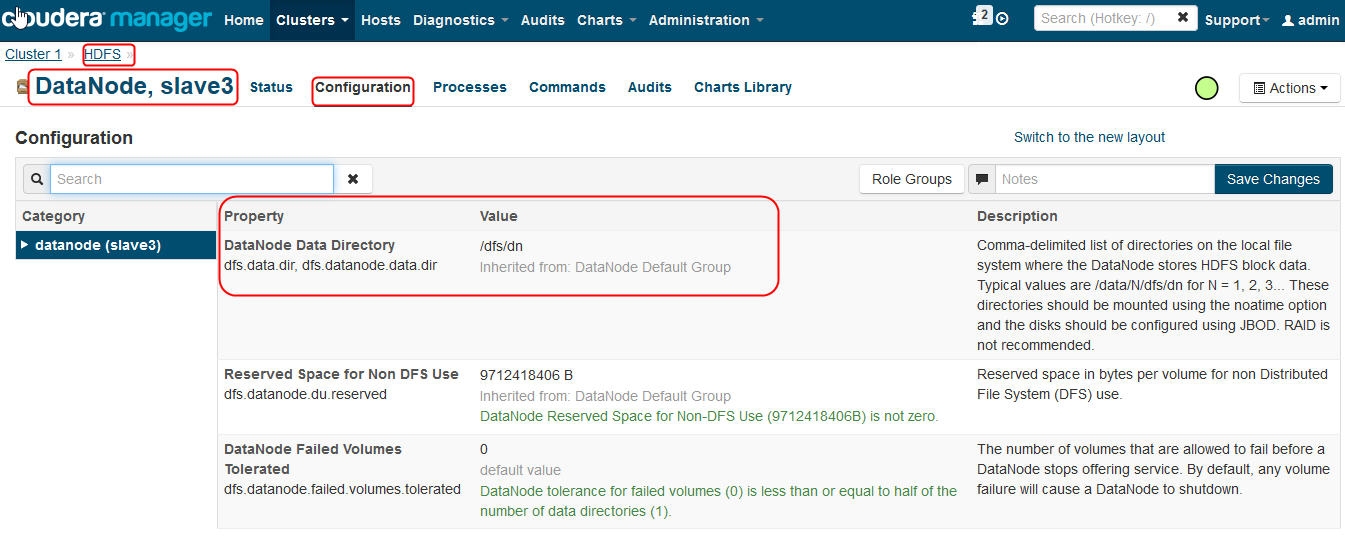

Move a node to another role group:

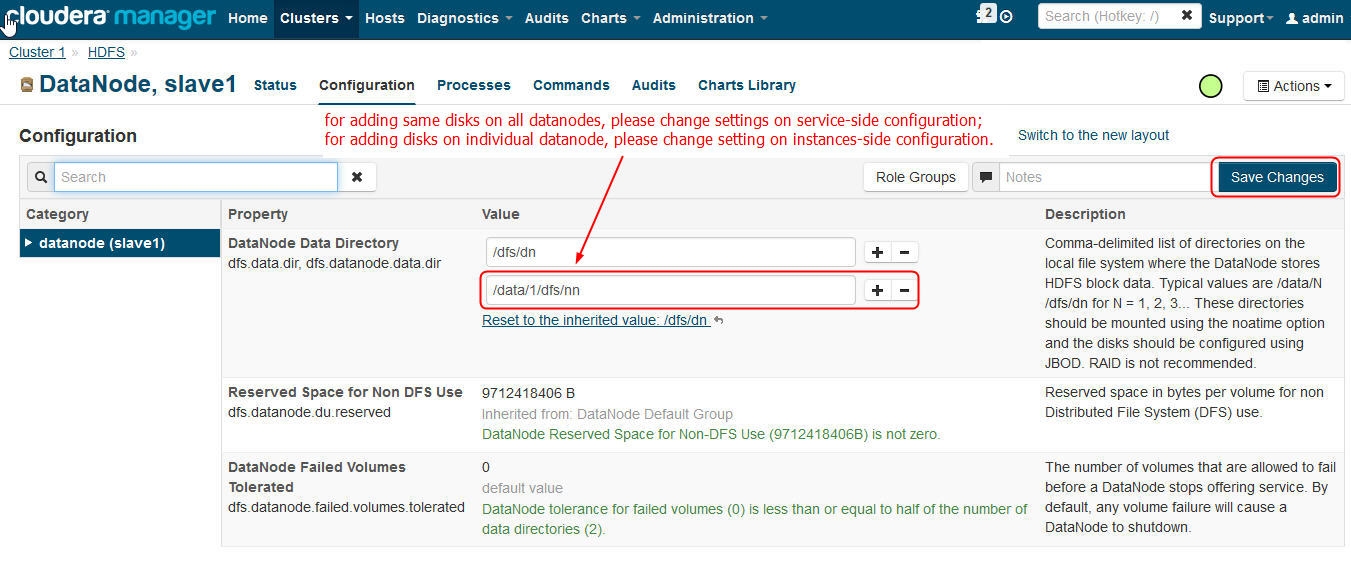

Service-side configuration and instance-side configuration:

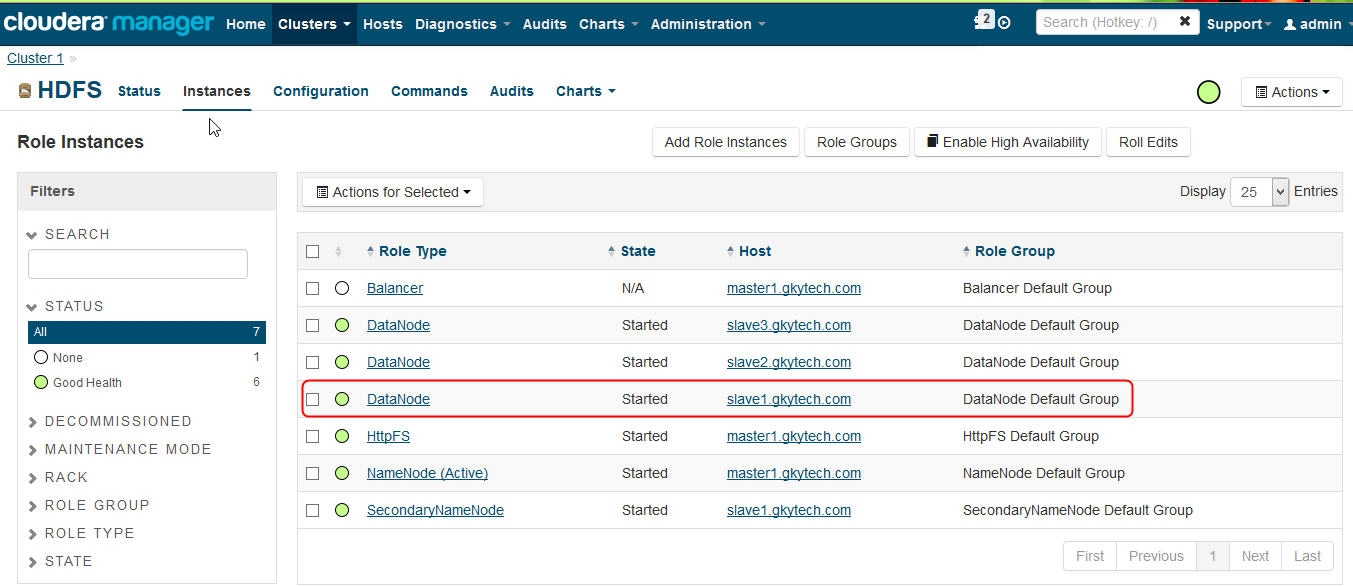

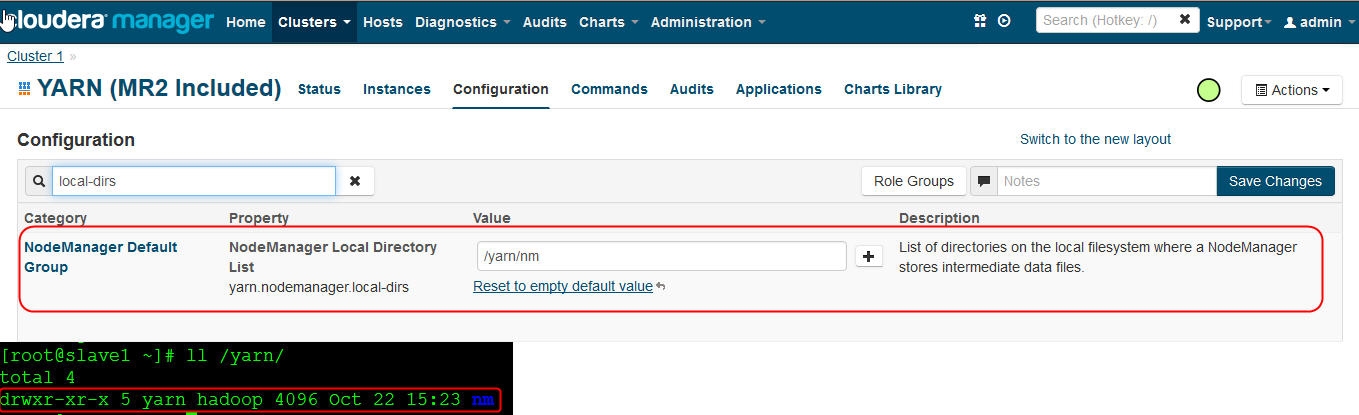

Add disks to DataNodes:

1. On the slave1 DataNode, attach the new disks. This example adds only one disk.

2. fdisk /dev/sdb (one partition per disk)

3. mkfs.ext4 /dev/sdb1

4. mkdir -p /data/1

5. vi /etc/fstab and add the following line:

/dev/sdb1 /data/1 ext4 defaults,noatime 0 0

6. mount -a
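Editing /etc/fstab by hand is easy to repeat by accident when several disks or nodes are involved. The sketch below shows one way to append the entry only when the mount point is not already listed. The helper name `add_fstab_entry` is hypothetical, the ext4 options are copied from the line above, and the demonstration writes to a scratch file rather than the live /etc/fstab.

```shell
# Hypothetical helper: append a mount entry to an fstab file only if the
# mount point is not already listed in a non-comment line.
add_fstab_entry() {
    fstab=$1
    device=$2
    mount_point=$3
    if awk -v mp="$mount_point" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        echo "already present: $mount_point"
        return 0
    fi
    printf '%s %s ext4 defaults,noatime 0 0\n' "$device" "$mount_point" >> "$fstab"
}

# Demonstration against a scratch file instead of /etc/fstab.
scratch=$(mktemp)
add_fstab_entry "$scratch" /dev/sdb1 /data/1
add_fstab_entry "$scratch" /dev/sdb1 /data/1   # second call leaves the file unchanged
cat "$scratch"
rm -f "$scratch"
```

On a real node the first argument would be /etc/fstab, followed by `mount -a` as in the steps above.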

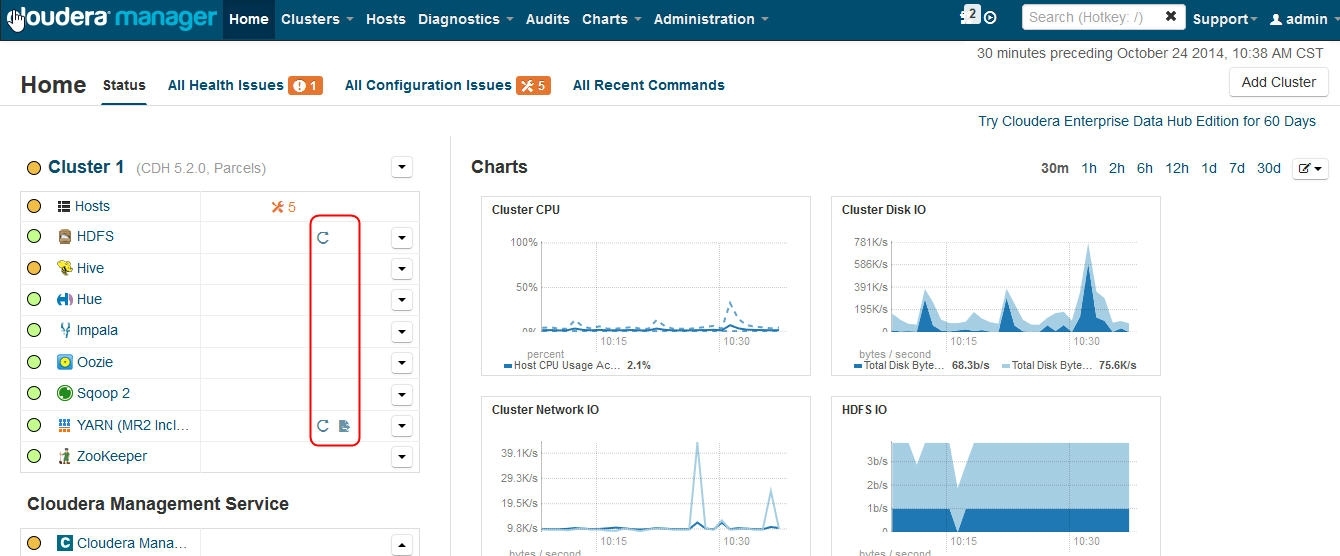

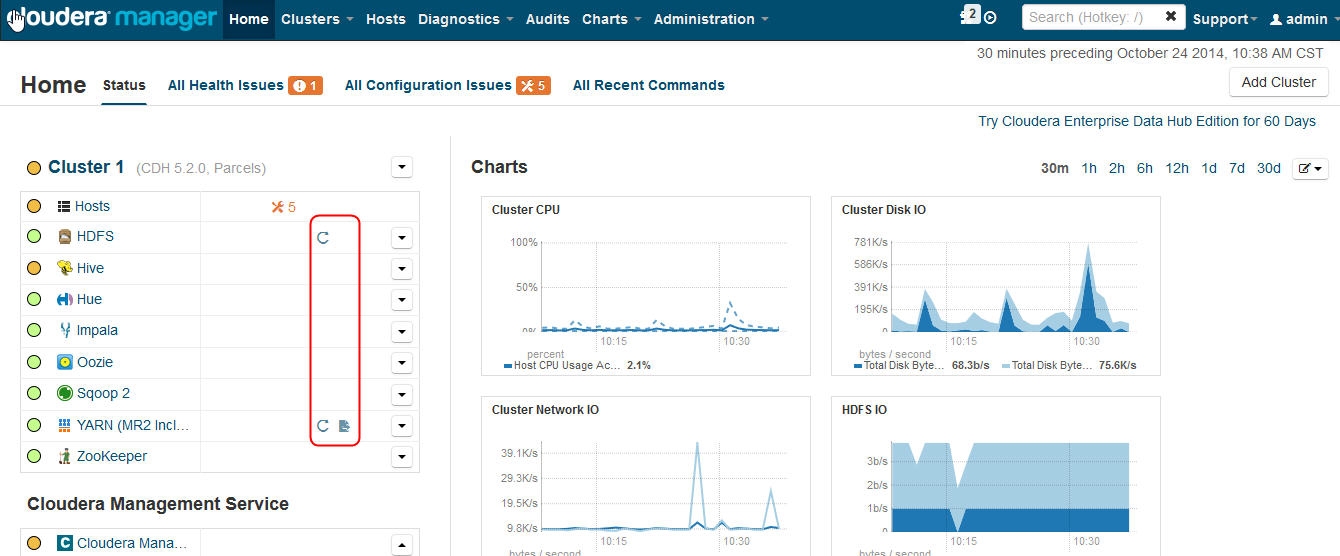

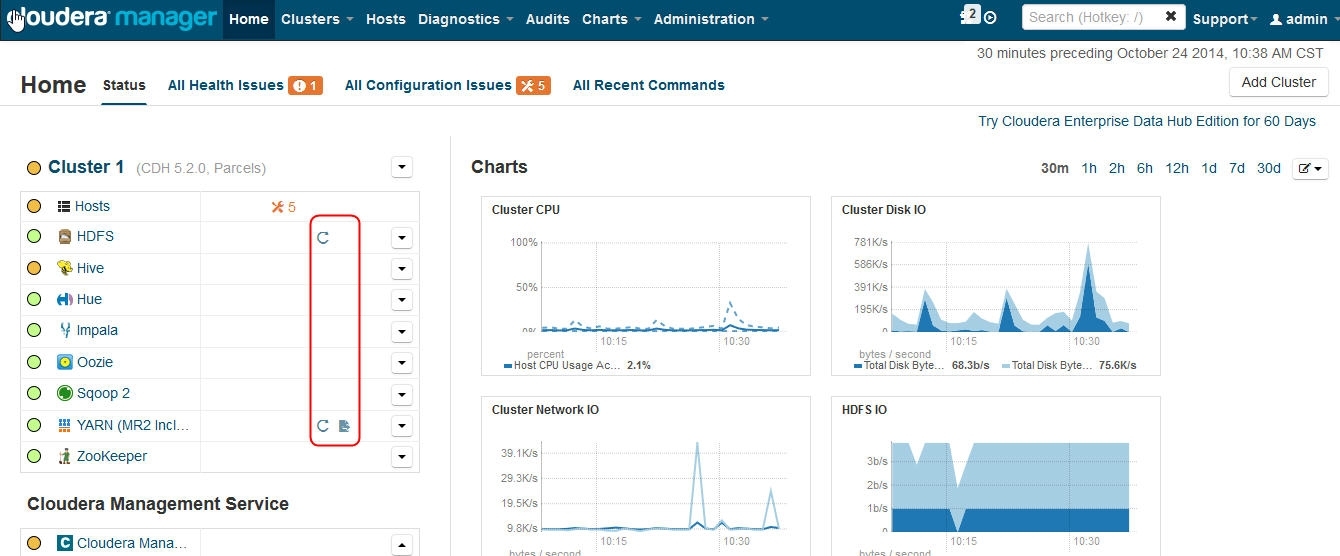

The order in which to start services is:

1. Cloudera Management Service

2. Zookeeper

3. HDFS

4. Solr

5. Flume

6. HBase

7. KS_Indexer

8. YARN

9. Hive

10. Impala

11. Oozie

12. Sqoop2

13. Hue

The order in which to stop services is:

1. Hue

2. Sqoop2

3. Oozie

4. Impala

5. Hive

6. YARN

7. KS_Indexer

8. HBase

9. Flume

10. Solr

11. HDFS

12. Zookeeper

13. Cloudera Management Service
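The stop order is simply the start order reversed, so only one list needs to be maintained. A minimal sketch, assuming the service names exactly as written above (the function names `start_order` and `stop_order` are hypothetical):

```shell
# Start order as listed above, one service per line.
start_order() {
    cat <<'EOF'
Cloudera Management Service
Zookeeper
HDFS
Solr
Flume
HBase
KS_Indexer
YARN
Hive
Impala
Oozie
Sqoop2
Hue
EOF
}

# Stop order: the same list, last line first.
stop_order() {
    start_order | awk '{ line[NR] = $0 } END { for (i = NR; i >= 1; i--) print line[i] }'
}

stop_order
```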

Allow other users to run MapReduce jobs on Hadoop:

On the cluster nodes:

useradd username (you can integrate AD with Zentyal)

[ optional: groupadd supergroup; gpasswd -a username supergroup ]

sudo -u hdfs hadoop fs -mkdir /user/username (you can integrate AD with HUE)

sudo -u hdfs hadoop fs -chown username /user/username

Log in as username and verify access:

hadoop fs -ls /
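When several users need to be onboarded, the commands above can be generated per user and reviewed before they are run. This is a sketch only: `hdfs_home_cmds` is a hypothetical helper, `alice` is a placeholder user, and the username check (lowercase letters, digits, `_` and `-`) is an assumption rather than a Hadoop requirement.

```shell
# Hypothetical helper: print the commands that create a local account and an
# HDFS home directory for one user, so they can be reviewed before running.
hdfs_home_cmds() {
    user=$1
    case "$user" in
        ''|*[!a-z0-9_-]*) echo "invalid username: $user" >&2; return 1 ;;
    esac
    echo "useradd $user"
    echo "sudo -u hdfs hadoop fs -mkdir /user/$user"
    echo "sudo -u hdfs hadoop fs -chown $user /user/$user"
}

# Review the output first; on a cluster node it can then be piped to `sh`.
hdfs_home_cmds alice
```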

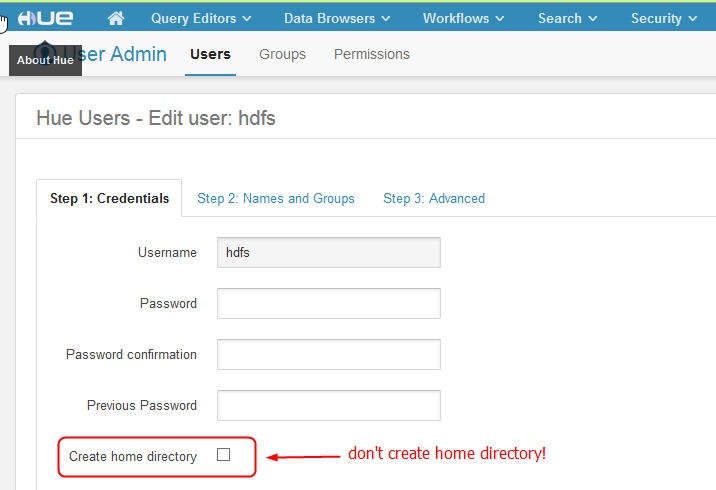

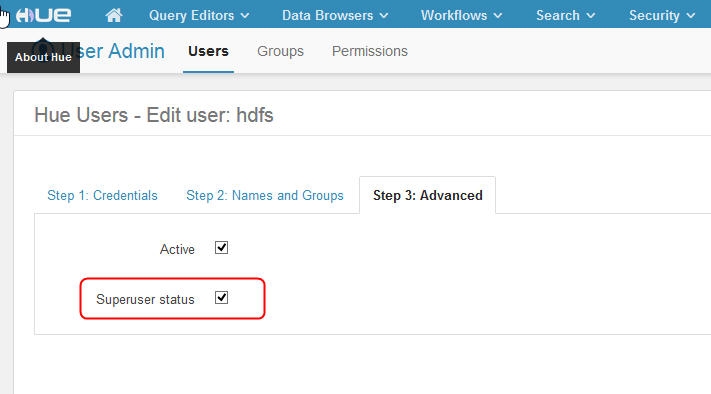

Add hdfs user in HUE web UI:

Deleting Services

1. stop service

2. delete service

3. remove related user data (double check carefully)

$ sudo rm -Rf /var/lib/flume-ng /var/lib/hadoop* /var/lib/hue /var/lib/navigator /var/lib/oozie /var/lib/solr /var/lib/sqoop* /var/lib/zookeeper

$ sudo rm -Rf /dfs /mapred /yarn
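Since `rm -Rf` is irreversible, it can be worth listing which of these paths actually exist on the host before removing anything. A minimal sketch, with `existing_paths` as a hypothetical helper name:

```shell
# Hypothetical helper: print only those candidate paths that exist, so the
# list can be reviewed before anything is removed.
existing_paths() {
    for p in "$@"; do
        if [ -e "$p" ]; then
            echo "$p"
        fi
    done
}

# The shell expands the globs; patterns that match nothing stay literal and
# are filtered out by the -e test.
existing_paths /var/lib/flume-ng /var/lib/hadoop* /var/lib/hue /var/lib/navigator \
    /var/lib/oozie /var/lib/solr /var/lib/sqoop* /var/lib/zookeeper /dfs /mapred /yarn
```

Only after the printed list has been checked would the `rm -Rf` commands above be run.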

Deleting Hosts

1. Decommission host

2. Stop the Cloudera Manager Agent

3. Delete host

Note: Decommissioning applies only to the HDFS DataNode, MapReduce TaskTracker, YARN NodeManager, and HBase RegionServer roles.

Install the JCE Policy File:

unzip -x UnlimitedJCEPolicyJDK7.zip

cd UnlimitedJCEPolicy

cp local_policy.jar US_export_policy.jar /usr/java/default/jre/lib/security/