HBase 1.0.1 Study Notes (1): Cluster Setup

Lu Chunli's work notes. Who says programmers can't have an artistic side?

An HBase cluster depends on Hadoop (see "Hadoop 2.6.0 HA Cluster Setup") and ZooKeeper (see "ZooKeeper 3.4.6 Cluster Configuration").

The configuration below follows http://hbase.apache.org/book.html; the same guide ships with the release as hbase-1.0.1.1/docs/book.html.

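Install JDK 1.7 or later (HBase 1.0 no longer supports JDK 1.6; this setup uses jdk1.7)

Installation steps omitted.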

Download HBase

Download the HBase release package from http://hbase.apache.org/

Extract it: % tar -xzv -f hbase-x.y.z.tar.gz

Configure the HBase environment variables

    # All software in the Hadoop stack runs as the hadoop user
    vim /home/hadoop/.bash_profile
    HBASE_HOME=/usr/local/hbase1.0.1
    PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$ZOOKEEPER_HOME/conf:$HBASE_HOME/bin:$PATH

Configuration file hbase-env.sh

export JAVA_HOME=/usr/local/jdk1.7

export HBASE_HOME=/usr/local/hbase1.0.1

# HBase uses the HDFS HA address, so point the classpath at Hadoop's conf directory (etc/hadoop in Hadoop 2.x)

export HBASE_CLASSPATH=/usr/local/hadoop2.6.0/etc/hadoop

# HBase log directory

export HBASE_LOG_DIR=${HBASE_HOME}/logs

# Disable the ZooKeeper instance bundled with HBase

export HBASE_MANAGES_ZK=false

Configuration file hbase-site.xml

    <configuration>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
        <description>true for fully-distributed</description>
      </property>
      <property>
        <name>hbase.tmp.dir</name>
        <value>/usr/local/hbase1.0.1/data/tmp</value>
        <description>Temporary directory on the local file system, used by HBase at runtime</description>
      </property>
      <property>
        <name>hbase.local.dir</name>
        <value>/usr/local/hbase1.0.1/data/local</value>
        <description>Local storage directory on the local file system</description>
      </property>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://cluster/hbase</value>
        <description>Root directory where HBase stores its data on HDFS (fs.defaultFS + directory name)</description>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>nnode,dnode1,dnode2</value>
        <description>List of ZooKeeper servers</description>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/usr/local/zookeeper3.4.6/data</value>
        <description>Property from ZooKeeper config zoo.cfg.</description>
      </property>
      <property>
        <name>zookeeper.session.timeout</name>
        <value>120000</value>
        <description>2 minutes; the session timeout between a RegionServer and ZooKeeper</description>
      </property>
      <property>
        <name>hbase.zookeeper.property.tickTime</name>
        <value>2000</value>
        <description>Property from ZooKeeper config zoo.cfg</description>
      </property>
      <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>2181</value>
        <description>Property from ZooKeeper config zoo.cfg</description>
      </property>
      <!-- HMaster parameters -->
      <property>
        <name>hbase.master.info.bindAddress</name>
        <value>nnode</value>
        <description>Bind address for the HBase Master web UI; the default is 0.0.0.0</description>
      </property>
      <property>
        <name>hbase.master.info.port</name>
        <value>16010</value>
        <description>Port for the HBase Master web UI; -1 disables the web UI</description>
      </property>
      <!-- HRegionServer parameters -->
      <property>
        <name>hbase.regionserver.port</name>
        <value>16020</value>
        <description>The port the HBase RegionServer binds to.</description>
      </property>
      <property>
        <name>hbase.regionserver.info.port</name>
        <value>16030</value>
        <description>The port for the HBase RegionServer web UI</description>
      </property>
      <!-- On the other machines, set this to that host's own hostname -->
      <property>
        <name>hbase.regionserver.info.bindAddress</name>
        <value>nnode</value>
        <description>The address for the HBase RegionServer web UI</description>
      </property>
    </configuration>
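Because the same file is copied to every node and then adjusted per host, it can help to read individual values back out of it from a script. The following is only a rough sketch: get_hbase_prop is a hypothetical helper (not part of HBase), it uses plain text matching rather than a real XML parser, and it assumes each value tag directly follows its name tag, as in the file above.

```shell
#!/bin/sh
# get_hbase_prop FILE NAME  (hypothetical helper, not shipped with HBase)
# Prints the <value> of property NAME from an hbase-site.xml style file.
# Assumption: <value> directly follows <name>, with only whitespace between.
get_hbase_prop() (
  file=$1
  # Escape the dots so "hbase.rootdir" is matched literally
  pattern=$(printf '%s' "$2" | sed 's/\./\\./g')
  # Join all lines so the match also works on multi-line files
  tr '\n' ' ' < "$file" \
    | grep -oE "<name>${pattern}</name>[[:space:]]*<value>[^<]*</value>" \
    | head -n 1 \
    | sed -E 's#.*<value>([^<]*)</value>#\1#'
)
```

For example, calling get_hbase_prop conf/hbase-site.xml hbase.zookeeper.quorum on the file above should print the comma-separated ZooKeeper host list; an unknown property name prints nothing.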

Configure the regionservers file

    [hadoop@nnode conf]$ pwd
    /usr/local/hbase1.0.1/conf
    # Define the RegionServers
    [hadoop@nnode conf]$ cat regionservers
    dnode1
    dnode2
    [hadoop@nnode conf]$

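Copy this hbase directory to the other two hosts, dnode1 and dnode2, then start HBase on the HMaster node (nnode)

    # Start ZooKeeper
    # Start Hadoop
    # Start HBase
    bin/start-hbase.sh
    # By default HBase runs a single Master; additional Masters can also be started:
    # hbase-daemon.sh start master
    # The additional Masters stay idle, however; one of them takes over only after the active Master goes down.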

Verify the installation

    [hadoop@nnode ~]$ jps
    12969 ResourceManager
    12800 DFSZKFailoverController
    12328 JournalNode
    12281 QuorumPeerMain
    13286 Jps
    12514 NameNode
    [hadoop@nnode ~]$ start-hbase.sh
    starting master, logging to /usr/local/hbase1.0.1/logs/hbase-hadoop-master-nnode.out
    dnode2: starting regionserver, logging to /usr/local/hbase1.0.1/logs/hbase-hadoop-regionserver-dnode2.out
    dnode1: starting regionserver, logging to /usr/local/hbase1.0.1/logs/hbase-hadoop-regionserver-dnode1.out
    -- HMaster
    [hadoop@nnode ~]$ jps
    12969 ResourceManager
    12800 DFSZKFailoverController
    13413 HMaster
    12328 JournalNode
    12281 QuorumPeerMain
    12514 NameNode
    13513 Jps
    [hadoop@nnode ~]$
    -- RegionServer
    [hadoop@dnode1 ~]$ jps
    12477 DFSZKFailoverController
    12179 QuorumPeerMain
    12309 NameNode
    12233 JournalNode
    12637 Jps
    12377 DataNode
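Reading jps listings by eye makes it easy to overlook a daemon that never came up. The sketch below is one way to automate the check; missing_daemons is a hypothetical helper that reads jps output on standard input and reports which of the expected process names are absent.

```shell
#!/bin/sh
# missing_daemons NAME...  (hypothetical helper)
# Reads `jps` output ("<pid> <class>" per line) on stdin, prints one
# "missing: NAME" line for each expected daemon that is not listed,
# and returns non-zero if any daemon is missing.
missing_daemons() (
  seen=$(awk '{print $2}')          # keep only the class-name column
  rc=0
  for name in "$@"; do
    if ! printf '%s\n' "$seen" | grep -qxF "$name"; then
      echo "missing: $name"
      rc=1
    fi
  done
  exit $rc
)
```

On the master this could be run as jps | missing_daemons HMaster QuorumPeerMain NameNode, and on a RegionServer host as jps | missing_daemons HRegionServer DataNode. The daemon names to pass depend on which roles each host is supposed to carry.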

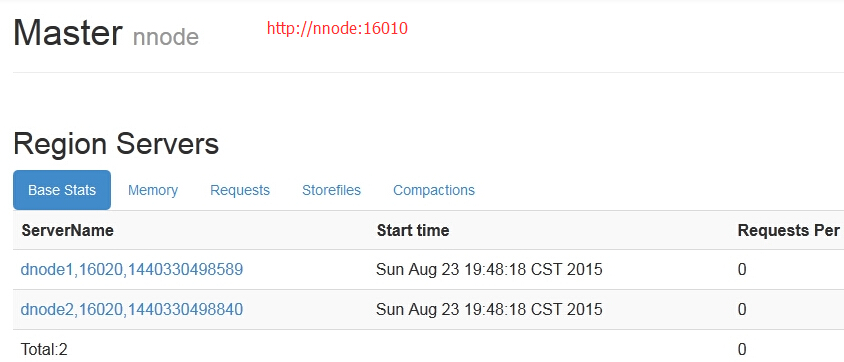

Open the web UI of the Master node

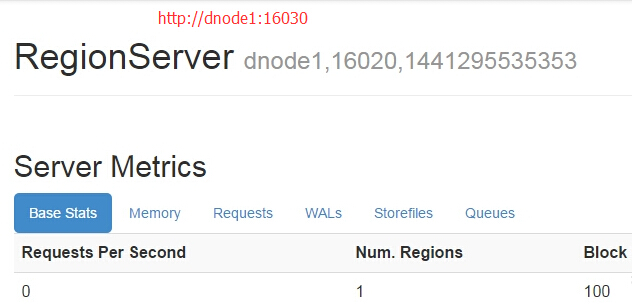

Open the web UI of a RegionServer node

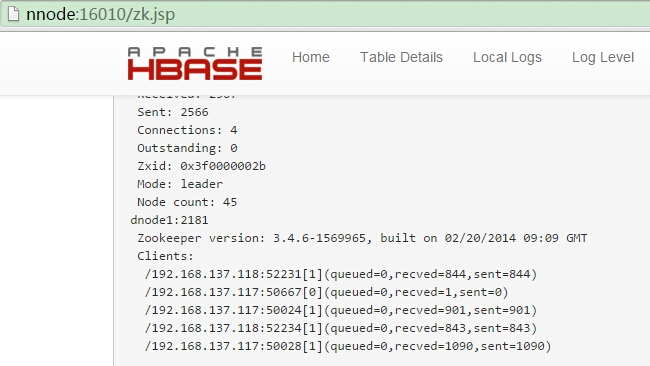

View the ZooKeeper status from the Master node's web UI

Using the HBase shell command-line tool

bin/hbase shell

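Once connected, you are in the HBase shell environment

    HBase Shell; enter 'help<RETURN>' for list of supported commands.
    Type "exit<RETURN>" to leave the HBase Shell
    Version 1.0.1, r66a93c09df3b12ff7b86c39bc8475c60e15af82d, Fri Apr 17 22:14:06 PDT 2015
    hbase(main):001:0>

Type help to see detailed help for each command. Note that table names, rows, and columns must be quoted when used in a command; the examples here use single quotes.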

Create a table named test with a single column family, cf.

    # Create the table
    hbase(main):004:0> create 'test', 'cf'
    0 row(s) in 0.7540 seconds
    => Hbase::Table - test
    # List the table
    hbase(main):005:0> list 'test'
    TABLE
    test
    1 row(s) in 0.0150 seconds
    => ["test"]
    # Insert data
    hbase(main):006:0> put 'test', 'row1', 'cf:id', '1000'
    0 row(s) in 0.3310 seconds
    hbase(main):007:0> put 'test', 'row1', 'cf:name', 'lucl'
    0 row(s) in 0.0250 seconds
    hbase(main):008:0> put 'test', 'row2', 'cf:c', 'val001'
    0 row(s) in 0.0220 seconds
    # The three commands above insert three cells spread over two rows; the first has
    # rowkey row1, column cf:id, and value 1000. An HBase column name consists of the
    # column family prefix and the column qualifier, separated by a colon.
    # Scan the table
    hbase(main):009:0> scan 'test'
    ROW COLUMN+CELL
    row1 column=cf:id, timestamp=1440335878007, value=1000
    row1 column=cf:name, timestamp=1440335886167, value=lucl
    row2 column=cf:c, timestamp=1440335892630, value=val001
    2 row(s) in 0.1560 seconds
    # Get a single row
    hbase(main):010:0> get 'test', 'row1'
    COLUMN CELL
    cf:id timestamp=1440335878007, value=1000
    cf:name timestamp=1440335886167, value=lucl
    2 row(s) in 0.0610 seconds
    # Disable the table
    hbase(main):011:0> disable 'test'
    0 row(s) in 2.2920 seconds
    # Drop the table
    hbase(main):012:0> drop 'test'
    0 row(s) in 0.7670 seconds
    # List the table again
    hbase(main):013:0> list 'test'
    TABLE
    0 row(s) in 0.0090 seconds
    => []
    # Quit
    hbase(main):014:0> quit
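The same create, put, scan, get, disable, drop sequence can be replayed without typing it interactively. As a minimal sketch, the hypothetical function hbase_smoke_script below only prints the shell commands for a given table and column family; it assumes the table name is a scratch name that is safe to drop, and that the hbase launcher accepts commands piped on standard input.

```shell
#!/bin/sh
# hbase_smoke_script TABLE FAMILY  (hypothetical helper)
# Prints HBase shell commands that create a scratch table, write one cell,
# read it back, and drop the table again. It does not contact HBase itself.
hbase_smoke_script() (
  table=$1
  family=$2
  cat <<EOF
create '${table}', '${family}'
put '${table}', 'row1', '${family}:id', '1000'
scan '${table}'
get '${table}', 'row1'
disable '${table}'
drop '${table}'
exit
EOF
)
```

A run against the cluster would then look like hbase_smoke_script test cf | bin/hbase shell. Keeping the generator separate from the shell invocation makes it possible to inspect the commands first.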

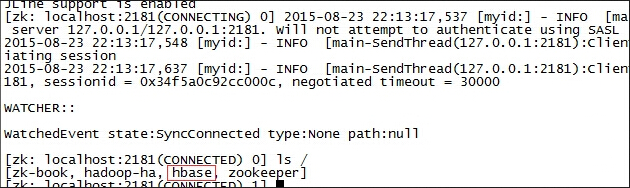

HBase's data is visible through ZooKeeper

HBase's data is likewise recorded on HDFS

    [hadoop@dnode1 ~]$ hdfs dfs -ls -R /hbase|grep meta
    -rw-r--r-- 2 hadoop hadoop 1905 2015-08-23 21:48 /hbase/WALs/dnode2,16020,1440330498840/dnode2%2C16020%2C1440330498840..meta.1440334123526.meta
    -rw-r--r-- 2 hadoop hadoop 83 2015-08-23 21:48 /hbase/WALs/dnode2,16020,1440330498840/dnode2%2C16020%2C1440330498840..meta.1440337724324.meta
    drwxr-xr-x - hadoop hadoop 0 2015-06-22 21:44 /hbase/data/hbase/meta
    drwxr-xr-x - hadoop hadoop 0 2015-06-22 21:44 /hbase/data/hbase/meta/.tabledesc
    -rw-r--r-- 2 hadoop hadoop 372 2015-06-22 21:44 /hbase/data/hbase/meta/.tabledesc/.tableinfo.0000000001
    drwxr-xr-x - hadoop hadoop 0 2015-06-22 21:44 /hbase/data/hbase/meta/.tmp
    drwxr-xr-x - hadoop hadoop 0 2015-08-23 20:48 /hbase/data/hbase/meta/1588230740
    -rw-r--r-- 2 hadoop hadoop 32 2015-06-22 21:44 /hbase/data/hbase/meta/1588230740/.regioninfo
    drwxr-xr-x - hadoop hadoop 0 2015-08-23 22:05 /hbase/data/hbase/meta/1588230740/.tmp
    drwxr-xr-x - hadoop hadoop 0 2015-08-23 22:05 /hbase/data/hbase/meta/1588230740/info
    -rw-r--r-- 2 hadoop hadoop 5153 2015-08-23 22:05 /hbase/data/hbase/meta/1588230740/info/a907aae12e8c44b7bc0b56438c93f218
    -rw-r--r-- 2 hadoop hadoop 12796 2015-08-23 20:48 /hbase/data/hbase/meta/1588230740/info/ebad6cdd23df45748747c0933ea1b266
    drwxr-xr-x - hadoop hadoop 0 2015-08-23 19:48 /hbase/data/hbase/meta/1588230740/recovered.edits
    -rw-r--r-- 2 hadoop hadoop 0 2015-08-23 19:48 /hbase/data/hbase/meta/1588230740/recovered.edits/110.seqid

Stop HBase

./bin/stop-hbase.sh

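The test environment I had previously built on company virtual machines ran hadoop2.4 with hbase-0.98.1-hadoop2. After Hadoop was reconfigured to 2.6, a series of problems appeared while starting HBase. They are recorded below:

1. The ZooKeeper service is not running

    # HBase depends on ZooKeeper; hbase-site.xml determines which ZooKeeper hosts are used
    # zkServer.sh status
    # Check ZooKeeper's status; if it is wrong, verify the zoo.cfg configuration and read the startup log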
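Since zkServer.sh status only reports on the local server, a quick remote check of every host in hbase.zookeeper.quorum can save a round of logins. This sketch uses ZooKeeper's ruok four-letter command, to which a healthy server answers imok. The helper names quorum_hosts and check_quorum are hypothetical, and the script assumes that nc (netcat) is installed and that its -w timeout option behaves as in common builds.

```shell
#!/bin/sh
# quorum_hosts "h1,h2,h3"  (hypothetical helper)
# Splits a comma-separated quorum string into one host per line.
quorum_hosts() {
  printf '%s\n' "$1" | tr ',' '\n'
}

# check_quorum QUORUM [PORT]  (hypothetical helper, needs nc on the PATH)
# Sends "ruok" to every host and reports whether it answered "imok".
check_quorum() (
  port=${2:-2181}
  rc=0
  for host in $(quorum_hosts "$1"); do
    reply=$(printf 'ruok' | nc -w 2 "$host" "$port" 2>/dev/null)
    if [ "$reply" = "imok" ]; then
      echo "$host:$port ok"
    else
      echo "$host:$port NOT responding"
      rc=1
    fi
  done
  exit $rc
)
```

With the quorum used in this setup, the call would be check_quorum nnode,dnode1,dnode2 2181. A host reported as not responding still needs the zoo.cfg and log checks described above.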

2. java.lang.RuntimeException: HMaster Aborted

jps showed HMaster at first, but it disappeared again very quickly; the log reported HMaster Aborted.

Searching online turned up two suggestions: some say the 127.0.0.1 entry in /etc/hosts must be commented out, others say the Hadoop version does not match the Hadoop jars bundled with HBase. When the problem below occurred I applied both fixes (editing /etc/hosts and replacing the jars), after which the error disappeared.

    [mvtech2@cu-dmz3 mapreduce]$ bin/start-hbase.sh
    ## Startup output is written to /usr/sca_app/hbase/logs/hbase-mvtech2-master-cu-dmz3.log
    [mvtech2@cu-dmz3 mapreduce]$ jps
    22821 QuorumPeerMain
    15110 SecondaryNameNode
    15290 ResourceManager
    14867 NameNode
    25059 Jps
    23905 HRegionServer
    # No HMaster. If hbase.master is not set in hbase-site.xml, the machine HBase is started from
    # automatically becomes the HMaster, while the HRegionServers are taken from conf/regionservers
    [mvtech2@cu-dmz3 mapreduce]$
    [mvtech2@cu-dmz3 mapreduce]$ tail -n500 /usr/sca_app/hbase/logs/hbase-mvtech2-master-cu-dmz3.log
    2015-08-27 22:23:58,143 INFO [master:cu-dmz3:60000] zookeeper.ZooKeeper: Session: 0x34f6f7fe9de0002 closed
    2015-08-27 22:23:58,143 INFO [main-EventThread] zookeeper.ClientCnxn: EventThread shut down
    2015-08-27 22:23:58,143 INFO [master:cu-dmz3:60000] master.HMaster: HMaster main thread exiting
    2015-08-27 22:23:58,143 ERROR [main] master.HMasterCommandLine: Master exiting
    java.lang.RuntimeException: HMaster Aborted
        at org.apache.hadoop.hbase.master.HMasterCommandLine.startMaster(HMasterCommandLine.java:192)
        at org.apache.hadoop.hbase.master.HMasterCommandLine.run(HMasterCommandLine.java:134)
        at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
        at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)
        at org.apache.hadoop.hbase.master.HMaster.main(HMaster.java:2801)
    # Check the HBase version
    [mvtech2@cu-dmz3 lib]$ hbase version
    2015-08-27 22:41:02,694 INFO [main] util.VersionInfo: HBase 0.98.1-hadoop2
    2015-08-27 22:41:02,695 INFO [main] util.VersionInfo: Subversion https://svn.apache.org/repos/asf/hbase/tags/0.98.1RC3 -r 1583035
    2015-08-27 22:41:02,695 INFO [main] util.VersionInfo: Compiled by apurtell on Sat Mar 29 17:19:25 PDT 2014
    [mvtech2@cu-dmz3 lib]$
    # The Hadoop jars in HBase's lib directory are version 2.2, but the configured Hadoop is 2.6
    [mvtech2@cu-dmz3 lib]$ ll|grep core
    -rw-r--r-- 1 mvtech2 mvtech2 206035 Feb 27 2014 commons-beanutils-core-1.8.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 1455001 Feb 27 2014 hadoop-mapreduce-client-core-2.2.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 45024 Feb 27 2014 hamcrest-core-1.3.jar
    [mvtech2@cu-dmz3 bin]$ ./hadoop version
    Hadoop 2.6.0
    Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1
    Compiled by jenkins on 2014-11-13T21:10Z
    Compiled with protoc 2.5.0
    From source with checksum 18e43357c8f927c0695f1e9522859d6a
    This command was run using /usr/sca_app/hadoop/share/hadoop/common/hadoop-common-2.6.0.jar
    [mvtech2@cu-dmz3 bin]$
    # The Hadoop libraries are version 2.6.0
    [mvtech2@cu-dmz3 mapreduce]$ pwd
    /usr/sca_app/hadoop/share/hadoop/mapreduce
    [mvtech2@cu-dmz3 mapreduce]$ ll
    total 4680
    -rw-r--r-- 1 mvtech2 mvtech2 504308 Nov 14 2014 hadoop-mapreduce-client-app-2.6.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 664917 Nov 14 2014 hadoop-mapreduce-client-common-2.6.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 1509398 Nov 14 2014 hadoop-mapreduce-client-core-2.6.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 233872 Nov 14 2014 hadoop-mapreduce-client-hs-2.6.0.jar
    [mvtech2@cu-dmz3 mapreduce]$ cp hadoop-mapreduce-client-core-2.6.0.jar /usr/sca_app/hbase/lib
    [mvtech2@cu-dmz3 bin]$ cd /usr/sca_app/hbase/lib/
    [mvtech2@cu-dmz3 lib]$ ll | grep core
    -rw-r--r-- 1 mvtech2 mvtech2 206035 Feb 27 2014 commons-beanutils-core-1.8.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 1455001 Feb 27 2014 hadoop-mapreduce-client-core-2.2.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 1509398 Aug 27 22:28 hadoop-mapreduce-client-core-2.6.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 45024 Feb 27 2014 hamcrest-core-1.3.jar
    -rw-r--r-- 1 mvtech2 mvtech2 31532 Feb 27 2014 htrace-core-2.04.jar
    -rw-r--r-- 1 mvtech2 mvtech2 181201 Feb 27 2014 httpcore-4.1.3.jar
    -rw-r--r-- 1 mvtech2 mvtech2 227500 Feb 27 2014 jackson-core-asl-1.8.8.jar
    -rw-r--r-- 1 mvtech2 mvtech2 458233 Feb 27 2014 jersey-core-1.8.jar
    -rw-r--r-- 1 mvtech2 mvtech2 28100 Feb 27 2014 jersey-test-framework-core-1.9.jar
    -rw-r--r-- 1 mvtech2 mvtech2 82445 Feb 27 2014 metrics-core-2.1.2.jar
    [mvtech2@cu-dmz3 lib]$ mv hadoop-mapreduce-client-core-2.2.0.jar hadoop-mapreduce-client-core-2.2.0.jar.bak
    [mvtech2@cu-dmz3 lib]$ ll | grep core
    -rw-r--r-- 1 mvtech2 mvtech2 206035 Feb 27 2014 commons-beanutils-core-1.8.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 1455001 Feb 27 2014 hadoop-mapreduce-client-core-2.2.0.jar.bak
    -rw-r--r-- 1 mvtech2 mvtech2 1509398 Aug 27 22:28 hadoop-mapreduce-client-core-2.6.0.jar
    -rw-r--r-- 1 mvtech2 mvtech2 45024 Feb 27 2014 hamcrest-core-1.3.jar
    -rw-r--r-- 1 mvtech2 mvtech2 31532 Feb 27 2014 htrace-core-2.04.jar
    -rw-r--r-- 1 mvtech2 mvtech2 181201 Feb 27 2014 httpcore-4.1.3.jar
    -rw-r--r-- 1 mvtech2 mvtech2 227500 Feb 27 2014 jackson-core-asl-1.8.8.jar
    -rw-r--r-- 1 mvtech2 mvtech2 458233 Feb 27 2014 jersey-core-1.8.jar
    -rw-r--r-- 1 mvtech2 mvtech2 28100 Feb 27 2014 jersey-test-framework-core-1.9.jar
    -rw-r--r-- 1 mvtech2 mvtech2 82445 Feb 27 2014 metrics-core-2.1.2.jar
    # HBase's lib directory contains several Hadoop 2.2.0 jars; all of them must be replaced with the 2.6 versions
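Replacing the remaining 2.2.0 jars one by one is tedious, so the rename-and-copy steps above can be planned in a loop. The following is a sketch only: plan_hadoop_jar_swap is a hypothetical helper that performs a dry run, printing the mv and cp commands it would issue without touching any file. It assumes the replacement jars carry the same base name with the new version number somewhere under the Hadoop share directory.

```shell
#!/bin/sh
# plan_hadoop_jar_swap HBASE_LIB HADOOP_SHARE OLD_VER NEW_VER  (hypothetical helper)
# Dry run: for each hadoop-*-OLD_VER.jar in HBASE_LIB, print the commands
# that would back it up and copy in the NEW_VER jar found under HADOOP_SHARE.
plan_hadoop_jar_swap() (
  lib=$1
  share=$2
  old=$3
  new=$4
  for jar in "$lib"/hadoop-*-"$old".jar; do
    [ -e "$jar" ] || continue          # the glob matched nothing
    base=${jar##*/}                    # strip the directory part
    base=${base%-"$old".jar}           # e.g. hadoop-mapreduce-client-core
    repl=$(find "$share" -name "$base-$new.jar" | head -n 1)
    if [ -n "$repl" ]; then
      echo "mv $jar $jar.bak"
      echo "cp $repl $lib/"
    else
      echo "# no $new replacement found for $base"
    fi
  done
)
```

With the paths from this environment, the call would be plan_hadoop_jar_swap /usr/sca_app/hbase/lib /usr/sca_app/hadoop/share/hadoop 2.2.0 2.6.0. The printed commands can be reviewed and then run by hand; jars reported without a replacement need to be located manually.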

3. org.apache.hadoop.hbase.TableExistsException: hbase:namespace

    2015-08-27 23:57:13,713 FATAL [master:cu-dmz3:60000] master.HMaster: Master server abort: loaded coprocessors are: []
    2015-08-27 23:57:13,715 FATAL [master:cu-dmz3:60000] master.HMaster: Unhandled exception. Starting shutdown.
    org.apache.hadoop.hbase.TableExistsException: hbase:namespace
        at org.apache.hadoop.hbase.master.handler.CreateTableHandler.prepare(CreateTableHandler.java:120)
        at org.apache.hadoop.hbase.master.TableNamespaceManager.createNamespaceTable(TableNamespaceManager.java:232)
        at org.apache.hadoop.hbase.master.TableNamespaceManager.start(TableNamespaceManager.java:86)
        at org.apache.hadoop.hbase.master.HMaster.initNamespace(HMaster.java:1062)
        at org.apache.hadoop.hbase.master.HMaster.finishInitialization(HMaster.java:926)
        at org.apache.hadoop.hbase.master.HMaster.run(HMaster.java:615)
        at java.lang.Thread.run(Thread.java:745)
    2015-08-27 23:57:13,719 INFO [master:cu-dmz3:60000] master.HMaster: Aborting
    # This means the HBase data recorded in ZooKeeper already exists and no longer matches the
    # current state (note: Hadoop had been upgraded earlier and the Hadoop jars inside HBase were replaced)
    # Deleting the HBase data under ZooKeeper resolves it
    # zkCli.sh
    # rmr /hbase
    # Restart the HBase cluster
    # start-hbase.sh
    # HBase starts successfully