Ceph Cluster Installation

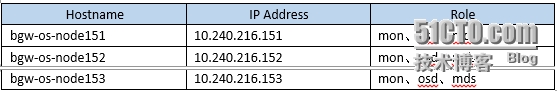

1. Environment

2. Installation Steps

2.1 Install the Ceph packages (required on every node)

yum install ceph-deploy ceph python-ceph nodejs-argparse redhat-lsb xfsdump qemu-kvm qemu-kvm-tools qemu-img qemu-guest-agent libvirt -y

2.2 Generate the fsid

[root@bgw-os-node151 ~]# uuidgen
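The UUID printed by uuidgen becomes the fsid in ceph.conf. A minimal sketch for checking a value before pasting it in; `is_valid_fsid` is a hypothetical helper, not part of Ceph, and it assumes the lowercase canonical form that uuidgen prints:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ceph): succeed only if $1 looks like a
# lowercase canonical UUID, e.g. 0071bd6f-849c-433a-8051-2e553df49aea.
is_valid_fsid() {
  [[ $1 =~ ^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$ ]]
}

# Usage on a node with uuidgen installed:
#   fsid=$(uuidgen) && is_valid_fsid "$fsid" && echo "fsid = $fsid"
```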

2.3 Ceph configuration (run on bgw-os-node151)

[root@bgw-os-node151 ~]# cat > /etc/ceph/ceph.conf << EOF

[global]

fsid = 0071bd6f-849c-433a-8051-2e553df49aea

mon initial members = bgw-os-node151,bgw-os-node152,bgw-os-node153

mon host = 10.240.216.151,10.240.216.152,10.240.216.153

public network = 10.240.216.0/24

auth cluster required = cephx

auth service required = cephx

auth client required = cephx

filestore xattr use omap = true

mds max file size = 102400000000000

mds cache size = 102400

osd journal size = 1024

osd pool default size = 2

osd pool default min size = 1

osd crush chooseleaf type = 1

osd recovery threads = 1

osd_mkfs_type = xfs

[mon]

mon clock drift allowed = .50

mon osd down out interval = 900

EOF
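Every name in "mon initial members" should have a matching address in "mon host". A small sketch that checks both lists have the same length; `count_items` and `check_mon_counts` are hypothetical helpers, and they only recognize the space-separated option spelling used in the file above (not the underscore form):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how many comma-separated values option $2 has
# in config file $1 (matches "key = a,b,c" lines, whitespace-insensitive).
count_items() {
  awk -F'=' -v key="$2" '
    { k = $1; gsub(/^[ \t]+|[ \t]+$/, "", k) }
    k == key { v = $2; gsub(/[ \t]/, "", v); print split(v, parts, ",") }
  ' "$1"
}

# Hypothetical helper: fail if the monitor name and address lists differ in length.
check_mon_counts() {
  local members hosts
  members=$(count_items "$1" "mon initial members")
  hosts=$(count_items "$1" "mon host")
  if [ -n "$members" ] && [ "$members" = "$hosts" ]; then
    echo "ok: $members monitors"
  else
    echo "mismatch: members=$members hosts=$hosts" >&2
    return 1
  fi
}

# Usage: check_mon_counts /etc/ceph/ceph.conf
```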

2.4 Create the keys on bgw-os-node151

[root@bgw-os-node151 ~]# ceph-authtool --create-keyring /etc/ceph/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

creating /etc/ceph/ceph.mon.keyring

[root@bgw-os-node151 ~]# ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *'

creating /etc/ceph/ceph.client.admin.keyring

[root@bgw-os-node151 ~]# ceph-authtool /etc/ceph/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring

importing contents of /etc/ceph/ceph.client.admin.keyring into /etc/ceph/ceph.mon.keyring
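After the import, ceph.mon.keyring should hold both the mon. and the client.admin entries (`ceph-authtool /etc/ceph/ceph.mon.keyring --list` prints them). For a scripted check, a sketch that only looks for the INI-style section headers; `keyring_has_entity` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Ceph): succeed if keyring file $1 has a
# section header line for entity $2, e.g. "[mon.]" or "[client.admin]".
keyring_has_entity() {
  grep -qxF "[$2]" "$1"
}

# Usage:
#   keyring_has_entity /etc/ceph/ceph.mon.keyring client.admin && echo imported
```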

[root@bgw-os-node151 ~]# mkdir -p /var/lib/ceph/mon/ceph-bgw-os-node151

[root@bgw-os-node151 ~]# mkdir -p /var/lib/ceph/bootstrap-osd/

[root@bgw-os-node151 ~]# ceph-authtool -C /var/lib/ceph/bootstrap-osd/ceph.keyring

creating /var/lib/ceph/bootstrap-osd/ceph.keyring

[root@bgw-os-node151 ~]# ceph-mon --mkfs -i bgw-os-node151 --keyring /etc/ceph/ceph.mon.keyring

ceph-mon: renaming mon.noname-a 10.240.216.151:6789/0 to mon.bgw-os-node151

ceph-mon: set fsid to 0071bd6f-849c-433a-8051-2e553df49aea

ceph-mon: created monfs at /var/lib/ceph/mon/ceph-bgw-os-node151 for mon.bgw-os-node151

[root@bgw-os-node151 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node151/done

[root@bgw-os-node151 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node151/sysvinit

[root@bgw-os-node151 ~]# /sbin/service ceph -c /etc/ceph/ceph.conf start mon.bgw-os-node151

=== mon.bgw-os-node151 ===

Starting Ceph mon.bgw-os-node151 on bgw-os-node151...

Starting ceph-create-keys on bgw-os-node151...

2.5 Sync the Ceph configuration to the other nodes and start their monitors (follow the steps below strictly in order)

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/mon/ceph-bgw-os-node152

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/bootstrap-osd/

[root@bgw-os-node153 ~]# mkdir -p /var/lib/ceph/mon/ceph-bgw-os-node153

[root@bgw-os-node153 ~]# mkdir -p /var/lib/ceph/bootstrap-osd/

[root@bgw-os-node151 ~]# scp /etc/ceph/* 10.240.216.152:/etc/ceph

[root@bgw-os-node151 ~]# scp /etc/ceph/* 10.240.216.153:/etc/ceph

[root@bgw-os-node151 ~]# scp /var/lib/ceph/bootstrap-osd/* 10.240.216.152:/var/lib/ceph/bootstrap-osd/

[root@bgw-os-node151 ~]# scp /var/lib/ceph/bootstrap-osd/* 10.240.216.153:/var/lib/ceph/bootstrap-osd/

[root@bgw-os-node152 ~]# ceph-mon --mkfs -i bgw-os-node152 --keyring /etc/ceph/ceph.mon.keyring

[root@bgw-os-node152 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node152/done

[root@bgw-os-node152 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node152/sysvinit

[root@bgw-os-node152 ~]# /sbin/service ceph -c /etc/ceph/ceph.conf start mon.bgw-os-node152

[root@bgw-os-node153 ~]# ceph-mon --mkfs -i bgw-os-node153 --keyring /etc/ceph/ceph.mon.keyring

[root@bgw-os-node153 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node153/done

[root@bgw-os-node153 ~]# touch /var/lib/ceph/mon/ceph-bgw-os-node153/sysvinit

[root@bgw-os-node153 ~]# /sbin/service ceph -c /etc/ceph/ceph.conf start mon.bgw-os-node153

[root@bgw-os-node153 ~]# ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.bgw-os-node153.asok mon_status
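The per-node monitor steps in 2.5 differ only in the hostname. A sketch that bundles them for one host, assuming /etc/ceph/* and the bootstrap-osd keyring have already been copied over from bgw-os-node151 (the mkdir is then a no-op). `run` and `bootstrap_mon` are hypothetical helpers; DRY_RUN=1 prints the commands instead of executing them:

```shell
#!/usr/bin/env bash
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: create, mark and start the monitor for host $1.
bootstrap_mon() {
  local host=$1
  local dir=/var/lib/ceph/mon/ceph-$host
  run mkdir -p "$dir" /var/lib/ceph/bootstrap-osd/
  run ceph-mon --mkfs -i "$host" --keyring /etc/ceph/ceph.mon.keyring
  run touch "$dir/done" "$dir/sysvinit"
  run /sbin/service ceph -c /etc/ceph/ceph.conf start "mon.$host"
}

# Usage (on bgw-os-node152):
#   DRY_RUN=1 bootstrap_mon bgw-os-node152   # review first
#   bootstrap_mon bgw-os-node152
```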

[root@bgw-os-node151 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_ERR 192 pgs stuck inactive; 192 pgs stuck unclean; no osds; clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

osdmap e1: 0 osds: 0 up, 0 in

pgmap v2: 192 pgs, 3 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

192 creating

[root@bgw-os-node152 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_ERR 192 pgs stuck inactive; 192 pgs stuck unclean; no osds; clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

osdmap e1: 0 osds: 0 up, 0 in

pgmap v2: 192 pgs, 3 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

192 creating

[root@bgw-os-node153 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_ERR 192 pgs stuck inactive; 192 pgs stuck unclean; no osds; clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

osdmap e1: 0 osds: 0 up, 0 in

pgmap v2: 192 pgs, 3 pools, 0 bytes data, 0 objects

0 kB used, 0 kB / 0 kB avail

192 creating

2.6 Create and start OSDs on bgw-os-node151

2.7 List the available volumes (disks)

[root@bgw-os-node151 ~]# fdisk -l

[root@bgw-os-node151 ~]# df -hT

2.8 List the current Ceph pools

[root@bgw-os-node151 ~]# ceph osd lspools

0 data,1 metadata,2 rbd,
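When pool names are needed in a script (for example for the per-pool commands in section 4), the comma-separated output shown above can be split into one name per line. A sketch; `pool_names` is a hypothetical helper that assumes the "ID name," format printed here:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn "ceph osd lspools" output such as
# "0 data,1 metadata,2 rbd," into one pool name per line.
pool_names() {
  tr ',' '\n' | awk 'NF >= 2 { print $2 }'
}

# Usage: ceph osd lspools | pool_names
```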

2.9 Add the first OSD on bgw-os-node151

[root@bgw-os-node151 ~]# ceph osd create

0

[root@bgw-os-node151 ~]# mkdir -p /var/lib/ceph/osd/ceph-0

[root@bgw-os-node151 ~]# mkfs.xfs -f /dev/sdb    # adjust the device name to your environment

meta-data=/dev/sdb isize=256 agcount=4, agsize=18308499 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=73233995,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=35758,version=2

= sectsz=512 sunit=0 blks,lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@bgw-os-node151 ~]# mount /dev/sdb /var/lib/ceph/osd/ceph-0

[root@bgw-os-node151 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-0

[root@bgw-os-node151 ~]# mount

/dev/sda2 on / type ext4 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

tmpfs on /dev/shm type tmpfs (rw)

/dev/sda1 on /boot type ext4 (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

/dev/sdb on /var/lib/ceph/osd/ceph-0 type xfs (rw,user_xattr)

[root@bgw-os-node151 ~]# echo "/dev/sdb /var/lib/ceph/osd/ceph-0 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node151 ~]# echo "/dev/sdb /var/lib/ceph/osd/ceph-0 xfs remount,user_xattr 0 0" >>/etc/fstab
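Appending with echo >> adds a duplicate line every time a step is re-run. A sketch of an idempotent alternative, assuming one fstab line per OSD disk is wanted. XFS enables extended attributes unconditionally, so this variant writes a single defaults entry instead of a second remount,user_xattr entry for the same mount point; `add_fstab_entry` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "DEV MNT xfs defaults 0 0" to an fstab-style
# file ($3, default /etc/fstab) unless an uncommented entry for MNT exists.
add_fstab_entry() {
  local dev=$1 mnt=$2 fstab=${3:-/etc/fstab}
  if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    echo "entry for $mnt already present" >&2
    return 0
  fi
  printf '%s %s xfs defaults 0 0\n' "$dev" "$mnt" >> "$fstab"
}

# Usage: add_fstab_entry /dev/sdb /var/lib/ceph/osd/ceph-0
```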

[root@bgw-os-node151 ~]# ceph-osd -i 0 --mkfs --mkkey

2015-03-19 13:38:14.363503 7f3fe46e77a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 13:38:14.369579 7f3fe46e77a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 13:38:14.370140 7f3fe46e77a0 -1 filestore(/var/lib/ceph/osd/ceph-0) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

2015-03-19 13:38:14.377213 7f3fe46e77a0 -1 created object store /var/lib/ceph/osd/ceph-0 journal /var/lib/ceph/osd/ceph-0/journal for osd.0 fsid 0071bd6f-849c-433a-8051-2e553df49aea

2015-03-19 13:38:14.377265 7f3fe46e77a0 -1 auth: error reading file: /var/lib/ceph/osd/ceph-0/keyring: can't open /var/lib/ceph/osd/ceph-0/keyring: (2) No such file or directory

2015-03-19 13:38:14.377364 7f3fe46e77a0 -1 created new key in keyring /var/lib/ceph/osd/ceph-0/keyring

[root@bgw-os-node151 ~]# ceph auth add osd.0 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-0/keyring

added key for osd.0

2.10 Add a CRUSH rack for the first OSD

[root@bgw-os-node151 ~]# ceph osd crush add-bucket rack1 rack

added bucket rack1 type rack to crush map

[root@bgw-os-node151 ~]# ceph osd crush move bgw-os-node151 rack=rack1

Error ENOENT: item bgw-os-node151 does not exist    # the host bucket must be created first, as follows

[root@bgw-os-node151 ~]# ceph osd crush add-bucket bgw-os-node151 host

added bucket bgw-os-node151 type host to crush map

[root@bgw-os-node151 ~]# ceph osd crush move bgw-os-node151 rack=rack1

moved item id -3 name 'bgw-os-node151' to location {rack=rack1} in crush map

[root@bgw-os-node151 ~]# ceph osd crush move rack1 root=default

moved item id -2 name 'rack1' to location {root=default} in crush map

[root@bgw-os-node151 ~]# ceph osd crush add osd.0 1.0 host=bgw-os-node151

add item id 0 name 'osd.0' weight 1 at location {host=bgw-os-node151} to crush map
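The CRUSH steps above (rack bucket, host bucket, host into rack, rack under root=default, then the OSD into the host) repeat for every node. A sketch that runs them in the order that avoids the ENOENT error; `run` and `place_host_in_rack` are hypothetical helpers, and DRY_RUN=1 only prints the commands:

```shell
#!/usr/bin/env bash
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: build root=default -> rack $1 -> host $2 and add
# osd.$3 with weight 1.0 under the host.
place_host_in_rack() {
  local rack=$1 host=$2 osd_id=$3
  run ceph osd crush add-bucket "$rack" rack
  run ceph osd crush add-bucket "$host" host   # must exist before it can be moved
  run ceph osd crush move "$host" "rack=$rack"
  run ceph osd crush move "$rack" root=default
  run ceph osd crush add "osd.$osd_id" 1.0 "host=$host"
}

# Usage: DRY_RUN=1 place_host_in_rack rack2 bgw-os-node152 4
```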

[root@bgw-os-node151 ~]# touch /var/lib/ceph/osd/ceph-0/sysvinit

[root@bgw-os-node151 ~]# /etc/init.d/ceph start osd.0

=== osd.0 ===

create-or-move updated item name 'osd.0' weight 0.27 at location {host=bgw-os-node151,root=default} to crush map

Starting Ceph osd.0 on bgw-os-node151...

starting osd.0 at :/0 osd_data /var/lib/ceph/osd/ceph-0 /var/lib/ceph/osd/ceph-0/journal

[root@bgw-os-node151 ~]# ps aux | grep osd

root 25090 5.7 0.0 504804 27836 ? Ssl 13:44 0:00 /usr/bin/ceph-osd -i 0 --pid-file /var/run/ceph/osd.0.pid -c /etc/ceph/ceph.conf --cluster ceph

root 25154 0.0 0.0 103304 2028 pts/0 S+ 13:44 0:00 grep osd
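Each further disk goes through the same per-OSD sequence. A sketch of that sequence for one disk, assuming the OSD ID was just returned by ceph osd create, the host bucket already exists in CRUSH, and the fstab entry is handled separately. `run` and `provision_osd` are hypothetical helpers; note that mkfs.xfs -f erases the disk, so DRY_RUN=1 is worth using first:

```shell
#!/usr/bin/env bash
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: prepare, register and start osd.$1 on disk $2 of host $3.
provision_osd() {
  local id=$1 dev=$2 host=$3
  local dir=/var/lib/ceph/osd/ceph-$id
  run mkdir -p "$dir"
  run mkfs.xfs -f "$dev"          # destroys all data on $dev
  run mount "$dev" "$dir"
  run ceph-osd -i "$id" --mkfs --mkkey
  run ceph auth add "osd.$id" osd 'allow *' mon 'allow profile osd' -i "$dir/keyring"
  run ceph osd crush add "osd.$id" 1.0 "host=$host"
  run touch "$dir/sysvinit"
  run /etc/init.d/ceph start "osd.$id"
}

# Usage: DRY_RUN=1 provision_osd 1 /dev/sdc bgw-os-node151
```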

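2.11 Add the remaining OSDs on bgw-os-node151 (only osd.1 is shown; osd.2 and osd.3, which appear in the later ceph osd tree output, are added the same way)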

[root@bgw-os-node151 ~]# ceph osd create

1

[root@bgw-os-node151 ~]# mkdir -p /var/lib/ceph/osd/ceph-1

[root@bgw-os-node151 ~]# mkfs.xfs -f /dev/sdc

meta-data=/dev/sdc isize=256 agcount=4, agsize=18308499 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=73233995,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=35758,version=2

= sectsz=512 sunit=0 blks,lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@bgw-os-node151 ~]# mount /dev/sdc /var/lib/ceph/osd/ceph-1

[root@bgw-os-node151 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-1

[root@bgw-os-node151 ~]# echo "/dev/sdc /var/lib/ceph/osd/ceph-1 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node151 ~]# echo "/dev/sdc /var/lib/ceph/osd/ceph-1 xfs remount,user_xattr 0 0">> /etc/fstab

[root@bgw-os-node151 ~]# ceph-osd -i 1 --mkfs --mkkey

2015-03-19 13:56:58.131623 7f209809e7a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 13:56:58.137304 7f209809e7a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 13:56:58.137875 7f209809e7a0 -1 filestore(/var/lib/ceph/osd/ceph-1) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

2015-03-19 13:56:58.145813 7f209809e7a0 -1 created object store /var/lib/ceph/osd/ceph-1 journal /var/lib/ceph/osd/ceph-1/journal for osd.1 fsid 0071bd6f-849c-433a-8051-2e553df49aea

2015-03-19 13:56:58.145862 7f209809e7a0 -1 auth: error reading file: /var/lib/ceph/osd/ceph-1/keyring: can't open /var/lib/ceph/osd/ceph-1/keyring: (2) No such file or directory

2015-03-19 13:56:58.145958 7f209809e7a0 -1 created new key in keyring /var/lib/ceph/osd/ceph-1/keyring

[root@bgw-os-node151 ~]# ceph auth add osd.1 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-1/keyring

added key for osd.1

[root@bgw-os-node151 ~]# ceph osd crush add osd.1 1.0 host=bgw-os-node151

add item id 1 name 'osd.1' weight 1 at location {host=bgw-os-node151} to crush map

[root@bgw-os-node151 ~]# touch /var/lib/ceph/osd/ceph-1/sysvinit

[root@bgw-os-node151 ~]# /etc/init.d/ceph start osd.1

=== osd.1 ===

create-or-move updated item name 'osd.1' weight 0.27 at location {host=bgw-os-node151,root=default} to crush map

Starting Ceph osd.1 on bgw-os-node151...

starting osd.1 at :/0 osd_data /var/lib/ceph/osd/ceph-1 /var/lib/ceph/osd/ceph-1/journal

[root@bgw-os-node151 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_WARN 192 pgs degraded; 192 pgs stuck unclean; clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

osdmap e15: 2 osds: 2 up, 2 in

pgmap v22: 192 pgs, 3 pools, 0 bytes data, 0 objects

1058 MB used, 278 GB / 279 GB avail

192 active+degraded

[root@bgw-os-node151 ~]# ceph osd tree

# id weight type name up/down reweight

-1 2 root default

-2 2 rack rack1

-3 2 host bgw-os-node151

0 1 osd.0 up 1

1 1 osd.1 up 1
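To confirm in a script that every OSD in the tree is up, the OSD rows can be counted. A sketch assuming the column layout shown above (id, weight, name, up/down, reweight); `count_up_osds` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ceph osd tree" output on stdin and print
# "<up> <total>" for the OSD rows (rows whose name column starts with "osd.").
count_up_osds() {
  awk '$3 ~ /^osd\./ { total++; if ($4 == "up") up++ }
       END { printf "%d %d\n", up, total }'
}

# Usage: ceph osd tree | count_up_osds
```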

2.12 Create and start OSDs on bgw-os-node152

[root@bgw-os-node152 ~]# ceph osd create    # OSD IDs are allocated cluster-wide, counting up from 0

4
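Because IDs are cluster-wide, the first OSD on bgw-os-node152 gets 4 rather than 0. A sketch that captures the ID returned by ceph osd create instead of typing it by hand; `allocate_osd` is a hypothetical helper and assumes the ceph CLI and an admin keyring are available on the node:

```shell
#!/usr/bin/env bash
# Hypothetical helper: ask the cluster for a new OSD ID and print it,
# failing if the output is not a plain non-negative integer.
allocate_osd() {
  local id
  id=$(ceph osd create) || return 1
  case "$id" in
    ''|*[!0-9]*) echo "unexpected output: $id" >&2; return 1 ;;
  esac
  echo "$id"
}

# Usage:
#   id=$(allocate_osd) && mkdir -p "/var/lib/ceph/osd/ceph-$id"
```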

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/osd/ceph-4

[root@bgw-os-node152 ~]# mkfs.xfs -f /dev/sdb

meta-data=/dev/sdb isize=256 agcount=4, agsize=18308499 blks

= sectsz=512 attr=2, projid32bit=0

data = bsize=4096 blocks=73233995,imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0

log =internal log bsize=4096 blocks=35758,version=2

= sectsz=512 sunit=0 blks,lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@bgw-os-node152 ~]# mount /dev/sdb /var/lib/ceph/osd/ceph-4

[root@bgw-os-node152 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-4

[root@bgw-os-node152 ~]# echo "/dev/sdb /var/lib/ceph/osd/ceph-4 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# echo "/dev/sdb /var/lib/ceph/osd/ceph-4 xfs remount,user_xattr 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# ceph-osd -i 4 --mkfs --mkkey

2015-03-19 14:23:57.488335 7f474bc637a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 14:23:57.494038 7f474bc637a0 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

2015-03-19 14:23:57.494475 7f474bc637a0 -1 filestore(/var/lib/ceph/osd/ceph-4) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

2015-03-19 14:23:57.502901 7f474bc637a0 -1 created object store /var/lib/ceph/osd/ceph-4 journal /var/lib/ceph/osd/ceph-4/journal for osd.4 fsid 0071bd6f-849c-433a-8051-2e553df49aea

2015-03-19 14:23:57.502952 7f474bc637a0 -1 auth: error reading file: /var/lib/ceph/osd/ceph-4/keyring: can't open /var/lib/ceph/osd/ceph-4/keyring: (2) No such file or directory

2015-03-19 14:23:57.503040 7f474bc637a0 -1 created new key in keyring /var/lib/ceph/osd/ceph-4/keyring

[root@bgw-os-node152 ~]# ceph auth add osd.4 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-4/keyring

added key for osd.4

[root@bgw-os-node152 ~]# ceph osd crush add-bucket rack2 rack    # add the rack bucket rack2

added bucket rack2 type rack to crush map

[root@bgw-os-node152 ~]# ceph osd crush add-bucket bgw-os-node152 host    # add the host bucket, then move it into the rack

added bucket bgw-os-node152 type host to crush map

[root@bgw-os-node152 ~]# ceph osd crush move bgw-os-node152 rack=rack2

moved item id -5 name 'bgw-os-node152' to location {rack=rack2} in crush map

[root@bgw-os-node152 ~]# ceph osd crush move rack2 root=default

moved item id -4 name 'rack2' to location {root=default} in crush map

[root@bgw-os-node152 ~]# ceph osd crush add osd.4 1.0 host=bgw-os-node152

add item id 4 name 'osd.4' weight 1 at location {host=bgw-os-node152} to crush map

[root@bgw-os-node152 ~]# touch /var/lib/ceph/osd/ceph-4/sysvinit

[root@bgw-os-node152 ~]# /etc/init.d/ceph start osd.4

=== osd.4 ===

create-or-move updated item name 'osd.4' weight 0.27 at location {host=bgw-os-node152,root=default} to crush map

Starting Ceph osd.4 on bgw-os-node152...

starting osd.4 at :/0 osd_data /var/lib/ceph/osd/ceph-4 /var/lib/ceph/osd/ceph-4/journal

[root@bgw-os-node152 ~]# ceph osd tree

# id weight type name up/down reweight

-1 5 root default

-2 4 rack rack1

-3 4 host bgw-os-node151

0 1 osd.0 up 1

1 1 osd.1 up 1

2 1 osd.2 up 1

3 1 osd.3 up 1

-4 1 rack rack2

-5 1 host bgw-os-node152

4 1 osd.4 up 1

2.13 Add the other OSDs on bgw-os-node152 and place them in rack2

[root@bgw-os-node152 ~]# ceph osd create

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/osd/ceph-5

[root@bgw-os-node152 ~]# mkfs.xfs -f /dev/sdc

[root@bgw-os-node152 ~]# mount /dev/sdc /var/lib/ceph/osd/ceph-5

[root@bgw-os-node152 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-5

[root@bgw-os-node152 ~]# echo "/dev/sdc /var/lib/ceph/osd/ceph-5 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# echo "/dev/sdc /var/lib/ceph/osd/ceph-5 xfs remount,user_xattr 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# ceph-osd -i 5 --mkfs --mkkey

[root@bgw-os-node152 ~]# ceph auth add osd.5 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-5/keyring

[root@bgw-os-node152 ~]# ceph osd crush add osd.5 1.0 host=bgw-os-node152

[root@bgw-os-node152 ~]# touch /var/lib/ceph/osd/ceph-5/sysvinit

[root@bgw-os-node152 ~]# /etc/init.d/ceph start osd.5

[root@bgw-os-node152 ~]# ceph osd create

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/osd/ceph-6

[root@bgw-os-node152 ~]# mkfs.xfs -f /dev/sdd

[root@bgw-os-node152 ~]# mount /dev/sdd /var/lib/ceph/osd/ceph-6

[root@bgw-os-node152 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-6

[root@bgw-os-node152 ~]# echo "/dev/sdd /var/lib/ceph/osd/ceph-6 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# echo "/dev/sdd /var/lib/ceph/osd/ceph-6 xfs remount,user_xattr 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# ceph-osd -i 6 --mkfs --mkkey

[root@bgw-os-node152 ~]# ceph auth add osd.6 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-6/keyring

[root@bgw-os-node152 ~]# ceph osd crush add osd.6 1.0 host=bgw-os-node152

[root@bgw-os-node152 ~]# touch /var/lib/ceph/osd/ceph-6/sysvinit

[root@bgw-os-node152 ~]# /etc/init.d/ceph start osd.6

[root@bgw-os-node152 ~]# ceph osd create

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/osd/ceph-7

[root@bgw-os-node152 ~]# mkfs.xfs -f /dev/sde

[root@bgw-os-node152 ~]# mount /dev/sde /var/lib/ceph/osd/ceph-7

[root@bgw-os-node152 ~]# mount -o remount,user_xattr /var/lib/ceph/osd/ceph-7

[root@bgw-os-node152 ~]# echo "/dev/sde /var/lib/ceph/osd/ceph-7 xfs defaults 0 0" >>/etc/fstab

[root@bgw-os-node152 ~]# echo "/dev/sde /var/lib/ceph/osd/ceph-7 xfs remount,user_xattr 0 0" >> /etc/fstab

[root@bgw-os-node152 ~]# ceph-osd -i 7 --mkfs --mkkey

[root@bgw-os-node152 ~]# ceph auth add osd.7 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-7/keyring

[root@bgw-os-node152 ~]# ceph osd crush add osd.7 1.0 host=bgw-os-node152

[root@bgw-os-node152 ~]# touch /var/lib/ceph/osd/ceph-7/sysvinit

[root@bgw-os-node152 ~]# /etc/init.d/ceph start osd.7

[root@bgw-os-node152 ~]# ceph osd tree

# id weight type name up/down reweight

-1 8 root default

-2 4 rack rack1

-3 4 host bgw-os-node151

0 1 osd.0 up 1

1 1 osd.1 up 1

2 1 osd.2 up 1

3 1 osd.3 up 1

-4 4 rack rack2

-5 4 host bgw-os-node152

4 1 osd.4 up 1

5 1 osd.5 up 1

6 1 osd.6 up 1

7 1 osd.7 up 1

2.14 Add OSDs on bgw-os-node153 and place them in rack3 (same steps as on bgw-os-node152, omitted)

[root@bgw-os-node153 ~]# ceph osd tree

# id weight type name up/down reweight

-1 12 root default

-2 4 rack rack1

-3 4 host bgw-os-node151

0 1 osd.0 up 1

1 1 osd.1 up 1

2 1 osd.2 up 1

3 1 osd.3 up 1

-4 4 rack rack2

-5 4 host bgw-os-node152

4 1 osd.4 up 1

5 1 osd.5 up 1

6 1 osd.6 up 1

7 1 osd.7 up 1

-6 4 rack rack3

-7 4 host bgw-os-node153

8 1 osd.8 up 1

9 1 osd.9 up 1

10 1 osd.10 up 1

11 1 osd.11 up 1

3. Add the metadata servers (MDS)

3.1 Create an MDS on bgw-os-node151

[root@bgw-os-node151 ~]# mkdir -p /var/lib/ceph/mds/ceph-bgw-os-node151

[root@bgw-os-node151 ~]# ceph-authtool --create-keyring /var/lib/ceph/bootstrap-mds/ceph.keyring --gen-key -n client.bootstrap-mds    # only needs to be run once per cluster

[root@bgw-os-node151 ~]# ceph auth list    # if the client.bootstrap-mds user already exists, the next command can be skipped

[root@bgw-os-node151 ~]# ceph auth add client.bootstrap-mds mon 'allow profile bootstrap-mds' -i /var/lib/ceph/bootstrap-mds/ceph.keyring

[root@bgw-os-node151 ~]# touch /root/ceph.bootstrap-mds.keyring

[root@bgw-os-node151 ~]# ceph-authtool --import-keyring /var/lib/ceph/bootstrap-mds/ceph.keyring ceph.bootstrap-mds.keyring

[root@bgw-os-node151 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.bgw-os-node151 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-bgw-os-node151/keyring

[root@bgw-os-node151 ~]# touch /var/lib/ceph/mds/ceph-bgw-os-node151/sysvinit

[root@bgw-os-node151 ~]# touch /var/lib/ceph/mds/ceph-bgw-os-node151/done

[root@bgw-os-node151 ~]# service ceph start mds.bgw-os-node151

[root@bgw-os-node151 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_WARN too few pgs per osd (16 < min 20); clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

mdsmap e4: 1/1/1 up {0=bgw-os-node151=up:active}

osdmap e81: 12 osds: 12 up, 12 in

pgmap v224: 192 pgs, 3 pools, 1884 bytes data, 20 objects

12703 MB used, 3338 GB / 3350 GB avail

192 active+clean

client io 0 B/s wr, 0 op/s
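The "too few pgs per osd" warning matches total PGs divided by OSDs: 192 PGs / 12 OSDs = 16, below the minimum of 20. A sketch of that arithmetic, plus the common rule of thumb from the Ceph placement-group documentation, (OSDs × 100) / replicas rounded up to a power of two, as a starting point for a total PG count. Both functions are hypothetical helpers; pg_num is raised per pool with ceph osd pool set <pool> pg_num <n> followed by the same for pgp_num:

```shell
#!/usr/bin/env bash
# PGs per OSD as in the warning above: total PGs / OSDs (integer division).
pgs_per_osd() {
  echo $(( $1 / $2 ))
}

# Rule of thumb: (OSDs * 100) / replicas, rounded up to the next power of two.
# A starting point for the total PG count, not a hard rule.
suggest_pg_total() {
  local target=$(( $1 * 100 / $2 )) pg=1
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}

# Usage: pgs_per_osd 192 12; suggest_pg_total 12 2
```

For this cluster (12 OSDs, osd pool default size = 2) the rule of thumb gives 600, rounded up to 1024 PGs in total across the pools.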

3.2 Create an MDS on bgw-os-node152

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/mds/ceph-bgw-os-node152

[root@bgw-os-node152 ~]# mkdir -p /var/lib/ceph/bootstrap-mds/

[root@bgw-os-node151 ~]# scp /var/lib/ceph/bootstrap-mds/ceph.keyring bgw-os-node152:/var/lib/ceph/bootstrap-mds/

[root@bgw-os-node151 ~]# scp /root/ceph.bootstrap-mds.keyring bgw-os-node152:/root

[root@bgw-os-node151 ~]# scp /var/lib/ceph/mds/ceph-bgw-os-node151/sysvinit bgw-os-node152:/var/lib/ceph/mds/ceph-bgw-os-node152/

[root@bgw-os-node152 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.bgw-os-node152 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-bgw-os-node152/keyring

[root@bgw-os-node152 ~]# touch /var/lib/ceph/mds/ceph-bgw-os-node152/done

[root@bgw-os-node152 ~]# service ceph start mds.bgw-os-node152

[root@bgw-os-node152 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_WARN too few pgs per osd (16 < min 20); clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

mdsmap e5: 1/1/1 up {0=bgw-os-node151=up:active}, 1 up:standby    # note: the new MDS joins as a standby

osdmap e81: 12 osds: 12 up, 12 in

pgmap v229: 192 pgs, 3 pools, 1884 bytes data, 20 objects

12702 MB used, 3338 GB / 3350 GB avail

192 active+clean

3.3 Create an MDS on bgw-os-node153

[root@bgw-os-node153 ~]# mkdir -p /var/lib/ceph/mds/ceph-bgw-os-node153

[root@bgw-os-node153 ~]# mkdir -p /var/lib/ceph/bootstrap-mds/

[root@bgw-os-node151 ~]# scp /var/lib/ceph/bootstrap-mds/ceph.keyring bgw-os-node153:/var/lib/ceph/bootstrap-mds/

[root@bgw-os-node151 ~]# scp /root/ceph.bootstrap-mds.keyring bgw-os-node153:/root

[root@bgw-os-node151 ~]# scp /var/lib/ceph/mds/ceph-bgw-os-node151/sysvinit bgw-os-node153:/var/lib/ceph/mds/ceph-bgw-os-node153/

[root@bgw-os-node153 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.bgw-os-node153 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-bgw-os-node153/keyring

[root@bgw-os-node153 ~]# touch /var/lib/ceph/mds/ceph-bgw-os-node153/done

[root@bgw-os-node153 ~]# service ceph start mds.bgw-os-node153

=== mds.bgw-os-node153 ===

Starting Ceph mds.bgw-os-node153 on bgw-os-node153...

starting mds.bgw-os-node153 at :/0

[root@bgw-os-node153 ~]# ceph -s

cluster 0071bd6f-849c-433a-8051-2e553df49aea

health HEALTH_WARN too few pgs per osd (16 < min 20); clock skew detected on mon.bgw-os-node153

monmap e2: 3 mons at {bgw-os-node151=10.240.216.151:6789/0,bgw-os-node152=10.240.216.152:6789/0,bgw-os-node153=10.240.216.153:6789/0}, election epoch 8, quorum 0,1,2 bgw-os-node151,bgw-os-node152,bgw-os-node153

mdsmap e8: 1/1/1 up {0=bgw-os-node151=up:active}, 2 up:standby    # note: two standby MDS daemons now

osdmap e81: 12 osds: 12 up, 12 in

pgmap v229: 192 pgs, 3 pools, 1884 bytes data, 20 objects

12702 MB used, 3338 GB / 3350 GB avail

192 active+clean
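With three MDS daemons, the mdsmap line should show one active and two standby. A sketch that extracts the standby count from a ceph -s mdsmap line in the format shown above; `standby_mds_count` is a hypothetical helper and prints 0 when no standby is listed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ceph -s" output on stdin and print the number
# of standby MDS daemons from the mdsmap line ("..., 2 up:standby" -> 2).
standby_mds_count() {
  sed -n 's/.*mdsmap.*[ ,]\([0-9][0-9]*\) *up:standby.*/\1/p' | head -n 1 | grep . || echo 0
}

# Usage: ceph -s | standby_mds_count
```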

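4. Add CRUSH rules

Run the following commands on bgw-os-node151. The new rule places each replica in a different rack. Setting crush_ruleset 1 assumes the new rule was assigned ruleset ID 1, and the images, volumes and compute pools must already exist (they are not created in this guide).

[root@bgw-os-node151 ~]# ceph osd crush rule create-simple jiayuan-replicated-ruleset default rack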

[root@bgw-os-node151 ~]# ceph osd pool set data crush_ruleset 1

[root@bgw-os-node151 ~]# ceph osd pool set metadata crush_ruleset 1

[root@bgw-os-node151 ~]# ceph osd pool set rbd crush_ruleset 1

[root@bgw-os-node151 ~]# ceph osd pool set images crush_ruleset 1

[root@bgw-os-node151 ~]# ceph osd pool set volumes crush_ruleset 1

[root@bgw-os-node151 ~]# ceph osd pool set compute crush_ruleset 1
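The per-pool commands above can be written as a loop. A sketch assuming ruleset ID 1 as in the commands above (override with RULESET; the actual ID can be checked with ceph osd crush rule dump). `run` and `set_pool_rulesets` are hypothetical helpers, and DRY_RUN=1 only prints the commands:

```shell
#!/usr/bin/env bash
# Execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: point every pool given as an argument at one ruleset.
set_pool_rulesets() {
  local ruleset=${RULESET:-1} pool
  for pool in "$@"; do
    run ceph osd pool set "$pool" crush_ruleset "$ruleset"
  done
}

# Usage: set_pool_rulesets data metadata rbd images volumes compute
```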