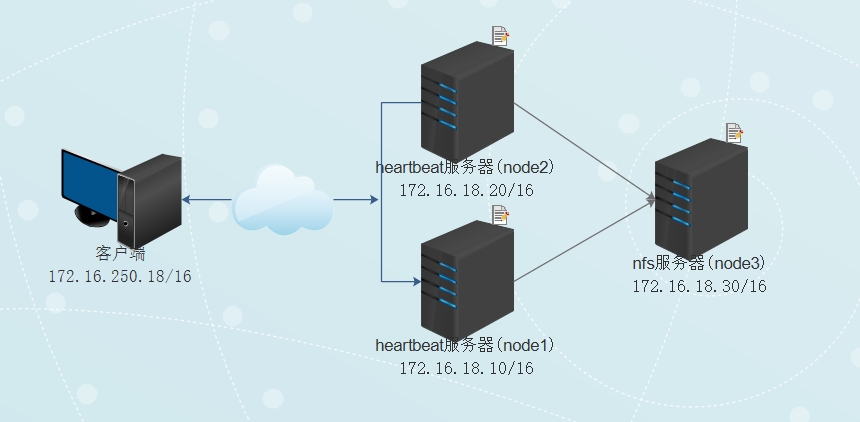

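Building a High-Availability NFS Service with Heartbeat

Basic environment preparation:

[root@localhost ~]# vim /etc/sysconfig/network (set the hostname)

NETWORKING=yes

HOSTNAME=node1.dragon.com (set this to node1, node2, or node3 on each host)

[root@localhost ~]# vim /etc/hosts (edit the hosts file so the names resolve to node1, node2, and node3)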

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

127.0.0.1 node1.dragon.com node1 (set this to node1, node2, or node3 on each host)

172.16.18.10 node1.dragon.com node1

172.16.18.20 node2.dragon.com node2

172.16.18.30 node3.dragon.com node3

[root@localhost ~]# scp /etc/hosts node2:/etc/ (copy the file to node2 and node3)

[root@localhost ~]# scp /etc/hosts node3:/etc/

[root@localhost ~]# scp /etc/sysconfig/network node2:/etc/sysconfig/

[root@localhost ~]# scp /etc/sysconfig/network node3:/etc/sysconfig/

[root@localhost ~]# init 6 (after changing the hostnames on all three servers, remember to reboot)
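Rather than editing /etc/hosts by hand on every node, the three cluster entries can be appended with a small shell function. This is a minimal sketch, assuming the addresses and names used in this article; `add_cluster_hosts` is a hypothetical name, and it only appends lines that are not already present verbatim, so running it twice is harmless.

```shell
#!/bin/sh
# Hypothetical helper: append this article's cluster entries to a hosts
# file, skipping any line that is already present verbatim.
# Usage: add_cluster_hosts [HOSTS_FILE]   (default: /etc/hosts)
add_cluster_hosts() (
    hosts_file=${1:-/etc/hosts}
    for entry in \
        "172.16.18.10 node1.dragon.com node1" \
        "172.16.18.20 node2.dragon.com node2" \
        "172.16.18.30 node3.dragon.com node3"
    do
        # -x: whole-line match, -F: fixed string (dots are literal).
        if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
            printf '%s\n' "$entry" >> "$hosts_file"
        fi
    done
)
```

Run it as root on each node (`add_cluster_hosts`), then add the per-host 127.0.0.1 line by hand as shown above.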

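Set up SSH keys:

This makes it easy to transfer files between the servers. Here only node1 copies its public key to node2 and node3; if node2 also needs to copy files to node1, repeat the same steps on node2.

[root@node1 ~]# ssh-keygen -t rsa (generate a key pair with an empty passphrase)

[root@node1 ~]# ssh-copy-id node2 (copy the public key to node2)

[root@node1 ~]# ssh-copy-id node3 (copy the public key to node3)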
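The two `ssh-copy-id` calls generalize to a loop over any number of nodes. A minimal sketch, assuming the default key path `~/.ssh/id_rsa`; `push_ssh_keys` and its `DRY_RUN` switch are hypothetical. `-N ''` gives `ssh-keygen` an empty passphrase, so no prompt appears.

```shell
#!/bin/sh
# Hypothetical helper: make sure an RSA key pair exists, then copy the
# public key to every node given as an argument.
# Set DRY_RUN=1 to print the commands instead of running them.
push_ssh_keys() (
    key="$HOME/.ssh/id_rsa"
    run() {
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "$*"
        else
            "$@"
        fi
    }
    # -N '' sets an empty passphrase; skipped if a key already exists.
    [ -f "$key" ] || run ssh-keygen -t rsa -N '' -f "$key"
    for node in "$@"; do
        run ssh-copy-id "$node"
    done
)
```

On node1, `push_ssh_keys node2 node3` reproduces the steps above, and `DRY_RUN=1 push_ssh_keys node2 node3` only prints the commands.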

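Install the required packages

(run on both node1 and node2)

[root@node1 yum.repos.d]# yum -y groupinstall "development tools" "platform development" (install the development tools and platform development groups on all three servers)

[root@node1 ~]# yum install net-snmp-libs libnet PyXML perl-TimeDate (install the dependencies on node1 and node2)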

[root@node1 heartbeat2]# cd heartbeat2/

[root@node1 heartbeat2]# ll (the packages can be downloaded from the official site)

-rw-r--r-- 1 root root 1420924 Sep 10 2013 heartbeat-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 3589552 Sep 10 2013 heartbeat-debuginfo-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 282836 Sep 10 2013 heartbeat-devel-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 168052 Sep 10 2013 heartbeat-gui-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 108932 Sep 10 2013 heartbeat-ldirectord-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 92388 Sep 10 2013 heartbeat-pils-2.1.4-12.el6.x86_64.rpm

-rw-r--r-- 1 root root 166580 Sep 10 2013 heartbeat-stonith-2.1.4-12.el6.x86_64.rpm

[root@node1 heartbeat2]# rpm -ivh heartbeat-pils-2.1.4-12.el6.x86_64.rpm heartbeat-stonith-2.1.4-12.el6.x86_64.rpm heartbeat-2.1.4-12.el6.x86_64.rpm

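Set up time synchronization

[root@node1 yum.repos.d]# date; ssh node2 'date' (check the time on both nodes)

[root@node1 yum.repos.d]# ntpdate 172.16.0.1; ssh node2 'ntpdate 172.16.0.1' (my time server is 172.16.0.1; you can also use a public NTP server found online)

[root@node2 heartbeat2]# which ntpdate (find the full path of the command for use in the cron job)

/usr/sbin/ntpdate

[root@node1 yum.repos.d]# crontab -e (run on both nodes: add a scheduled job that syncs the clock every three minutes)

*/3 * * * * /usr/sbin/ntpdate 172.16.0.1 >/dev/null 2>&1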
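Running `crontab -e` by hand on each node is easy to get wrong. A minimal sketch of a scripted alternative, assuming the same time server; `add_ntp_job` is a hypothetical filter. It reads the current crontab on stdin and prints it with the ntpdate job appended only if that exact line is missing. It uses the portable `>/dev/null 2>&1` redirection because cron runs jobs with /bin/sh.

```shell
#!/bin/sh
# Cron job from the article, with portable redirection for /bin/sh.
NTP_JOB='*/3 * * * * /usr/sbin/ntpdate 172.16.0.1 >/dev/null 2>&1'

# Hypothetical filter: copy a crontab from stdin to stdout and append
# NTP_JOB unless an identical line is already there.
add_ntp_job() (
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    if ! printf '%s\n' "$current" | grep -qxF -e "$NTP_JOB"; then
        printf '%s\n' "$NTP_JOB"
    fi
)
```

On each node: `crontab -l 2>/dev/null | add_ntp_job | crontab -`. Running it again leaves the crontab unchanged.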

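Configure heartbeat

[root@node1 yum.repos.d]# cd /usr/share/doc/heartbeat-2.1.4/

[root@node1 heartbeat-2.1.4]# cp -p authkeys haresources ha.cf /etc/ha.d/ (copy the sample files, preserving their attributes)

ha.cf: heartbeat's main configuration file;

authkeys: the authentication algorithm and key used to sign cluster messages;

haresources: the CRM configuration interface of heartbeat v1 (resource definitions);

[root@node1 heartbeat-2.1.4]# cd /etc/ha.d/

[root@node1 ha.d]# chmod 600 authkeys

[root@node1 ha.d]# vim ha.cf (edit the main configuration file)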

# File to write other messages to

logfile /var/log/ha-log

# Facility to use for syslog()/logger

#logfacility local0

……………..

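mcast eth0 225.0.0.14 694 1 0 (heartbeat messages are exchanged via multicast. The fields are: interface, multicast group, port, TTL of the multicast packets, and loop. Any group address in the range 224.0.2.0 to 238.255.255.255 will do. A TTL of 1 means the packets reach the other hosts directly and are not routed further; a loop value of 0 stops the node from receiving its own multicast packets over loopback.)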

# Set up a unicast / udp heartbeat medium

……….

# (note: auto_failback can be any boolean or "legacy")

auto_failback on (already enabled in the sample file; when the primary node recovers, resources automatically move back to it)

……….

# Tell what machines are in the cluster

# node nodename ... -- must match uname -n

#node ken3

#node kathy

node node1.dragon.com (define the cluster nodes; each name must match the output of uname -n on that host)

node node2.dragon.com

……….

# note: don't use a cluster node as ping node

#ping 10.10.10.254

ping 172.16.18.30 (define a ping node used as a quorum reference: if it answers pings, the node's network is considered healthy)

……….

# library in the system.

compression bz2 (compression method)

# Configure compression threshold

compression_threshold 2 (messages smaller than 2 KB are not compressed)
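The multicast range and the directives above can also be captured in a script. A minimal sketch under stated assumptions: `valid_mcast_group` and `write_ha_cf` are hypothetical helpers, the values are the ones from this article, and the generated file replaces the sample ha.cf instead of editing it, so every directive not listed keeps heartbeat's built-in default. Compare the result with the sample file before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 is a dotted IPv4 address in the range
# 224.0.2.0 through 238.255.255.255 recommended above for the mcast group.
valid_mcast_group() (
    # Four decimal octets without leading zeros.
    printf '%s\n' "$1" |
        grep -Eq '^(0|[1-9][0-9]{0,2})(\.(0|[1-9][0-9]{0,2})){3}$' || exit 1
    IFS=.
    set -- $1
    for octet in "$@"; do
        [ "$octet" -le 255 ] || exit 1
    done
    [ "$1" -ge 224 ] && [ "$1" -le 238 ] || exit 1
    # 224.0.0.0 to 224.0.1.255 lies below the recommended range.
    if [ "$1" -eq 224 ] && [ "$2" -eq 0 ] && [ "$3" -lt 2 ]; then
        exit 1
    fi
)

# Hypothetical helper: write a minimal ha.cf holding only the directives
# changed in this article; everything else keeps heartbeat's defaults.
# Usage: write_ha_cf [FILE] [MCAST_GROUP]
write_ha_cf() (
    file=${1:-/etc/ha.d/ha.cf}
    group=${2:-225.0.0.14}
    if ! valid_mcast_group "$group"; then
        echo "write_ha_cf: invalid multicast group: $group" >&2
        exit 1
    fi
    cat > "$file" <<EOF
logfile /var/log/ha-log
# interface, multicast group, port, TTL, loop
mcast eth0 $group 694 1 0
auto_failback on
node node1.dragon.com
node node2.dragon.com
ping 172.16.18.30
compression bz2
compression_threshold 2
EOF
)
```

The group is checked before the file is touched, so a typo such as a unicast address leaves an existing ha.cf intact.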

[root@node1 ~]# openssl rand -hex 6 (generate a random string to use as the key)

febe06c057d0

[root@node1 ha.d]# vim authkeys (authentication algorithm and key for cluster messages)

#auth 1

#1 crc

#2 sha1 HI!

#3 md5 Hello!

auth 1

1 sha1 febe06c057d0
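The key generation and the authkeys edit can be combined into one step. A minimal sketch, assuming SHA1 as chosen above; `write_authkeys` is a hypothetical helper. It prefers `openssl rand -hex 6` as in the article, falls back to reading 6 bytes from /dev/urandom with `od` if openssl is missing, and then sets mode 600 as in the earlier `chmod` step.

```shell
#!/bin/sh
# Hypothetical helper: write an authkeys file that uses SHA1 with a fresh
# random key, readable only by its owner (mode 600).
# Usage: write_authkeys [FILE]   (default /etc/ha.d/authkeys)
write_authkeys() (
    file=${1:-/etc/ha.d/authkeys}
    if command -v openssl >/dev/null 2>&1; then
        key=$(openssl rand -hex 6)
    else
        # Fallback: 6 random bytes printed as 12 hex digits.
        key=$(od -An -N6 -tx1 /dev/urandom | tr -d ' \n')
    fi
    # Restrictive umask so a newly created file is never world-readable.
    umask 077
    printf 'auth 1\n1 sha1 %s\n' "$key" > "$file"
    chmod 600 "$file"
)
```

Generate the file once on node1 and copy it to node2, since both nodes must share the same key.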

[root@node1 ha.d]# vim haresources (define the primary node and the resources heartbeat manages)

# They must match the names of the nodes listed in ha.cf, which in turn

# must match the `uname -n` of some node in the cluster. So they aren't

# virtual in any sense of the word.

node1.dragon.com 172.16.18.51/16/eth0/172.16.255.255 httpd (primary node, VIP (virtual IP) given as address/prefix/interface/broadcast, and the service to start)
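The VIP field `172.16.18.51/16/eth0/172.16.255.255` reads as address/prefix length/interface/broadcast. The broadcast address is the VIP with all host bits set to 1: with a /16 prefix the first two octets stay and the last two become 255, which gives 172.16.255.255. A small sketch of that arithmetic; `broadcast_addr` is a hypothetical helper and assumes the shell's arithmetic is at least 64 bits wide, as in bash and dash.

```shell
#!/bin/sh
# Hypothetical helper: print the broadcast address of ADDR/PREFIX by
# setting the low (32 - PREFIX) host bits of ADDR to 1.
# Usage: broadcast_addr ADDR PREFIX
broadcast_addr() (
    prefix=$2
    IFS=.
    set -- $1            # split the dotted address into four octets
    ip=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    host_bits=$(( (1 << (32 - prefix)) - 1 ))
    bc=$(( ip | host_bits ))
    printf '%d.%d.%d.%d\n' \
        $(( (bc >> 24) & 255 )) $(( (bc >> 16) & 255 )) \
        $(( (bc >> 8) & 255 )) $(( bc & 255 ))
)
```

For example, `broadcast_addr 172.16.18.51 16` prints 172.16.255.255, matching the haresources line above.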

[root@node1 ha.d]# scp -p authkeys haresources ha.cf node2:/etc/ha.d/ (keep the configuration on node1 and node2 identical)
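ha.cf requires each `node` name to match `uname -n` on that host, so a quick check on each node before starting the service can save a confusing failure. A minimal sketch; `check_node_name` is a hypothetical helper, and its optional second argument exists only so the check can be tried against another name.

```shell
#!/bin/sh
# Hypothetical pre-flight check: succeed only if this host's name
# (uname -n by default) appears on a "node" line in ha.cf.
# Usage: check_node_name [HA_CF] [HOSTNAME]
check_node_name() (
    ha_cf=${1:-/etc/ha.d/ha.cf}
    me=${2:-$(uname -n)}
    # A node directive may list several names: "node a b c".
    # Commented lines start with "#node" and therefore do not match.
    awk -v me="$me" '
        $1 == "node" { for (i = 2; i <= NF; i++) if ($i == me) found = 1 }
        END { exit !found }
    ' "$ha_cf"
)
```

For example, `check_node_name || echo "uname -n does not match ha.cf"` on node1 and node2.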

[root@node1 heartbeat2]# service heartbeat start; ssh node2 'service heartbeat start' (start heartbeat on both nodes; heartbeat starts httpd automatically)

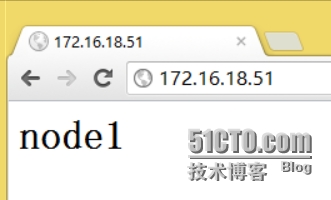

[root@node1 ha.d]# vim /var/www/html/index.html

<h1>node1</h1>

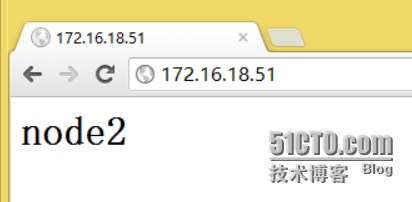

[root@node2 heartbeat2]# vim /var/www/html/index.html

<h1>node2</h1>

Browse to the virtual IP: the page shows that the VIP is on node1.

[root@node1 ha.d]# service heartbeat stop (browse to 172.16.18.51 again: node2 now serves the page)

[root@node1 ha.d]# service heartbeat start

[root@node1 ha.d]# cd /usr/lib64/heartbeat/

[root@node1 heartbeat]# ./hb_standby (alternatively, run this to make the current node the standby)

[root@node1 heartbeat]# ./hb_takeover (or run this to make the current node take over as primary)
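To watch the switchover while running `hb_standby`, `hb_takeover`, or stopping heartbeat, you can poll the VIP from a third machine and print which test page answers. A minimal sketch, assuming curl is installed and the pages contain the `<h1>` headings created above; `page_owner` and `watch_vip` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical filter: print the text of the first <h1>...</h1> found on
# stdin (each test page in this article holds a single heading).
page_owner() {
    sed -n 's:.*<h1>\(.*\)</h1>.*:\1:p' | head -n 1
}

# Hypothetical loop: fetch the page behind the VIP once per second and
# print the time plus its heading, or "no answer" if none came back.
# Usage: watch_vip 172.16.18.51   (stop with Ctrl-C)
watch_vip() (
    while :; do
        owner=$(curl -s --max-time 2 "http://$1/" | page_owner)
        printf '%s %s\n' "$(date +%T)" "${owner:-no answer}"
        sleep 1
    done
)
```

Running `watch_vip 172.16.18.51` on node3 during a failover should show the heading change from node1 to node2, with a short "no answer" gap while the VIP moves.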

添加NFS服务器

[root@node3 yum.repos.d]# mkdir /web/htdocs�Cpv

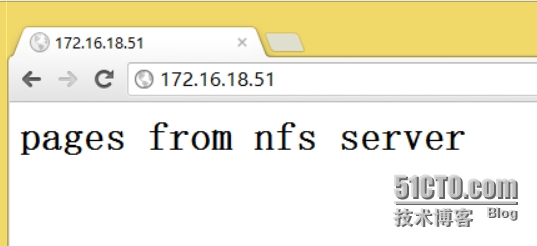

[root@node3 yum.repos.d]# vim/web/htdocs/index.html

<h1>pages from nfsserver</h1>

[root@node3 yum.repos.d]# vim /etc/exports (export /web/htdocs)

/web/htdocs 172.16.0.0/16(rw,no_root_squash)

[root@node3 yum.repos.d]# service nfs start (start the NFS service)

[root@node3 yum.repos.d]# chkconfig nfs on (start the service at boot)

[root@node3 yum.repos.d]# chkconfig --list nfs (verify the boot setting)
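Appending to /etc/exports by script is handy when several directories are shared. A minimal sketch; `add_export` is a hypothetical helper that skips the directory if it is already exported in the file. Note there is no space between the client and its option list: with a space there, the options would apply to every host while the named network would get the defaults.

```shell
#!/bin/sh
# Hypothetical helper: append "DIR CLIENT(OPTIONS)" to an exports file
# unless DIR is already the first field of some line in it.
# Usage: add_export FILE DIR 'CLIENT(OPTIONS)'
add_export() (
    file=$1 dir=$2 spec=$3
    if ! awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' \
            "$file" 2>/dev/null; then
        printf '%s %s\n' "$dir" "$spec" >> "$file"
    fi
)
```

For example, `add_export /etc/exports /web/htdocs '172.16.0.0/16(rw,no_root_squash)' && exportfs -ra`, where `exportfs -ra` re-exports all entries without restarting the NFS service.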

[root@node1 heartbeat]# cd /etc/ha.d/

[root@node1 ha.d]# service heartbeat stop; ssh node2 'service heartbeat stop' (stop heartbeat on both nodes first)

[root@node1 ha.d]# mount -t nfs 172.16.18.30:/web/htdocs /mnt (test-mount the share; ideally try this on both nodes)

[root@node1 ha.d]# mount (check whether the mount succeeded)

[root@node1 ha.d]# umount /mnt/ (unmount once the test succeeds)
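The test mount above is checked by reading the `mount` output. A scripted check can read the kernel's mount table instead. A minimal sketch for Linux, where /proc/mounts lists the device, mount point, and type in its first three fields; `is_nfs_mounted` is a hypothetical helper. It accepts both `nfs` and `nfs4`, because NFSv4 mounts usually appear as `nfs4` in /proc/mounts even when `mount` prints `type nfs`, and it ignores a trailing slash on the device.

```shell
#!/bin/sh
# Hypothetical check: succeed if DEVICE is mounted on MOUNTPOINT with an
# NFS type, according to a mount table in /proc/mounts format.
# Usage: is_nfs_mounted DEVICE MOUNTPOINT [TABLE]   (default /proc/mounts)
is_nfs_mounted() (
    table=${3:-/proc/mounts}
    # Fields: device, mount point, type, options, dump, pass.
    awk -v dev="$1" -v mnt="$2" '
        function trim(p) { sub(/\/+$/, "", p); return p }
        trim($1) == trim(dev) && $2 == mnt && $3 ~ /^nfs4?$/ { found = 1 }
        END { exit !found }
    ' "$table"
)
```

For example, `is_nfs_mounted 172.16.18.30:/web/htdocs /mnt && umount /mnt` on node1 after the test mount.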

[root@node1 ha.d]# vim haresources

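#node1.dragon.com 172.16.18.51/16/eth0/172.16.255.255 httpd (comment out the previous configuration)

node1.dragon.com 172.16.18.51/16/eth0/172.16.255.255 Filesystem::172.16.18.30:/web/htdocs::/var/www/html::nfs httpd

Field by field: node1.dragon.com is the default primary node; 172.16.18.51/16/eth0/172.16.255.255 is the VIP; Filesystem is the resource agent that mounts the file system; 172.16.18.30:/web/htdocs is the device to mount (here the NFS share; for a local device, write its local path instead); /var/www/html is the mount point; nfs is the file system type; httpd is the service to start.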
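Because the line packs many fields separated by spaces and `::`, it can help to assemble it from its parts. A minimal sketch; `haresources_line` is a hypothetical helper. heartbeat v1 starts the resources on a haresources line from left to right and stops them in reverse, so this ordering brings up the VIP and the NFS mount before httpd.

```shell
#!/bin/sh
# Hypothetical helper: print a heartbeat v1 haresources line of the form
#   NODE VIP Filesystem::DEVICE::MOUNTPOINT::FSTYPE SERVICE
# Resources are started left to right and stopped in reverse order.
haresources_line() {
    if [ "$#" -ne 6 ]; then
        echo "usage: haresources_line NODE VIP DEVICE MOUNTPOINT FSTYPE SERVICE" >&2
        return 1
    fi
    printf '%s %s Filesystem::%s::%s::%s %s\n' "$1" "$2" "$3" "$4" "$5" "$6"
}
```

With the values from this article (`node1.dragon.com`, `172.16.18.51/16/eth0/172.16.255.255`, `172.16.18.30:/web/htdocs`, `/var/www/html`, `nfs`, `httpd`) it prints the same line as the configuration above.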

[root@node1 ha.d]# scp haresources node2:/etc/ha.d/ (copy it to node2)

[root@node1 ha.d]# service heartbeat restart; ssh node2 'service heartbeat restart' (start the service)

[root@node1 ha.d]# mount (after a few seconds, the share shows up as mounted)

172.16.18.30:/web/htdocs on /var/www/html type nfs (rw,vers=4,addr=172.16.18.30,clientaddr=172.16.18.10)

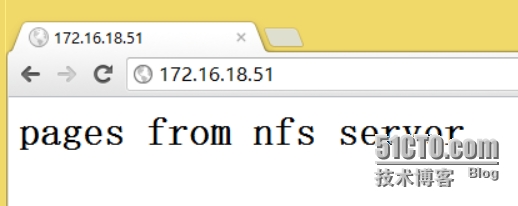

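Browse to 172.16.18.51 (the virtual IP) again, and the page from the NFS server is displayed.

[root@node1 ha.d]# service heartbeat stop (stop heartbeat on the primary node)

[root@node2 ha.d]# mount (the share is now mounted on the standby node)

172.16.18.30:/web/htdocs on /var/www/html type nfs (rw,vers=4,addr=172.16.18.30,clientaddr=172.16.18.20)

The site is still reachable!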