如何采用Python zabbix_api 获取性能数据

如何采用Python zabbix_api 获取性能数据

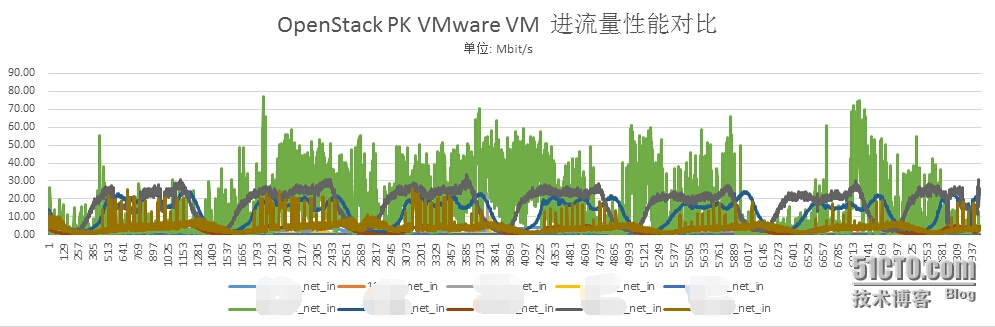

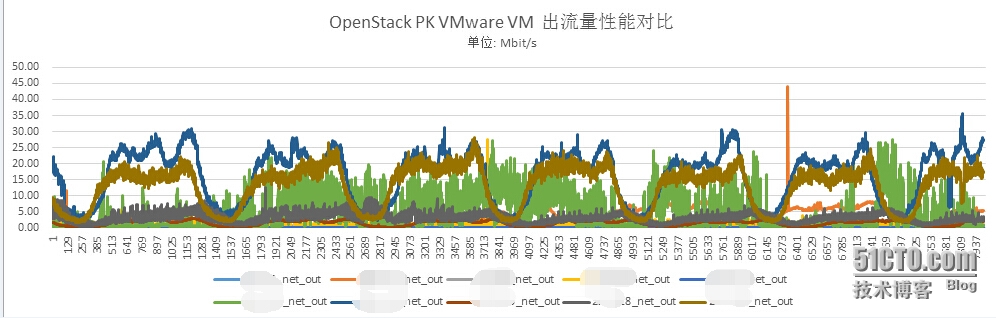

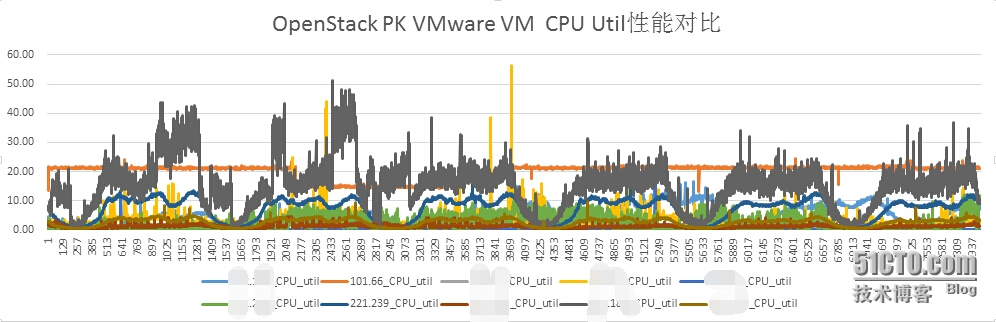

最近领导需要一份数据,OpenStack ,VMware,物理机之间的性能报告,在撰写报告之前需要数据支撑,我们采用的是zabbix 监控,需要采取一周内的历史数据作为对比,那数据如何获取,请看以下章节

一:安装zabbix api 接口,修改zabbix api 调用接口,获取数据

*****************************************************************************************

easy_install zabbix_api

*****************************************************************************************

#!/usr/bin/python

# The research leading to these results has received funding from the

# European Commission's Seventh Framework Programme (FP7/2007-13)

# under grant agreement no 257386.

# http://www.bonfire-project.eu/

# Copyright 2012 Yahya Al-Hazmi, TU Berlin

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License

# this script fetches resource monitoring information from Zabbix-Server

# through Zabbix-API

#

# To run this script you need to install python-argparse "apt-get install python-argparse"

from zabbix_api import ZabbixAPI

import sys

import datetime

import time

import argparse

def fetch_to_csv(username,password,server,hostname,key,output,datetime1,datetime2,debuglevel):

zapi = ZabbixAPI(server=server, log_level=debuglevel)

try:

zapi.login(username, password)

except:

print "zabbix server is not reachable: %s" % (server)

sys.exit()

host = zapi.host.get({"filter":{"host":hostname}, "output":"extend"})

if(len(host)==0):

print "hostname: %s not found in zabbix server: %s, exit" % (hostname,server)

sys.exit()

else:

hostid=host[0]["hostid"]

print '*' * 100

print key

print '*' * 100

if(key==""):

print '*' * 100

items = zapi.item.get({"filter":{"hostid":hostid} , "output":"extend"})

if(len(items)==0):

print "there is no item in hostname: %s, exit" % (hostname)

sys.exit()

dict={}

for item in items:

dict[str(item['itemid'])]=item['key_']

if (output == ''):

output=hostname+".csv"

f = open(output, 'w')

str1="#key;timestamp;value\n"

if (datetime1=='' and datetime2==''):

for itemid in items:

itemidNr=itemid["itemid"]

str1=str1+itemid["key_"]+";"+itemid["lastclock"]+";"+itemid["lastvalue"]+"\n"

f.write(str1)

print "Only the last value from each key has been fetched, specify t1 or t1 and t2 to fetch more data"

f.close()

elif (datetime1!='' and datetime2==''):

try:

d1=datetime.datetime.strptime(datetime1,'%Y-%m-%d %H:%M:%S')

except:

print "time data %s does not match format Y-m-d H:M:S, exit" % (datetime1)

sys.exit()

timestamp1=time.mktime(d1.timetuple())

timestamp2=int(round(time.time()))

inc=0

history = zapi.history.get({"hostids":[hostid,],"time_from":timestamp1,"time_till":timestamp2, "output":"extend" })

for h in history:

str1=str1+dict[h["itemid"]]+";"+h["clock"]+";"+h["value"]+"\n"

inc=inc+1

f.write(str1)

f.close()

print str(inc) +" records has been fetched and saved into: " + output

elif (datetime1=='' and datetime2!=''):

for itemid in items:

itemidNr=itemid["itemid"]

str1=str1+itemid["key_"]+";"+itemid["lastclock"]+";"+itemid["lastvalue"]+"\n"

f.write(str1)

print "Only the last value from each key has been fetched, specify t1 or t1 and t2 to fetch more data"

f.close()

else:

try:

d1=datetime.datetime.strptime(datetime1,'%Y-%m-%d %H:%M:%S')

except:

print "time data %s does not match format Y-m-d H:M:S, exit" % (datetime1)

sys.exit()

try:

d2=datetime.datetime.strptime(datetime2,'%Y-%m-%d %H:%M:%S')

except:

print "time data %s does not match format Y-m-d H:M:S, exit" % (datetime2)

sys.exit()

timestamp1=time.mktime(d1.timetuple())

timestamp2=time.mktime(d2.timetuple())

inc=0

history = zapi.history.get({"hostids":[hostid,],"time_from":timestamp1,"time_till":timestamp2, "output":"extend" })

for h in history:

str1=str1+dict[h["itemid"]]+";"+h["clock"]+";"+h["value"]+"\n"

inc=inc+1

f.write(str1)

f.close()

print str(inc) +" records has been fetched and saved into: " + output

else:

#print "key is: %s" %(key)

itemid = zapi.item.get({"filter":{"key_":key, "hostid":hostid} , "output":"extend"})

if(len(itemid)==0):

print "item key: %s not found in hostname: %s" % (key,hostname)

sys.exit()

itemidNr=itemid[0]["itemid"]

if (output == ''):

output=hostname+".csv"

f = open(output, 'w')

str1="#key;timestamp;value\n"

if (datetime1=='' and datetime2==''):

str1=str1+key+";"+itemid[0]["lastclock"]+";"+itemid[0]["lastvalue"]+"\n"

#f.write(str1)

f.write(str1)

f.close()

print "Only the last value has been fetched, specify t1 or t1 and t2 to fetch more data"

elif (datetime1!='' and datetime2==''):

d1=datetime.datetime.strptime(datetime1,'%Y-%m-%d %H:%M:%S')

timestamp1=time.mktime(d1.timetuple())

timestamp2=int(round(time.time()))

history = zapi.history.get({"history":itemid[0]["value_type"],"time_from":timestamp1,"time_till":timestamp2, "itemids":[itemidNr,], "output":"extend" })

inc=0

for h in history:

str1 = str1 + key + ";" + h["clock"] +";"+h["value"] + "\n"

inc=inc+1

f.write(str1)

f.close()

print str(inc) +" records has been fetched and saved into: " + output

elif (datetime1=='' and datetime2!=''):

str1=str1+key+";"+itemid[0]["lastclock"]+";"+itemid[0]["lastvalue"]+"\n"

f.write(str1)

f.close()

print "Only the last value has been fetched, specify t1 or t1 and t2 to fetch more data"

else:

d1=datetime.datetime.strptime(datetime1,'%Y-%m-%d %H:%M:%S')

d2=datetime.datetime.strptime(datetime2,'%Y-%m-%d %H:%M:%S')

timestamp1=time.mktime(d1.timetuple())

timestamp2=time.mktime(d2.timetuple())

history = zapi.history.get({"history":itemid[0]["value_type"],"time_from":timestamp1,"time_till":timestamp2, "itemids":[itemidNr,], "output":"extend" })

inc=0

for h in history:

str1 = str1 + key + ";" + h["clock"] +";"+h["value"] + "\n"

inc=inc+1

print str(inc) +" records has been fetched and saved into: " + output

f.write(str1)

f.close()

parser = argparse.ArgumentParser(description='Fetch history from aggregator and save it into CSV file')

parser.add_argument('-s', dest='server_IP', required=True,

help='aggregator IP address')

parser.add_argument('-n', dest='hostname', required=True,

help='name of the monitored host')

parser.add_argument('-k', dest='key',default='',

help='zabbix item key, if not specified the script will fetch all keys for the specified hostname')

parser.add_argument('-u', dest='username', default='Admin',

help='zabbix username, default Admin')

parser.add_argument('-p', dest='password', default='zabbix',

help='zabbix password')

parser.add_argument('-o', dest='output', default='',

help='output file name, default hostname.csv')

parser.add_argument('-t1', dest='datetime1', default='',

help='begin date-time, use this pattern \'2011-11-08 14:49:43\' if only t1 specified then time period will be t1-now ')

parser.add_argument('-t2', dest='datetime2', default='',

help='end date-time, use this pattern \'2011-11-08 14:49:43\'')

parser.add_argument('-v', dest='debuglevel', default=0, type=int,

help='log level, default 0')

args = parser.parse_args()

fetch_to_csv(args.username, args.password, "http://"+args.server_IP+"/zabbix", args.hostname, args.key, args.output, args.datetime1,args.datetime2,args.debuglevel)

二:撰写通过key获取一周内的数据

items : 在zabbix 中搜索主机,选择最新数据,找到项目(items),点击进入,能看到机器的所有keys,在负载到程序的items 字典中,程序会循环读取items ,获取数据

#/usr/bin/env python

#-*-coding:UTF-8

"""

wget http://doc.bonfire-project.eu/R4.1/_static/scripts/fetch_items_to_csv.py

"""

import os,sys,time

users=u'admin'

pawd = 'zabbix'

exc_py = '/data/zabbix/fetch_items_to_csv.py'

os.system('easy_install zabbix_api')

os.system('mkdir -p /data/zabbix/cvs/')

if not os.path.exists(exc_py):

os.system("mkdir -p /data")

os.system("wget http://doc.bonfire-project.eu/R4.1/_static/scripts/fetch_items_to_csv.py -O /data/zabbix/fetch_items_to_csv.py")

def show_items(moniter, dip):

items = dict()

items['io_read_win'] = "perf_counter[\\2\\16]"

items['io_write_win'] = "perf_counter[\\2\\18]"

items['io_read_lin'] = "iostat[,rkB/s]"

items['io_write_lin'] = "iostat[,wkB/s]"

items['io_read_lin_sda'] = "iostat[sda,rkB/s]"

items['io_write_lin_sda'] = "iostat[sda,wkB/s]"

items['io_read_lin_sdb'] = "iostat[sdb,rkB/s]"

items['io_write_lin_sdb'] = "iostat[sdb,wkB/s]"

# Add items, iostate vdb,vdb

items['io_read_lin_vda'] = "iostat[vda,rkB/s]"

items['io_write_lin_vda'] = "iostat[vda,wkB/s]"

items['io_read_lin_vdb'] = "iostat[vdb,rkB/s]"

items['io_write_lin_vdb'] = "iostat[vdb,wkB/s]"

items['cpu_util'] = "system.cpu.util"

items['net_in_linu_vm_web'] = "net.if.in[eth0]"

items['net_out_lin_vm_web'] = "net.if.out[eth0]"

items['net_in_linu_vm_db'] = "net.if.in[eth1]"

items['net_out_lin_vm_db'] = "net.if.out[eth1]"

items['net_in_win_vm'] = "net.if.in[Red Hat VirtIO Ethernet Adapter]"

items['net_in_win_vm_2'] = "net.if.in[Red Hat VirtIO Ethernet Adapter #2]"

items['net_in_win_vm_3'] = "net.if.in[Red Hat VirtIO Ethernet Adapter #3]"

items['net_out_win_vm'] = "net.if.out[Red Hat VirtIO Ethernet Adapter]"

items['net_out_win_vm_2'] = "net.if.out[Red Hat VirtIO Ethernet Adapter #2]"

items['net_out_win_vm_3'] = "net.if.out[Red Hat VirtIO Ethernet Adapter #3]"

items['net_in_phy_web_lin'] = "net.if.in[bond0]"

items['net_out_phy_web_lin'] = "net.if.out[bond0]"

items['net_in_phy_db_lin'] = "net.if.in[bond1]"

items['net_out_phy_db_lin'] = "net.if.out[bond1]"

items['net_in_phy_web_win'] = "net.if.in[TEAM : WEB-TEAM]"

items['net_out_phy_web_win'] = "net.if.in[TEAM : WEB-TEAM]"

items['net_in_phy_db_win'] = "net.if.in[TEAM : DB Team]"

items['net_out_phy_db_win'] = "net.if.out[TEAM : DB Team]"

items['net_in_phy_web_win_1'] = "net.if.in[TEAM : web]"

items['net_out_phy_web_win_1'] = "net.if.out[TEAM : web]"

items['net_in_phy_db_win_1'] = "net.if.in[TEAM : DB]"

items['net_out_phy_db_win_1'] = "net.if.out[TEAM : DB]"

items['net_in_win_pro'] = "net.if.in[Intel(R) PRO/1000 MT Network Connection]"

items['net_out_win_pro'] = "net.if.out[Intel(R) PRO/1000 MT Network Connection]"

items['net_in_phy_web_hp'] = "net.if.in[HP Network Team #1]"

items['net_out_phy_web_hp'] = "net.if.out[HP Network Team #1]"

items['iis_conntion'] = "perf_counter[\\Web Service(_Total)\\Current Connections]"

items['tcp_conntion'] = "k.tcp.conn[ESTABLISHED]"

for x,y in items.items():

os.system('mkdir -p /data/zabbix/cvs/%s' % dip)

cmds = """

python /data/zabbix/fetch_items_to_csv.py -s '%s' -n '%s' -k '%s' -u 'admin' -p '%s' -t1 '2015-06-23 00:00:01' -t2 '2015-06-30 00:00:01' -o /data/zabbix/cvs/%s/%s_%s.cvs""" %(moniter,dip,y,pawd,dip,dip,x)

os.system(cmds)

print "*" * 100

print cmds

print "*" * 100

def work():

moniter='192.168.1.1'

ip_list = ['192.168.1.15','192.168.1.13','192.168.1.66','192.168.1.5','192.168.1.7','192.168.1.16','192.168.1.38','192.168.1.2','192.168.1.13','192.168.1.10']

for ip in ip_list:

show_items(moniter,ip )

if __name__ == "__main__":

sc = work()

三:数据采集完毕,进行格式化输出

#!/usr/bin/env python

#-*-coding:utf8-*-

import os,sys,time

workfile = '/home/zabbix/zabbix/sjz/'

def collect_info():

dict_doc = dict()

for i in os.listdir(workfile):

dict_doc[i] = list()

for v in os.listdir('%s%s' %(workfile,i)):

dict_doc[i].append(v)

count = 0

for x,y in dict_doc.items():

for p in y:

fp = '%s/%s/%s' %(workfile,x,p)

op = open(fp,'r').readlines()

np = '%s.txt' %p

os.system( """ cat %s|awk -F";" '{print $3}' > %s """ %(fp,np))

count += 1

print count

if __name__ == "__main__":

sc = collect_info()

四,整理数据,汇报成图形,撰写技术报告