HBase-1.0.1 Study Notes (3): Client Access

Lu Chunli's work notes. Who says programmers can't have an artistic side?

Table Design

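An HBase table is made up of rows and columns. Unlike a relational database, HBase also has column families: a column family groups one or more columns together, and every HBase column must belong to a column family.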

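HBase has no data types. All data is converted to byte arrays before it is stored. Rows are distinguished by their row key, which is the only identifier of a row, so every row in every table must have a row key. Tables can be created from the command line (the HBase shell) by giving just the table name and at least one column family. When inserting data you specify the row key, and all inserts with the same row key are treated as operations on the same row.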

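Logically, a table can be viewed as a sparse collection of rows; physically, it is stored split by column family. Columns are grouped into column families, and HFiles are the column-oriented physical files that hold the columns of a family across many rows. Within a region, the data of one column family may be spread over several HFiles, all managed by that same Region.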
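The logical view above (a sparse set of rows, each cell addressed by row, family:qualifier and timestamp) can be sketched in plain Java as nested sorted maps. This is a minimal illustration, not HBase code: the class name SparseTableModel is hypothetical, and strings stand in for the byte arrays HBase actually stores.

```java
import java.util.Comparator;
import java.util.NavigableMap;
import java.util.TreeMap;

// Toy model of HBase's logical view: row -> "family:qualifier" -> timestamp -> value.
// Rows and columns are kept sorted; timestamps are sorted newest first.
// Strings replace byte arrays purely to keep the sketch short.
final class SparseTableModel {
    private final NavigableMap<String, NavigableMap<String, NavigableMap<Long, String>>> rows =
            new TreeMap<>();

    void put(String row, String column, long ts, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<String, NavigableMap<Long, String>>())
            .computeIfAbsent(column, c -> new TreeMap<Long, String>(Comparator.reverseOrder()))
            .put(ts, value);
    }

    // Newest version of a cell, or null if the cell was never written.
    String getLatest(String row, String column) {
        NavigableMap<String, NavigableMap<Long, String>> cols = rows.get(row);
        NavigableMap<Long, String> versions = cols == null ? null : cols.get(column);
        return versions == null ? null : versions.firstEntry().getValue();
    }

    // Number of columns actually stored for a row; unwritten columns take no space.
    int columnCount(String row) {
        NavigableMap<String, NavigableMap<Long, String>> cols = rows.get(row);
        return cols == null ? 0 : cols.size();
    }

    String firstRow() {
        return rows.firstKey();
    }

    public static void main(String[] args) {
        SparseTableModel t = new SparseTableModel();
        t.put("baidu.com", "cf:owner", 1L, "Beijing Baidu Technology Co.");
        t.put("baidu.com", "cf:ipstr", 1L, "61.135.169.121");
        t.put("baidu.com", "cf:ipstr", 2L, "220.181.57.217");
        t.put("alibaba.com", "cf:ipstr", 1L, "205.204.101.42");
        System.out.println(t.getLatest("baidu.com", "cf:ipstr")); // 220.181.57.217
        System.out.println(t.columnCount("alibaba.com"));         // 1
        System.out.println(t.firstRow());                         // alibaba.com
    }
}
```

A column that was never written simply has no entry, which is why empty cells cost nothing, and the versions of one cell are ordered newest first, matching what a Get returns by default.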

In HDFS, each table gets its own directory. For example, create the table t_domain with the hbase shell:

hbase(main):017:0> create 't_domain', 'c_domain'

0 row(s) in 0.4520 seconds

=> Hbase::Table - t_domain

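Check it in HDFS (since HBase 0.96, table data lives under ${hbase.rootdir}/data/<namespace>/<table>, so this table appears as data/default/t_domain):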

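In HBase, a row key is unique within its table, and rows are stored sorted by row key in lexicographic (byte) order, from low to high. Note in the scan below that row10 sorts before row2: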

hbase(main):018:0> put 't_domain', 'row1', 'c_domain:name', 'baidu.com'

0 row(s) in 0.6830 seconds

hbase(main):019:0> put 't_domain', 'row2', 'c_domain:name', 'sina.com'

0 row(s) in 0.0870 seconds

hbase(main):020:0> put 't_domain', 'row3', 'c_domain:name', 'mycms.com'

0 row(s) in 0.0100 seconds

hbase(main):021:0> put 't_domain', 'row10', 'c_domain:name', 'www.163.com'

0 row(s) in 0.0280 seconds

hbase(main):022:0> put 't_domain', 'row21', 'c_domain:name', 'www.51cto.com'

0 row(s) in 0.0240 seconds

hbase(main):023:0> scan 't_domain'

ROW COLUMN+CELL

row1 column=c_domain:name, timestamp=1440340474441, value=baidu.com

row10 column=c_domain:name, timestamp=1440340536382, value=www.163.com

row2 column=c_domain:name, timestamp=1440340492582, value=sina.com

row21 column=c_domain:name, timestamp=1440340550534, value=www.51cto.com

row3 column=c_domain:name, timestamp=1440340518003, value=mycms.com

5 row(s) in 0.1350 seconds
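The scan output shows row10 before row2 because row keys are compared byte by byte, not numerically. The sketch below reproduces that order in plain Java, assuming UTF-8 keys and unsigned byte comparison (the behaviour of HBase's Bytes.compareTo). The padded helper is a hypothetical illustration of the usual remedy: zero-padding numeric parts of the key to a fixed width.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Sketch: HBase orders row keys by comparing their bytes lexicographically,
// each byte treated as unsigned.
final class RowKeyOrder {
    static int compareUnsigned(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int cmp = (a[i] & 0xFF) - (b[i] & 0xFF);
            if (cmp != 0) return cmp;
        }
        return a.length - b.length; // a key that is a prefix of another sorts first
    }

    static List<String> sortAsHBase(List<String> keys) {
        List<String> sorted = new ArrayList<>(keys);
        sorted.sort((x, y) -> compareUnsigned(
                x.getBytes(StandardCharsets.UTF_8), y.getBytes(StandardCharsets.UTF_8)));
        return sorted;
    }

    // Hypothetical helper: left-pad the number so byte order matches numeric order.
    static String padded(String prefix, int n, int width) {
        return prefix + String.format("%0" + width + "d", n);
    }

    public static void main(String[] args) {
        System.out.println(sortAsHBase(Arrays.asList("row1", "row2", "row3", "row10", "row21")));
        // [row1, row10, row2, row21, row3]
        System.out.println(sortAsHBase(Arrays.asList(
                padded("row", 1, 2), padded("row", 2, 2), padded("row", 10, 2), padded("row", 21, 2))));
        // [row01, row02, row10, row21]
    }
}
```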

HBase Clients

Java client:

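org.apache.hadoop.hbase.client.HTable class: reads and writes through it are not thread-safe, and constructing it directly is no longer part of the client API offered to developers (its constructors are deprecated). Use the Table interface instead.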

```java
/**
 * Creates an object to access a HBase table.
 * @param conf Configuration object to use.
 * @param tableName Name of the table.
 * @throws IOException if a remote or network exception occurs
 * @deprecated Constructing HTable objects manually has been deprecated.
 * Please use {@link Connection} to instantiate a {@link Table} instead.
 */
@Deprecated
public HTable(Configuration conf, final String tableName)
throws IOException {
  this(conf, TableName.valueOf(tableName));
}
```

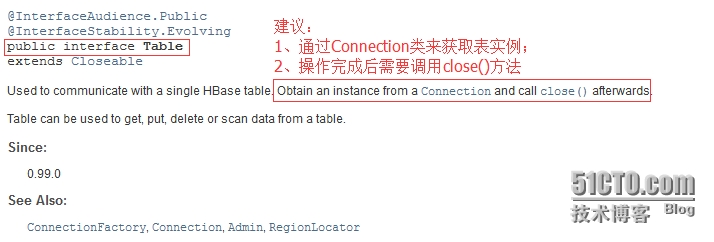

org.apache.hadoop.hbase.client.Table interface:

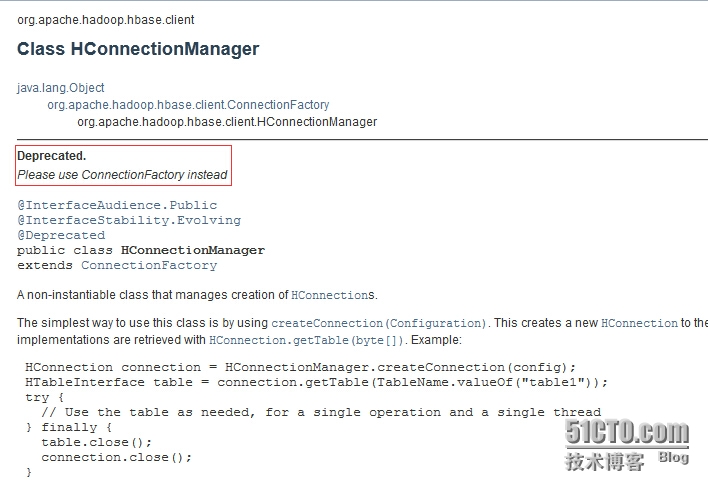

org.apache.hadoop.hbase.client.HConnectionManager class (deprecated in 1.0 in favor of ConnectionFactory):

org.apache.hadoop.hbase.client.HBaseAdmin class:

```java
@InterfaceAudience.Private
@InterfaceStability.Evolving
public class HBaseAdmin implements Admin {
  private static final Log LOG = LogFactory.getLog(HBaseAdmin.class);

  // (omitted)

  @Deprecated
  public HBaseAdmin(Configuration c)
  throws MasterNotRunningException, ZooKeeperConnectionException, IOException {
    // Will not leak connections, as the new implementation of the constructor
    // does not throw exceptions anymore.
    this(ConnectionManager.getConnectionInternal(new Configuration(c)));
    this.cleanupConnectionOnClose = true;
  }

  // (omitted)
}
```

# Note: HBaseAdmin is no longer meant to be used as a client API; @InterfaceAudience.Private marks it as an HBase-internal class.

# Use Connection#getAdmin() to obtain an Admin instance.

org.apache.hadoop.hbase.client.ConnectionFactory class:

```java
@InterfaceAudience.Public
@InterfaceStability.Evolving
public class ConnectionFactory extends Object

// Example:
Connection connection = ConnectionFactory.createConnection(config);
Table table = connection.getTable(TableName.valueOf("table1"));
try {
  // Use the table as needed, for a single operation and a single thread
} finally {
  table.close();
  connection.close();
}
```

Client usage example:

```java
package com.invic.hbase;

import java.io.IOException;
import java.util.List;

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.filter.Filter;
import org.apache.hadoop.hbase.filter.PageFilter;
import org.apache.hadoop.hbase.util.Bytes;

/**
 * HBase client configuration and CRUD example.
 *
 * @author lucl
 */
public class HBaseManagerMain {

    private static final Log LOG = LogFactory.getLog(HBaseManagerMain.class);

    // Running this in Eclipse failed with:
    //   Caused by: java.lang.ClassNotFoundException: org.apache.htrace.Trace
    //   Caused by: java.lang.NoClassDefFoundError: io/netty/channel/ChannelHandler
    // Fix: add htrace-core-3.1.0-incubating.jar and netty-all-4.0.5.final.jar to the project.
    private static final String TABLE_NAME = "m_domain";
    private static final String COLUMN_FAMILY_NAME = "cf";

    public static void main(String[] args) {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.master", "nnode:60000");
        // Property names are case-sensitive: the key is clientPort, not clientport.
        conf.set("hbase.zookeeper.property.clientPort", "2181");
        conf.set("hbase.zookeeper.quorum", "nnode,dnode1,dnode2");

        HBaseManagerMain manageMain = new HBaseManagerMain();

        // HTable is not thread-safe for reads and writes and its constructors are deprecated;
        // get Table and Admin instances from an org.apache.hadoop.hbase.client.Connection.
        // try-with-resources closes the Admin and the Connection even if a step fails.
        try (Connection connection = ConnectionFactory.createConnection(conf);
             Admin admin = connection.getAdmin()) {
            manageMain.listTables(admin);             // list all tables
            if (manageMain.isExists(admin)) {         // does m_domain exist?
                manageMain.deleteTable(admin);        // if so, drop it
            }
            manageMain.createTable(admin);            // create the table
            manageMain.listTables(admin);             // list the tables again
            manageMain.putDatas(connection);          // insert data
            manageMain.scanTable(connection);         // read: full table scan
            manageMain.getData(connection);           // read: single-row get
            manageMain.queryByFilter(connection);     // read: by condition (filter)
            manageMain.deleteDatas(connection);       // delete data
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /** Lists all tables. */
    private void listTables(Admin admin) throws IOException {
        TableName[] names = admin.listTableNames();
        for (TableName tableName : names) {
            LOG.info("Table Name is : " + tableName.getNameAsString());
        }
    }

    /** Checks whether the table exists. */
    private boolean isExists(Admin admin) throws IOException {
        /*
         * org.apache.hadoop.hbase.TableName is an immutable POJO representing a table name,
         * in the form <table namespace>:<table qualifier>. Factory methods:
         *   static TableName valueOf(byte[] fullName)
         *   static TableName valueOf(byte[] namespace, byte[] qualifier)
         *   static TableName valueOf(ByteBuffer namespace, ByteBuffer qualifier)
         *   static TableName valueOf(String name)
         *   static TableName valueOf(String namespaceAsString, String qualifierAsString)
         * HBase defines two namespaces by default:
         *   hbase   - system tables, including the namespace and meta tables
         *   default - tables created without an explicit namespace
         * A namespace is a logical group of tables, similar to a database in an RDBMS,
         * which makes it easy to partition tables by business area.
         */
        TableName tableName = TableName.valueOf(TABLE_NAME);
        boolean exists = admin.tableExists(tableName);
        if (exists) {
            LOG.info("Table " + tableName.getNameAsString() + " already exists.");
        } else {
            LOG.info("Table " + tableName.getNameAsString() + " does not exist.");
        }
        return exists;
    }

    /** Creates the table with a single column family. */
    private void createTable(Admin admin) throws IOException {
        TableName tableName = TableName.valueOf(TABLE_NAME);
        LOG.info("To create table named " + TABLE_NAME);
        HTableDescriptor tableDesc = new HTableDescriptor(tableName);
        HColumnDescriptor columnDesc = new HColumnDescriptor(COLUMN_FAMILY_NAME);
        tableDesc.addFamily(columnDesc);
        admin.createTable(tableDesc);
    }

    /** Drops the table; a table must be disabled before it can be deleted. */
    private void deleteTable(Admin admin) throws IOException {
        TableName tableName = TableName.valueOf(TABLE_NAME);
        LOG.info("Disable and then delete table named " + TABLE_NAME);
        if (admin.isTableEnabled(tableName)) {
            admin.disableTable(tableName);
        }
        admin.deleteTable(tableName);
    }

    /** Inserts one Put per row, each with five columns in the same family. */
    private void putDatas(Connection connection) throws IOException {
        String[] rows = {"baidu.com_19991011_20151011", "alibaba.com_19990415_20220523"};
        String[] columns = {"owner", "ipstr", "access_server", "reg_date", "exp_date"};
        String[][] values = {
            {"Beijing Baidu Technology Co.", "220.181.57.217", "北京", "1999年10月11日", "2015年10月11日"},
            {"Hangzhou Alibaba Advertising Co.", "205.204.101.42", "杭州", "1999年04月15日", "2022年05月23日"}
        };
        TableName tableName = TableName.valueOf(TABLE_NAME);
        byte[] family = Bytes.toBytes(COLUMN_FAMILY_NAME);
        try (Table table = connection.getTable(tableName)) {
            for (int i = 0; i < rows.length; i++) {
                System.out.println("========================" + rows[i]);
                Put put = new Put(Bytes.toBytes(rows[i]));
                for (int j = 0; j < columns.length; j++) {
                    put.addColumn(family, Bytes.toBytes(columns[j]), Bytes.toBytes(values[i][j]));
                }
                table.put(put);
            }
        }
    }

    /** Reads a single row (Get). */
    private void getData(Connection connection) throws IOException {
        LOG.info("Get data from table " + TABLE_NAME + " by family.");
        TableName tableName = TableName.valueOf(TABLE_NAME);
        byte[] family = Bytes.toBytes(COLUMN_FAMILY_NAME);
        byte[] row = Bytes.toBytes("baidu.com_19991011_20151011");
        try (Table table = connection.getTable(tableName)) {
            Get get = new Get(row);
            // addFamily / addColumn restrict what is returned; without them the whole row is fetched.
            get.addFamily(family);
            Result result = table.get(get);
            if (result.isEmpty()) {
                LOG.info("Row not found.");
                return;
            }
            List<Cell> cells = result.listCells();
            for (Cell cell : cells) {
                String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                String value = Bytes.toString(CellUtil.cloneValue(cell)); // UTF-8
                // cell.getQualifier() / cell.getValue() are deprecated
                LOG.info(qualifier + "\t" + value);
            }
        }
    }

    /** Reads data with a full table scan. */
    private void scanTable(Connection connection) throws IOException {
        LOG.info("Scan table " + TABLE_NAME + " to browse all data.");
        TableName tableName = TableName.valueOf(TABLE_NAME);
        Scan scan = new Scan();
        scan.addFamily(Bytes.toBytes(COLUMN_FAMILY_NAME));
        // Close the scanner as well as the table: an open scanner holds server-side resources.
        try (Table table = connection.getTable(tableName);
             ResultScanner resultScanner = table.getScanner(scan)) {
            for (Result result : resultScanner) {
                for (Cell cell : result.listCells()) {
                    String qualifier = Bytes.toString(CellUtil.cloneQualifier(cell));
                    String value = Bytes.toString(CellUtil.cloneValue(cell));
                    LOG.info(qualifier + "\t" + value);
                }
            }
        }
    }

    /** Retrieves data by condition. */
    private void queryByFilter(Connection connection) {
        // Simple paging-filter example
        Filter filter = new PageFilter(15); // 15 rows per page
        int totalRows = 0;
        byte[] lastRow = null;
        Scan scan = new Scan();
        scan.setFilter(filter);
        // (omitted)
    }

    /** Deletes data. */
    private void deleteDatas(Connection connection) throws IOException {
        LOG.info("Delete data from table " + TABLE_NAME + ".");
        TableName tableName = TableName.valueOf(TABLE_NAME);
        byte[] family = Bytes.toBytes(COLUMN_FAMILY_NAME);
        byte[] row = Bytes.toBytes("baidu.com_19991011_20151011");
        Delete delete = new Delete(row);
        // deleteColumn(family, qualifier) is deprecated since 1.0.0; use addColumn(byte[], byte[]),
        // which deletes the latest version of one column:
        delete.addColumn(family, Bytes.toBytes("owner"));
        // deleteColumns(family, qualifier) is deprecated since 1.0.0; use addColumns(byte[], byte[]),
        // which deletes all versions of one column:
        // delete.addColumns(family, qualifier);
        // deleteFamily(family) is deprecated since 1.0.0; use addFamily(byte[]),
        // which deletes the whole column family for this row:
        // delete.addFamily(family);
        try (Table table = connection.getTable(tableName)) {
            table.delete(delete);
        }
    }
}
```
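The paging loop in queryByFilter is elided above. A common way to complete it is to remember the last row key of each page and start the next Scan just after it, at lastRow plus a single 0x00 byte, because the start row of a Scan is inclusive. The sketch below simulates that loop on an in-memory sorted set rather than a cluster; PagingSketch, scanPage and pageSizes are hypothetical names, and scanPage only stands in for one Scan carrying a PageFilter. Against a real table, keep in mind that PageFilter is evaluated separately on each region server, so one page can contain more rows than the page size and the client may need to trim it.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.NavigableSet;
import java.util.TreeSet;

// In-memory simulation of PageFilter-style paging over sorted byte[] row keys.
final class PagingSketch {
    static final byte[] POSTFIX = new byte[] {0x00};

    // Unsigned lexicographic comparison, the order HBase keeps row keys in.
    static int compare(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int cmp = (a[i] & 0xFF) - (b[i] & 0xFF);
            if (cmp != 0) return cmp;
        }
        return a.length - b.length;
    }

    static byte[] append(byte[] a, byte[] b) {
        byte[] out = Arrays.copyOf(a, a.length + b.length);
        System.arraycopy(b, 0, out, a.length, b.length);
        return out;
    }

    // Stand-in for one Scan with an inclusive start row and a page-size limit.
    static List<byte[]> scanPage(NavigableSet<byte[]> table, byte[] start, int pageSize) {
        List<byte[]> page = new ArrayList<>();
        for (byte[] row : start == null ? table : table.tailSet(start, true)) {
            if (page.size() == pageSize) break;
            page.add(row);
        }
        return page;
    }

    // Runs the paging loop and returns the size of every page fetched.
    static List<Integer> pageSizes(NavigableSet<byte[]> table, int pageSize) {
        List<Integer> sizes = new ArrayList<>();
        byte[] lastRow = null;
        while (true) {
            // lastRow + 0x00 is the smallest key strictly greater than lastRow.
            byte[] start = lastRow == null ? null : append(lastRow, POSTFIX);
            List<byte[]> page = scanPage(table, start, pageSize);
            if (page.isEmpty()) break;
            sizes.add(page.size());
            lastRow = page.get(page.size() - 1);
        }
        return sizes;
    }

    public static void main(String[] args) {
        NavigableSet<byte[]> table = new TreeSet<byte[]>(PagingSketch::compare);
        for (int i = 0; i < 40; i++) {
            table.add(String.format("row%02d", i).getBytes(StandardCharsets.UTF_8));
        }
        System.out.println(pageSizes(table, 15)); // [15, 15, 10]
    }
}
```

Appending 0x00 rather than incrementing the last byte guarantees that no row key falling between two pages can be skipped.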

HBase Shell tool:

Pick a node of the HBase cluster (a client node is recommended) and run bin/hbase shell.

The following banner means you are in the hbase shell; run help to see the help information:

[hadoop@dnode1 bin]$ hbase shell

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 0.98.1-hadoop2, r1583035, Sat Mar 29 17:19:25 PDT 2014

hbase(main):001:0> help

HBase Shell, version 0.98.1-hadoop2, r1583035, Sat Mar 29 17:19:25 PDT 2014

Type 'help "COMMAND"', (e.g. 'help "get"' -- the quotes are necessary) for help on a specific command.

Commands are grouped. Type 'help "COMMAND_GROUP"', (e.g. 'help "general"') for help on a command group.

COMMAND GROUPS:

Group name: general

Commands: status, table_help, version, whoami

Group name: ddl

Commands: alter, alter_async, alter_status, create, describe, disable, disable_all, drop, drop_all, enable, enable_all, exists, get_table, is_disabled, is_enabled, list, show_filters

Group name: namespace

Commands: alter_namespace, create_namespace, describe_namespace, drop_namespace, list_namespace, list_namespace_tables

Group name: dml

Commands: append, count, delete, deleteall, get, get_counter, incr, put, scan, truncate, truncate_preserve

Group name: tools

Commands: assign, balance_switch, balancer, catalogjanitor_enabled, catalogjanitor_run, catalogjanitor_switch, close_region, compact, flush, hlog_roll, major_compact, merge_region, move, split, trace, unassign, zk_dump

Group name: replication

Commands: add_peer, disable_peer, enable_peer, list_peers, list_replicated_tables, remove_peer, set_peer_tableCFs, show_peer_tableCFs

Group name: snapshot

Commands: clone_snapshot, delete_snapshot, list_snapshots, rename_snapshot, restore_snapshot, snapshot

Group name: security

Commands: grant, revoke, user_permission

Group name: visibility labels

Commands: add_labels, clear_auths, get_auths, set_auths

# Check the cluster status

hbase(main):002:0> status

3 servers, 0 dead, 3.0000 average load

# The cluster has 3 RegionServers, none of them dead, and on average each one hosts 3 regions.

# Show the HBase version

hbase(main):003:0> version

0.98.1-hadoop2, r1583035, Sat Mar 29 17:19:25 PDT 2014

# The output has three comma-separated parts: 0.98.1-hadoop2 is the HBase version, r1583035 is the source revision, and the third part is the time HBase was built.

# Show help for the ddl command group

hbase(main):006:0> help 'ddl'

Command: alter

Alter a table. Depending on the HBase setting ("hbase.online.schema.update.enable"),

the table must be disabled or not to be altered (see help 'disable').

You can add/modify/delete column families, as well as change table

configuration. Column families work similarly to create; column family

spec can either be a name string, or a dictionary with NAME attribute.

Dictionaries are described on the main help command output.

For example, to change or add the 'f1' column family in table 't1' from

current value to keep a maximum of 5 cell VERSIONS, do:

hbase> alter 't1', NAME => 'f1', VERSIONS => 5

You can operate on several column families:

hbase> alter 't1', 'f1', {NAME => 'f2', IN_MEMORY => true}, {NAME => 'f3', VERSIONS => 5}

// (omitted)

# Examples:

hbase(main):033:0> create 'tb1', {NAME => 'cf1', VERSIONS => 5}

0 row(s) in 20.1040 seconds

=> Hbase::Table - tb1

hbase(main):035:0> create 'tb2', {NAME => 'cf1'}, {NAME => 'cf2'}, {NAME => 'cf3'}

0 row(s) in 19.1130 seconds

=> Hbase::Table - tb2

# Shorthand versions of the commands above:

hbase(main):036:0> create 'tb3', 'cf1'

0 row(s) in 6.8610 seconds

=> Hbase::Table - tb3

hbase(main):037:0> create 'tb4', 'cf1', 'cf2', 'cf3' # three column families

0 row(s) in 6.2010 seconds

hbase(main):045:0> list

TABLE

httptable

tb1

tb2

tb3

tb4

testtable

6 row(s) in 2.3430 seconds

=> ["httptable", "tb1", "tb2", "tb3", "tb4", "testtable"]

hbase(main):046:0>

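# Notes:

# => assigns a value to a key (Ruby hash syntax), e.g. {NAME => 'cf1'};

# strings must be quoted, e.g. 'cf2';

# to give a column family specific attributes when creating a table, wrap them in braces, e.g. {NAME => 'cf1', VERSIONS => 5}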

hbase(main):025:0> help 'namespace'

Command: alter_namespace

Alter namespace properties.

To add/modify a property:

hbase> alter_namespace 'ns1', {METHOD => 'set', 'PROERTY_NAME' => 'PROPERTY_VALUE'}

To delete a property:

hbase> alter_namespace 'ns1', {METHOD => 'unset', NAME=>'PROERTY_NAME'}

// (omitted)

hbase(main):048:0> create_namespace 'ns1'

0 row(s) in 4.7320 seconds

hbase(main):049:0> list_namespace

NAMESPACE

default

hbase

ns1

3 row(s) in 11.0490 seconds

hbase(main):050:0> create 'ns1:tb5', 'cf' # column family cf

0 row(s) in 12.0680 seconds

=> Hbase::Table - ns1:tb5

hbase(main):051:0> list_namespace_tables 'ns1'

TABLE

tb5

1 row(s) in 2.1220 seconds

hbase(main):052:0>

# Show help for the dml command group

hbase(main):026:0> help 'dml'

hbase(main):052:0> put 'ns1:tb5', 'baidu.com', 'cf:owner', 'BeiJingBaiduCo.'

0 row(s) in 0.4400 seconds

hbase(main):053:0> put 'ns1:tb5', 'baidu.com', 'cf:ipstr', '61.135.169.121'

0 row(s) in 1.1640 seconds

hbase(main):054:0> put 'ns1:tb5', 'baidu.com', 'cf:reg_date', '19990415'

0 row(s) in 0.3530 seconds

hbase(main):055:0> put 'ns1:tb5', 'baidu.com', 'cf:address', '北京'

0 row(s) in 2.7540 seconds

hbase(main):056:0> put 'ns1:tb5', 'alibaba.com', 'cf:ipstr', '205.204.101.42'

0 row(s) in 1.2040 seconds

# Count the rows of a table (a table outside the default namespace must be qualified with its namespace)

hbase(main):064:0> count 'tb5'

ERROR: Unknown table tb5!

hbase(main):066:0> count 'ns1:tb5'

2 row(s) in 0.3110 seconds

=> 2

hbase(main):067:0> count 'ns1:tb5', INTERVAL => 100000

2 row(s) in 0.0170 seconds

=> 2
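The Unknown table tb5! error occurs because a name without a namespace prefix resolves to the default namespace, i.e. default:tb5, which does not exist. The hypothetical helper below applies the same naming rule in plain Java; in client code, TableName.valueOf("ns1:tb5") together with getNamespaceAsString() and getQualifierAsString() covers this.

```java
// Hypothetical helper: splits "namespace:qualifier"; a bare name falls into "default".
final class QualifiedName {
    final String namespace;
    final String qualifier;

    private QualifiedName(String namespace, String qualifier) {
        this.namespace = namespace;
        this.qualifier = qualifier;
    }

    static QualifiedName parse(String name) {
        int idx = name.indexOf(':');
        if (idx < 0) {
            return new QualifiedName("default", name);
        }
        return new QualifiedName(name.substring(0, idx), name.substring(idx + 1));
    }

    @Override
    public String toString() {
        return namespace + ":" + qualifier;
    }

    public static void main(String[] args) {
        System.out.println(parse("tb5"));     // default:tb5
        System.out.println(parse("ns1:tb5")); // ns1:tb5
    }
}
```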

# Read a single row

hbase(main):068:0> get 'ns1:tb5', 'baidu.com'

COLUMN CELL

cf:address timestamp=1441006344446, value=\xE5\x8C\x97\xE4\xBA\xAC

cf:ipstr timestamp=1441006329572, value=61.135.169.121

cf:owner timestamp=1441006321284, value=BeiJingBaiduCo.

cf:reg_date timestamp=1441006335701, value=19990415

4 row(s) in 0.1150 seconds
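The shell prints bytes outside printable ASCII as \xNN escapes, which is why cf:address, written as 北京, shows up as \xE5\x8C\x97\xE4\xBA\xAC: those six bytes are the UTF-8 encoding of the two characters. The plain-Java decoder below is a sketch that assumes the value was stored as UTF-8 text (ShellValueDecoder is a hypothetical name); with the HBase jars on the classpath, Bytes.toString(Bytes.toBytesBinary(s)) should do the same job.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Turns the shell's escaped display form back into bytes, then decodes them as UTF-8.
final class ShellValueDecoder {
    static String decode(String shown) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int i = 0;
        while (i < shown.length()) {
            if (shown.startsWith("\\x", i) && i + 4 <= shown.length()) {
                // "\xNN" -> one byte with hex value NN
                out.write(Integer.parseInt(shown.substring(i + 2, i + 4), 16));
                i += 4;
            } else {
                out.write(shown.charAt(i)); // printable ASCII is shown as-is
                i++;
            }
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String city = decode("\\xE5\\x8C\\x97\\xE4\\xBA\\xAC"); // returns "北京"
        System.out.println(city.length());                    // 2
        System.out.println(decode("61.135.169.121"));         // 61.135.169.121
    }
}
```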

hbase(main):074:0> get 'ns1:tb5', 'baidu.com', 'cf:ipstr'

COLUMN CELL

cf:ipstr timestamp=1441006329572, value=61.135.169.121

1 row(s) in 0.0200 seconds

hbase(main):075:0>

# If COLUMN does not include the column family, the shell reports an error:

# ERROR: Unknown column family! Valid column names: cf:*

hbase(main):076:0> get 'ns1:tb5', 'baidu.com', {COLUMN => 'cf:reg_date'}

COLUMN CELL

cf:reg_date timestamp=1441006335701, value=19990415

1 row(s) in 0.0170 seconds

hbase(main):077:0>

# Scan a table

scan 'hbase:meta'

scan 'hbase:meta', {COLUMNS => 'info:regioninfo'}

# Delete a column

hbase(main):004:0> delete 'ns1:tb5', 'baidu.com', 'cf:address'

0 row(s) in 0.2260 seconds

hbase(main):005:0> get 'ns1:tb5', 'baidu.com'

COLUMN CELL

cf:ipstr timestamp=1441006329572, value=61.135.169.121

cf:owner timestamp=1441006321284, value=BeiJingBaiduCo.

cf:reg_date timestamp=1441006335701, value=19990415

3 row(s) in 0.0210 seconds
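On the Java side, Delete distinguishes between removing the newest version of a column (addColumn), all versions of a column (addColumns) and the whole column family (addFamily), as the comments in deleteDatas note. The sketch below models those three effects on an in-memory row. It is a simplification under stated assumptions: real deletes write tombstone markers that are purged at major compaction, and the class and method names here are hypothetical.

```java
import java.util.Comparator;
import java.util.HashMap;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

// In-memory model of one row in one column family:
// qualifier -> (timestamp, newest first) -> value.
final class DeleteSemantics {
    private final Map<String, NavigableMap<Long, String>> family = new HashMap<>();

    void put(String qualifier, long ts, String value) {
        family.computeIfAbsent(qualifier, q -> new TreeMap<Long, String>(Comparator.reverseOrder()))
              .put(ts, value);
    }

    // Effect of Delete.addColumn(family, qualifier): only the newest version goes away,
    // so the previous version becomes visible again.
    void deleteLatestVersion(String qualifier) {
        NavigableMap<Long, String> versions = family.get(qualifier);
        if (versions == null) return;
        versions.pollFirstEntry();
        if (versions.isEmpty()) family.remove(qualifier);
    }

    // Effect of Delete.addColumns(family, qualifier): every version goes away.
    void deleteAllVersions(String qualifier) {
        family.remove(qualifier);
    }

    // Effect of Delete.addFamily(family): every column of the family goes away.
    void deleteFamily() {
        family.clear();
    }

    // Newest visible value, or null if nothing is left.
    String latest(String qualifier) {
        NavigableMap<Long, String> versions = family.get(qualifier);
        return versions == null ? null : versions.firstEntry().getValue();
    }

    public static void main(String[] args) {
        DeleteSemantics row = new DeleteSemantics();
        row.put("owner", 1L, "Old Owner Co.");
        row.put("owner", 2L, "Beijing Baidu Technology Co.");
        row.deleteLatestVersion("owner");
        System.out.println(row.latest("owner")); // Old Owner Co.
        row.deleteAllVersions("owner");
        System.out.println(row.latest("owner")); // null
    }
}
```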

# Truncate a table (the shell disables, drops and re-creates it, as the output shows)

hbase(main):006:0> truncate 'ns1:tb5'

Truncating 'ns1:tb5' table (it may take a while):

- Disabling table...

- Dropping table...

- Creating table...

0 row(s) in 76.4690 seconds

hbase(main):007:0> get 'ns1:tb5', 'baidu.com'

COLUMN CELL

0 row(s) in 0.0480 seconds

Other operations are omitted.

Thrift client:

Omitted.

Batch operations on HBase with MapReduce

See http://luchunli.blog.51cto.com/2368057/1691298