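Implementing Shared Storage on Linux with the iSCSI Protocol

Outline

I. Types of storage devices

II. What are SCSI and iSCSI

III. Installing and configuring iSCSI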

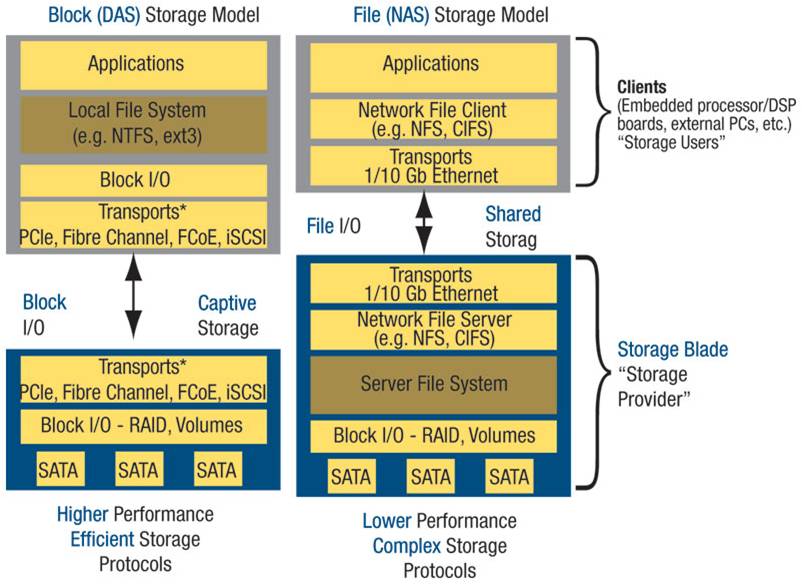

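I. Types of storage devices

DAS: Direct Attached Storage

Devices attached directly to the host's bus.

NAS: Network Attached Storage

A file-sharing server; sharing is at the file level.

SAN: Storage Area Network

Carries the SCSI protocol over another network protocol:

TCP/IP: iSCSI

FC (Fibre Channel)

FCoE (Fibre Channel over Ethernet)

Figure: comparison of NAS and SAN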

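II. What are SCSI and iSCSI

The Small Computer System Interface (SCSI) is a processor-independent standard for system-level interfacing between a computer and intelligent peripherals such as hard disks, floppy drives, optical drives, printers, and scanners. SCSI is an intelligent, general-purpose interface standard.

SCSI features

SCSI is a general-purpose interface. A narrow (8-bit) SCSI bus addresses up to eight devices in total, the host adapter included; the peripherals can be disks, tapes, CD-ROMs, rewritable optical drives, printers, scanners, communication devices, and so on.

SCSI is a multitasking interface with bus arbitration. Several peripherals on the same SCSI bus can work at the same time, and the devices share the bus as peers.

SCSI can transfer data synchronously or asynchronously: up to 10 MB/s synchronously and up to 1.5 MB/s asynchronously (figures from early SCSI generations; later variants are much faster).

When connecting external devices, a SCSI cable can be up to 6 m long.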

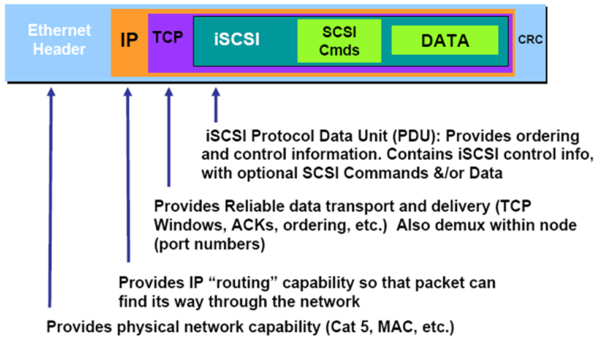

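The Internet Small Computer System Interface (iSCSI) is a TCP/IP-based protocol used to establish and manage connections between IP storage devices, hosts, and clients, and to build a storage area network (SAN). A SAN makes it possible to use the SCSI protocol over high-speed data networks, with data moving between storage endpoints at the block level.

The SCSI architecture follows a client/server model and is normally used where devices sit close together and are connected by a SCSI bus. The main job of iSCSI is to encapsulate and reliably transfer large volumes of data between a host system (the initiator) and a storage device (the target) over a TCP/IP network. To do so, iSCSI encapsulates SCSI commands for transport over IP networks and runs on top of TCP.

Figure: iSCSI protocol packet format

III. Installing and configuring iSCSI

System environment

CentOS 5.8 x86_64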

Initiator

node1.network.com node1 172.16.1.101

node2.network.com node2 172.16.1.105

node3.network.com node3 172.16.1.106

Target

node4.network.com /dev/hda 172.16.1.111

Packages

scsi-target-utils

iscsi-initiator-utils

Topology diagram

1. Install scsi-target-utils and start the service

[root@node4 ~]# yum install -y scsi-target-utils

Start the service

[root@node4 ~]# service tgtd start

Starting SCSI target daemon: Starting target framework daemon

Check the listening port

[root@node4 ~]# ss -tnlp | grep 3260

0 0 *:3260 *:* users:(("tgtd",3979,6),("tgtd",3980,6))

0 0 :::3260 :::* users:(("tgtd",3979,5),("tgtd",3980,5))

Enable the service at boot

[root@node4 ~]# chkconfig tgtd on

[root@node4 ~]# chkconfig --list tgtd

tgtd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

2. Create the target

tgtadm is a mode-based command

--mode: the common modes are target, logicalunit, and account

target --op new, delete, show, update, bind, unbind

logicalunit --op new, delete

account --op new, delete, bind, unbind

Options

--lld <driver>, -L: driver type

--tid <id>, -t: target ID

--lun <lun>, -l: LUN

--backing-store <path>, -b: path of the real backing storage device

--initiator-address <address>, -I: initiator address, used for IP-based access control

--targetname <targetname>, -T: target name

Target name format: iqn.yyyy-mm.<reversed domain name>[:identifier]

Create the target

[root@node4 ~]# tgtadm --lld iscsi --mode target --op new --tid 1 --targetname iqn.2016-01.com.network:teststore.disk1

Show the target

[root@node4 ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-01.com.network:teststore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

Account information:

ACL information:
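The target name format above is easy to get wrong, so here is a minimal sketch of a shape check. The function name `is_valid_iqn` and the exact regular expression are my own assumptions for illustration; the check only looks at the form iqn.yyyy-mm.<reversed domain name>[:identifier] and says nothing about whether the domain is really yours.

```shell
#!/bin/sh
# is_valid_iqn NAME: succeed if NAME looks like
#   iqn.yyyy-mm.<reversed domain name>[:identifier]
# Hypothetical helper; it validates the shape only.
is_valid_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}

for name in iqn.2016-01.com.network:teststore.disk1 \
            iqn.2016-13.com.network:bad \
            teststore.disk1; do
    if is_valid_iqn "$name"; then
        echo "ok:  $name"
    else
        echo "bad: $name"
    fi
done
```

Only the first of the three sample names passes: the second has month 13, and the third lacks the iqn prefix and date.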

3. Create the LUN

[root@node4 ~]# tgtadm --lld iscsi --mode logicalunit --op new --tid 1 --lun 1 --backing-store /dev/hda

[root@node4 ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-01.com.network:teststore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 21475 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/hda

Backing store flags:

Account information:

ACL information:

Optionally bind an access rule so that only initiators in a given network may connect

[root@node4 ~]# tgtadm --lld iscsi --mode target --op bind --tid 1 --initiator-address 172.16.0.0/16

[root@node4 ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-01.com.network:teststore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 21475 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/hda

Backing store flags:

Account information:

ACL information:

172.16.0.0/16
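As far as I know, settings made with tgtadm live in the running tgtd daemon and have to be reapplied after the service restarts, so it can help to keep steps 2 and 3 in a script. The sketch below replays the three tgtadm commands from this article. The `run` and `setup_target` functions and the `DRY_RUN` switch are hypothetical additions of mine; by default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: replay the target, LUN, and ACL commands from this article.
# TID, IQN, DEV and ACL are the article's values; DRY_RUN is a
# hypothetical switch (1 = print only, anything else = execute).
TID=1
IQN=iqn.2016-01.com.network:teststore.disk1
DEV=/dev/hda
ACL=172.16.0.0/16
DRY_RUN=${DRY_RUN:-1}

# run CMD...: print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create the target, attach the backing device as LUN 1, then bind the ACL.
# Each step runs only if the previous one succeeded.
setup_target() {
    run tgtadm --lld iscsi --mode target --op new --tid "$TID" --targetname "$IQN" &&
    run tgtadm --lld iscsi --mode logicalunit --op new --tid "$TID" --lun 1 --backing-store "$DEV" &&
    run tgtadm --lld iscsi --mode target --op bind --tid "$TID" --initiator-address "$ACL"
}

setup_target
```

Run it once as is to review the printed commands, then with `DRY_RUN=0` on the target host to apply them.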

4. Configure the initiator

On the initiator, first install the iscsi-initiator-utils package

[root@node1 ~]# yum install -y iscsi-initiator-utils

Generate the initiator name

[root@node1 ~]# echo "InitiatorName=`iscsi-iname -p iqn.2016-01.com.network`" > /etc/iscsi/initiatorname.iscsi

[root@node1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2016-01.com.network:a9ccb1cfe22

Add an initiator alias

[root@node1 ~]# echo "InitiatorAlias=node1.network.com" >> /etc/iscsi/initiatorname.iscsi

[root@node1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2016-01.com.network:a9ccb1cfe22

InitiatorAlias=node1.network.com

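5. Start the initiator service and discover the target

iscsiadm is a mode-based command

-m {discovery|node|session|iface}

discovery: find out whether a server exports any targets, and which ones;

node: manage the association with a given target;

session: session management

iface: interface management

iscsiadm -m discovery [ -d debug_level ] [ -P printlevel ] [ -I iface -t type -p ip:port [ -l ] ]

-d: debug level, 0-8

-I: the interface (iface) to use

-t type: SendTargets (st), SLP, or iSNS

-p: IP:port; the port defaults to 3260 if omitted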

iscsiadm -m node [ -d debug_level ] [ -L all,manual,automatic ] | [ -U all,manual,automatic ]

iscsiadm -m node [ -d debug_level ] [ [ -T targetname -p ip:port -I ifaceN ] [ -l | -u ] ] [ [ -o operation ] [ -n name ] [ -v value ] ]

Start the service first

[root@node1 ~]# service iscsi start

iscsid (pid 2318) is running...

Setting up iSCSI targets: iscsiadm: No records found

[ OK ]

Discover the target

[root@node1 ~]# iscsiadm -m discovery -t sendtargets -p 172.16.1.111

172.16.1.111:3260,1 iqn.2016-01.com.network:teststore.disk1

Inspect the records saved by discovery

[root@node1 ~]# ls /var/lib/iscsi/send_targets/

172.16.1.111,3260

[root@node1 ~]# ls /var/lib/iscsi/send_targets/172.16.1.111,3260/

iqn.2016-01.com.network:teststore.disk1,172.16.1.111,3260,1,default st_config
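Each line printed by discovery has the form `<ip>:<port>,<tpgt> <targetname>`, and the same fields reappear in the record names above. Here is a minimal sketch that splits one such line with plain shell parameter expansion. The function name `parse_discovery_line` and the variables it sets are hypothetical, and it assumes an IPv4 portal as in this article.

```shell
#!/bin/sh
# parse_discovery_line LINE: split one SendTargets record of the form
#   <ip>:<port>,<tpgt> <targetname>
# into the variables ip, port, tpgt and target.
# Hypothetical helper; assumes an IPv4 portal address.
parse_discovery_line() {
    portal=${1%% *}      # text before the first space: ip:port,tpgt
    target=${1#* }       # text after the first space: the target name
    tpgt=${portal##*,}   # target portal group tag after the comma
    portal=${portal%,*}  # ip:port
    ip=${portal%:*}
    port=${portal##*:}
}

parse_discovery_line "172.16.1.111:3260,1 iqn.2016-01.com.network:teststore.disk1"
echo "ip=$ip port=$port tpgt=$tpgt target=$target"

# The matching login command, printed here rather than executed:
echo "iscsiadm -m node -T $target -p $ip:$port -l"
```

Feeding it the discovery line from this article yields ip 172.16.1.111, port 3260, tpgt 1, and the target name used in the login step.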

6. Log in to the target

[root@node1 ~]# iscsiadm -m node -T iqn.2016-01.com.network:teststore.disk1 -p 172.16.1.111 -l

Logging in to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] (multiple)

Login to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] successful.

List the disks

[root@node1 ~]# fdisk -l

Disk /dev/hda: 21.4 GB, 21474836480 bytes

15 heads, 63 sectors/track, 44384 cylinders

Units = cylinders of 945 * 512 = 483840 bytes

Device Boot Start End Blocks Id System

/dev/hda1 1 2068 977098+ 83 Linux

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 2610 20860402+ 8e Linux LVM

Disk /dev/sdb: 21.4 GB, 21474836480 bytes

64 heads, 32 sectors/track, 20480 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

7. Partition and format

Partition the newly discovered device

[root@node1 ~]# fdisk /dev/sdb

The number of cylinders for this disk is set to 20480.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-20480, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-20480, default 20480): +2G

Command (m for help): p

Disk /dev/sdb: 21.4 GB, 21474836480 bytes

64 heads, 32 sectors/track, 20480 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 1908 1953776 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table.

The new table will be used at the next reboot.

Syncing disks.

[root@node1 ~]# partprobe /dev/sdb

[root@node1 ~]# cat /proc/partitions

major minor #blocks name

3 0 20971520 hda

3 1 977098 hda1

8 0 20971520 sda

8 1 104391 sda1

8 2 20860402 sda2

253 0 18776064 dm-0

253 1 2064384 dm-1

8 16 20971520 sdb

8 17 1953776 sdb1

Format the partition as ext3

[root@node1 ~]# mke2fs -j /dev/sdb1

mke2fs 1.39 (29-May-2006)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

244320 inodes, 488444 blocks

24422 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=503316480

15 block groups

32768 blocks per group, 32768 fragments per group

16288 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 32 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

Mount it

[root@node1 ~]# mount /dev/sdb1 /mnt/

[root@node1 ~]# cp /etc/fstab /mnt/

[root@node1 ~]# ls /mnt/

fstab lost+found

[root@node1 ~]# umount /mnt/

8. Mount the device from a second node to test access

First install and configure node2

[root@node2 ~]# yum install -y iscsi-initiator-utils

Set InitiatorName and InitiatorAlias

[root@node2 ~]# echo "InitiatorName=`iscsi-iname -p iqn.2016-01.com.network`" > /etc/iscsi/initiatorname.iscsi

[root@node2 ~]# echo "InitiatorAlias=node2.network.com" >> /etc/iscsi/initiatorname.iscsi

[root@node2 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2016-01.com.network:1d58f93825bf

InitiatorAlias=node2.network.com

Start the iscsi service

[root@node2 ~]# service iscsi start

iscsid (pid 2321) is running...

Setting up iSCSI targets: iscsiadm: No records found

[ OK ]

Discover the target

[root@node2 ~]# iscsiadm -m discovery -t sendtargets -p 172.16.1.111

172.16.1.111:3260,1 iqn.2016-01.com.network:teststore.disk1

[root@node2 ~]# ls /var/lib/iscsi/send_targets/

172.16.1.111,3260

[root@node2 ~]# ls /var/lib/iscsi/send_targets/172.16.1.111,3260/

iqn.2016-01.com.network:teststore.disk1,172.16.1.111,3260,1,default st_config

Log in

[root@node2 ~]# iscsiadm -m node -T iqn.2016-01.com.network:teststore.disk1 -p 172.16.1.111 -l

Logging in to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] (multiple)

Login to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] successful.

List the disks

[root@node2 ~]# fdisk -l

Disk /dev/hda: 21.4 GB, 21474836480 bytes

15 heads, 63 sectors/track, 44384 cylinders

Units = cylinders of 945 * 512 = 483840 bytes

Device Boot Start End Blocks Id System

/dev/hda1 1 2068 977098+ 83 Linux

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 13 104391 83 Linux

/dev/sda2 14 2610 20860402+ 8e Linux LVM

Disk /dev/sdb: 21.4 GB, 21474836480 bytes

64 heads, 32 sectors/track, 20480 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 1908 1953776 83 Linux

Mount the device

[root@node2 ~]# mount /dev/sdb1 /mnt/

[root@node2 ~]# ls /mnt/

fstab lost+found

To remove this target's records, log out first and then delete them. Before logging out, make sure the device is unmounted.

[root@node1 ~]# mount

/dev/mapper/VolGroup00-LogVol00 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda1 on /boot type ext3 (rw)

tmpfs on /dev/shm type tmpfs (rw)

none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

Log out

[root@node1 ~]# iscsiadm -m node -T iqn.2016-01.com.network:teststore.disk1 -p 172.16.1.111 -u

Logging out of session [sid: 1, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260]

Logout of [sid: 1, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] successful.

Delete the node record from the database

[root@node1 ~]# iscsiadm -m node -T iqn.2016-01.com.network:teststore.disk1 -p 172.16.1.111 -o delete

Delete the discovery directory

[root@node1 ~]# rm -rf /var/lib/iscsi/send_targets/172.16.1.111,3260/
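The order used above (check the mounts, log out, delete the records) can be guarded with a small check. This is a minimal sketch under my own assumptions: the function name `is_mounted` is hypothetical, it treats `/dev/sdb1` style partition names as belonging to `/dev/sdb`, and it reads `/proc/mounts`, so it is meant for Linux. The optional second argument exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# is_mounted DEVICE [MOUNTS_FILE]: succeed if DEVICE, or one of its numbered
# partitions (e.g. /dev/sdb1 for /dev/sdb), is listed as a mount source.
# MOUNTS_FILE defaults to /proc/mounts. Hypothetical helper for illustration.
is_mounted() {
    awk -v dev="$1" '
        # Match "dev" exactly, or "dev" followed only by digits.
        index($1, dev) == 1 && substr($1, length(dev) + 1) ~ /^[0-9]*$/ { found = 1 }
        END { exit (found ? 0 : 1) }
    ' "${2:-/proc/mounts}"
}

if is_mounted /dev/sdb; then
    echo "/dev/sdb is still mounted; unmount it before logging out"
else
    echo "/dev/sdb is not mounted; logging out should be safe"
fi
```

Only when the check reports the device as not mounted would I go on to `iscsiadm ... -u` and `-o delete`.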

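Note: this walkthrough treats iscsi-initiator-utils as not supporting discovery authentication, so only session (login) authentication is configured below.

To authenticate by user, configure IP-based access control on the target first, then add user authentication.

[root@node4 ~]# tgtadm --lld iscsi --mode target --op bind --tid 1 --initiator-address 172.16.0.0/16

Add an account for authentication

[root@node4 ~]# tgtadm --lld iscsi --mode account --op new --user iscsiuser --password iscsiuser

Bind the account to the target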

[root@node4 ~]# tgtadm --lld iscsi --mode account --op bind --tid 1 --user iscsiuser

[root@node4 ~]# tgtadm --lld iscsi --mode target --op show

Target 1: iqn.2016-01.com.network:teststore.disk1

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB, Block size: 1

Online: Yes

Removable media: No

Readonly: No

Backing store type: null

Backing store path: None

Backing store flags:

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 21475 MB, Block size: 512

Online: Yes

Removable media: No

Readonly: No

Backing store type: rdwr

Backing store path: /dev/hda

Backing store flags:

Account information:

iscsiuser

ACL information:

172.16.0.0/16

The target side is now configured. On the initiator side, only the configuration file needs editing.

[root@node1 ~]# vim /etc/iscsi/iscsid.conf

node.session.auth.authmethod = CHAP

node.session.auth.username = iscsiuser

node.session.auth.password = iscsiuser
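Instead of editing the file by hand in vim, the three settings can be applied with a script. The sketch below is one way to do it, assuming the file uses `key = value` lines that may be commented out with `#`. The function name `set_conf` is hypothetical, and the demo works on a temporary file rather than on /etc/iscsi/iscsid.conf.

```shell
#!/bin/sh
# set_conf KEY VALUE FILE: set "KEY = VALUE" in a key = value style file,
# replacing an existing (possibly commented-out) line or appending a new one.
# Hypothetical helper; assumes VALUE contains no "|", "&" or backslash.
set_conf() {
    key=$1 value=$2 file=$3
    # Escape the dots in KEY so they match literally in the regex.
    pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -Eq "^#?[[:space:]]*$pattern[[:space:]]*=" "$file"; then
        sed "s|^#\{0,1\}[[:space:]]*$pattern[[:space:]]*=.*|$key = $value|" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Demo on a temporary file that mimics commented-out defaults.
conf=$(mktemp)
printf '%s\n' '#node.session.auth.authmethod = CHAP' \
              '#node.session.auth.username = username' > "$conf"
set_conf node.session.auth.authmethod CHAP "$conf"
set_conf node.session.auth.username iscsiuser "$conf"
set_conf node.session.auth.password iscsiuser "$conf"
cat "$conf"
rm -f "$conf"
```

To my understanding, the values in iscsid.conf serve as defaults for node records created by later discovery runs, which is presumably why the old records were deleted before discovering the target again.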

Discover the target

[root@node1 ~]# iscsiadm -m discovery -t st -p 172.16.1.111

172.16.1.111:3260,1 iqn.2016-01.com.network:teststore.disk1

Log in

[root@node1 ~]# iscsiadm -m node -T iqn.2016-01.com.network:teststore.disk1 -p 172.16.1.111 -l

Logging in to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] (multiple)

Login to [iface: default, target: iqn.2016-01.com.network:teststore.disk1, portal: 172.16.1.111,3260] successful.

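At this point both nodes can use the same storage, but a file written by one node is not visible to the other, because ext3 is not cluster-aware and each node caches the file system state on its own.

If both nodes happen to write the same file and then flush from memory to disk, the file system can end up corrupted.

This is very dangerous, so a cluster file system should be used instead.

With a cluster file system, a write on one node is made known to the other nodes immediately, which avoids corrupting the file system.

Supplement: using a cluster file system

First install gfs2-utils

[root@node1 ~]# yum install -y gfs2-utils

Create a partition and format it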

[root@node1 ~]# fdisk /dev/sdb

The number of cylinders for this disk is set to 20480.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-20480, default 1):

Using default value 1

Last cylinder or +size or +sizeM or +sizeK (1-20480, default 20480): +3G

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@node1 ~]# partprobe /dev/sdb

[root@node1 ~]# mkfs.gfs2 -j 2 -p lock_dlm -t tcluster:mysqlstore /dev/sdb1

This will destroy any data on /dev/sdb1.

It appears to contain a ext3 filesystem.

Are you sure you want to proceed? [y/n] y

Device: /dev/sdb1

Blocksize: 4096

Device Size 2.79 GB (732668 blocks)

Filesystem Size: 2.79 GB (732666 blocks)

Journals: 2

Resource Groups: 12

Locking Protocol: "lock_dlm"

Lock Table: "tcluster:mysqlstore"

UUID: 47BBB672-E012-9B1C-CB07-277410109CD4

Before mounting, make sure the cman service is running; otherwise the mount fails.

[root@node1 ~]# mount /dev/sdb1 /mnt/

[root@node1 ~]# ls /mnt/

[root@node1 ~]# cd /mnt/

[root@node1 mnt]# touch node1.txt

[root@node1 mnt]# ls

node1.txt

Now mount the device on node2 and check whether the test file just created is visible

[root@node2 ~]# mount /dev/sdb1 /mnt/

[root@node2 ~]# cd /mnt/

[root@node2 mnt]# ls

node1.txt

[root@node2 mnt]# touch node2.txt

[root@node2 mnt]# ls

node1.txt node2.txt

Now mount the device on node3

[root@node3 ~]# mount /dev/sdb1 /mnt/

/sbin/mount.gfs2: Too many nodes mounting filesystem, no free journals

Because the gfs2 file system was created with two journals, only two nodes can mount it at the same time

[root@node2 mnt]# gfs2_tool journals /dev/sdb1

journal1 - 128MB

journal0 - 128MB

2 journal(s) found.

Now add one more journal

[root@node1 mnt]# gfs2_jadd -j 1 /dev/sdb1

Filesystem: /mnt

Old Journals 2

New Journals 3

Try mounting on node3 again

[root@node3 ~]# mount /dev/sdb1 /mnt/

[root@node3 ~]# ls /mnt/

node1.txt node2.txt
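Since each mounting node needs its own journal, the number to pass to gfs2_jadd is simply the shortfall between nodes and existing journals. A minimal sketch of that arithmetic follows; the function name `journals_to_add` is hypothetical, and the script only prints the gfs2_jadd command from this article rather than running it.

```shell
#!/bin/sh
# journals_to_add CURRENT NODES: print how many journals must be added so
# that NODES nodes can mount at once, given CURRENT existing journals
# (one journal per mounting node). Hypothetical helper for illustration.
journals_to_add() {
    if [ "$2" -gt "$1" ]; then
        echo $(( $2 - $1 ))
    else
        echo 0
    fi
}

# Two journals exist and three nodes should mount, as in this article.
n=$(journals_to_add 2 3)
echo "journals to add: $n"
if [ "$n" -gt 0 ]; then
    # Printed, not executed.
    echo "gfs2_jadd -j $n /dev/sdb1"
fi
```

For the case in this article (2 journals, 3 nodes) the shortfall is 1, which matches the `gfs2_jadd -j 1` call above.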

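Additional notes

mkfs.gfs2

-j #: number of journals; the file system can be mounted by as many nodes as it has journals;

-J #: size of each journal; the default is 128 MB;

-p {lock_dlm|lock_nolock}: locking protocol; lock_dlm for shared use in a cluster, lock_nolock for a single node;

-t <name>: lock table name, in the form clustername:locktablename; clustername is the name of the cluster the current node belongs to, and locktablename must be unique within that cluster;

It is best to keep the cluster to no more than 16 nodes; beyond that, gfs2 performance drops considerably.

The next article covers cluster shared storage with cman + rgmanager + iscsi + gfs2 + cLVM.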