Creating and Searching a File Index with Lucene + Tika

I use Lucene + Tika to build and query a file index. It tests fine on Windows and can parse every file type that Tika supports. As the source code shows, it also handles zip archives!
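
The zip support comes down to enumerating the archive's entries and handing each entry's stream to the parser. Here is a minimal sketch of just that enumeration, using only `java.util.zip`. The class and method names (`ZipEntryWalk`, `readFileEntries`, `writeDemoZip`) are made up for illustration, and the Tika call is left out: the sketch only counts the bytes it reads where a real indexer would parse them.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.Enumeration;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;
import java.util.zip.ZipOutputStream;

class ZipEntryWalk {

    /** Maps each non-directory entry name to the number of bytes read from it (hypothetical helper). */
    static Map<String, Integer> readFileEntries(File zip) throws IOException {
        Map<String, Integer> sizes = new LinkedHashMap<String, Integer>();
        try (ZipFile zf = new ZipFile(zip)) {
            Enumeration<? extends ZipEntry> entries = zf.entries();
            while (entries.hasMoreElements()) {
                ZipEntry entry = entries.nextElement();
                if (entry.isDirectory()) continue; // directory entries carry no content
                try (InputStream in = zf.getInputStream(entry)) {
                    // A real indexer would pass `in` to the parser here; we only count bytes.
                    byte[] buf = new byte[4096];
                    int total = 0;
                    int n;
                    while ((n = in.read(buf)) != -1) total += n;
                    sizes.put(entry.getName(), total);
                }
            }
        }
        return sizes;
    }

    /** Writes a tiny archive with one directory entry and one file entry (demo data only). */
    static File writeDemoZip() throws IOException {
        File zip = File.createTempFile("demo", ".zip");
        zip.deleteOnExit();
        try (ZipOutputStream out = new ZipOutputStream(new FileOutputStream(zip))) {
            out.putNextEntry(new ZipEntry("docs/"));
            out.closeEntry();
            out.putNextEntry(new ZipEntry("docs/a.txt"));
            out.write("hello".getBytes(StandardCharsets.UTF_8));
            out.closeEntry();
        }
        return zip;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(readFileEntries(writeDemoZip()));
    }
}
```

Skipping directory entries and closing every stream with try-with-resources keeps the loop from leaking file handles on large archives.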

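On Linux, however, it does not parse office documents from version 2008 onward properly! I have not found the cause yet and hope an expert can take a look at this problem!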
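
One thing that may be worth ruling out, assuming the failing files are OOXML formats (.docx, .xlsx, .pptx): Tika parses these through Apache POI's OOXML classes, so it helps to confirm that those JARs are actually on the classpath of the Linux deployment. The following is only a diagnostic sketch, not a confirmed cause. `ClasspathCheck` is a made-up helper name, and the class names it probes are representative picks from the tika-parsers, poi-ooxml, and xmlbeans JARs that should be checked against the versions in use.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ClasspathCheck {

    /** Returns true if the named class can be located without initializing it. */
    static boolean isPresent(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        } catch (LinkageError e) {
            // The class was found but one of its own dependencies is missing or incompatible.
            return false;
        }
    }

    /** Checks several class names and keeps them in the order given. */
    static Map<String, Boolean> check(String... classNames) {
        Map<String, Boolean> result = new LinkedHashMap<String, Boolean>();
        for (String name : classNames) {
            result.put(name, isPresent(name));
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumed representative classes; adjust to the library versions in use.
        Map<String, Boolean> result = check(
                "org.apache.tika.parser.microsoft.ooxml.OOXMLParser",
                "org.apache.poi.xwpf.usermodel.XWPFDocument",
                "org.apache.xmlbeans.XmlObject");
        for (Map.Entry<String, Boolean> e : result.entrySet()) {
            System.out.println((e.getValue() ? "found   " : "MISSING ") + e.getKey());
        }
    }
}
```

Running this with the same classpath as the indexer on both Windows and Linux and comparing the output shows whether the two environments load the same libraries.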

Regarding T

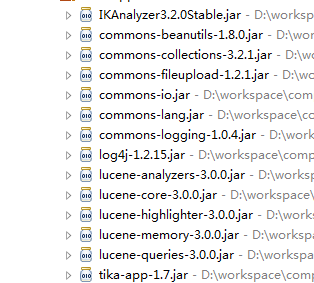

Related JAR packages.

Source code attached:

package com.leagsoft.tika.repos;

import java.io.File;
import java.io.FileInputStream;
import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

import org.apache.log4j.Logger;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.Field.Index;
import org.apache.lucene.document.Field.Store;
import org.apache.lucene.index.CorruptIndexException;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriter.MaxFieldLength;
import org.apache.lucene.queryParser.MultiFieldQueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.highlight.Formatter;
import org.apache.lucene.search.highlight.Fragmenter;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.InvalidTokenOffsetsException;
import org.apache.lucene.search.highlight.QueryScorer;
import org.apache.lucene.search.highlight.Scorer;
import org.apache.lucene.search.highlight.SimpleFragmenter;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.apache.tika.Tika;
import org.apache.tika.metadata.Metadata;
import org.wltea.analyzer.lucene.IKAnalyzer;

/**
 * Utility for building and searching a Lucene file index with Tika.
 *
 * @author Heweipo
 */
public class ReposUtil {

    // volatile so that the lazily created instances are safely published across threads
    private static volatile IndexWriter indexWriter;
    private static volatile IndexSearcher indexSearcher;
    private static Analyzer analyzer = new IKAnalyzer();
    private static Object lock = new Object();

    public final static String ARTICLE_CONTENT = "fileContent";
    public final static String ARTICLE_NAME = "fileName";
    public final static String ARTICLE_PATH = "filePath";
    // Used for testing; if it is not needed, set ARTICLE_FRAGMENT_USE = false
    public final static String ARTICLE_FRAGMENT = "fragment";
    public static boolean ARTICLE_FRAGMENT_USE = true;

    private static Logger log = Logger.getLogger(ReposUtil.class);

    /**
     * Create the index: recurse into directories and add one document per file.
     */
    @SuppressWarnings("unchecked")
    private static boolean writeRepos(IndexWriter indexWriter, File file) {
        if (file == null || !file.exists() || !file.canRead()) return false;
        if (file.isDirectory()) {
            // Directory: listFiles() returns null if the directory cannot be read
            File[] files = file.listFiles();
            if (files == null) return false;
            for (File f : files) {
                writeRepos(indexWriter, f);
            }
        } else {
            // Regular file
            Document doc = null;
            ZipFile zf = null;
            try {
                doc = new Document();
                Tika tika = new Tika();
                Metadata metadata = new Metadata();
                metadata.add(Metadata.CONTENT_ENCODING, "utf-8");
                if (file.getName().endsWith(".zip")) {
                    // Zip archive: add the text of each entry as a separate content field
                    zf = new ZipFile(file);
                    List<ZipEntry> list = (List<ZipEntry>) Collections.list(zf.entries());
                    for (ZipEntry entry : list) {
                        if (entry.isDirectory()) continue; // directory entries carry no content
                        doc.add(new Field(ARTICLE_CONTENT, tika.parse(zf.getInputStream(entry), metadata)));
                    }
                } else {
                    doc.add(new Field(ARTICLE_CONTENT, tika.parse(new FileInputStream(file), metadata)));
                }
                doc.add(new Field(ARTICLE_NAME, file.getName(), Store.YES, Index.ANALYZED));
                doc.add(new Field(ARTICLE_PATH, file.getAbsolutePath(), Store.YES, Index.NO));
                if (ARTICLE_FRAGMENT_USE) {
                    // Store the extracted text so search results can show a highlighted fragment
                    doc.add(new Field(ARTICLE_FRAGMENT, tika.parseToString(new FileInputStream(file), metadata), Store.YES, Index.NO));
                }
                indexWriter.addDocument(doc);
                log.info("Indexing file: " + file.getName() + " " + file.getAbsolutePath());
            } catch (Exception e) {
                log.error("Failed to create index: " + e.getMessage(), e);
                return false;
            } finally {
                if (zf != null) {
                    try {
                        zf.close();
                    } catch (IOException e) {
                        log.error("Failed to close zip file: " + e.getMessage(), e);
                    }
                }
            }
        }
        return true;
    }

    /**
     * Create the index for a file or directory, then commit.
     */
    public static boolean writeRepos(File file) {
        IndexWriter indexWriter = getIndexWriter();
        if (indexWriter == null) return false; // the writer could not be opened
        if (!writeRepos(indexWriter, file)) return false;
        try {
            // Commit once, here, to keep the operation atomic
            indexWriter.commit();
            log.info("File index created: " + file.getName() + " " + file.getAbsolutePath());
        } catch (Exception e) {
            log.error("Commit failed after creating the file index: " + e.getMessage(), e);
            return false;
        }
        return true;
    }

    /**
     * Search the index.
     */
    public static List<Map<String,String>> readRepos(String[] fields, String value, int numb) {
        if (fields == null || fields.length == 0) {
            fields = new String[]{ReposUtil.ARTICLE_CONTENT, ReposUtil.ARTICLE_NAME};
        }
        List<Map<String,String>> list = new ArrayList<Map<String,String>>();
        try {
            // Use one searcher for the whole request: document ids are only valid for the reader that produced them
            IndexSearcher searcher = getIndexSearcher();
            Query query = new MultiFieldQueryParser(Version.LUCENE_30, fields, analyzer).parse(value);
            TopDocs matchs = searcher.search(query, numb);
            ScoreDoc[] scoreDocs = matchs.scoreDocs;
            numb = Math.min(numb, scoreDocs.length);
            // Set up the highlight style
            Highlighter lighter = null;
            if (ARTICLE_FRAGMENT_USE) {
                Formatter format = new SimpleHTMLFormatter("<font color='red'>", "</font>");
                Scorer scorer = new QueryScorer(query);
                Fragmenter fragmenter = new SimpleFragmenter(100);
                lighter = new Highlighter(format, scorer);
                lighter.setTextFragmenter(fragmenter);
            }
            for (int i = 0; i < numb; i++) {
                Document document = searcher.doc(scoreDocs[i].doc);
                list.add(document2map(document, lighter));
            }
        } catch (Exception e) {
            log.error("Error while searching the index: " + e.getMessage(), e);
            return null;
        }
        return list;
    }

    /**
     * Convert a Document into a Map.
     */
    private static Map<String,String> document2map(Document doc, Highlighter lighter) {
        Map<String,String> map = new HashMap<String,String>();
        if (doc == null) return map;
        map.put(ARTICLE_NAME, doc.get(ARTICLE_NAME));
        map.put(ARTICLE_PATH, doc.get(ARTICLE_PATH));
        // The content field is indexed from a Reader and is not stored, so this value is null
        map.put(ARTICLE_CONTENT, doc.get(ARTICLE_CONTENT));
        // Documents indexed while ARTICLE_FRAGMENT_USE was false have no stored fragment text
        String text = doc.get(ARTICLE_FRAGMENT);
        if (lighter != null && text != null) {
            try {
                String fragment = lighter.getBestFragment(analyzer, ARTICLE_FRAGMENT, text);
                if (fragment != null) {
                    // Strip tabs and line breaks so the fragment fits on one line
                    fragment = fragment.trim().replaceAll("[\\t\\r\\n]", "");
                    map.put(ARTICLE_FRAGMENT, fragment);
                }
            } catch (IOException e) {
                log.error("Error converting document to Map: " + e.getMessage(), e);
            } catch (InvalidTokenOffsetsException e) {
                log.error("Error converting document to Map: " + e.getMessage(), e);
            }
        }
        return map;
    }

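    /**
     * Get the shared IndexSearcher, reopening it when the index has changed.
     *
     * @return the searcher, or null if the index could not be opened
     */
    public static IndexSearcher getIndexSearcher() {
        // Singleton, created lazily
        if (indexSearcher == null) {
            synchronized (lock) {
                // Check again inside the lock: another thread may have created it in the meantime
                if (indexSearcher == null) {
                    try {
                        Directory directory = FSDirectory.open(new File("./Repos"));
                        indexSearcher = new IndexSearcher(directory);
                    } catch (IOException e) {
                        log.error("Error getting IndexSearcher: " + e.getMessage(), e);
                        return null;
                    }
                }
                return indexSearcher;
            }
        }
        // Real-time update: reopen the reader if the index has been modified
        try {
            IndexReader indexReader = indexSearcher.getIndexReader();
            if (!indexReader.isCurrent()) {
                // The previous reader is not closed here, since other threads may still be searching with it
                indexSearcher = new IndexSearcher(indexReader.reopen(true));
                return indexSearcher;
            }
        } catch (CorruptIndexException e) {
            log.error("Error getting IndexSearcher: " + e.getMessage(), e);
        } catch (IOException e) {
            log.error("Error getting IndexSearcher: " + e.getMessage(), e);
        }
        return indexSearcher;
    }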

    /**
     * Get the shared IndexWriter.
     *
     * @return the writer, or null if it could not be opened
     */
    public static IndexWriter getIndexWriter() {
        if (indexWriter == null) {
            synchronized (lock) {
                // Check again inside the lock so that only one writer is ever opened
                if (indexWriter == null) {
                    File file = new File("./Repos");
                    if (!file.exists()) file.mkdirs();
                    try {
                        FSDirectory directory = FSDirectory.open(file);
                        indexWriter = new IndexWriter(directory, analyzer, MaxFieldLength.UNLIMITED);
                    } catch (IOException e) {
                        log.error("Error getting IndexWriter: " + e.getMessage(), e);
                    }
                }
            }
        }
        return indexWriter;
    }

    /**
     * The shared analyzer.
     */
    public static Analyzer getAnalyzer() {
        return analyzer;
    }

}
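
`getIndexSearcher` and `getIndexWriter` both create a shared instance lazily under a lock. The pattern on its own can be sketched with the JDK alone as double-checked locking over a `volatile` field. `LazyHolder` and its `Callable` factory are made-up names for illustration and are not part of Lucene; the string `"index"` merely stands in for an expensive object such as a writer.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.atomic.AtomicInteger;

class LazyHolder<T> {

    private final Object lock = new Object();
    private final Callable<T> factory;
    // volatile: a thread that sees a non-null reference also sees a fully constructed object
    private volatile T instance;

    LazyHolder(Callable<T> factory) {
        this.factory = factory;
    }

    T get() throws Exception {
        T local = instance;              // first check, without locking
        if (local == null) {
            synchronized (lock) {
                local = instance;        // second check, now holding the lock
                if (local == null) {
                    local = factory.call();
                    instance = local;
                }
            }
        }
        return local;
    }

    public static void main(String[] args) throws Exception {
        final AtomicInteger created = new AtomicInteger();
        final LazyHolder<String> holder = new LazyHolder<String>(new Callable<String>() {
            public String call() {
                created.incrementAndGet();
                return "index";
            }
        });
        Thread[] threads = new Thread[8];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(new Runnable() {
                public void run() {
                    try {
                        holder.get();
                    } catch (Exception e) {
                        throw new RuntimeException(e);
                    }
                }
            });
            threads[i].start();
        }
        for (Thread t : threads) t.join();
        System.out.println("factory calls: " + created.get());
    }
}
```

Without the second check inside the lock, two threads that both pass the first check would each run the factory. For an index writer that means a second attempt to open a directory that is already locked.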