机器学习经典算法3-朴素贝叶斯

一、算法简要

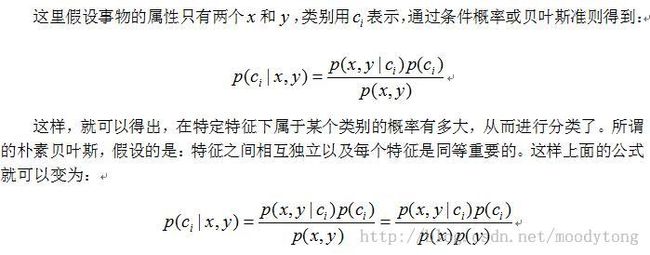

贝叶斯是从统计概率的角度来进行分类,确切来说是条件概率,例如要猜是哪一类动物,该动物具备的特征是:四条腿、高度超过x米、哺乳动物,那么在这些特征前提下,计算其为哪种动物的概率。

二、算法一般流程

1.数据的收集

2.数据的准备:数值型或布尔型

3.分析数据

4.训练算法:计算不同的独立特征的条件概率

5.测试算法:计算错误率

6.使用算法:以实际应用为驱动

三、朴素贝叶斯伪代码

1.计算各个独立特征在各个分类中的条件概率

2.计算各类别出现的概率

3.对于特定的特征输入,计算其相应属于特定分类的条件概率

4.选择条件概率最大的类别作为该输入类别进行返回

四、代码实现与示例

trainBN0中计算各个独立特征在不同分类中的条件概率和各类别的概率(为了更好的计算,避免独立特征条件概率为0,将所有词出现的次数初始化为1,同时将分母初始化为2---即p0Num=ones(numWords) p1Num=ones(numWords) p0Denom=2.0 p1Denom=2.0);setOfWords2Vec根据字典形成相应的vector(vector中只有0和1,对词出现的次数多少不区分),而bagOfWords2VecMN中则进行了区分;在classifyNB中进行条件概率计算时,进行了一些log转换。

from numpy import *

def loadDataSet():

postingList=[['my','dog','has','flea',

'problems','help','please'],

['maybe','not','take','him',

'to','dog','park','stupid'],

['my','dalmation','is','so','cute',

'I','love','him'],

['stop','posting','stupid','worthless','garbage'],

['mr','licks','ate','my','steak','how','to','stop','him'],

['quit','buying','worthless','dog','food','stupid']

]

classVec=[0,1,0,1,0,1]

return postingList, classVec

def createVocabList(dataSet):

vocaSet = set([])

for doc in dataSet:

vocaSet = vocaSet | set(doc)

return list(vocaSet)

def setOfWords2Vec(vocabList, inputSet):

returnVec=[]

for doc in inputSet:

tmpVec = [0]*len(vocabList)

for word in doc:

if word in vocabList:

tmpVec[vocabList.index(word)]=1

else:

print "the word: %s is not in my vacobulary!"%word

returnVec.append(tmpVec)

return returnVec

def bagOfWords2VecMN(vocabList, inputSet):

returnVec = []

for doc in inputSet:

tmpVec = [0]*len(vocabList)

for word in doc:

if word in vocabList:

if word in vocabList:

tmpVec[vocabList.index(word)]+=1

else:

print "the word: %s is not in my vacobulary"%word

returnVec.append(tmpVec)

return returnVec

def trainNB0(trainMatrix, trainCategory):

numTrainDocs = len(trainMatrix)

numWords = len(trainMatrix[0])

pAbusive = sum(trainCategory)/float(numTrainDocs)

'''

p0Num = zeros(numWords)

p1Num = zeros(numWords)

'''

p0Num = ones(numWords)

p1Num = ones(numWords)

p0Denom=2.0

p1Denom=2.0

for i in range(numTrainDocs):

if trainCategory[i]==1:

p1Num += trainMatrix[i]

p1Denom += sum(trainMatrix[i])

else:

p0Num += trainMatrix[i]

p0Denom += sum(trainMatrix[i])

p1Vect = p1Num/p1Denom

p0Vect = p0Num/p0Denom

return p0Vect, p1Vect, pAbusive

def classifyNB(vec2Classify, p0Vec, p1Vec, pClass):

p1 = sum(vec2Classify*p1Vec)+log(pClass)

p0 = sum(vec2Classify*p0Vec)+log(1.0-pClass)

if p1>p0:

return 1

else:

return 0

def testingNB():

listOPosts, listClasses = loadDataSet()

myVocabList = createVocabList(listOPosts)

trainMat = setOfWords2Vec(myVocabList,listOPosts)

p0V, p1V, pAb = trainNB0(array(trainMat),array(listClasses))

result = []

testSet=[['love','my', 'dalmation'],['stupid','garbage']]

testVec = setOfWords2Vec(myVocabList, testSet)

for test in testVec:

tmp_r = classifyNB(test, p0V, p1V, pAb)

result.append(tmp_r)

for i in range(len(testSet)):

print testSet[i]

print "The class of it is: "+str(result[i])

testingNB()