Robust Face Recognition via Sparse Representation

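I wrote quite a bit of code on sparse models in the past, but most of it has gradually gone missing.

Notes on Face Recognition

So here I am tidying up the code that remains.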

These notes come from reading J. Wright ... Yi Ma, "Robust Face Recognition via Sparse Representation", PAMI 2009:

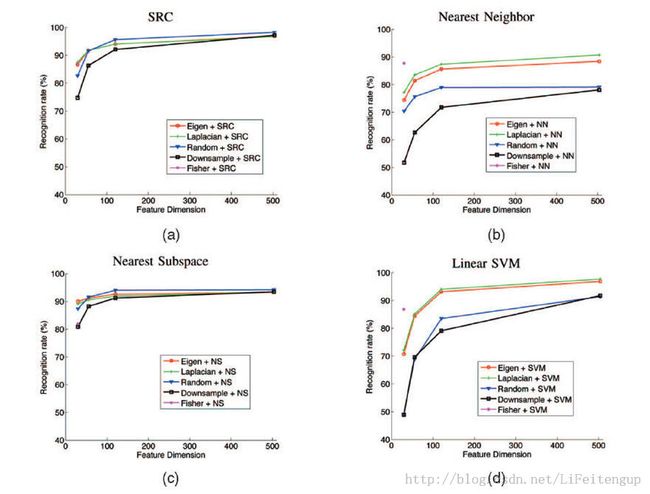

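While reading it I implemented PCA, LDA and Laplacianfaces from scratch. These three differ mainly in how they reduce dimensionality; for recognition, all of them can be paired with the same classifiers: 1-NN, linear SVM, or nearest subspace.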
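As a point of reference for the simplest of these classifiers, a 1-NN rule on reduced features might look like the sketch below. The toy data and the variable names `trainX` and `testX` are hypothetical, not taken from the original code; features are assumed to be stored one sample per column, as in the SRC code in this post.

```matlab
% Toy data: 2-D features, one sample per column (hypothetical values).
trainX = [0 0.1 1 0.9;
          0 0.2 1 1.1];
trainLabel = [1 1 2 2];
testX = [0.05 0.95;
         0.10 1.00];

estLabel = zeros(1, size(testX, 2));
for i = 1:size(testX, 2)
    % Squared Euclidean distance from test sample i to every training column.
    delta = bsxfun(@minus, trainX, testX(:, i));
    d2 = sum(delta.^2, 1);
    % The label of the closest training sample is the prediction.
    [~, nearest] = min(d2);
    estLabel(i) = trainLabel(nearest);
end
disp(estLabel)
```

Swapping the dimensionality reduction step only changes how `trainX` and `testX` are produced; the classifier itself stays the same.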

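By contrast, Robust Face Recognition via Sparse Representation (SRC) uses the sparse representation itself as the classifier, and it is no longer sensitive to the choice of dimensionality reduction (the subspace methods are not sensitive to it either).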
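For reference, the SRC decision rule as I understand it from the paper can be written as follows. Here $A \in \mathbb{R}^{m \times n}$ stacks the training samples of all $K$ classes as unit-norm columns, $y$ is a test sample, and $\delta_k(\cdot)$ keeps only the coefficients that belong to class $k$ (the role played by `sigmaK` in the code in this post). The notation is mine and may differ slightly from the paper's.

$$
\hat{x} = \arg\min_{x} \|x\|_1 \quad \text{subject to} \quad A x = y
$$

$$
r_k(y) = \|y - A\,\delta_k(\hat{x})\|_2, \qquad \text{identity}(y) = \arg\min_{k} r_k(y)
$$

The classifier is therefore the $\ell_1$ solve plus a per-class reconstruction residual, with no separately trained model.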

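Later I found a MATLAB toolbox for dimensionality reduction.

By then I had already written PCA/LDA/Laplacian myself, which cost a lot of time.

Only the SRC part is posted below: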

% test some thinking

clear;close all;clc

DatabasePath = 'C:\Users\CGGI003\Desktop\LiFeiteng\DataSets\CroppedYale';

newSize = floor([192 168]/5); % downsample the Yale faces by a factor of 5

[FaceData, Label] = getFaceData(DatabasePath, newSize);

trainData = FaceData.train;

trainLabel = Label.train;

testData = double(FaceData.test);

testLabel = Label.test;

fprintf('...data loaded...\n')

fprintf('...SRC...\n')

% use the dimensionality reduction toolbox

no_dims = 200; % target dimensionality for PCA

[trainData_R, mapping] = compute_mapping(trainData', 'PCA', no_dims);

trainData_R = trainData_R';

avg = mapping.mean';

testData_R = mapping.M'*testData;

avg = mapping.M'*avg;

% Robust Face Recognition via Sparse Representation(SRC)

SRC_Rate = Recognition_SRC(trainData_R, testData_R, trainLabel, testLabel,avg)

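The SRC part. Note how avg is used: the script projects the test data without subtracting the training mean, so the projected mean avg is subtracted from each test sample inside the function.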
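The handling of avg relies on projection being linear: centering and then projecting gives the same result as projecting and then subtracting the projected mean. The check below is a minimal sketch with made-up numbers; `M` and `mu` are hypothetical stand-ins for `mapping.M` and `mapping.mean'`, and it assumes the toolbox's PCA returns mean-centered projections for the training data.

```matlab
% Hypothetical stand-ins for mapping.M (D x d) and mapping.mean' (D x 1).
D = 6; d = 3;
M  = reshape(1:D*d, D, d) / 10;   % any projection matrix works for this check
mu = (1:D)' / 2;                  % training mean in the original space
x  = [3 1 4 1 5 9]';              % one test sample in the original space

% Center first, then project.
centered_then_projected = M' * (x - mu);

% Project first, then subtract the projected mean
% (what the script and Recognition_SRC do between them).
projected_then_centered = M' * x - M' * mu;

gap = norm(centered_then_projected - projected_then_centered);
fprintf('difference: %g\n', gap);
```

The two results agree up to floating-point rounding, so the test samples end up in the same centered coordinates as the training dictionary.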

function RecognitionRate = Recognition_SRC(A, testData, trainLabel, testLabel, avg)

%

%2013/05/21 algorithm written and tested

%2013/05/22 A -> B=[A I] + test

% Algorithm1 step2

A = double(A); testData = double(testData);

[m, n] = size(testData);

[~, N1] = size(A);

AA = A.*A;

norm_col = sqrt(sum(AA,1));

norm_col = repmat(norm_col,size(A,1),1);

A = A./norm_col;

%% add handling for occlusion

B = [A eye(m)];

K = max(size(unique(trainLabel)));

sigmaK = [];

for k = 1:K

sigmaK(:,k) = ones(N1,1).*(trainLabel==k)';

end

estLabel = [];

h = waitbar(0,'Recognition...');

N = size(B,2);

for i = 1:n

y = testData(:,i)-avg;

w = SolveBP(B, y, N); %%SparseLab toolbox

residual = [];

x = w(1:N1);

for k =1:K

residual(k) = norm(y - A*(sigmaK(:,k).*x));

end

[~,index] = min(residual);

estLabel(1,i) = index;

waitbar(i/n);

end

close(h);

RecognitionRate = sum(estLabel==testLabel)/max(size(testLabel));

end
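To make the class-wise residual step easier to follow, here is a small worked sketch on a toy dictionary. The values are hypothetical, and the coefficient vector `x` is written by hand instead of coming from `SolveBP`; only the masking and residual logic mirrors the function above.

```matlab
% Toy dictionary with unit-norm columns: 4 atoms, 2 classes (hypothetical values).
A = eye(4);
trainLabel = [1 1 2 2];
x = [0.9 0.1 0 0.05]';      % pretend this came from the l1 solver
y = A * x;                  % test sample that x reconstructs exactly

K = numel(unique(trainLabel));
residual = zeros(1, K);
for k = 1:K
    % Keep only the coefficients of class k (same role as sigmaK(:,k)).
    mask = (trainLabel == k)';
    residual(k) = norm(y - A * (mask .* x));
end

% The class whose atoms reconstruct y best is the prediction.
[~, estLabel] = min(residual);
```

Almost all of the coefficient mass sits on class 1, so its residual is far smaller than that of class 2 and the sample is assigned to class 1.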

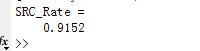

Recognition rate with PCA at 200 dimensions:

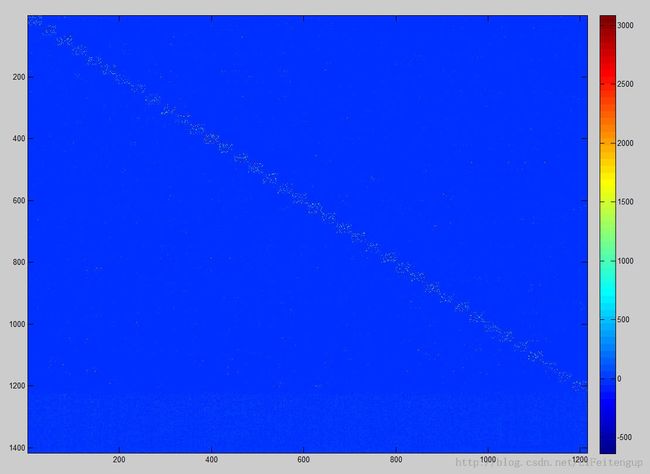

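The coefficient matrix for the test set. The test samples are ordered by class, so you can see that the nonzero coefficients concentrate on the columns of the matching class, as expected.

Because the dictionary is extended to [A I] and the CroppedYale database is used, it is understandable that many of the coefficients on the I part are nonzero.

The dictionary A could be processed further, which should improve the results somewhat.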
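The extended dictionary corresponds to modelling each test sample as a sparse combination of training samples plus an error term. A sketch of that model in my own notation, with $B = [A,\ I]$ and $w$ the stacked coefficient vector returned by the solver:

$$
y = A x + e = \begin{bmatrix} A & I \end{bmatrix} \begin{bmatrix} x \\ e \end{bmatrix} = B w,
\qquad
\hat{w} = \arg\min_{w} \|w\|_1 \quad \text{subject to} \quad B w = y
$$

$$
r_k(y) = \|y - \hat{e} - A\,\delta_k(\hat{x})\|_2
$$

As far as I recall, the second line is the residual the paper uses for this extended model. The code in this post computes `norm(y - A*(sigmaK(:,k).*x))`, that is, without subtracting the estimated error $\hat{e}$ (which would be `w(N1+1:end)`), so the two differ whenever the I part carries nonzero coefficients.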