A couple of years ago I implemented an LRU cache to lookup keyword IDs for keywords. The data structure turned out to be an interesting one because the required throughput was high enough to eliminate heavy use of locks and the synchronized keyword — the application was implemented in Java.

It occurred to me that a sequence of atomic reference assignments would be sufficient to keep LRU order around a ConcurrentHashMap. I began by wrapping the value with an entry that has a reference to a node in a doubly-linked LRU list. The tail of the list keeps track of which entries were most recently used, and the head identifies those that may be evicted when the cache reaches a certain size. Each node refers back to the entry for which it was created on lookup.

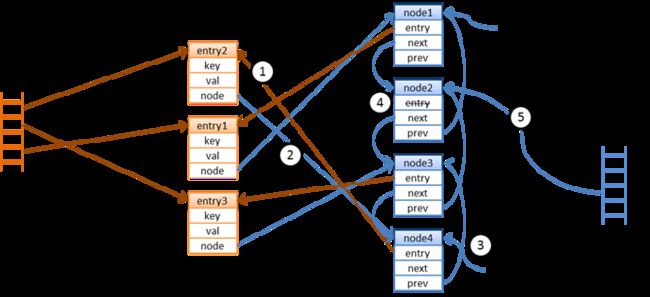

LRU cache with 3 entries and 3 nodes

When you look up a value by key, the cache first checks the map to see if such a value exists. If not, it relies on a loader to load the value from a data source in read-through manner and enters the value into the map using a put-if-absent method. The challenge in ensuring high throughput lies in efficient maintenance of the LRU list. The concurrent hash map is partitioned and doesn’t suffer much thread contention as long as the number of threads stays below a certain level (you can specify a concurrency level when you construct the map). But the LRU list cannot be partitioned in the same manner. To deal with this problem I introduced an auxiliary queue that is used for cleanup operations.

There are six basic operations involved in looking up a value in the cache. For a cache hit, a lookup involves two basic operations: get and offer. For a cache miss it involves four basic operations: get, load, put and offer. On a put, we may also trigger the evict operation, and on a get with a cache hit, we passively do some cleanup in the LRU list — let’s call it a purge operation.

get : lookup entry in the map by key load : load value from a data source put : create entry and map it to key offer: append a node at the tail of the LRU list that refers to a recently accessed entry evict: remove nodes at the head of the list and associated entries from the map (after the cache reaches a certain size) purge: delete unused nodes in the LRU list -- we refer to these nodes as holes, and the cleanup queue keeps track of these

The evict and purge operations are handled in bulk. Let’s take a look at the details of each operation.

The get operation works as follows:

get(K) -> V

lookup entry by key k

if cache hit, we have an entry e

offer entry e

try purge some holes

else

load value v for key k

create entry e <- (k,v)

try put entry e

end

return value e.v

If the key exists, we offer a new node at the tail of the LRU list indicating that this is a recently accessed value. The sequence of get and offer isn't executed as an atomic operation (there's no lock here), so we can't say that the offered node will refer to the most recently accessed entry, but it'll be one of the most recently accessed entries when we have concurrent executions of get. We don't enforce a strict order for get and offer pairs across threads as that would limit throughput significantly. After offering a node we try to do some cleanup and then return the value. We'll take a closer look at the offer and purge operations below.

If a cache miss occurs, we invoke the loader to load the value for the key, create a new entry and try to put it into the map. The put operation works like this:

put(E) -> E

existing entry ex <- map.putIfAbsent(e.k, e)

if absent

offer entry e;

if size reaches evict-threshold

evict some entries

end

return entry e

else, we have an existing entry ex

return entry ex

end

As you can see, two or more threads may compete in putting an entry into the map, but only one will win and thus invoke offer. After offering a node at the tail of the LRU list we check to see whether the size of the cache has reached a threshold above which we trigger batch eviction. In this particular implementation, the threshold is set at a low multiple of the concurrency level above the cache capacity. Eviction occurs in small batches, not one entry at a time; and multiple threads may participate in eviction until the size falls to the cache capacity. Lock free and thread safe, eviction entails removing nodes at the head of the LRU list and relies on careful atomic assignment of references to prevent multiple threads from stepping over each other while removing entries in the map.

The offer operation is interesting in that it always creates a new node and doesn't attempt to move or immediately delete nodes in the LRU list which are no longer needed.

offer(E)

if tail node doesn't refer to entry e

assign current node c <- e.n

create a new node n(e), new node refers to entry e

if atomic compare-and-set node e.n, expect c, assign n

add node n to tail of LRU list

if node c not null

set entry c.e to null, c now has a hole

add node c to cleanup queue

end

end

end

First it will check that the node at the tail of the list doesn't already refer to the just accessed entry. This is unlikely unless all threads frequently access the same key/value pair. It will create a new node to be offered at the tail of the list if the entry is different. Before offering the node, it attempts a compare-and-set to assign the node to the entry, which prevents multiple threads from doing the same work.

The thread that successfully assigns the node proceeds to offer the new node at the tail of the LRU list. This operation is the same as what you would find in a ConcurrentLinkedQueue, which relies on algorithms described inSimple, Fast, and Practical Non-Blocking and Blocking Concurrent Queue Algorithms by Maged M. Michael and Michael L. Scott.

The thread then checks if the entry was previously associated with another node (cache hit). If it was, the old node is not immediately deleted, but rather just marked as a hole (its entry reference is set to null) and added to the cleanup queue.

Order of assignments in offer(E) during a lookup of entry 2. The cleanup queue captures node2 as a new hole.

What happens to the nodes in the cleanup queue? We specify a threshold (typically a multiple of the concurrency level) for the size of the cleanup queue. The cache regularly purges nodes to keep the number of nodes in the cleanup queue under this threshold. It does so in a passive manner, i.e. without relying on a background thread for cleanup. A thread that looks up a value without suffering a cache miss purges some of the oldest nodes in the cleanup queue as a side-effect.

We limit execution of the purge operation to just one thread at a time because node removal involves two updates: a reference to the successor and a reference to the predecessor node. If more than one thread could delete a node in the list, a lock would be required to guarantee a safe delete and correct visibility. Instead, we use an atomic flag to guard the batch purge operation. A thread that successfully sets the flag, purges some nodes and then unsets it again. This particular implementation purges nodes until the count falls below a low multiple of the concurrency level. An atomic integer is used to keep track of the number of nodes in the cleanup queue, and the queue itself is implemented as a ConcurrentLinkedQueue.

How does this cache perform? It depends. I recently got a new workstation with the following specs: dual CPU with 6 cores each for a total of 12 cores, 24GB of RAM, three drives, one of which is an SSD. It was reason enough for me to write another test for my LRU Cache and tweak it for 12 cores. I remember testing the throughput of the cache on my old system a couple of years ago where I achieved almost 1 million (1M) lookups per second with 4 cores and 16 threads.

Before we look at some of the results, let me emphasize that the data set (e.g. the frequency distribution of keywords), the ratio of threads to cores, the concurrency level, the various thresholds (evict and purge) and certain implementation details have a significant impact on throughput; so take these results with a grain of salt. My test is quite simple and deliberately minimizes the impact of load times by reducing the load operation to a very cheap string-to-number conversion. I'm primarily interested in measuring cache throughput in lookups per second given the overhead of maintaining LRU order – ignoring the fact that loading values from a real data source would lead to very different results.

The cache capacity is set to 1M, the set of possible values is 10M, but 2M of these are modeled as non-existent; which forces the cache to store a sentinel. So what is the maximum number of lookups per second?

On my new workstation and with the data set that I have, I can achieve well over 1M lookups/s with up to 3 threads per core (3x12 = 36 -- the concurrency level). With more than 3 threads per core, throughput starts to deteriorate. The eviction rate for this test, which depends heavily on the frequency distribution of the lookup keys, comes in at around 5%.

After some experimenting, I can say that cache performance is quite sensitive to the way purge operates. I've implemented some optimizations and enhancements to deal with edge cases (e.g. no eviction when everything fits into the cache) which are left as exercises to the reader; but you can get a sense of how dramatically performance changes by looking at the following snapshots of CPU Usage History in my task manager.

When things are running smoothly, you can see all CPUs are kept busy and the graphs are quite flat.

If the number of threads increases to the point where the purge operation can't keep up, the graphs will start to look increasingly choppy.

I’ve also encountered some very choppy graphs like the one below where throughput basically goes off a cliff.

I haven’t encountered a real application with enough traffic to require this level of throughput, but it’s good to know the LRU cache can be ruled out when there’s an I/O bottleneck in the system.

There are some draw-backs to this particular implementation. The cost of lookups isn't consistent due to the side-effects of eviction and purging. We may achieve more consistent behavior with an active cache that has its own background thread to handle cleanup operations. One might also consider throttling as an option to prevent overload.

If we examine memory usage, it becomes clear that this data structure would benefit greatly from a C/C++ implementation. The Java object model imposes a significant overhead when the keys and values are small. So if you have the option, I would recommend using C/C++ with free-lists to efficiently manage memory for entries and nodes. A C/C++ implementation would also open up new possibilities for memory-based optimizations. While I don’t have concrete measurements, I suspect performance of the Java implementation is bounded to some degree by the pressure it puts on hardware caches on the data path to memory.