Analysis of the Android Camera Recording Process

While debugging the system Camera recently, I ran into the following problem: unplugging the camera during recording makes the application hang.

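First, the existing design:

When the user unplugs the camera, a broadcast is sent to the application. On receiving it, the application calls Activity.finish() (the system then calls onPause automatically), and our onPause implementation stops recording and closes the camera.

The problem:

When MediaRecorder.stop() is called to stop recording, the call blocks and the application hangs.

The rest of this post focuses on the system Camera and MediaRecorder, in particular the relationship between MPEG4Writer and CameraSource in libstagefright.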

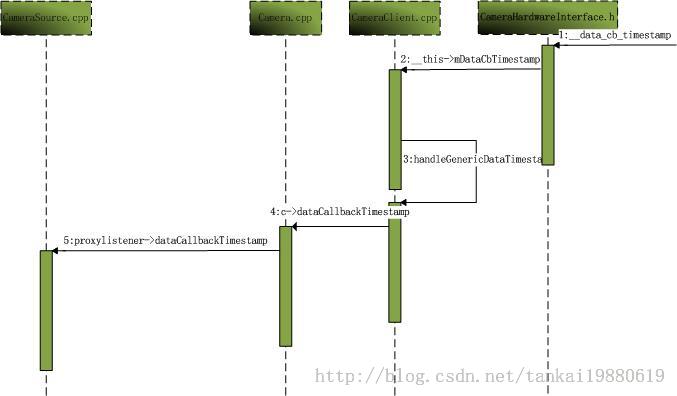

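First, the diagrams show the call sequences when the Android system Camera records video:

1. Recording command sequence

2. Recording data callback sequence

I. Application layer

1. Main Activity startup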

packages/apps/Camera/src/com/android/camera/CameraActivity.java

public void onCreate(Bundle state)

{

mCurrentModule.init(this, mFrame, true);

}

2. Recording Activity (VideoModule) initialization

packages/apps/Camera/src/com/android/camera/VideoModule.java

public void init(CameraActivity activity, View root, boolean reuseScreenNail) {

CameraOpenThread cameraOpenThread = new CameraOpenThread();

/*

protected class CameraOpenThread extends Thread {

@Override

public void run() {

openCamera();

}

}

*/

}

3. Starting and stopping recording

// Called when the user taps the record button

public void onShutterButtonClick()

{

startVideoRecording();

}

private void startVideoRecording()

{

initializeRecorder();

/*

private void initializeRecorder()

{

mMediaRecorder = new MediaRecorder();

// setCamera() below ends up in frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp:

// status_t StagefrightRecorder::setCamera(const sp<ICamera> &camera,

// const sp<ICameraRecordingProxy> &proxy) {

// mCamera = camera;

// }

mMediaRecorder.setCamera(mActivity.mCameraDevice.getCamera());

mMediaRecorder.setAudioSource(MediaRecorder.AudioSource.CAMCORDER);

mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);

mMediaRecorder.setProfile(mProfile);

mMediaRecorder.setMaxDuration(mMaxVideoDurationInMs);

mMediaRecorder.setOutputFile(mVideoFilename);

}

*/

mMediaRecorder.start();

}

// Called when the user taps to stop recording

public void onShutterButtonClick() {

onStopVideoRecording();

}

private void onStopVideoRecording() {

stopVideoRecording();

}

private boolean stopVideoRecording() {

mMediaRecorder.setOnErrorListener(null);

mMediaRecorder.setOnInfoListener(null);

mMediaRecorder.stop();

closeCamera(closeEffects);

}

II. Framework layer

1. MediaRecorder API layer

frameworks/base/media/java/android/media/MediaRecorder.java

public native void start() throws IllegalStateException;

public native void stop() throws IllegalStateException;

2. Native (JNI) layer

frameworks/base/media/jni/android_media_MediaRecorder.cpp

static JNINativeMethod gMethods[] = {

{"start", "()V", (void *)android_media_MediaRecorder_start},

{"stop", "()V", (void *)android_media_MediaRecorder_stop},

};

static void

android_media_MediaRecorder_start(JNIEnv *env, jobject thiz)

{

ALOGV("start");

sp<MediaRecorder> mr = getMediaRecorder(env, thiz);

process_media_recorder_call(env, mr->start(), "java/lang/RuntimeException", "start failed.");

}

static void

android_media_MediaRecorder_stop(JNIEnv *env, jobject thiz)

{

ALOGV("stop");

sp<MediaRecorder> mr = getMediaRecorder(env, thiz);

process_media_recorder_call(env, mr->stop(), "java/lang/RuntimeException", "stop failed.");

}
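
Both wrappers hand the native status to process_media_recorder_call together with an exception class and a message. The helper's body is not shown in this post, so the following is only a minimal sketch of what such a status-to-exception mapping might look like. It assumes that an "invalid operation" status maps to IllegalStateException (suggested by the `throws IllegalStateException` declarations above) and that any other failure uses the class and message passed in. `checkRecorderCall`, `PendingException` and the status values are hypothetical names for illustration, and no real JNI call is made.

```cpp
#include <string>

using status_t = int;
constexpr status_t kOk = 0;
constexpr status_t kInvalidOperation = -38;  // illustrative value only

// Describes the Java exception a JNI wrapper would raise (hypothetical type).
struct PendingException {
    std::string className;
    std::string message;
};

// Returns true and fills *out when the status should surface as a Java
// exception; returns false when the call succeeded.
bool checkRecorderCall(status_t status,
                       const std::string& exceptionClass,
                       const std::string& message,
                       PendingException* out) {
    if (status == kOk) {
        return false;
    }
    if (status == kInvalidOperation) {
        // Assumption: wrong-state calls become IllegalStateException.
        *out = PendingException{"java/lang/IllegalStateException", ""};
        return true;
    }
    // Any other failure uses the class and message chosen by the caller.
    *out = PendingException{exceptionClass, message};
    return true;
}
```

Under these assumptions, a failing mr->stop() would reach the application as a RuntimeException carrying "stop failed.", while a stop() that never returns produces no exception at all, which matches the hang analysed here.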

3. C++ layer

frameworks/av/media/libmedia/MediaRecorder.cpp

status_t MediaRecorder::start()

{

status_t ret = mMediaRecorder->start();

/*

MediaRecorder::MediaRecorder() : mSurfaceMediaSource(NULL)

{

const sp<IMediaPlayerService>& service(getMediaPlayerService());

mMediaRecorder = service->createMediaRecorder(getpid());

//frameworks/av/media/libmediaplayerservice/MediaPlayerService.cpp

//sp<IMediaRecorder> MediaPlayerService::createMediaRecorder(pid_t pid)

//{

// sp<MediaRecorderClient> recorder = new MediaRecorderClient(this, pid);

// return recorder;

//}

}

*/

}

4. Server side (via Android Binder)

frameworks/av/media/libmediaplayerservice/MediaRecorderClient.cpp

MediaRecorderClient::MediaRecorderClient(const sp<MediaPlayerService>& service, pid_t pid)

{

ALOGV("Client constructor");

mPid = pid;

mRecorder = new StagefrightRecorder;

mMediaPlayerService = service;

}

status_t MediaRecorderClient::start()

{

return mRecorder->start();

}
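
The chain so far is: libmedia's MediaRecorder holds an IMediaRecorder obtained from MediaPlayerService, and MediaRecorderClient forwards each call to StagefrightRecorder. The sketch below models only that delegation in plain C++, with Binder left out entirely. All class names ending in `Sketch`, the status values and the double-start check are assumptions made for illustration, not AOSP code.

```cpp
#include <memory>

using status_t = int;
constexpr status_t kOk = 0;
constexpr status_t kInvalidOperation = -1;  // illustrative value only

// Plays the role of StagefrightRecorder: the object that does the work.
class EngineSketch {
public:
    status_t start() {
        if (started_) return kInvalidOperation;
        started_ = true;
        return kOk;
    }
    status_t stop() {
        if (!started_) return kInvalidOperation;
        started_ = false;
        return kOk;
    }

private:
    bool started_ = false;
};

// Plays the role of the IMediaRecorder interface seen by the client.
class IRecorderSketch {
public:
    virtual ~IRecorderSketch() = default;
    virtual status_t start() = 0;
    virtual status_t stop() = 0;
};

// Plays the role of MediaRecorderClient: forwards every call to the engine.
class RecorderClientSketch : public IRecorderSketch {
public:
    status_t start() override { return engine_.start(); }
    status_t stop() override { return engine_.stop(); }

private:
    EngineSketch engine_;
};

// Plays the role of MediaPlayerService::createMediaRecorder().
inline std::shared_ptr<IRecorderSketch> createRecorderSketch() {
    return std::make_shared<RecorderClientSketch>();
}

// Plays the role of the libmedia MediaRecorder used by the JNI layer.
class MediaRecorderSketch {
public:
    MediaRecorderSketch() : remote_(createRecorderSketch()) {}
    status_t start() { return remote_->start(); }
    status_t stop() { return remote_->stop(); }

private:
    std::shared_ptr<IRecorderSketch> remote_;
};
```

Because every layer simply returns what the next one returns, a stop() that blocks at the bottom of the chain blocks the caller at the top as well.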

Android 4.2 multimedia uses the Stagefright framework.

frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp

status_t StagefrightRecorder::start() {

switch (mOutputFormat) {

case OUTPUT_FORMAT_MPEG_4:

status = startMPEG4Recording();

break;

case OUTPUT_FORMAT_AMR_WB:

status = startAMRRecording();

break;

case OUTPUT_FORMAT_AAC_ADTS:

status = startAACRecording();

break;

case OUTPUT_FORMAT_RTP_AVP:

status = startRTPRecording();

break;

case OUTPUT_FORMAT_MPEG2TS:

status = startMPEG2TSRecording();

break;

}

}

status_t StagefrightRecorder::startMPEG4Recording() {

status_t err = setupMPEG4Recording(

mOutputFd, mVideoWidth, mVideoHeight,

mVideoBitRate, &totalBitRate, &mWriter);

/*

status_t StagefrightRecorder::setupMPEG4Recording(

int outputFd,

int32_t videoWidth, int32_t videoHeight,

int32_t videoBitRate,

int32_t *totalBitRate,

sp<MediaWriter> *mediaWriter) {

sp<MediaWriter> writer = new MPEG4Writer(outputFd);

if (mVideoSource < VIDEO_SOURCE_LIST_END) {

sp<MediaSource> mediaSource;

// Create the CameraSource:

// This step uses mCamera, which the application set via mMediaRecorder.setCamera(mActivity.mCameraDevice.getCamera())

err = setupMediaSource(&mediaSource);

sp<MediaSource> encoder;

// Important: this sets the encoder input format, e.g. YUV420SP

err = setupVideoEncoder(mediaSource, videoBitRate, &encoder);

//sp<MetaData> meta = cameraSource->getFormat();

//CameraSource.cpp

////mColorFormat = getColorFormat(params.get(CameraParameters::KEY_VIDEO_FRAME_FORMAT));

//CameraHal.cpp:

////p.set(CameraParameters::KEY_VIDEO_FRAME_FORMAT, (const char *) CameraParameters::PIXEL_FORMAT_YUV420SP);

//setVideoInputFormat(mMIME, meta);

//setVideoPortFormatType

writer->addSource(encoder);

}

if (!mCaptureTimeLapse && (mAudioSource != AUDIO_SOURCE_CNT)) {

// Create the AudioSource; this step does new AudioRecord

err = setupAudioEncoder(writer);

if (err != OK) return err;

*totalBitRate += mAudioBitRate;

}

}

*/

err = mWriter->start(meta.get());

}

MPEG4 writer (MPEG4Writer) part

frameworks/av/media/libstagefright/MPEG4Writer.cpp

status_t MPEG4Writer::Track::start(MetaData *params) {

pthread_create(&mThread, &attr, ThreadWrapper, this);

/*

void *MPEG4Writer::Track::ThreadWrapper(void *me) {

Track *track = static_cast<Track *>(me);

status_t err = track->threadEntry();

return (void *) err;

}

status_t MPEG4Writer::Track::threadEntry() {

while (!mDone && (err = mSource->read(&buffer)) == OK) {

// For the video track, the read() above ends up in the read() of frameworks/av/media/libstagefright/CameraSource.cpp

}

}

*/

}

status_t MPEG4Writer::Track::stop() {

mDone = true;

void *dummy;

pthread_join(mThread, &dummy); // wait for the recording thread started above to exit

}
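
The start/stop pair above follows a common pattern: a worker thread loops on a blocking read while a flag is clear, and stop() sets the flag and joins the thread. The following is a minimal, self-contained sketch of that pattern using std::thread and std::atomic rather than the AOSP code. `TrackSketch` and its `read` callable are hypothetical stand-ins for MPEG4Writer::Track and mSource->read().

```cpp
#include <atomic>
#include <functional>
#include <thread>
#include <utility>

// Hypothetical stand-in for MPEG4Writer::Track; not the AOSP class.
class TrackSketch {
public:
    // `read` plays the role of mSource->read(): it returns true when a
    // buffer was delivered and false on error or end of stream.
    explicit TrackSketch(std::function<bool()> read) : read_(std::move(read)) {}

    ~TrackSketch() { stop(); }

    void start() {
        done_ = false;
        thread_ = std::thread([this] {
            // Mirrors `while (!mDone && mSource->read(&buffer) == OK)`.
            while (!done_.load() && read_()) {
                ++buffers_;
            }
        });
    }

    // Mirrors Track::stop(): raise the flag, then wait for the worker.
    // The flag is only checked between reads, so if read_() never
    // returns, join() never returns either.
    void stop() {
        done_ = true;
        if (thread_.joinable()) thread_.join();
    }

    int buffers() const { return buffers_.load(); }

private:
    std::function<bool()> read_;
    std::atomic<bool> done_{false};
    std::atomic<int> buffers_{0};
    std::thread thread_;
};
```

In this sketch the flag is atomic, so the worker does see it; what decides whether stop() returns is whether the read call itself ever comes back.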

Whenever a frame arrives, CameraSource's dataCallbackTimestamp function is called; this is how video recording proceeds.

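III. Analysing the problem

Our problem occurs when the camera is unplugged directly: the application's call to mMediaRecorder.stop() never returns and the application hangs. Analysis traced this to the framework code above: once the camera is removed, the recording thread cannot exit, so stop() blocks in pthread_join. Debugging showed that setting mDone in stop() was not enough to make the recording thread leave its loop, which is consistent with the thread still waiting inside mSource->read() for frames that no longer arrive. We then applied an earlier patch (see bug 4724339) that modifies the mSource->read path used by the recording thread and adds a check in the corresponding while loop; this resolved the problem.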

frameworks/av/media/libstagefright/CameraSource.cpp

status_t CameraSource::read(

MediaBuffer **buffer, const ReadOptions *options) {

ALOGW("Timed out waiting for incoming camera video frames: %lld us",

mLastFrameTimestampUs);

//add by tankai

// Make read() return on timeout so that OMXCodec::read returns in the writer and the

// writer thread (e.g. VECaptureWriter) can exit when the media recorder stops.

// Reason: if the media recorder is started but no frame reaches OMXCodec (with a camera source),

// the media recorder can never be stopped, because the writer thread cannot exit.

// This change affects these cameras: the vecapture fake camera and Webcam.

return ERROR_END_OF_STREAM;

//end tankai

}
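
The effect of the patch can be illustrated with a reader that gives up after a timeout instead of waiting again. This is a minimal sketch of that idea, not the patched CameraSource. `TimedFrameSourceSketch`, `ReadStatus` and the timeout parameter are hypothetical, and frames are reduced to their timestamps.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <mutex>

enum class ReadStatus { kOk, kEndOfStream };

// Hypothetical frame source whose read() is bounded by a timeout.
class TimedFrameSourceSketch {
public:
    explicit TimedFrameSourceSketch(std::chrono::milliseconds timeout)
        : timeout_(timeout) {}

    // Called by the producer (the camera callback in this model).
    void onFrame(int64_t timestampUs) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            frames_.push_back(timestampUs);
        }
        available_.notify_one();
    }

    // Waits at most timeout_ for a frame. On timeout it reports end of
    // stream, so a loop of the form `while (!done && read() == OK)` ends.
    ReadStatus read(int64_t* timestampUs) {
        std::unique_lock<std::mutex> lock(mutex_);
        bool gotFrame = available_.wait_for(
            lock, timeout_, [this] { return !frames_.empty(); });
        if (!gotFrame) {
            return ReadStatus::kEndOfStream;
        }
        *timestampUs = frames_.front();
        frames_.pop_front();
        return ReadStatus::kOk;
    }

private:
    std::chrono::milliseconds timeout_;
    std::mutex mutex_;
    std::condition_variable available_;
    std::deque<int64_t> frames_;
};
```

The trade-off in this approach is that a source which merely stalls for longer than the timeout is also treated as finished, so the timeout value has to be chosen with the slowest supported camera in mind.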

IV. Supplement: the audio flow in MPEG4 recording, continuing from the implementation analysed in Part II

1. Audio recording

frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp

status_t StagefrightRecorder::setupAudioEncoder(const sp<MediaWriter>& writer) {

sp<MediaSource> audioEncoder = createAudioSource();

writer->addSource(audioEncoder);

}

sp<MediaSource> StagefrightRecorder::createAudioSource() {

sp<AudioSource> audioSource =

new AudioSource(

mAudioSource,

mSampleRate,

mAudioChannels);

}

frameworks/av/media/libstagefright/AudioSource.cpp

AudioSource::AudioSource(

audio_source_t inputSource, uint32_t sampleRate, uint32_t channelCount)

: mRecord(NULL),

mStarted(false),

mSampleRate(sampleRate),

mPrevSampleTimeUs(0),

mNumFramesReceived(0),

mNumClientOwnedBuffers(0) {

mRecord = new AudioRecord(

inputSource, sampleRate, AUDIO_FORMAT_PCM_16_BIT,

audio_channel_in_mask_from_count(channelCount),

bufCount * frameCount,

AudioRecordCallbackFunction,

this,

frameCount);

}

At this point, MediaRecorder is connected to AudioFlinger through AudioRecord.
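
The AudioRecord constructor call above passes a callback function together with `this` as the user pointer, which is the usual way to route a C-style callback back into a C++ object. The sketch below shows that trampoline pattern in isolation. `CaptureDeviceSketch` is a hypothetical stand-in for AudioRecord, and `AudioSourceSketch` only counts the samples it receives.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// C-style callback signature: an opaque user pointer plus the data.
using CaptureCallback = void (*)(void* user, const int16_t* samples,
                                 std::size_t count);

// Hypothetical capture device standing in for AudioRecord.
class CaptureDeviceSketch {
public:
    CaptureDeviceSketch(CaptureCallback callback, void* user)
        : callback_(callback), user_(user) {}

    // Simulates the device delivering one buffer of 16-bit PCM samples.
    void deliver(const std::vector<int16_t>& pcm) {
        callback_(user_, pcm.data(), pcm.size());
    }

private:
    CaptureCallback callback_;
    void* user_;
};

// Hypothetical stand-in for AudioSource: owns the device and receives data.
class AudioSourceSketch {
public:
    AudioSourceSketch() : device_(&AudioSourceSketch::trampoline, this) {}

    CaptureDeviceSketch& device() { return device_; }
    std::size_t samplesReceived() const { return samplesReceived_; }

private:
    // Static function with the C signature; recovers the object from `user`.
    static void trampoline(void* user, const int16_t* samples,
                           std::size_t count) {
        static_cast<AudioSourceSketch*>(user)->onData(samples, count);
    }

    void onData(const int16_t* /*samples*/, std::size_t count) {
        samplesReceived_ += count;
    }

    std::size_t samplesReceived_ = 0;
    CaptureDeviceSketch device_;
};
```

A real source would queue the received buffers for the writer rather than count them; the counting here only keeps the example short.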

2. Audio playback

The server side of MediaPlayer audio playback (the application/client flow will be analysed in detail later, when time permits):

frameworks/av/media/libmediaplayerservice/MediaPlayerService.cpp

status_t MediaPlayerService::AudioOutput::open(

uint32_t sampleRate, int channelCount, audio_channel_mask_t channelMask,

audio_format_t format, int bufferCount,

AudioCallback cb, void *cookie,

audio_output_flags_t flags)

{

AudioTrack *t;

// AudioTrack is what ultimately plays the sound

t = new AudioTrack(

mStreamType,

sampleRate,

format,

channelMask,

frameCount,

flags,

CallbackWrapper,

newcbd,

0, // notification frames

mSessionId);

}