Android Media Player Source Code Analysis (Framework to Native)

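I. Overview

Note: this walkthrough covers the media player framework in Android 4.0. Starting from the source of a simple media player APK, we trace the path step by step from Java through JNI to C/C++ to see how a single Java MediaPlayer object drives decoding and output to the screen.

On Android, video is usually played with the MediaPlayer class and displayed in a SurfaceView widget.

II. APK implementation

First, add a SurfaceView to the main.xml layout file to render the video frames:

<SurfaceView android:layout_width="fill_parent" android:layout_height="240dip" android:id="@+id/surfaceView"/>

A simple video player typically calls the following methods: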

SurfaceView surfaceView = (SurfaceView)this.findViewById(R.id.surfaceView);

surfaceView.getHolder().setFixedSize(720, 576); // set the resolution

/* The Surface does not maintain its own buffers; it waits for the screen's rendering engine to push content to the user */

surfaceView.getHolder().setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

/* Create a MediaPlayer */

MediaPlayer mediaPlayer = new MediaPlayer();

mediaPlayer.reset(); // reset to the initial state

mediaPlayer.setAudioStreamType(AudioManager.STREAM_MUSIC);

/* Render the video through the SurfaceHolder */

mediaPlayer.setDisplay(surfaceView.getHolder());

mediaPlayer.setDataSource("/mnt/sdcard/test.ts");

mediaPlayer.prepare(); // prepare (buffer)

mediaPlayer.start(); // start playback

mediaPlayer.pause(); // pause playback

mediaPlayer.start(); // resume playback

mediaPlayer.stop(); // stop playback

mediaPlayer.release(); // release resources

III. Source code analysis

Let's start with the MediaPlayer class. The Java-layer MediaPlayer.java lives in frameworks/base/media/java/android/media/:

public class MediaPlayer

{

...

static {

System.loadLibrary("media_jni");

native_init();

}

private int mNativeContext;

private int mNativeSurfaceTexture;

private int mListenerContext;

private SurfaceHolder mSurfaceHolder;

private EventHandler mEventHandler;

public MediaPlayer() {

Looper looper;

if((looper = Looper.myLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else if((looper = Looper.getMainLooper()) != null) {

mEventHandler = new EventHandler(this, looper);

} else {

mEventHandler = null;

}

native_setup(new WeakReference<MediaPlayer>(this));

}

....

}

(1) The static block

The static block first loads the libmedia_jni.so library and then calls native_init(), whose JNI implementation is:

static void android_media_MediaPlayer_native_init(JNIEnv* env)

{

jclass clazz;

clazz = env->FindClass("android/media/MediaPlayer");

fields.context = env->GetFieldID(clazz, "mNativeContext", "I"); // Java field that holds the native MediaPlayer object

/* Static Java method the JNI layer uses to post events to Java */

fields.post_event = env->GetStaticMethodID(clazz, "postEventFromNative", "(Ljava/lang/Object;IIILjava/lang/Object;)V");

fields.surface_texture = env->GetFieldID(clazz, "mNativeSurfaceTexture", "I");

jclass surface = env->FindClass("android/view/Surface");

fields.bitmapClazz = env->FindClass("android/graphics/Bitmap");

fields.bitmapConstructor = env->GetMethodID(fields.bitmapClazz, "<init>", "(I[BZ[BI)V"); // look up the Bitmap constructor

}

(2) The MediaPlayer constructor

The MediaPlayer constructor creates an EventHandler, an inner class of MediaPlayer that extends Handler. It handles messages such as MEDIA_PREPARED, MEDIA_PLAYBACK_COMPLETE, MEDIA_BUFFERING_UPDATE, MEDIA_SEEK_COMPLETE, MEDIA_SET_VIDEO_SIZE, MEDIA_ERROR, MEDIA_INFO, MEDIA_TIMED_TEXT and MEDIA_NOP by calling the matching listener callbacks: OnPreparedListener.onPrepared(), OnCompletionListener.onCompletion(), OnBufferingUpdateListener.onBufferingUpdate(), and so on. An application handles these events by implementing the corresponding interfaces.

The key line is native_setup(new WeakReference<MediaPlayer>(this)), which calls this JNI function:

static void android_media_MediaPlayer_setup(JNIEnv* env, jobject thiz, jobject weak_this)

{

/* thiz is the Java-layer MediaPlayer object; weak_this is a weak reference to that same object */

/* First create a native MediaPlayer object in the JNI layer */

sp<MediaPlayer> mp = new MediaPlayer();

// Create new listener and give it to MediaPlayer

sp<JNIMediaPlayerListener> listener = new JNIMediaPlayerListener(env, thiz, weak_this);

mp->setListener(listener);

setMediaPlayer(env, thiz, mp); // Stow our new C++ MediaPlayer in the Java object

}

First, look at the JNIMediaPlayerListener class:

class JNIMediaPlayerListener : public MediaPlayerListener

{

public:

JNIMediaPlayerListener(JNIEnv* env, jobject thiz, jobject weak_thiz);

~JNIMediaPlayerListener();

virtual void notify(int msg, int ext1, int ext2, const Parcel *obj = NULL);

private:

JNIMediaPlayerListener();

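jclass mClass; // reference to the MediaPlayer class

jobject mObject; // weak reference to the Java-layer MediaPlayer object

};

The function to focus on is notify(), which the JNI layer uses to deliver events to the Java layer; it comes up again when we analyze the lower-level playback path: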

void JNIMediaPlayerListener::notify(int msg, int ext1, int ext2, const Parcel *obj)

{

JNIEnv *env = AndroidRuntime::getJNIEnv();

if(obj && obj->dataSize() > 0)

{

jbyteArray jArray = env->NewByteArray(obj->dataSize());

jbyte *nArray = env->GetByteArrayElements(jArray, NULL);

memcpy(nArray, obj->data(), obj->dataSize());

env->ReleaseByteArrayElements(jArray, nArray, 0);

env->CallStaticVoidMethod(mClass, fields.post_event, mObject, msg, ext1, ext2, jArray);

env->DeleteLocalRef(jArray);

}

else

env->CallStaticVoidMethod(mClass, fields.post_event, mObject, msg, ext1, ext2, NULL);

}

The native_init JNI implementation shown earlier initialized fields.post_event to refer to postEventFromNative in the Java-layer MediaPlayer class:

private static void postEventFromNative(Object mediaplayer_ref, int what, int arg1, int arg2, Object obj)

{

MediaPlayer mp = (MediaPlayer)((WeakReference)mediaplayer_ref).get();

Message m = mp.mEventHandler.obtainMessage(what, arg1, arg2, obj);

mp.mEventHandler.sendMessage(m);

}

The event is finally handled by the EventHandler.
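The weak-reference hand-off described above can be modeled outside Android. The following is a minimal sketch, not the framework code: Player, Event and the free function postEventFromNative are hypothetical stand-ins that use std::weak_ptr instead of a Java WeakReference, and the check for an already-destroyed player is an assumption of the sketch (the excerpt above does not show one).

```cpp
#include <functional>
#include <memory>
#include <queue>

// Hypothetical stand-in for a message posted to the Java-side EventHandler.
struct Event {
    int what;
    int arg1;
    int arg2;
};

// Hypothetical stand-in for the Java MediaPlayer together with its handler queue.
class Player {
public:
    // Queue an event, in the role of mEventHandler.sendMessage().
    void enqueue(const Event& e) { pending_.push(e); }

    // Drain the queue on the "handler thread"; returns how many events were handled.
    int dispatch(const std::function<void(const Event&)>& handler) {
        int handled = 0;
        while (!pending_.empty()) {
            handler(pending_.front());
            pending_.pop();
            ++handled;
        }
        return handled;
    }

private:
    std::queue<Event> pending_;
};

// The native side holds only a weak reference, so an event that arrives after the
// player has been destroyed is dropped instead of touching a dead object.
bool postEventFromNative(const std::weak_ptr<Player>& ref, int what, int arg1, int arg2) {
    std::shared_ptr<Player> player = ref.lock();
    if (!player) {
        return false;  // player is gone; nothing to notify
    }
    player->enqueue(Event{what, arg1, arg2});
    return true;
}
```

The point of the design is that the listener never keeps the player alive: a late callback from the playback engine turns into a no-op once the application has let go of the player.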

Now let's look at the native MediaPlayer class constructed in the JNI layer; it is declared in frameworks/base/include/media/mediaplayer.h:

class MediaPlayer : public BnMediaPlayerClient, public virtual IMediaDeathNotifier

{

public:

MediaPlayer();

~MediaPlayer();

void died();

void disconnect();

status_t setDataSource(const char* url, const KeyedVector<String8, String8> *headers);

...

status_t setVideoSurfaceTexture(const sp<ISurfaceTexture>& surfaceTexture);

status_t setListener(const sp<MediaPlayerListener>& listener);

status_t prepare();

status_t start();

status_t stop();

status_t pause();

...

void notify(int msg, int ext1, int ext2, const Parcel *obj = NULL);

static sp<IMemory> decode(const char* url, uint32_t *pSampleRate, int *pNumChannels, int *pFormat);

status_t setAudioSessionId(int sessionId);

....

private:

sp<IMediaPlayer> mPlayer; // client-side proxy (effectively a BpMediaPlayer) for MediaPlayerService's inner class Client

thread_id_t mLockThreadId;

sp<MediaPlayerListener> mListener;

....

};

After the MediaPlayer object is created, its mListener member is set to listener, and the object is then stored in the Java-layer object:

static sp<MediaPlayer> setMediaPlayer(JNIEnv* env, jobject thiz, const sp<MediaPlayer>& player)

{

Mutex::Autolock l(sLock);

sp<MediaPlayer> old = (MediaPlayer*)env->GetIntField(thiz, fields.context);

if(player.get())

player->incStrong(thiz); // take a strong reference on the new player

if(old != 0)

old->decStrong(thiz); // drop the strong reference on the native MediaPlayer previously stored in the Java object

// store the new player in mNativeContext, the Java field that fields.context refers to

env->SetIntField(thiz, fields.context, (int)player.get());

return old;

}
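The swap pattern in setMediaPlayer() (reference the new object, release the old one, store the raw pointer in an integer field) can be tried in isolation. This is a minimal sketch under stated assumptions: RefCounted, JavaPeer and swapNativeContext are hypothetical names, the counter is a plain int rather than Android's RefBase, and objects are not deleted when the count reaches zero. It uses std::intptr_t for the stored pointer, whereas the original stores it in a Java int, which only holds a pointer on 32-bit builds.

```cpp
#include <cassert>
#include <cstdint>

// Minimal intrusive reference count, loosely modeled on incStrong()/decStrong().
class RefCounted {
public:
    void incStrong() { ++count_; }

    // Returns true when the last reference was released.
    bool decStrong() {
        assert(count_ > 0);
        return --count_ == 0;
    }

    int strongCount() const { return count_; }

private:
    int count_ = 0;
};

// Hypothetical stand-in for the Java object's mNativeContext field.
struct JavaPeer {
    std::intptr_t nativeContext = 0;
};

// Store `player` in the peer and return the previous pointer. The new object gains
// one reference on behalf of the peer; the old one gives its reference up.
RefCounted* swapNativeContext(JavaPeer& peer, RefCounted* player) {
    RefCounted* old = reinterpret_cast<RefCounted*>(peer.nativeContext);
    if (player != nullptr) {
        player->incStrong();
    }
    if (old != nullptr) {
        old->decStrong();  // in this sketch the caller still owns the storage
    }
    peer.nativeContext = reinterpret_cast<std::intptr_t>(player);
    return old;
}
```

Taking the new reference before releasing the old one keeps the object alive even if the same pointer is stored twice in a row.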

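(3) Setting player parameters

That is everything Android's media stack does when Java code executes MediaPlayer mp = new MediaPlayer(). Next come mp.reset() and mp.setAudioStreamType(AudioManager.STREAM_MUSIC). Both are simple and go straight to JNI functions, so we jump directly to the JNI code: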

static void android_media_MediaPlayer_reset(JNIEnv *env, jobject thiz)

{

sp<MediaPlayer> mp = getMediaPlayer(env, thiz);

process_media_player_call(env, thiz, mp->reset(), NULL, NULL);

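}

This calls reset() on the C++ MediaPlayer, which resets the player state, for example setting mCurrentState = MEDIA_PLAYER_IDLE and setting the mPlayer member to 0. (sp<IMediaPlayer> mPlayer is the client-side Bp proxy for IMediaPlayer; the object behind it is MediaPlayerService's inner class Client.)

status_t MediaPlayer::setAudioStreamType(int type) sets the mStreamType member according to the player's current state. If the MediaPlayer has already called prepare() and entered the MEDIA_PLAYER_PREPARED state, the stream type can no longer be changed.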
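The state check can be pictured with a small model. This is a sketch only: State, Status and StreamTypeHolder are hypothetical names, and treating the started state the same way as the prepared state is an assumption of the sketch, not something the text above states. The default value 3 is the value of AudioManager.STREAM_MUSIC.

```cpp
// Simplified player states; the real class tracks more of them.
enum class State { Idle, Initialized, Prepared, Started };

enum class Status { Ok, InvalidOperation };

class StreamTypeHolder {
public:
    void setState(State s) { state_ = s; }

    // Accept the new stream type only while the player has not been prepared yet.
    Status setAudioStreamType(int type) {
        if (state_ == State::Prepared || state_ == State::Started) {
            return Status::InvalidOperation;  // too late: keep the old value
        }
        streamType_ = type;
        return Status::Ok;
    }

    int streamType() const { return streamType_; }

private:
    State state_ = State::Idle;
    int streamType_ = 3;  // AudioManager.STREAM_MUSIC
};
```

For an application this means setAudioStreamType() belongs before prepare(), which is the order used in the APK example at the top of this article.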

Next, look at mp.setDisplay(), which sets the player's display output.

public void setDisplay(SurfaceHolder sh)

{

mSurfaceHolder = sh;

Surface surface;

surface = sh.getSurface();

_setVideoSurface(surface); // call the JNI function

updateSurfaceScreenOn();

}

SurfaceHolder is actually an interface that a concrete class must implement; it is defined in frameworks/base/core/java/android/view/SurfaceHolder.java.

Its doc comment reads: Abstract interface to someone holding a display surface. Allows you to control the surface size and format, edit the pixels in the surface, and monitor changes to the surface.

So it is an abstract interface that wraps a Surface and is mainly used to control it: changing its size, format, pixels, and so on.

_setVideoSurface() is implemented in the JNI layer as:

static void setVideoSurface(JNIEnv* env, jobject thiz, jobject jsurface, jboolean mediaPlayerMustBeAlive = true)

{

sp<MediaPlayer> mp = getMediaPlayer(env, thiz);

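decVideoSurfaceRef(env, thiz); // release the JNI layer's reference on the previous ISurfaceTexture, which is stored in the Java field mNativeSurfaceTexture

// Surface is one of the more complex modules in Android and will be analyzed later; for now it is enough to treat it as a display area.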

sp<ISurfaceTexture> new_st;

sp<Surface> surface(Surface_getSurface(env, jsurface));

new_st = surface->getSurfaceTexture();

new_st->incStrong(thiz);

env->SetIntField(thiz, fields.surface_texture, (int)new_st.get()); // store the new native object in the Java field mNativeSurfaceTexture

mp->setVideoSurfaceTexture(new_st);

}

(4) Setting the data source: setDataSource

setDataSource() sets the data source. The corresponding JNI function is:

static void android_media_MediaPlayer_setDataSource(JNIEnv *env, jobject thiz, jstring path)

{

android_media_MediaPlayer_setDataSourceAndHeaders(env, thiz, path, NULL, NULL);

}

This ends up calling mp->setDataSource(path, NULL) on the native MediaPlayer:

status_t MediaPlayer::setDataSource(const char* url, const KeyedVector<String8, String8>* headers)

{

const sp<IMediaPlayerService>& service(getMediaPlayerService());

sp<IMediaPlayer> player(service->create(getpid(), this, mAudioSessionId));

player->setDataSource(url, headers);

return attachNewPlayer(player);

}

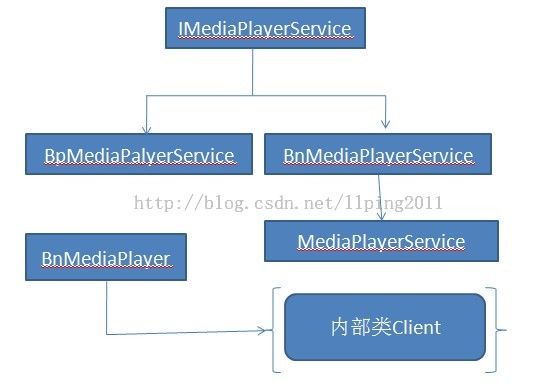

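Here getMediaPlayerService(), a static function of MediaPlayer's base class IMediaDeathNotifier, obtains BpMediaPlayerService, the proxy for MediaPlayerService. The code then calls IMediaPlayerService's create() function over Binder, passing the current process's PID, the current MediaPlayer object, and the mAudioSessionId obtained earlier. The call returns an IMediaPlayer whose concrete type is MediaPlayerService's inner class Client.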

sp<IMediaPlayerService> IMediaDeathNotifier::sMediaPlayerService;

const sp<IMediaPlayerService>& IMediaDeathNotifier::getMediaPlayerService()

{

if(sMediaPlayerService.get() == 0)

{

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> binder;

do {

binder = sm->getService(String16("media.player"));

if(binder != 0)

break;

usleep(500000); // 0.5 s

} while(true);

if(sDeathNotifier == NULL)

sDeathNotifier = new DeathNotifier();

binder->linkToDeath(sDeathNotifier);

sMediaPlayerService = interface_cast<IMediaPlayerService>(binder);

}

return sMediaPlayerService;

}
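The lookup loop in getMediaPlayerService() is a plain poll-and-sleep retry. A minimal sketch of the same idea follows, with assumptions stated up front: Service, waitForService and the lookup callback are hypothetical, and the maxAttempts bound is an addition of the sketch (the loop above retries forever with a 0.5 s sleep).

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <memory>
#include <thread>

// Hypothetical stand-in for the service handle returned by the service manager.
struct Service {
    int id;
};

// Call `lookup` until it returns a non-null service, sleeping between attempts.
// Returns nullptr if the service did not appear within `maxAttempts` tries.
std::shared_ptr<Service> waitForService(
        const std::function<std::shared_ptr<Service>()>& lookup,
        std::chrono::milliseconds delay,
        int maxAttempts) {
    assert(maxAttempts > 0);
    for (int attempt = 0; attempt < maxAttempts; ++attempt) {
        if (std::shared_ptr<Service> service = lookup()) {
            return service;  // the service is registered
        }
        std::this_thread::sleep_for(delay);  // not registered yet; wait and retry
    }
    return nullptr;
}
```

A bounded retry lets the caller report an error if the service never registers, while the unbounded loop in the original simply blocks the calling thread until media.player becomes available.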

The call service->create(getpid(), this, mAudioSessionId) goes through Binder and lands in MediaPlayerService::create().

@frameworks/base/media/libmediaplayerservice/MediaPlayerService.cpp

class MediaPlayerService : public BnMediaPlayerService

{

class Client;

class AudioOutput : public MediaPlayerBase::AudioSink

{

public:

AudioOutput(int sessionId);

virtual ~AudioOutput();

virtual status_t open(uint32_t sampleRate, int channelCount, int format, int bufferCount, AudioCallback cb, void *cookie);

virtual void start();

virtual ssize_t write(const void* buffer, size_t size);

...

private:

AudioTrack* mTrack;

AudioCallback mCallback;

...

};

class AudioCache : public MediaPlayerBase::AudioSink

{

...

};

public:

static void instantiate(); // start the MediaPlayerService

virtual sp<IMediaPlayer> create(pid_t pid, const sp<IMediaPlayerClient>& client, int audioSessionId);

virtual sp<IOMX> getOMX();

...

private:

MediaPlayerService();

virtual ~MediaPlayerService();

SortedVector< wp<Client> > mClients;

SortedVector< wp<MediaRecorderClient> > mMediaRecorderClients;

sp<IOMX> mOMX;

// the inner class Client

class Client : public BnMediaPlayer

{

public:

virtual status_t setVideoSurfaceTexture(const sp<ISurfaceTexture>& surfaceTexture);

virtual status_t prepareAsync();

virtual status_t start();

...

sp<MediaPlayerBase> createPlayer(player_type playerType);

virtual status_t setDataSource(const char* url, ...);

static void notify(void* cookie, int msg, int ext1, int ext2, const Parcel *obj);

private:

friend class MediaPlayerService;

Client(const sp<MediaPlayerService>& service, pid_t pid, int32_t connId, const sp<IMediaPlayerClient>& client,

int audioSessionId, uid_t uid);

sp<MediaPlayerBase> mPlayer;

sp<MediaPlayerService> mService;

sp<IMediaPlayerClient> mClient;

};

};

Now look at MediaPlayerService's create() function:

sp<IMediaPlayer> MediaPlayerService::create(pid_t pid, const sp<IMediaPlayerClient>& client, int audioSessionId)

{

int32_t connId = android_atomic_inc(&mNextConnId);

// Arguments: this MediaPlayerService, the client process's pid, the connection id, a reference to the client's MediaPlayer, audioSessionId, and the caller's uid

sp<Client> c = new Client(this, pid, connId, client, audioSessionId, IPCThreadState::self()->getCallingUid());

wp<Client> w = c; // the Client constructor just assigns these values to member variables

mClients.add(w);

return c;

}

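To summarize: when Java calls setDataSource(), the native MediaPlayer first obtains BpMediaPlayerService, the proxy for MediaPlayerService, through Binder and calls its create() function. MediaPlayerService handles the call and returns an anonymous Binder object, Client, typed as IMediaPlayer, which is stored in the MediaPlayer member sp<IMediaPlayer> mPlayer. From then on we use mPlayer directly to call setDataSource, prepare, start, stop, and so on.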
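The bookkeeping inside create() (hand a strong reference to the caller, keep only a weak one in the service) can be sketched without Binder. Everything below is hypothetical: Session and PlayerService are stand-ins for Client and MediaPlayerService, std::shared_ptr and std::weak_ptr replace sp<> and wp<>, and no inter-process call is involved.

```cpp
#include <memory>
#include <vector>

// Hypothetical stand-in for MediaPlayerService::Client.
struct Session {
    int connId;
    int pid;
};

// Hypothetical stand-in for MediaPlayerService.
class PlayerService {
public:
    // Create a session for the calling process and remember it weakly, so the
    // registry alone never keeps a session alive.
    std::shared_ptr<Session> create(int pid) {
        std::shared_ptr<Session> session =
                std::make_shared<Session>(Session{nextConnId_++, pid});
        clients_.push_back(session);  // stored as std::weak_ptr
        return session;
    }

    // Count the sessions that are still referenced by some caller.
    int liveClients() const {
        int live = 0;
        for (const std::weak_ptr<Session>& client : clients_) {
            if (!client.expired()) {
                ++live;
            }
        }
        return live;
    }

private:
    int nextConnId_ = 1;
    std::vector<std::weak_ptr<Session>> clients_;
};
```

Because the registry holds weak references, a session disappears as soon as the player that owns it drops its reference; the service can still enumerate whatever is alive.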

Next we jump straight into MediaPlayerService's inner class Client and look at setDataSource():

status_t MediaPlayerService::Client::setDataSource(const char* url, const KeyedVector<String8, String8> *headers)

{

if(strncmp(url, "http://", 7) == 0 || strncmp(url, "https://", 8)==0 || strncmp(url, "rtsp://", 7) == 0)

{

checkPermission("android.permission.INTERNET"); // check for the INTERNET permission

}

if(strncmp(url, "content://", 10) == 0)

{

String16 url16(url);

int fd = android::openContentProviderFile(url16);

setDataSource(fd, 0, 0x7ffffffffLL);

close(fd);

return mStatus;

} else {

player_type playerType = getPlayerType(url); // choose the player type from the URL

sp<MediaPlayerBase> p = createPlayer(playerType); // create a player of that type

// here we follow Android's StagefrightPlayer

if(!p->hardwareOutput()) {

mAudioOutput = new AudioOutput(mAudioSessionId);

static_cast<MediaPlayerInterface*>(p.get())->setAudioSink(mAudioOutput);

}

mStatus = p->setDataSource(url, headers);

mPlayer = p;

return mStatus;

}

}
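The URL checks at the top of Client::setDataSource() are simple prefix tests. A small sketch of the same classification follows; hasPrefix, needsInternetPermission and isContentUri are hypothetical helper names, and std::strlen is used so that the prefix lengths (7, 8, 7 and 10 in the code above) do not have to be counted by hand.

```cpp
#include <cassert>
#include <cstring>

// True if `url` starts with `prefix`.
bool hasPrefix(const char* url, const char* prefix) {
    assert(url != nullptr && prefix != nullptr);
    return std::strncmp(url, prefix, std::strlen(prefix)) == 0;
}

// Network schemes: the caller must hold the INTERNET permission for these.
bool needsInternetPermission(const char* url) {
    return hasPrefix(url, "http://") || hasPrefix(url, "https://") ||
           hasPrefix(url, "rtsp://");
}

// content:// URIs are opened through a content provider and played from a file descriptor.
bool isContentUri(const char* url) {
    return hasPrefix(url, "content://");
}
```

A local path such as /mnt/sdcard/test.ts matches neither test, so it falls through to the branch that picks a player type from the URL.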

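Reading the code shows that StagefrightPlayer is really a wrapper around AwesomePlayer: almost every StagefrightPlayer method is implemented by calling AwesomePlayer. AwesomePlayer is more complex. Detecting the audio and video stream formats, finding and opening the matching decoders, and buffering audio and video data for the decoders all happen in this class, and playback is driven mainly by several queued events such as mVideoEvent, mStreamDoneEvent, mBufferingEvent and mAsyncPrepareEvent.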