ConjugateGradient and LBFGS examples based on jblas, a linear algebra library for Java

Author: 金良, CSDN blog: http://blog.csdn.net/u012176591

## 1. About jblas

jblas is a fast linear algebra library for Java. jblas is based on BLAS and LAPACK, the de-facto industry standard for matrix computations, and uses state-of-the-art implementations like ATLAS for all its computational routines, making jBLAS very fast.

Homepage: http://jblas.org/

API documentation: http://jblas.org/javadoc/index.html

To use jblas in a Java project, all you need to do is add its jar file to the project's build path.

Below is its introductory booklet:

jblas - Fast matrix computations for Java
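The conjugate gradient example in the next section relies on jblas for every vector operation. To make the iteration itself easy to follow, here is a minimal, dependency-free sketch of the same method on plain `double[]` arrays. The class name `CgSketch`, the `maxIter` safety cap and the small test system are my own illustrative assumptions, not part of jblas or of the original example.

```java
/** Dependency-free conjugate gradient sketch (hypothetical class name). */
final class CgSketch {

    static double dot(double[] u, double[] v) {
        double s = 0;
        for (int i = 0; i < u.length; i++) s += u[i] * v[i];
        return s;
    }

    /** Dense matrix-vector product A v for a row-major double[][]. */
    static double[] matVec(double[][] a, double[] v) {
        double[] out = new double[a.length];
        for (int i = 0; i < a.length; i++) out[i] = dot(a[i], v);
        return out;
    }

    /**
     * Solves A x = b for a symmetric positive-definite A, starting from x = 0.
     * Stops when ||r|| < tol or after maxIter iterations (a safety cap).
     */
    static double[] solve(double[][] a, double[] b, double tol, int maxIter) {
        int n = b.length;
        double[] x = new double[n];
        double[] r = b.clone();                 // r = b - A*0
        double[] p = r.clone();                 // first search direction
        double rr = dot(r, r);
        for (int k = 0; k < maxIter && Math.sqrt(rr) >= tol; k++) {
            double[] ap = matVec(a, p);
            double alpha = rr / dot(p, ap);     // step length along p
            for (int i = 0; i < n; i++) {
                x[i] += alpha * p[i];           // x = x + alpha p
                r[i] -= alpha * ap[i];          // r = r - alpha Ap
            }
            double rrNew = dot(r, r);
            double beta = rrNew / rr;           // same ratio as r2.dot(r2) / r.dot(r)
            for (int i = 0; i < n; i++) p[i] = r[i] + beta * p[i];
            rr = rrNew;
        }
        return x;
    }

    public static void main(String[] args) {
        double[][] a = {{4, 1}, {1, 3}};
        double[] b = {1, 2};
        double[] x = solve(a, b, 1e-10, 100);
        System.out.println("x = [" + x[0] + ", " + x[1] + "]");
    }
}
```

The exact solution of this 2x2 system is x = (1/11, 7/11); in exact arithmetic CG reaches it in at most n = 2 iterations, so the printed values should agree with it up to rounding.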

## 2. Conjugate gradient example

```java
import org.jblas.*;
import static org.jblas.DoubleMatrix.*;
import static org.jblas.MatrixFunctions.*;

/**
 * Example of how to implement conjugate gradients with jblas.
 *
 * Again, the main objective of this code is to show how to use
 * jblas, not how to package/modularize numerical code in Java ;)
 *
 * Closely follows http://en.wikipedia.org/wiki/Conjugate_gradient_method
 */
public class CG {

    public static void main(String[] args) {
        new CG().runExample();
    }

    void runExample() {
        int n = 100;
        double w = 1;          // kernel width
        double lambda = 1e-6;  // ridge term; also passed to cg() as the stopping threshold
        DoubleMatrix[] ds = sincDataset(n, 0.1);
        //ds[0].print();
        //ds[1].print();
        // A = K + lambda * I is symmetric positive definite, as CG requires.
        DoubleMatrix A = gaussianKernel(w, ds[0], ds[0]).add(eye(n).mul(lambda));
        DoubleMatrix x = zeros(n);  // initial guess x = 0
        DoubleMatrix b = ds[1];
        cg(A, b, x, lambda);
    }

    /** Solves A x = b in place; stops once the residual norm drops below thresh. */
    DoubleMatrix cg(DoubleMatrix A, DoubleMatrix b, DoubleMatrix x, double thresh) {
        int n = x.length;
        DoubleMatrix r = b.sub(A.mmul(x));  // residual r = b - A x
        DoubleMatrix p = r.dup();           // first search direction
        double alpha = 0, beta = 0;
        DoubleMatrix r2 = zeros(n), Ap = zeros(n);
        while (true) {
            A.mmuli(p, Ap);                 // Ap = A p
            alpha = r.dot(r) / p.dot(Ap);   // step length along p
            x.addi(p.mul(alpha));           // x = x + alpha p
            r.subi(Ap.mul(alpha), r2);      // r2 = r - alpha Ap (new residual)
            double error = r2.norm2();
            System.out.printf("Residual error = %f\n", error);
            if (error < thresh)
                break;
            beta = r2.dot(r2) / r.dot(r);
            r2.addi(p.mul(beta), p);        // p = r2 + beta p (new search direction)
            // Swap r and r2 so that r holds the newest residual.
            DoubleMatrix temp = r;
            r = r2;
            r2 = temp;
        }
        return x;
    }

    /** Computes the Gaussian kernel exp(-||x - z||^2 / w) for the rows of X and Z. */
    public static DoubleMatrix gaussianKernel(double w, DoubleMatrix X, DoubleMatrix Z) {
        // pairwiseSquaredDistances compares columns, hence the transposes.
        DoubleMatrix d = Geometry.pairwiseSquaredDistances(X.transpose(), Z.transpose());
        return exp(d.div(w).neg());
    }

    /**
     * The sinc function (safe version).
     *
     * Entries where x == 0 get 1 added to both numerator and denominator,
     * so sinc(0) = (sin(0) + 1) / (0 + 1) = 1 and no division by zero occurs.
     */
    DoubleMatrix safeSinc(DoubleMatrix x) {
        DoubleMatrix isZero = x.eq(0);  // 1.0 where x == 0, else 0.0
        return sin(x).add(isZero).div(x.add(isZero));
    }

    /**
     * Creates a sinc data set:
     * X ~ uniform on [-4, 4], Y = sinc(X) + noise * (standard Gaussian noise).
     */
    DoubleMatrix[] sincDataset(int n, double noise) {
        DoubleMatrix X = rand(n).mul(8).sub(4);
        DoubleMatrix Y = safeSinc(X).add(randn(n).mul(noise));
        return new DoubleMatrix[] {X, Y};
    }
}
```

## 3. Kernel ridge regression example
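The jblas listing below builds the kernel matrix with `Geometry.pairwiseSquaredDistances` and solves (K + lambda I) alpha = Y with `Solve.solveSymmetric`. The following dependency-free sketch spells out the same three steps (kernel, solve, predict) in plain Java for one-dimensional inputs. It swaps in a hand-written Cholesky factorization for the jblas solver; the class name `KrrSketch`, the fixed random seed and the values w = 1, lambda = 0.1 are illustrative assumptions.

```java
import java.util.Random;

/** Dependency-free kernel ridge regression sketch (hypothetical class name). */
final class KrrSketch {

    /** sinc(x) = sin(x) / x, using the limit value 1 at x = 0. */
    static double safeSinc(double x) {
        return x == 0 ? 1.0 : Math.sin(x) / x;
    }

    /** Same kernel as the jblas listing: k(x, z) = exp(-(x - z)^2 / w). */
    static double kernel(double w, double x, double z) {
        double d = x - z;
        return Math.exp(-d * d / w);
    }

    /** Solves (K + lambda I) alpha = y via a Cholesky factorization L L^T (swapped-in solver). */
    static double[] learn(double[] x, double[] y, double w, double lambda) {
        int n = x.length;
        double[][] l = new double[n][n];
        for (int i = 0; i < n; i++) {
            for (int j = 0; j <= i; j++) {
                double s = kernel(w, x[i], x[j]) + (i == j ? lambda : 0.0);
                for (int k = 0; k < j; k++) s -= l[i][k] * l[j][k];
                l[i][j] = (i == j) ? Math.sqrt(s) : s / l[j][j];
            }
        }
        double[] z = new double[n];              // forward substitution: L z = y
        for (int i = 0; i < n; i++) {
            double s = y[i];
            for (int k = 0; k < i; k++) s -= l[i][k] * z[k];
            z[i] = s / l[i][i];
        }
        double[] alpha = new double[n];          // back substitution: L^T alpha = z
        for (int i = n - 1; i >= 0; i--) {
            double s = z[i];
            for (int k = i + 1; k < n; k++) s -= l[k][i] * alpha[k];
            alpha[i] = s / l[i][i];
        }
        return alpha;
    }

    /** f(xe) = sum_i alpha_i k(xe, x_i), i.e. one row of K(XE, X) * alpha. */
    static double predict(double xe, double[] x, double w, double[] alpha) {
        double f = 0;
        for (int i = 0; i < x.length; i++) f += alpha[i] * kernel(w, xe, x[i]);
        return f;
    }

    public static void main(String[] args) {
        int n = 200;
        double w = 1.0, lambda = 0.1;
        Random rnd = new Random(42);             // fixed seed so runs are repeatable
        double[] x = new double[n], y = new double[n];
        for (int i = 0; i < n; i++) {
            x[i] = rnd.nextDouble() * 8 - 4;                   // uniform on [-4, 4)
            y[i] = safeSinc(x[i]) + 0.1 * rnd.nextGaussian();  // noisy sinc target
        }
        double[] alpha = learn(x, y, w, lambda);
        double sse = 0;
        for (int i = 0; i < n; i++) {
            double e = predict(x[i], x, w, alpha) - y[i];
            sse += e * e;
        }
        System.out.printf("Training MSE = %.5f%n", sse / n);
    }
}
```

Because the Gaussian kernel matrix is positive semidefinite, adding lambda > 0 to its diagonal makes it strictly positive definite, which is what allows a Cholesky factorization here.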

import org.jblas.*;

import static org.jblas.DoubleMatrix.*;

import static org.jblas.MatrixFunctions.*;

/** * A simple example which computes kernel ridge regression using jblas. * * This code is by no means meant to be an example of how you should do * machine learning in Java, as there is absolutely no encapsulation. It * is rather just an example of how to use jblas to perform different kinds * of computations. */

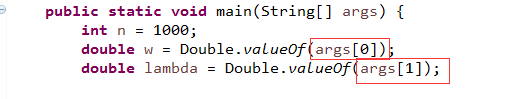

public class KRR {

public static void main(String[] args) {

int n = 1000;

double w = Double.valueOf(args[0]);

double lambda = Double.valueOf(args[1]);

new KRR().run(n, w, lambda);

}

void run(int n, double w, double lambda) {

DoubleMatrix[] ds = sincDataset(n, 0.1);

DoubleMatrix alpha = learnKRR(ds[0], ds[1], w, lambda);

DoubleMatrix Yh = predictKRR(ds[0], ds[0], w, alpha);

DoubleMatrix XE = rand(1000).mul(8).sub(4);

System.out.printf("Mean squared error = %.5f\n", mse(Yh, ds[1]));

}

/** * The sinc function. * * This version is not save since it divides by zero if one * of the entries of x are zero. */

DoubleMatrix sinc(DoubleMatrix x) {

return sin(x).div(x);

}

/** * The sinc function (save version). * * This version is save, as it replaces zero entries of x by 1. * Then, sinc(0) = sin(0) / 1 = 1. * */

DoubleMatrix safeSinc(DoubleMatrix x) {

return sin(x).div(x.add(x.eq(0)));

}

/** * Create a sinc data set. * * X ~ uniformly from -4..4 * Y ~ sinc(x) + noise * gaussian noise. */

DoubleMatrix[] sincDataset(int n, double noise) {

DoubleMatrix X = rand(n).mul(8).sub(4);

DoubleMatrix Y = safeSinc(X) .add( randn(n).mul(noise) );

return new DoubleMatrix[] {X, Y};

}

/** * Compute the alpha for Kernel Ridge Regression. * * Computes alpha = (K + lambda I)^-1 Y. */

DoubleMatrix learnKRR(DoubleMatrix X, DoubleMatrix Y,

double w, double lambda) {

int n = X.rows;

DoubleMatrix K = gaussianKernel(w, X, X);

K.addi(eye(n).muli(lambda));

DoubleMatrix alpha = Solve.solveSymmetric(K, Y);

return alpha;

}

/** * Compute the Gaussian kernel for the rows of X and Z, and kernel width w. */

DoubleMatrix gaussianKernel(double w, DoubleMatrix X, DoubleMatrix Z) {

DoubleMatrix d = Geometry.pairwiseSquaredDistances(X.transpose(), Z.transpose());

return exp(d.div(w).neg());

}

/** * Predict KRR on XE which has been trained on X, w, and alpha. * * In a real world application, you would put all the data from training * in a class of its own, of course! */

DoubleMatrix predictKRR(DoubleMatrix XE, DoubleMatrix X, double w, DoubleMatrix alpha) {

DoubleMatrix K = gaussianKernel(w, XE, X);

return K.mmul(alpha);

}

double mse(DoubleMatrix Y1, DoubleMatrix Y2) {

DoubleMatrix diff = Y1.sub(Y2);

return pow(diff, 2).mean();

}

}3.LBFGS的例子

The example code and the library jar can be downloaded from http://download.csdn.net/detail/u012176591/8660849
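The L-BFGS example itself is only available in the download above and is not reproduced here. As a rough, hypothetical illustration of what such an optimizer does, here is a minimal sketch of L-BFGS (the two-loop recursion plus a backtracking Armijo line search) in plain Java. The `Objective` interface, the class name `LbfgsSketch`, the memory size `m` and the tolerances are my own choices, not the API of the downloadable example or of jblas.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;
import java.util.Iterator;

/** Dependency-free L-BFGS sketch (hypothetical class, not the downloadable example). */
final class LbfgsSketch {

    /** A differentiable objective f: R^n -> R (hypothetical interface). */
    interface Objective {
        double value(double[] x);
        double[] gradient(double[] x);
    }

    static double dot(double[] u, double[] v) {
        double s = 0;
        for (int i = 0; i < u.length; i++) s += u[i] * v[i];
        return s;
    }

    /** Returns x + t * d as a new array. */
    static double[] move(double[] x, double t, double[] d) {
        double[] out = new double[x.length];
        for (int i = 0; i < x.length; i++) out[i] = x[i] + t * d[i];
        return out;
    }

    /** Minimizes f starting at x0, keeping at most m (s, y) pairs. */
    static double[] minimize(Objective f, double[] x0, int m, double tol, int maxIter) {
        final double c1 = 1e-4;                            // Armijo sufficient-decrease constant
        double[] x = x0.clone(), g = f.gradient(x);
        Deque<double[][]> hist = new ArrayDeque<>();       // entries {s, y}, newest first
        for (int iter = 0; iter < maxIter && Math.sqrt(dot(g, g)) >= tol; iter++) {
            // Two-loop recursion: q becomes H g, H being the implicit inverse-Hessian estimate.
            double[] q = g.clone(), a = new double[hist.size()];
            int i = 0;
            for (double[][] sy : hist) {                   // newest to oldest
                a[i] = dot(sy[0], q) / dot(sy[1], sy[0]);
                for (int j = 0; j < q.length; j++) q[j] -= a[i] * sy[1][j];
                i++;
            }
            if (!hist.isEmpty()) {                         // initial scaling gamma = s'y / y'y
                double[][] last = hist.peekFirst();
                double gamma = dot(last[0], last[1]) / dot(last[1], last[1]);
                for (int j = 0; j < q.length; j++) q[j] *= gamma;
            }
            Iterator<double[][]> it = hist.descendingIterator();   // oldest to newest
            for (i = hist.size() - 1; it.hasNext(); i--) {
                double[][] sy = it.next();
                double b = dot(sy[1], q) / dot(sy[1], sy[0]);
                for (int j = 0; j < q.length; j++) q[j] += sy[0][j] * (a[i] - b);
            }
            double[] d = move(new double[q.length], -1.0, q);      // search direction d = -H g
            // Backtracking line search on the Armijo condition.
            double fx = f.value(x), gd = dot(g, d), t = 1.0;
            double[] xNew = move(x, t, d);
            for (int halvings = 0; f.value(xNew) > fx + c1 * t * gd; halvings++) {
                if (halvings == 50) return x;              // no acceptable step found: stop
                t *= 0.5;
                xNew = move(x, t, d);
            }
            double[] gNew = f.gradient(xNew);
            double[] s = move(xNew, -1.0, x), y = move(gNew, -1.0, g);  // s = dx, y = dg
            if (dot(s, y) > 1e-12) {                       // keep only positive-curvature pairs
                hist.addFirst(new double[][] {s, y});
                if (hist.size() > m) hist.removeLast();
            }
            x = xNew;
            g = gNew;
        }
        return x;
    }

    /** f(x) = 0.5 x'Ax - b'x for symmetric positive-definite A; its minimizer solves Ax = b. */
    static Objective quadratic(double[][] a, double[] b) {
        return new Objective() {
            public double value(double[] x) {
                double v = 0;
                for (int i = 0; i < x.length; i++) v += 0.5 * x[i] * dot(a[i], x) - b[i] * x[i];
                return v;
            }
            public double[] gradient(double[] x) {
                double[] g = new double[x.length];
                for (int i = 0; i < x.length; i++) g[i] = dot(a[i], x) - b[i];
                return g;
            }
        };
    }

    public static void main(String[] args) {
        double[] x = minimize(quadratic(new double[][] {{4, 1}, {1, 3}}, new double[] {1, 2}),
                new double[] {0, 0}, 5, 1e-8, 100);
        System.out.println(Arrays.toString(x));  // should be close to [1/11, 7/11]
    }
}
```

Minimizing 0.5 x'Ax - b'x is equivalent to solving Ax = b, so the result can be compared with the conjugate gradient solution of the same system. A production implementation would usually use a line search that also enforces the Wolfe curvature condition; this sketch instead simply skips (s, y) pairs whose s'y is not positive.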

————————————————————

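## Installing the Javadoc for the jblas jar

Attaching the Javadoc to the jblas jar lets Eclipse show the parameters and usage of methods and classes right in the editor.

First, extract the Javadoc archive to a folder of your choice.

In the Package Explorer view, select the jar, right-click it and choose Properties; on the Javadoc Location page of the dialog, point the Javadoc location path to the extracted folder.

From then on, hovering the mouse over a class or method name in your code automatically pops up its documentation.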

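## Setting command-line arguments in Eclipse

The KRR program needs two arguments at run time (args[0] is the kernel width w and args[1] is the regularization parameter lambda), and they have to be set before the program is run.

To set them, right-click the Java file and choose Run As > Run Configurations... to open the launch configuration dialog.

On the Arguments tab, enter two numbers in the Program arguments field, separated by a space, one for each parameter.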
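If you would rather not configure the arguments in Eclipse every time, one option is to fall back to default values when arguments are missing. The sketch below is hypothetical: the class `ArgsSketch` and its defaults (w = 1 and lambda = 1e-6, borrowed from the conjugate gradient example) are assumptions and not part of the original KRR program.

```java
/** Hypothetical helper: reads w and lambda from args, falling back to defaults. */
final class ArgsSketch {
    static final double DEFAULT_W = 1.0;        // assumption: width used in the CG example
    static final double DEFAULT_LAMBDA = 1e-6;  // assumption: lambda used in the CG example

    /** Returns {w, lambda}; a missing argument is replaced by its default. */
    static double[] parse(String[] args) {
        double w = args.length > 0 ? Double.parseDouble(args[0]) : DEFAULT_W;
        double lambda = args.length > 1 ? Double.parseDouble(args[1]) : DEFAULT_LAMBDA;
        return new double[] {w, lambda};
    }

    public static void main(String[] args) {
        double[] p = parse(args);
        System.out.println("w = " + p[0] + ", lambda = " + p[1]);
    }
}
```

With Program arguments set to `0.5 0.01`, `parse` returns {0.5, 0.01}; with no arguments it returns the defaults instead of failing with an `ArrayIndexOutOfBoundsException`.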