live555 Study Notes 2 (repost)

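First, a correction to one concept:

Earlier I said that a ServerMediaSession represents a stream, which is not accurate. It represents the name of a piece of media on the server side, whereas saying that a ServerMediaSubsession represents a track is accurate. From here on, "stream" refers to a combination of objects through which data actually flows.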

Establishing RTP:

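Establishing RTP amounts to this: the client tells the server its RTP/RTCP port numbers, the server creates its own RTP/RTCP sockets, and then, on receiving a PLAY request, the server sends data to the client. It looks very simple.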
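
To make the first step concrete, here is a minimal sketch of how a server might pull the client's RTP/RTCP ports out of the Transport header of a SETUP request. The function parseClientPorts is hypothetical and is not the live555 parser; it also assumes that when only one port is given, RTCP uses the next port.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical helper (not part of live555): extracts the client's RTP and
// RTCP ports from a Transport header value such as
// "RTP/AVP;unicast;client_port=5000-5001".
// Returns false if no well-formed "client_port=" field is present.
bool parseClientPorts(const std::string& transport,
                      std::uint16_t& rtpPort, std::uint16_t& rtcpPort) {
  const std::string key = "client_port=";
  std::string::size_type pos = transport.find(key);
  if (pos == std::string::npos) return false;

  unsigned p1 = 0, p2 = 0;
  int n = std::sscanf(transport.c_str() + pos + key.size(), "%u-%u", &p1, &p2);
  if (n < 1 || p1 > 65535) return false;
  if (n == 1) p2 = p1 + 1;  // assumption: a single port means RTCP is the next one
  if (p2 > 65535) return false;

  rtpPort = static_cast<std::uint16_t>(p1);
  rtcpPort = static_cast<std::uint16_t>(p2);
  return true;
}
```

A caller would pass the header value and two output variables, then use the ports only if the function returns true. A request that asks for TCP interleaving carries no client_port field, so the function returns false for it.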

The connection is set up only when a SETUP request arrives, so let's look at the function that handles this command:

void RTSPServer::RTSPClientSession::handleCmd_SETUP(

char const* cseq,

char const* urlPreSuffix,

char const* urlSuffix,

char const* fullRequestStr)

{

// Normally, "urlPreSuffix" should be the session (stream) name,

// and "urlSuffix" should be the subsession (track) name.

// However (being "liberal in what we accept"), we also handle

// 'aggregate' SETUP requests (i.e., without a track name),

// in the special case where we have only a single track. I.e.,

// in this case, we also handle:

// "urlPreSuffix" is empty and "urlSuffix" is the session (stream) name, or

// "urlPreSuffix" concatenated with "urlSuffix" (with "/" inbetween)

// is the session (stream) name.

char const* streamName = urlPreSuffix; // in the normal case

char const* trackId = urlSuffix; // in the normal case

char* concatenatedStreamName = NULL; // in the normal case

do {

// First, make sure the specified stream name exists:

fOurServerMediaSession = fOurServer.lookupServerMediaSession(streamName);

if (fOurServerMediaSession == NULL) {

// Check for the special case (noted above), before we give up:

if (urlPreSuffix[0] == '\0') {

streamName = urlSuffix;

} else {

concatenatedStreamName = new char[strlen(urlPreSuffix)

+ strlen(urlSuffix) + 2]; // allow for the "/" and the trailing '\0'

sprintf(concatenatedStreamName, "%s/%s", urlPreSuffix,

urlSuffix);

streamName = concatenatedStreamName;

}

trackId = NULL;

// Check again:

fOurServerMediaSession = fOurServer.lookupServerMediaSession(streamName);

}

if (fOurServerMediaSession == NULL) {

handleCmd_notFound(cseq);

break;

}

fOurServerMediaSession->incrementReferenceCount();

// Allocate one stream state for every track in the stream

if (fStreamStates == NULL) {

// This is the first "SETUP" for this session. Set up our

// array of states for all of this session's subsessions (tracks):

ServerMediaSubsessionIterator iter(*fOurServerMediaSession);

for (fNumStreamStates = 0; iter.next() != NULL; ++fNumStreamStates) {

} // begin by counting the number of subsessions (tracks)

fStreamStates = new struct streamState[fNumStreamStates];

iter.reset();

ServerMediaSubsession* subsession;

for (unsigned i = 0; i < fNumStreamStates; ++i) {

subsession = iter.next();

fStreamStates[i].subsession = subsession;

fStreamStates[i].streamToken = NULL; // for now; it may be changed by the "getStreamParameters()" call that comes later

}

}

// Find the information for the track named in this request

// Look up information for the specified subsession (track):

ServerMediaSubsession* subsession = NULL;

unsigned streamNum;

if (trackId != NULL && trackId[0] != '\0') { // normal case

for (streamNum = 0; streamNum < fNumStreamStates; ++streamNum) {

subsession = fStreamStates[streamNum].subsession;

if (subsession != NULL && strcmp(trackId, subsession->trackId()) == 0)

break; // found it

}

if (streamNum >= fNumStreamStates) {

// The specified track id doesn't exist, so this request fails:

handleCmd_notFound(cseq);

break;

}

} else {

// Weird case: there was no track id in the URL.

// This works only if we have only one subsession:

if (fNumStreamStates != 1) {

handleCmd_bad(cseq);

break;

}

streamNum = 0;

subsession = fStreamStates[streamNum].subsession;

}

// ASSERT: subsession != NULL

// Parse the transport requirements in the RTSP request string

// Look for a "Transport:" header in the request string, to extract client parameters:

StreamingMode streamingMode;

char* streamingModeString = NULL; // set when RAW_UDP streaming is specified

char* clientsDestinationAddressStr;

u_int8_t clientsDestinationTTL;

portNumBits clientRTPPortNum, clientRTCPPortNum;

unsigned char rtpChannelId, rtcpChannelId;

parseTransportHeader(fullRequestStr, streamingMode, streamingModeString,

clientsDestinationAddressStr, clientsDestinationTTL,

clientRTPPortNum, clientRTCPPortNum, rtpChannelId,

rtcpChannelId);

if (streamingMode == RTP_TCP && rtpChannelId == 0xFF

|| streamingMode != RTP_TCP &&

fClientOutputSocket != fClientInputSocket) {

// An anomalous situation, caused by a buggy client. Either:

// 1/ TCP streaming was requested, but with no "interleaving=" fields. (QuickTime Player sometimes does this.), or

// 2/ TCP streaming was not requested, but we're doing RTSP-over-HTTP tunneling (which implies TCP streaming).

// In either case, we assume TCP streaming, and set the RTP and RTCP channel ids to proper values:

streamingMode = RTP_TCP;

rtpChannelId = fTCPStreamIdCount;

rtcpChannelId = fTCPStreamIdCount + 1;

}

fTCPStreamIdCount += 2;

Port clientRTPPort(clientRTPPortNum);

Port clientRTCPPort(clientRTCPPortNum);

// Next, check whether a "Range:" header is present in the request.

// This isn't legal, but some clients do this to combine "SETUP" and "PLAY":

double rangeStart = 0.0, rangeEnd = 0.0;

fStreamAfterSETUP = parseRangeHeader(fullRequestStr, rangeStart,

rangeEnd) || parsePlayNowHeader(fullRequestStr);

// Then, get server parameters from the 'subsession':

int tcpSocketNum = streamingMode == RTP_TCP ? fClientOutputSocket : -1;

netAddressBits destinationAddress = 0;

u_int8_t destinationTTL = 255;

#ifdef RTSP_ALLOW_CLIENT_DESTINATION_SETTING

if (clientsDestinationAddressStr != NULL) {

// Use the client-provided "destination" address.

// Note: This potentially allows the server to be used in denial-of-service

// attacks, so don't enable this code unless you're sure that clients are

// trusted.

destinationAddress = our_inet_addr(clientsDestinationAddressStr);

}

// Also use the client-provided TTL.

destinationTTL = clientsDestinationTTL;

#endif

delete[] clientsDestinationAddressStr;

Port serverRTPPort(0);

Port serverRTCPPort(0);

// Make sure that we transmit on the same interface that's used by

// the client (in case we're a multi-homed server):

struct sockaddr_in sourceAddr;

SOCKLEN_T namelen = sizeof sourceAddr;

getsockname(fClientInputSocket, (struct sockaddr*) &sourceAddr, &namelen);

netAddressBits origSendingInterfaceAddr = SendingInterfaceAddr;

netAddressBits origReceivingInterfaceAddr = ReceivingInterfaceAddr;

// NOTE: The following might not work properly, so we ifdef it out for now:

#ifdef HACK_FOR_MULTIHOMED_SERVERS

ReceivingInterfaceAddr = SendingInterfaceAddr = sourceAddr.sin_addr.s_addr;

#endif

// Get the RTP connection parameters. This call creates the server-side RTP and RTCP sockets;

// on return, fStreamStates[streamNum].streamToken indicates that the data stream has been set up

subsession->getStreamParameters(fOurSessionId,

fClientAddr.sin_addr.s_addr, clientRTPPort, clientRTCPPort,

tcpSocketNum, rtpChannelId, rtcpChannelId, destinationAddress,

destinationTTL, fIsMulticast, serverRTPPort, serverRTCPPort,

fStreamStates[streamNum].streamToken);

SendingInterfaceAddr = origSendingInterfaceAddr;

ReceivingInterfaceAddr = origReceivingInterfaceAddr;

// Build the RTSP response string

struct in_addr destinationAddr;

destinationAddr.s_addr = destinationAddress;

char* destAddrStr = strDup(our_inet_ntoa(destinationAddr));

char* sourceAddrStr = strDup(our_inet_ntoa(sourceAddr.sin_addr));

if (fIsMulticast) {

switch (streamingMode) {

case RTP_UDP:

snprintf(

(char*) fResponseBuffer,

sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\n"

"CSeq: %s\r\n"

"%s"

"Transport: RTP/AVP;multicast;destination=%s;source=%s;port=%d-%d;ttl=%d\r\n"

"Session: %08X\r\n\r\n", cseq, dateHeader(),

destAddrStr, sourceAddrStr, ntohs(serverRTPPort.num()),

ntohs(serverRTCPPort.num()), destinationTTL,

fOurSessionId);

break;

case RTP_TCP:

// multicast streams can't be sent via TCP

handleCmd_unsupportedTransport(cseq);

break;

case RAW_UDP:

snprintf(

(char*) fResponseBuffer,

sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\n"

"CSeq: %s\r\n"

"%s"

"Transport: %s;multicast;destination=%s;source=%s;port=%d;ttl=%d\r\n"

"Session: %08X\r\n\r\n", cseq, dateHeader(),

streamingModeString, destAddrStr, sourceAddrStr,

ntohs(serverRTPPort.num()), destinationTTL,

fOurSessionId);

break;

}

} else {

switch (streamingMode) {

case RTP_UDP: {

snprintf(

(char*) fResponseBuffer,

sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\n"

"CSeq: %s\r\n"

"%s"

"Transport: RTP/AVP;unicast;destination=%s;source=%s;client_port=%d-%d;server_port=%d-%d\r\n"

"Session: %08X\r\n\r\n", cseq, dateHeader(),

destAddrStr, sourceAddrStr, ntohs(clientRTPPort.num()),

ntohs(clientRTCPPort.num()), ntohs(serverRTPPort.num()),

ntohs(serverRTCPPort.num()), fOurSessionId);

break;

}

case RTP_TCP: {

snprintf(

(char*) fResponseBuffer,

sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\n"

"CSeq: %s\r\n"

"%s"

"Transport: RTP/AVP/TCP;unicast;destination=%s;source=%s;interleaved=%d-%d\r\n"

"Session: %08X\r\n\r\n", cseq, dateHeader(),

destAddrStr, sourceAddrStr, rtpChannelId, rtcpChannelId,

fOurSessionId);

break;

}

case RAW_UDP: {

snprintf(

(char*) fResponseBuffer,

sizeof fResponseBuffer,

"RTSP/1.0 200 OK\r\n"

"CSeq: %s\r\n"

"%s"

"Transport: %s;unicast;destination=%s;source=%s;client_port=%d;server_port=%d\r\n"

"Session: %08X\r\n\r\n", cseq, dateHeader(),

streamingModeString, destAddrStr, sourceAddrStr,

ntohs(clientRTPPort.num()), ntohs(serverRTPPort.num()),

fOurSessionId);

break;

}

}

}

delete[] destAddrStr;

delete[] sourceAddrStr;

delete[] streamingModeString;

} while (0);

delete[] concatenatedStreamName;

// After this function returns, the response string is sent immediately

}
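
The name-resolution logic at the top of this function can be isolated into a small sketch. Everything named here (ResolvedUrl, resolveSetupUrl, the set of known stream names) is hypothetical and only mirrors the fallback order of the listing: try urlPreSuffix as the stream name first, then treat the whole URL as a stream name with no track.

```cpp
#include <cassert>
#include <set>
#include <string>

// Result of resolving a SETUP URL. All names here are hypothetical.
struct ResolvedUrl {
  bool found;
  std::string streamName;
  std::string trackId;  // empty means "no track given" (aggregate SETUP)
};

ResolvedUrl resolveSetupUrl(const std::set<std::string>& knownStreams,
                            const std::string& urlPreSuffix,
                            const std::string& urlSuffix) {
  // Normal case: the URL has the form ".../<stream>/<track>".
  if (knownStreams.count(urlPreSuffix) > 0) {
    return {true, urlPreSuffix, urlSuffix};
  }
  // Fallback: no track in the URL, so the suffix belongs to the stream name.
  std::string candidate =
      urlPreSuffix.empty() ? urlSuffix : urlPreSuffix + "/" + urlSuffix;
  if (knownStreams.count(candidate) > 0) {
    return {true, candidate, ""};
  }
  return {false, "", ""};
}
```

With known streams "movie" and "live/cam1", the pair ("movie", "track1") resolves to the track "track1" of "movie", while ("live", "cam1") resolves to the stream "live/cam1" with an empty track id. In the empty-track case the real handler then only succeeds when the media has exactly one track.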

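live555 has two "stream states": the class StreamState and the struct streamState used here. The class StreamState is the streamToken, while struct streamState stores the mapping between a ServerMediaSubsession (that is, a track) and a class StreamState instance. The class StreamState represents a data stream that is actually flowing, from a source to a sink. A single RTP session between client and server involves two data streams: on the server, data flows from an XXXFileSource to an RTPSink; on the client, it flows from an RTPSource to an XXXFileSink. Establishing a data stream means connecting a source to a sink.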
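
The relationship between the two can be sketched with stand-in types. Track, FlowingStream, StreamSlot, makeSlots and findSlot are all hypothetical names chosen for illustration; they play the roles of ServerMediaSubsession, the class StreamState, struct streamState, and the count-then-fill and lookup steps of the SETUP handler.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Stand-in for ServerMediaSubsession: static, shareable track data.
struct Track {
  std::string trackId;
};

// Stand-in for the class StreamState: one flowing source-to-sink stream.
struct FlowingStream {
  int serverRtpPort;
};

// Stand-in for struct streamState: pairs a track with its opaque token.
struct StreamSlot {
  const Track* subsession;
  void* streamToken;  // stays null until the track has been set up
};

// Build one slot per track, as the first SETUP of a client session does.
// The slots point into "tracks", which must outlive them.
std::vector<StreamSlot> makeSlots(const std::vector<Track>& tracks) {
  std::vector<StreamSlot> slots;
  slots.reserve(tracks.size());
  for (const Track& t : tracks) slots.push_back(StreamSlot{&t, nullptr});
  return slots;
}

// Find the slot for trackId. An empty id is accepted only for single-track
// media. Returns -1 when nothing matches.
int findSlot(const std::vector<StreamSlot>& slots, const std::string& trackId) {
  if (trackId.empty()) return slots.size() == 1 ? 0 : -1;
  for (std::size_t i = 0; i < slots.size(); ++i) {
    if (slots[i].subsession->trackId == trackId) return static_cast<int>(i);
  }
  return -1;
}
```

Each client session would own its own vector of slots, while the Track objects are shared by all sessions. The token is kept as void* here only to echo the listing; a typed pointer would be the more idiomatic choice in new code.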

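Why not store the streamToken in the ServerMediaSubsession itself? After all, struct streamState pairs one ServerMediaSubsession with one streamToken. The reason is that a ServerMediaSubsession holds the static data of a track and can be reused by other RTP sessions; different users may, for example, connect to the same track of the same media. So the streamToken exists independently of the ServerMediaSubsession, and the two are merely associated with each other by the RTSPClientSession.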

The streamToken is created inside subsession->getStreamParameters(), so let's take a look:

void OnDemandServerMediaSubsession::getStreamParameters(

unsigned clientSessionId,

netAddressBits clientAddress,

Port const& clientRTPPort,

Port const& clientRTCPPort,

int tcpSocketNum,

unsigned char rtpChannelId,

unsigned char rtcpChannelId,

netAddressBits& destinationAddress,

u_int8_t& /*destinationTTL*/,

Boolean& isMulticast,

Port& serverRTPPort,

Port& serverRTCPPort,

void*& streamToken)

{

if (destinationAddress == 0)

destinationAddress = clientAddress;

struct in_addr destinationAddr;

destinationAddr.s_addr = destinationAddress;

isMulticast = False;

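// A ServerMediaSubsession does not keep every data stream created from it; it records only the most recently created one.

// Together with fReuseFirstSource, this variable lets multiple clients attach to a single stream.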

if (fLastStreamToken != NULL && fReuseFirstSource) {

// If a stream has already been created from this ServerMediaSubsession and we want to reuse it,

// simply return that stream.

// Special case: Rather than creating a new 'StreamState',

// we reuse the one that we've already created:

serverRTPPort = ((StreamState*) fLastStreamToken)->serverRTPPort();

serverRTCPPort = ((StreamState*) fLastStreamToken)->serverRTCPPort();

++((StreamState*) fLastStreamToken)->referenceCount();

streamToken = fLastStreamToken;

} else {

// Normal case: Create a new media source:

unsigned streamBitrate;

FramedSource* mediaSource = createNewStreamSource(clientSessionId,streamBitrate);

// Create 'groupsock' and 'sink' objects for the destination,

// using previously unused server port numbers:

RTPSink* rtpSink;

BasicUDPSink* udpSink;

Groupsock* rtpGroupsock;

Groupsock* rtcpGroupsock;

portNumBits serverPortNum;

if (clientRTCPPort.num() == 0) {

// We're streaming raw UDP (not RTP). Create a single groupsock:

NoReuse dummy; // ensures that we skip over ports that are already in use

for (serverPortNum = fInitialPortNum;; ++serverPortNum) {

struct in_addr dummyAddr;

dummyAddr.s_addr = 0;

serverRTPPort = serverPortNum;

rtpGroupsock = new Groupsock(envir(), dummyAddr, serverRTPPort, 255);

if (rtpGroupsock->socketNum() >= 0)

break; // success

}

rtcpGroupsock = NULL;

rtpSink = NULL;

udpSink = BasicUDPSink::createNew(envir(), rtpGroupsock);

} else {

// Normal case: We're streaming RTP (over UDP or TCP). Create a pair of

// groupsocks (RTP and RTCP), with adjacent port numbers (RTP port number even):

NoReuse dummy; // ensures that we skip over ports that are already in use

for (portNumBits serverPortNum = fInitialPortNum;; serverPortNum += 2) {

struct in_addr dummyAddr;

dummyAddr.s_addr = 0;

serverRTPPort = serverPortNum;

rtpGroupsock = new Groupsock(envir(), dummyAddr, serverRTPPort, 255);

if (rtpGroupsock->socketNum() < 0) {

delete rtpGroupsock;

continue; // try again

}

serverRTCPPort = serverPortNum + 1;

rtcpGroupsock = new Groupsock(envir(), dummyAddr,serverRTCPPort, 255);

if (rtcpGroupsock->socketNum() < 0) {

delete rtpGroupsock;

delete rtcpGroupsock;

continue; // try again

}

break; // success

}

unsigned char rtpPayloadType = 96 + trackNumber() - 1; // if dynamic

rtpSink = createNewRTPSink(rtpGroupsock, rtpPayloadType,mediaSource);

udpSink = NULL;

}

// Turn off the destinations for each groupsock. They'll get set later

// (unless TCP is used instead):

if (rtpGroupsock != NULL)

rtpGroupsock->removeAllDestinations();

if (rtcpGroupsock != NULL)

rtcpGroupsock->removeAllDestinations();

if (rtpGroupsock != NULL) {

// Try to use a big send buffer for RTP - at least 0.1 second of

// specified bandwidth and at least 50 KB

unsigned rtpBufSize = streamBitrate * 25 / 2; // 1 kbps * 0.1 s = 12.5 bytes

if (rtpBufSize < 50 * 1024)

rtpBufSize = 50 * 1024;

increaseSendBufferTo(envir(), rtpGroupsock->socketNum(),rtpBufSize);

}

// Set up the state of the stream. The stream will get started later:

streamToken = fLastStreamToken = new StreamState(*this, serverRTPPort,

serverRTCPPort, rtpSink, udpSink, streamBitrate, mediaSource,

rtpGroupsock, rtcpGroupsock);

}

// Record these destinations as being for this client session id:

Destinations* destinations;

if (tcpSocketNum < 0) { // UDP

destinations = new Destinations(destinationAddr, clientRTPPort, clientRTCPPort);

} else { // TCP

destinations = new Destinations(tcpSocketNum, rtpChannelId, rtcpChannelId);

}

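// Record the destination RTP/RTCP address for each clientSessionId here, because the destination

// cannot yet be added to the server-side RTP groupsock: with ReuseFirstSource, doing so would make the client start receiving RTP data immediately.

// The hash table also serves to look up a client's RTP/RTCP ports later; there seems to be nowhere else to keep them,

// since the stream states in RTSPClientSession cannot reliably identify the client's ports under ReuseFirstSource.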

fDestinationsHashTable->Add((char const*) clientSessionId, destinations);

}
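
The port search in the RTP branch above can be sketched without real sockets. In this sketch, allocatePortPair and the isFree callback are hypothetical: isFree stands in for "creating a Groupsock on this port succeeded". Unlike the loop in the listing, the sketch also stops when the port range is exhausted.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <utility>

// Scan upward from initialPort (assumed even) in steps of two until both the
// RTP port and the adjacent RTCP port are free.
// Returns {0, 0} if the port range is exhausted.
std::pair<std::uint16_t, std::uint16_t> allocatePortPair(
    std::uint16_t initialPort,
    const std::function<bool(std::uint16_t)>& isFree) {
  for (unsigned port = initialPort; port + 1 <= 65535; port += 2) {
    std::uint16_t rtp = static_cast<std::uint16_t>(port);
    std::uint16_t rtcp = static_cast<std::uint16_t>(port + 1);
    if (!isFree(rtp)) continue;   // RTP port taken: move on to the next pair
    if (!isFree(rtcp)) continue;  // RTCP port taken: real code would also
                                  // release the RTP socket before retrying
    return {rtp, rtcp};
  }
  return {0, 0};
}
```

If ports 6970 and 6973 are busy and the search starts at 6970, the first two candidate pairs are rejected and the function returns 6974 and 6975. Stepping by two keeps the RTP port even and the RTCP port directly after it, as the comment in the listing requires.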

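The flow is not complicated: if the most recently created stream should be reused, it is used directly (this is how one RTP server can serve multiple RTP clients); otherwise, a suitable source is created, then an RTP sink, and the two are used to create the streamToken.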
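
A compact sketch of that decision, including the per-client destination table, might look like the following. None of these types exist in live555: SharedStream stands in for the class StreamState, TrackSketch for the subsession, and the std::map for fDestinationsHashTable. The explicit referenceCount mirrors the listing, even though std::shared_ptr already counts owners.

```cpp
#include <cassert>
#include <map>
#include <memory>

// Hypothetical stand-ins; none of these types are live555 classes.
struct SharedStream {
  int referenceCount = 1;
};

struct Destination {
  unsigned rtpPort;
  unsigned rtcpPort;
};

class TrackSketch {
 public:
  explicit TrackSketch(bool reuseFirstSource)
      : reuseFirstSource_(reuseFirstSource) {}

  // Returns the stream serving this client and remembers where the client
  // wants its data. The destination is only recorded here, not activated.
  std::shared_ptr<SharedStream> setup(unsigned clientSessionId, Destination d) {
    std::shared_ptr<SharedStream> stream;
    if (last_ && reuseFirstSource_) {
      stream = last_;  // reuse the stream created earlier
      ++stream->referenceCount;
    } else {
      stream = std::make_shared<SharedStream>();  // new source-to-sink chain
      last_ = stream;
    }
    destinations_[clientSessionId] = d;
    return stream;
  }

  // Looks up the destination recorded for a client, or nullptr if unknown.
  const Destination* destinationFor(unsigned clientSessionId) const {
    auto it = destinations_.find(clientSessionId);
    return it == destinations_.end() ? nullptr : &it->second;
  }

 private:
  bool reuseFirstSource_;
  std::shared_ptr<SharedStream> last_;
  std::map<unsigned, Destination> destinations_;
};
```

With reuse enabled, two clients get the same stream object and its count rises to 2; with reuse disabled, each client gets its own stream. In both cases the destination is looked up by client session id later, when the stream is actually started.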

Starting a stream:

When the RTSPClientSession receives a PLAY request, it starts transmitting RTP data. Here is the code that starts the stream:

- void RTSPServer::RTSPClientSession::handleCmd_PLAY(

- ServerMediaSubsession* subsession,

- char const* cseq,

- char const* fullRequestStr)

- {

- char* rtspURL = fOurServer.rtspURL(fOurServerMediaSession,fClientInputSocket);

- unsigned rtspURLSize = strlen(rtspURL);

- // Parse the client's "Scale:" header, if any:

- float scale;

- Boolean sawScaleHeader = parseScaleHeader(fullRequestStr, scale);

- // Try to set the stream's scale factor to this value:

- if (subsession == NULL /*aggregate op*/) {

- fOurServerMediaSession->testScaleFactor(scale);

- } else {

- subsession->testScaleFactor(scale);

- }

- char buf[100];

- char* scaleHeader;

- if (!sawScaleHeader) {

- buf[0] = '\0'; // Because we didn't see a Scale: header, don't send one back

- } else {

- sprintf(buf, "Scale: %f\r\n", scale);

- }

- scaleHeader = strDup(buf);

- // Parse the playback range requested by the client

- // Parse the client's "Range:" header, if any:

- double rangeStart = 0.0, rangeEnd = 0.0;

- Boolean sawRangeHeader = parseRangeHeader(fullRequestStr, rangeStart,rangeEnd);

- // Use this information, plus the stream's duration (if known), to create

- // our own "Range:" header, for the response:

- float duration = subsession == NULL /*aggregate op*/

- ? fOurServerMediaSession->duration() : subsession->duration();

- if (duration < 0.0) {

- // We're an aggregate PLAY, but the subsessions have different durations.

- // Use the largest of these durations in our header

- duration = -duration;

- }

- // Make sure that "rangeStart" and "rangeEnd" (from the client's "Range:" header) have sane values

- // before we send back our own "Range:" header in our response:

- if (rangeStart < 0.0)

- rangeStart = 0.0;

- else if (rangeStart > duration)

- rangeStart = duration;

- if (rangeEnd < 0.0)

- rangeEnd = 0.0;

- else if (rangeEnd > duration)

- rangeEnd = duration;

- if ((scale > 0.0 && rangeStart > rangeEnd && rangeEnd > 0.0)

- || (scale < 0.0 && rangeStart < rangeEnd)) {

- // "rangeStart" and "rangeEnd" were the wrong way around; swap them:

- double tmp = rangeStart;

- rangeStart = rangeEnd;

- rangeEnd = tmp;

- }

- char* rangeHeader;

- if (!sawRangeHeader) {

- buf[0] = '\0'; // Because we didn't see a Range: header, don't send one back

- } else if (rangeEnd == 0.0 && scale >= 0.0) {

- sprintf(buf, "Range: npt=%.3f-\r\n", rangeStart);

- } else {

- sprintf(buf, "Range: npt=%.3f-%.3f\r\n", rangeStart, rangeEnd);

- }

- rangeHeader = strDup(buf);

- // Create a "RTP-Info:" line. It will get filled in from each subsession's state:

- char const* rtpInfoFmt = "%s" // "RTP-Info:", plus any preceding rtpInfo items

- "%s"// comma separator, if needed

- "url=%s/%s"

- ";seq=%d"

- ";rtptime=%u";

- unsigned rtpInfoFmtSize = strlen(rtpInfoFmt);

- char* rtpInfo = strDup("RTP-Info: ");

- unsigned i, numRTPInfoItems = 0;

- // Do any required seeking/scaling on each subsession, before starting streaming:

- for (i = 0; i < fNumStreamStates; ++i) {

- if (subsession == NULL /* means: aggregated operation */

- || subsession == fStreamStates[i].subsession) {

- if (sawScaleHeader) {

- fStreamStates[i].subsession->setStreamScale(fOurSessionId,

- fStreamStates[i].streamToken, scale);

- }

- if (sawRangeHeader) {

- double streamDuration = 0.0; // by default; means: stream until the end of the media

- if (rangeEnd > 0.0 && (rangeEnd + 0.001) < duration) { // the 0.001 is because we limited the values to 3 decimal places

- // We want the stream to end early. Set the duration we want:

- streamDuration = rangeEnd - rangeStart;

- if (streamDuration < 0.0)

- streamDuration = -streamDuration; // should happen only if scale < 0.0

- }

- u_int64_t numBytes;

- fStreamStates[i].subsession->seekStream(fOurSessionId,

- fStreamStates[i].streamToken, rangeStart,

- streamDuration, numBytes);

- }

- }

- }

- // Now, start streaming:

- for (i = 0; i < fNumStreamStates; ++i) {

- if (subsession == NULL /* means: aggregated operation */

- || subsession == fStreamStates[i].subsession) {

- unsigned short rtpSeqNum = 0;

- unsigned rtpTimestamp = 0;

- // Start the stream

- fStreamStates[i].subsession->startStream(fOurSessionId,

- fStreamStates[i].streamToken,

- (TaskFunc*) noteClientLiveness, this, rtpSeqNum,

- rtpTimestamp, handleAlternativeRequestByte, this);

- const char *urlSuffix = fStreamStates[i].subsession->trackId();

- char* prevRTPInfo = rtpInfo;

- unsigned rtpInfoSize = rtpInfoFmtSize + strlen(prevRTPInfo) + 1

- + rtspURLSize + strlen(urlSuffix) + 5 /*max unsigned short len*/

- + 10 /*max unsigned (32-bit) len*/

- + 2 /*allows for trailing \r\n at final end of string*/;

- rtpInfo = new char[rtpInfoSize];

- sprintf(rtpInfo, rtpInfoFmt, prevRTPInfo,

- numRTPInfoItems++ == 0 ? "" : ",", rtspURL, urlSuffix,

- rtpSeqNum, rtpTimestamp);

- delete[] prevRTPInfo;

- }

- }

- if (numRTPInfoItems == 0) {

- rtpInfo[0] = '\0';

- } else {

- unsigned rtpInfoLen = strlen(rtpInfo);

- rtpInfo[rtpInfoLen] = '\r';

- rtpInfo[rtpInfoLen + 1] = '\n';

- rtpInfo[rtpInfoLen + 2] = '\0';

- }

- // Fill in the response:

- snprintf((char*) fResponseBuffer, sizeof fResponseBuffer,

- "RTSP/1.0 200 OK\r\n"

- "CSeq: %s\r\n"

- "%s"

- "%s"

- "%s"

- "Session: %08X\r\n"

- "%s\r\n", cseq, dateHeader(), scaleHeader, rangeHeader,

- fOurSessionId, rtpInfo);

- delete[] rtpInfo;

- delete[] rangeHeader;

- delete[] scaleHeader;

- delete[] rtspURL;

- }
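
The range handling in handleCmd_PLAY() above can be tried in isolation. What follows is a minimal sketch, not live555 code: `NptRange`, `sanitizeRange()` and `formatRangeHeader()` are hypothetical names introduced here for illustration, and only the clamping, swapping and formatting rules are taken from the listing.

```cpp
#include <cstdio>
#include <string>
#include <utility>

// Hypothetical helper type (not part of live555).
struct NptRange {
  double start;
  double end;  // 0.0 means "until the end of the media"
};

// Clamps a client-supplied range to [0, duration] and swaps the two ends
// if they run against the playback direction given by "scale".
NptRange sanitizeRange(NptRange r, double duration, float scale) {
  if (r.start < 0.0) r.start = 0.0;
  else if (r.start > duration) r.start = duration;
  if (r.end < 0.0) r.end = 0.0;
  else if (r.end > duration) r.end = duration;
  if ((scale > 0.0f && r.start > r.end && r.end > 0.0) ||
      (scale < 0.0f && r.start < r.end)) {
    std::swap(r.start, r.end);
  }
  return r;
}

// Formats the "Range:" response header with three decimal places,
// leaving the end open when it is 0.0 and playback runs forwards.
std::string formatRangeHeader(const NptRange& r, float scale) {
  char buf[100];
  if (r.end == 0.0 && scale >= 0.0f) {
    std::snprintf(buf, sizeof buf, "Range: npt=%.3f-\r\n", r.start);
  } else {
    std::snprintf(buf, sizeof buf, "Range: npt=%.3f-%.3f\r\n", r.start, r.end);
  }
  return buf;
}
```

For a 120-second stream played forwards, a request for -5 to 500 seconds is clamped to 0 to 120, and a request for 30 to 10 seconds is swapped to 10 to 30.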

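One question remains: if this streamToken refers to a stream that already exists (remember ReuseFirstSource?), what does calling startStream() on it again actually do? My guess: it passes this client's address and RTP/RTCP ports to the RTP server's groupsock, and the RTP server then starts sending data to this client as well. Is that right? Let's look at the code, where startStream() ends up in StreamState::startPlaying():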

- void StreamState::startPlaying(

- Destinations* dests,

- TaskFunc* rtcpRRHandler,

- void* rtcpRRHandlerClientData,

- ServerRequestAlternativeByteHandler* serverRequestAlternativeByteHandler,

- void* serverRequestAlternativeByteHandlerClientData)

- {

- // dests holds the target IP address and the RTP and RTCP ports

- if (dests == NULL)

- return;

- // Create the RTCPInstance object, which computes, sends and receives RTCP packets

- if (fRTCPInstance == NULL && fRTPSink != NULL) {

- // Create (and start) a 'RTCP instance' for this RTP sink:

- fRTCPInstance = RTCPInstance::createNew(fRTPSink->envir(), fRTCPgs,

- fTotalBW, (unsigned char*) fMaster.fCNAME, fRTPSink,

- NULL /* we're a server */);

- // Note: This starts RTCP running automatically

- }

- if (dests->isTCP) {

- // The RTP-over-TCP case

- // Change RTP and RTCP to use the TCP socket instead of UDP:

- if (fRTPSink != NULL) {

- fRTPSink->addStreamSocket(dests->tcpSocketNum, dests->rtpChannelId);

- fRTPSink->setServerRequestAlternativeByteHandler(

- dests->tcpSocketNum, serverRequestAlternativeByteHandler,

- serverRequestAlternativeByteHandlerClientData);

- }

- if (fRTCPInstance != NULL) {

- fRTCPInstance->addStreamSocket(dests->tcpSocketNum,

- dests->rtcpChannelId);

- fRTCPInstance->setSpecificRRHandler(dests->tcpSocketNum,

- dests->rtcpChannelId, rtcpRRHandler,

- rtcpRRHandlerClientData);

- }

- } else {

- // Add this destination to the RTP and RTCP groupsocks

- // Tell the RTP and RTCP 'groupsocks' about this destination

- // (in case they don't already have it):

- if (fRTPgs != NULL)

- fRTPgs->addDestination(dests->addr, dests->rtpPort);

- if (fRTCPgs != NULL)

- fRTCPgs->addDestination(dests->addr, dests->rtcpPort);

- if (fRTCPInstance != NULL) {

- fRTCPInstance->setSpecificRRHandler(dests->addr.s_addr,

- dests->rtcpPort, rtcpRRHandler, rtcpRRHandlerClientData);

- }

- }

- if (!fAreCurrentlyPlaying && fMediaSource != NULL) {

- // If transmission has not started yet, start it now.

- if (fRTPSink != NULL) {

- fRTPSink->startPlaying(*fMediaSource, afterPlayingStreamState,

- this);

- fAreCurrentlyPlaying = True;

- } else if (fUDPSink != NULL) {

- fUDPSink->startPlaying(*fMediaSource, afterPlayingStreamState,this);

- fAreCurrentlyPlaying = True;

- }

- }

- }

And indeed, that is exactly what happens!
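
The idea that one running stream serves several clients simply by growing a destination list can be sketched as follows. This is an illustrative assumption, not the groupsock implementation: `FanOutSender` and its members are hypothetical names, and packets are recorded in a log instead of being written to a socket.

```cpp
#include <cstddef>
#include <cstdint>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a groupsock: one sender, many unicast destinations.
class FanOutSender {
public:
  typedef std::pair<std::string, std::uint16_t> Destination;  // (address, port)

  // Adding the same destination twice has no effect, which mirrors the
  // "in case they don't already have it" remark in the listing above.
  void addDestination(const std::string& addr, std::uint16_t port) {
    fDests.insert(Destination(addr, port));
  }

  void removeDestination(const std::string& addr, std::uint16_t port) {
    fDests.erase(Destination(addr, port));
  }

  // "Sends" one packet to every destination and returns how many copies
  // went out. A real sender would call sendto() once per destination.
  std::size_t send(const std::string& packet) {
    for (const Destination& d : fDests) fLog.push_back({d, packet});
    return fDests.size();
  }

  const std::vector<std::pair<Destination, std::string> >& log() const {
    return fLog;
  }

private:
  std::set<Destination> fDests;
  std::vector<std::pair<Destination, std::string> > fLog;
};
```

With this shape, a second client joining an already playing stream costs only one more `addDestination()` call; the packetizing loop itself does not need to know how many receivers there are.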

7 RTP Packetization and Sending

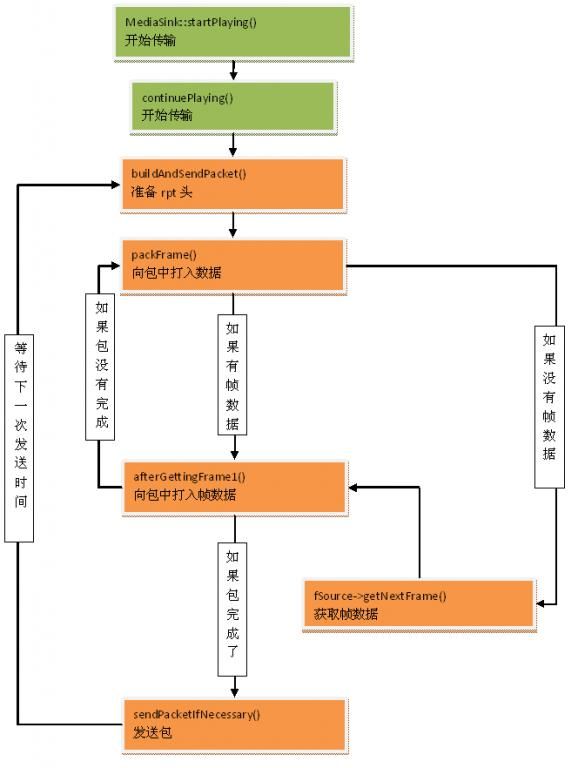

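RTP transmission starts in MediaSink::startPlaying(). That makes sense: it is the sink that asks the source for data, so startPlaying() is called on the sink (much like the pull model in DirectShow).

Here is the function: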

- Boolean MediaSink::startPlaying(MediaSource& source,

- afterPlayingFunc* afterFunc, void* afterClientData)

- {

- // afterFunc is called only once playing has finished.

- // Make sure we're not already being played:

- if (fSource != NULL) {

- envir().setResultMsg("This sink is already being played");

- return False;

- }

- // Make sure our source is compatible:

- if (!sourceIsCompatibleWithUs(source)) {

- envir().setResultMsg(

- "MediaSink::startPlaying(): source is not compatible!");

- return False;

- }

- // Remember the objects we will need later

- fSource = (FramedSource*) &source;

- fAfterFunc = afterFunc;

- fAfterClientData = afterClientData;

- return continuePlaying();

- }

For further encapsulation (so that subclasses have less code to write), the work is handed to a virtual function, continuePlaying(). Let's take a look:

- Boolean MultiFramedRTPSink::continuePlaying() {

- // Send the first packet.

- // (This will also schedule any future sends.)

- buildAndSendPacket(True);

- return True;

- }

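MultiFramedRTPSink is a frame-oriented class: each read from the source must deliver one frame, and, as the code below shows, it packs as many complete frames into a packet as it can, hence the name. As you can see, continuePlaying() does nothing except call buildAndSendPacket(). Here is buildAndSendPacket():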

- void MultiFramedRTPSink::buildAndSendPacket(Boolean isFirstPacket)

- {

- // This function mainly prepares the RTP header, leaving holes for the fields that depend on the actual data.

- fIsFirstPacket = isFirstPacket;

- // Set up the RTP header:

- unsigned rtpHdr = 0x80000000; // RTP version 2; marker ('M') bit not set (by default; it can be set later)

- rtpHdr |= (fRTPPayloadType << 16);

- rtpHdr |= fSeqNo; // sequence number

- fOutBuf->enqueueWord(rtpHdr); // append one 32-bit word to the packet

- // Note where the RTP timestamp will go.

- // (We can't fill this in until we start packing payload frames.)

- fTimestampPosition = fOutBuf->curPacketSize();

- fOutBuf->skipBytes(4); // leave a hole for the timestamp

- fOutBuf->enqueueWord(SSRC());

- // Allow for a special, payload-format-specific header following the

- // RTP header:

- fSpecialHeaderPosition = fOutBuf->curPacketSize();

- fSpecialHeaderSize = specialHeaderSize();

- fOutBuf->skipBytes(fSpecialHeaderSize);

- // Begin packing as many (complete) frames into the packet as we can:

- fTotalFrameSpecificHeaderSizes = 0;

- fNoFramesLeft = False;

- fNumFramesUsedSoFar = 0; // number of frames packed into the current packet so far

- // The header is ready; now pack the frame data

- packFrame();

- }
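
To make the header layout concrete, here is a small sketch that builds the fixed 12-byte RTP header described in RFC 3550. It is a simplification under stated assumptions: `buildRtpHeader()` is a hypothetical helper, it fills in the timestamp immediately instead of leaving a hole as buildAndSendPacket() does, and it ignores CSRC entries, padding and header extensions.

```cpp
#include <array>
#include <cstdint>

// Builds the fixed RTP header in network byte order (big-endian).
std::array<std::uint8_t, 12> buildRtpHeader(std::uint8_t payloadType,
                                            std::uint16_t seqNo,
                                            std::uint32_t timestamp,
                                            std::uint32_t ssrc,
                                            bool marker = false) {
  std::uint32_t word0 = 0x80000000u;     // version 2, no padding/extension/CSRC
  if (marker) word0 |= 0x00800000u;      // marker ('M') bit
  word0 |= static_cast<std::uint32_t>(payloadType & 0x7F) << 16;
  word0 |= seqNo;                        // sequence number in the low 16 bits

  std::array<std::uint8_t, 12> hdr{};
  const std::uint32_t words[3] = {word0, timestamp, ssrc};
  for (int w = 0; w < 3; ++w) {
    for (int b = 0; b < 4; ++b) {
      // Most significant byte first.
      hdr[w * 4 + b] = static_cast<std::uint8_t>(words[w] >> (24 - 8 * b));
    }
  }
  return hdr;
}
```

The first word corresponds to the `rtpHdr` variable in the listing: payload type 96 with sequence number 0x1234 yields the bytes 80 60 12 34.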

Next, packFrame():

- void MultiFramedRTPSink::packFrame()

- {

- // First, see if we have an overflow frame that was too big for the last pkt

- if (fOutBuf->haveOverflowData()) {

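- // If there is leftover frame data, use it. Overflow data is frame data left over from the previous packet, since one packet may be too small to hold a whole frame.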

- // Use this frame before reading a new one from the source

- unsigned frameSize = fOutBuf->overflowDataSize();

- struct timeval presentationTime = fOutBuf->overflowPresentationTime();

- unsigned durationInMicroseconds =fOutBuf->overflowDurationInMicroseconds();

- fOutBuf->useOverflowData();

- afterGettingFrame1(frameSize, 0, presentationTime,durationInMicroseconds);

- } else {

- // No frame data at all; ask the source for some.

- // Normal case: we need to read a new frame from the source

- if (fSource == NULL)

- return;

- // Update some positions within the buffer

- fCurFrameSpecificHeaderPosition = fOutBuf->curPacketSize();

- fCurFrameSpecificHeaderSize = frameSpecificHeaderSize();

- fOutBuf->skipBytes(fCurFrameSpecificHeaderSize);

- fTotalFrameSpecificHeaderSizes += fCurFrameSpecificHeaderSize;

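- // Get the next frame from the source

- fSource->getNextFrame(fOutBuf->curPtr(), // where the new data is to be stored

- fOutBuf->totalBytesAvailable(), // free space left in the buffer

- afterGettingFrame, // the source may do its reading via the task scheduler, so pass it the function to call once a frame has been obtained

- this,

- ourHandleClosure, // called when the source closes (for example, the file has been read to the end)

- this);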

- }

- }

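As you can imagine, the source now reads one frame from a file (or some device) and hands it back to the sink, not through a return value but by invoking the afterGettingFrame callback. So let's look at afterGettingFrame():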

- void MultiFramedRTPSink::afterGettingFrame(void* clientData,

- unsigned numBytesRead, unsigned numTruncatedBytes,

- struct timeval presentationTime, unsigned durationInMicroseconds)

- {

- MultiFramedRTPSink* sink = (MultiFramedRTPSink*) clientData;

- sink->afterGettingFrame1(numBytesRead, numTruncatedBytes, presentationTime,

- durationInMicroseconds);

- }
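
The pattern used here, a static function that receives `clientData` and forwards to a member function, can be shown with a toy sink and source. All names below (`ToySource`, `ToySink`, `AfterGettingFunc`) are hypothetical and only loosely modeled on the listing; in particular, this toy source invokes the callback synchronously, whereas a real source may defer it through the task scheduler.

```cpp
#include <cstddef>
#include <cstring>
#include <string>

// Callback signature: opaque client data plus the number of bytes delivered.
typedef void AfterGettingFunc(void* clientData, unsigned frameSize);

// Toy source that hands out pieces of a string as "frames".
class ToySource {
public:
  explicit ToySource(const std::string& data) : fData(data), fPos(0) {}

  // Copies up to maxSize bytes into "to", then reports through the callback.
  void getNextFrame(unsigned char* to, unsigned maxSize,
                    AfterGettingFunc* afterGetting, void* clientData) {
    unsigned n = static_cast<unsigned>(fData.size() - fPos);
    if (n > maxSize) n = maxSize;
    std::memcpy(to, fData.data() + fPos, n);
    fPos += n;
    (*afterGetting)(clientData, n);
  }

private:
  std::string fData;
  std::size_t fPos;
};

class ToySink {
public:
  explicit ToySink(ToySource& source) : fSource(source), fTotal(0) {}

  // The sink pulls: it asks the source for data and passes "this" along.
  void requestFrame() {
    fSource.getNextFrame(fBuf, sizeof fBuf, afterGettingFrame, this);
  }
  unsigned totalBytes() const { return fTotal; }

private:
  // Static trampoline: recovers the object from clientData and forwards.
  static void afterGettingFrame(void* clientData, unsigned frameSize) {
    static_cast<ToySink*>(clientData)->afterGettingFrame1(frameSize);
  }
  void afterGettingFrame1(unsigned frameSize) { fTotal += frameSize; }

  ToySource& fSource;
  unsigned char fBuf[4];
  unsigned fTotal;
};
```

The static function is needed because a plain function pointer cannot carry `this`; the object pointer travels separately as `clientData`.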

There is not much to see: it merely forwards to a member function, so afterGettingFrame1() is where the real work happens:

- void MultiFramedRTPSink::afterGettingFrame1(

- unsigned frameSize,

- unsigned numTruncatedBytes,

- struct timeval presentationTime,

- unsigned durationInMicroseconds)

- {

- if (fIsFirstPacket) {

- // Record the fact that we're starting to play now:

- gettimeofday(&fNextSendTime, NULL);

- }

- // If the buffer offered for a frame is too small, the frame gets truncated. All we can do is warn the user.

- if (numTruncatedBytes > 0) {

- unsigned const bufferSize = fOutBuf->totalBytesAvailable();

- envir()

- << "MultiFramedRTPSink::afterGettingFrame1(): The input frame data was too large for our buffer size ("

- << bufferSize

- << "). "

- << numTruncatedBytes

- << " bytes of trailing data was dropped! Correct this by increasing \"OutPacketBuffer::maxSize\" to at least "

- << OutPacketBuffer::maxSize + numTruncatedBytes

- << ", *before* creating this 'RTPSink'. (Current value is "

- << OutPacketBuffer::maxSize << ".)\n";

- }

- unsigned curFragmentationOffset = fCurFragmentationOffset;

- unsigned numFrameBytesToUse = frameSize;

- unsigned overflowBytes = 0;

- // If the packet already contains frame data and nothing more may be added to it, save the newly obtained frame for later.

- // If we have already packed one or more frames into this packet,

- // check whether this new frame is eligible to be packed after them.

- // (This is independent of whether the packet has enough room for this

- // new frame; that check comes later.)

- if (fNumFramesUsedSoFar > 0) {

- // The packet already holds a frame and no further frame is allowed in it, so just record the new frame.

- if ((fPreviousFrameEndedFragmentation && !allowOtherFramesAfterLastFragment())

- || !frameCanAppearAfterPacketStart(fOutBuf->curPtr(), frameSize))

- {

- // Save away this frame for next time:

- numFrameBytesToUse = 0;

- fOutBuf->setOverflowData(fOutBuf->curPacketSize(), frameSize,

- presentationTime, durationInMicroseconds);

- }

- }

- // Records whether the data being packed now is the final fragment of a fragmented frame.

- fPreviousFrameEndedFragmentation = False;

- // Work out how much of the frame fits into the current packet; the rest is saved as overflow data.

- if (numFrameBytesToUse > 0) {

- // Check whether this frame overflows the packet

- if (fOutBuf->wouldOverflow(frameSize)) {

- // Don't use this frame now; instead, save it as overflow data, and

- // send it in the next packet instead. However, if the frame is too

- // big to fit in a packet by itself, then we need to fragment it (and

- // use some of it in this packet, if the payload format permits this.)

- if (isTooBigForAPacket(frameSize)

- && (fNumFramesUsedSoFar == 0 || allowFragmentationAfterStart())) {

- // We need to fragment this frame, and use some of it now:

- overflowBytes = computeOverflowForNewFrame(frameSize);

- numFrameBytesToUse -= overflowBytes;

- fCurFragmentationOffset += numFrameBytesToUse;

- } else {

- // We don't use any of this frame now:

- overflowBytes = frameSize;

- numFrameBytesToUse = 0;

- }

- fOutBuf->setOverflowData(fOutBuf->curPacketSize() + numFrameBytesToUse,

- overflowBytes, presentationTime, durationInMicroseconds);

- } else if (fCurFragmentationOffset > 0) {

- // This is the last fragment of a frame that was fragmented over

- // more than one packet. Do any special handling for this case:

- fCurFragmentationOffset = 0;

- fPreviousFrameEndedFragmentation = True;

- }

- }

- if (numFrameBytesToUse == 0 && frameSize > 0) {

- // None of the new frame goes into this packet (it was saved as overflow data), so send what the packet already holds.

- // Send our packet now, because we have filled it up:

- sendPacketIfNecessary();

- } else {

- // Some frame data goes into the packet.

- // Use this frame in our outgoing packet:

- unsigned char* frameStart = fOutBuf->curPtr();

- fOutBuf->increment(numFrameBytesToUse);

- // do this now, in case "doSpecialFrameHandling()" calls "setFramePadding()" to append padding bytes

- // Here's where any payload format specific processing gets done:

- doSpecialFrameHandling(curFragmentationOffset, frameStart,

- numFrameBytesToUse, presentationTime, overflowBytes);

- ++fNumFramesUsedSoFar;

- // Update the time at which the next packet should be sent, based

- // on the duration of the frame that we just packed into it.

- // However, if this frame has overflow data remaining, then don't

- // count its duration yet.

- if (overflowBytes == 0) {

- fNextSendTime.tv_usec += durationInMicroseconds;

- fNextSendTime.tv_sec += fNextSendTime.tv_usec / 1000000;

- fNextSendTime.tv_usec %= 1000000;

- }

- // Send the packet if it is ready; otherwise keep packing data.

- // Send our packet now if (i) it's already at our preferred size, or

- // (ii) (heuristic) another frame of the same size as the one we just

- // read would overflow the packet, or

- // (iii) it contains the last fragment of a fragmented frame, and we

- // don't allow anything else to follow this or

- // (iv) one frame per packet is allowed:

- if (fOutBuf->isPreferredSize()

- || fOutBuf->wouldOverflow(numFrameBytesToUse)

- || (fPreviousFrameEndedFragmentation

- && !allowOtherFramesAfterLastFragment())

- || !frameCanAppearAfterPacketStart(

- fOutBuf->curPtr() - frameSize, frameSize)) {

- // The packet is ready to be sent now

- sendPacketIfNecessary();

- } else {

- // There's room for more frames; try getting another:

- packFrame();

- }

- }

- }
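
The fragmentation branch above, where a frame is too big for one packet and the remainder is kept as overflow data, reduces to the following loop. This is a sketch under simplifying assumptions: `fragmentFrame()` is a hypothetical helper, headers are ignored, and packing several small frames into one packet is not modeled.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Returns the payload size of each packet needed to carry one frame of
// frameSize bytes when a packet can hold at most maxPayload bytes.
std::vector<std::size_t> fragmentFrame(std::size_t frameSize,
                                       std::size_t maxPayload) {
  std::vector<std::size_t> packets;
  if (maxPayload == 0) return packets;  // nothing can be sent
  std::size_t overflow = frameSize;     // bytes still waiting to be packed
  while (overflow > 0) {
    std::size_t use = std::min(overflow, maxPayload);
    packets.push_back(use);             // this much goes into the current packet
    overflow -= use;                    // the rest stays behind as overflow data
  }
  return packets;
}
```

A 3000-byte frame with a 1400-byte payload limit therefore travels in three packets of 1400, 1400 and 200 bytes, and only the last one ends the fragmentation.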

Now the function that sends the data:

- void MultiFramedRTPSink::sendPacketIfNecessary()

- {

- // Send the packet

- if (fNumFramesUsedSoFar > 0) {

- // Send the packet:

- #ifdef TEST_LOSS

- if ((our_random()%10) != 0) // simulate 10% packet loss #####

- #endif

- if (!fRTPInterface.sendPacket(fOutBuf->packet(),fOutBuf->curPacketSize())) {

- // if failure handler has been specified, call it

- if (fOnSendErrorFunc != NULL)

- (*fOnSendErrorFunc)(fOnSendErrorData);

- }

- ++fPacketCount;

- fTotalOctetCount += fOutBuf->curPacketSize();

- fOctetCount += fOutBuf->curPacketSize() - rtpHeaderSize

- - fSpecialHeaderSize - fTotalFrameSpecificHeaderSizes;

- ++fSeqNo; // for next time

- }

- // If overflow data remains, adjust the buffer

- if (fOutBuf->haveOverflowData()

- && fOutBuf->totalBytesAvailable() > fOutBuf->totalBufferSize() / 2) {

- // Efficiency hack: Reset the packet start pointer to just in front of

- // the overflow data (allowing for the RTP header and special headers),

- // so that we probably don't have to "memmove()" the overflow data

- // into place when building the next packet:

- unsigned newPacketStart = fOutBuf->curPacketSize()-

- (rtpHeaderSize + fSpecialHeaderSize + frameSpecificHeaderSize());

- fOutBuf->adjustPacketStart(newPacketStart);

- } else {

- // Normal case: Reset the packet start pointer back to the start:

- fOutBuf->resetPacketStart();

- }

- fOutBuf->resetOffset();

- fNumFramesUsedSoFar = 0;

- if (fNoFramesLeft) {

- // No data left; finish up

- // We're done:

- onSourceClosure(this);

- } else {

- // More data remains; build and send the next packet when it falls due.

- // We have more frames left to send. Figure out when the next frame

- // is due to start playing, then make sure that we wait this long before

- // sending the next packet.

- struct timeval timeNow;

- gettimeofday(&timeNow, NULL);

- int secsDiff = fNextSendTime.tv_sec - timeNow.tv_sec;

- int64_t uSecondsToGo = secsDiff * 1000000

- + (fNextSendTime.tv_usec - timeNow.tv_usec);

- if (uSecondsToGo < 0 || secsDiff < 0) { // sanity check: Make sure that the time-to-delay is non-negative:

- uSecondsToGo = 0;

- }

- // Delay this amount of time:

- nextTask() = envir().taskScheduler().scheduleDelayedTask(uSecondsToGo,

- (TaskFunc*) sendNext, this);

- }

- }

void MultiFramedRTPSink::sendPacketIfNecessary()

{

//发送包

if (fNumFramesUsedSoFar > 0) {

// Send the packet:

#ifdef TEST_LOSS

if ((our_random()%10) != 0) // simulate 10% packet loss #####

#endif

if (!fRTPInterface.sendPacket(fOutBuf->packet(),fOutBuf->curPacketSize())) {

// if failure handler has been specified, call it

if (fOnSendErrorFunc != NULL)

(*fOnSendErrorFunc)(fOnSendErrorData);

}

++fPacketCount;

fTotalOctetCount += fOutBuf->curPacketSize();

fOctetCount += fOutBuf->curPacketSize() - rtpHeaderSize

- fSpecialHeaderSize - fTotalFrameSpecificHeaderSizes;

++fSeqNo; // for next time

}

// If overflow data remains, adjust the buffer

if (fOutBuf->haveOverflowData()

&& fOutBuf->totalBytesAvailable() > fOutBuf->totalBufferSize() / 2) {

// Efficiency hack: Reset the packet start pointer to just in front of

// the overflow data (allowing for the RTP header and special headers),

// so that we probably don't have to "memmove()" the overflow data

// into place when building the next packet:

unsigned newPacketStart = fOutBuf->curPacketSize()-

(rtpHeaderSize + fSpecialHeaderSize + frameSpecificHeaderSize());

fOutBuf->adjustPacketStart(newPacketStart);

} else {

// Normal case: Reset the packet start pointer back to the start:

fOutBuf->resetPacketStart();

}

fOutBuf->resetOffset();

fNumFramesUsedSoFar = 0;

if (fNoFramesLeft) {

// No data is left, so finish up

// We're done:

onSourceClosure(this);

} else {

// Data remains, so build and send the next packet when its send time arrives.

// We have more frames left to send. Figure out when the next frame

// is due to start playing, then make sure that we wait this long before

// sending the next packet.

struct timeval timeNow;

gettimeofday(&timeNow, NULL);

int secsDiff = fNextSendTime.tv_sec - timeNow.tv_sec;

int64_t uSecondsToGo = secsDiff * 1000000

+ (fNextSendTime.tv_usec - timeNow.tv_usec);

if (uSecondsToGo < 0 || secsDiff < 0) { // sanity check: Make sure that the time-to-delay is non-negative:

uSecondsToGo = 0;

}

// Delay this amount of time:

nextTask() = envir().taskScheduler().scheduleDelayedTask(uSecondsToGo,

(TaskFunc*) sendNext, this);

}

}

As you can see, packet sending is paced by scheduling a delayed task that performs the next build-and-send step.
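The delay calculation can be tried out on its own. Below is a minimal sketch, assuming a POSIX system that provides `<sys/time.h>`; `microsecondsUntil` is a hypothetical helper name of mine, not a live555 function. Unlike the library code above, it widens to 64 bits before multiplying, which is my own choice to rule out `int` overflow when the two times are far apart.

```cpp
#include <cassert>    // for assert() in the usage checks
#include <cstdint>
#include <sys/time.h> // struct timeval (POSIX; an assumption of this sketch)

// Hypothetical helper (not part of live555): microseconds from `now` until
// `nextSendTime`, clamped to zero when the send time has already passed.
int64_t microsecondsUntil(const timeval& nextSendTime, const timeval& now) {
  // Widen to 64 bits before multiplying so that a large difference in
  // seconds cannot overflow a 32-bit int.
  int64_t secsDiff = static_cast<int64_t>(nextSendTime.tv_sec) - now.tv_sec;
  int64_t uSecondsToGo = secsDiff * 1000000
      + (static_cast<int64_t>(nextSendTime.tv_usec) - now.tv_usec);
  // Sanity check, as in the original: never return a negative delay.
  return uSecondsToGo < 0 ? 0 : uSecondsToGo;
}
```

The returned value is what would be handed to the scheduler as the delay; a result of 0 means "send right away".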

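sendNext() in turn calls buildAndSendPacket(), so, amusingly enough, we are back where we started.

To summarize the call sequence: sendPacketIfNecessary() schedules sendNext() as a delayed task, and sendNext() calls buildAndSendPacket() again, which closes the loop.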

Finally, a note on how the packet buffer is used:

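MultiFramedRTPSink keeps frame data and the packet in one shared buffer, and uses a few extra variables to mark which part of the buffer belongs to the packet and which part is frame data (data lying outside the packet is called overflow data). Sometimes it moves the overflow data to the start of the packet with memmove(), and sometimes it simply sets the packet start to where the overflow data begins. How is the size of this buffer determined? It is computed as the maximum packet size specified by the caller, plus 60000. This part confused me: if one frame is fetched from the source at a time, the buffer should be no smaller than the largest frame, so why is it sized by packet? As you can see, when the buffer is too small, all that happens is a warning: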


if (numTruncatedBytes > 0) {

unsigned const bufferSize = fOutBuf->totalBytesAvailable();

envir()

<< "MultiFramedRTPSink::afterGettingFrame1(): The input frame data was too large for our buffer size ("

<< bufferSize

<< "). "

<< numTruncatedBytes

<< " bytes of trailing data was dropped! Correct this by increasing \"OutPacketBuffer::maxSize\" to at least "

<< OutPacketBuffer::maxSize + numTruncatedBytes

<< ", *before* creating this 'RTPSink'. (Current value is "

<< OutPacketBuffer::maxSize << ".)\n";

}

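Of course nothing fails at this point, but it may make timestamps inaccurate, or complicate timestamp calculation and the handling on the source side (because timestamps are easy to compute when one frame is fetched at a time).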
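To make the truncation case concrete, here is a minimal sketch of what happens when a frame is larger than the space left in the buffer. The names `FrameCopyResult`, `copyFrame` and `suggestedMaxSize` are hypothetical and only model the behaviour; the real logic lives inside live555 and is more involved. The only thing taken from the source is the arithmetic in the warning message: the suggested size is the current maximum plus the number of dropped bytes.

```cpp
#include <algorithm>
#include <cassert>   // for assert() in the usage checks
#include <cstring>
#include <vector>

// Hypothetical result type for this sketch; not a live555 structure.
struct FrameCopyResult {
  unsigned bytesCopied;        // bytes that fit into the buffer
  unsigned numTruncatedBytes;  // trailing bytes that were dropped
};

// Copy `frame` into `buffer` starting at `bytesAlreadyUsed`, dropping whatever
// does not fit, the way the warning above describes.
FrameCopyResult copyFrame(const std::vector<unsigned char>& frame,
                          std::vector<unsigned char>& buffer,
                          unsigned bytesAlreadyUsed) {
  unsigned bufferSize = static_cast<unsigned>(buffer.size());
  unsigned frameSize = static_cast<unsigned>(frame.size());
  unsigned available =
      bufferSize > bytesAlreadyUsed ? bufferSize - bytesAlreadyUsed : 0;
  unsigned toCopy = std::min(frameSize, available);
  if (toCopy > 0) {
    std::memcpy(buffer.data() + bytesAlreadyUsed, frame.data(), toCopy);
  }
  return {toCopy, frameSize - toCopy};
}

// The value the warning recommends: current maximum plus the dropped bytes.
unsigned suggestedMaxSize(unsigned currentMaxSize, unsigned numTruncatedBytes) {
  return currentMaxSize + numTruncatedBytes;
}
```

For example, a 100-byte frame copied into an empty 60-byte buffer leaves 40 bytes truncated, and the suggested maximum becomes 100. In a real program the remedy named by the warning itself is to raise `OutPacketBuffer::maxSize` before creating the RTPSink.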

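8. Analysis of RTSPClient

Where there is an RTSPServer, there must of course be an RTSPClient.

Going by the server-side architecture, one might guess that the client side is put together like this:

Since it has to connect to the RTSP server, RTSPClient needs a TCP socket. After it receives the server's DESCRIBE response, it should create a ClientMediaSession corresponding to the ServerMediaSession. For each track, a ClientMediaSubsession should be created inside the ClientMediaSession. When setting up the RTP session, it should send a SETUP request for each of its tracks, create an RTP socket for each track once the responses arrive, then request PLAY, after which data starts to flow. Is that how it really works? The only way to find out is to read the code.

openRTSP in testProgs is the typical RTSPClient example, so let's analyze that.

The main() function is in playCommon.cpp. Its flow is fairly simple and not much different from the server's: create the task scheduler object, create the environment object, process the user's arguments (the RTSP URL), create the RTSPClient instance, send the first RTSP request (either OPTIONS or DESCRIBE), and enter the loop.

The RTSP TCP connection is established only when the first RTSP request is sent. The request-sending functions of RTSPClient, sendXXXXXXCommand(), all end up calling sendRequest(), and sendRequest() sets up the TCP connection when needed. As soon as the connection is being set up, a socket handler for data arriving on this TCP socket, RTSPClient::incomingDataHandler(), is added to the task scheduler.

Next comes sending the RTSP requests. OPTIONS is not worth looking at, so we start with the DESCRIBE request: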


void getSDPDescription(RTSPClient::responseHandler* afterFunc)

{

ourRTSPClient->sendDescribeCommand(afterFunc, ourAuthenticator);

}

unsigned RTSPClient::sendDescribeCommand(responseHandler* responseHandler,

Authenticator* authenticator)

{

if (authenticator != NULL)

fCurrentAuthenticator = *authenticator;

return sendRequest(new RequestRecord(++fCSeq, "DESCRIBE", responseHandler));

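}

The responseHandler parameter is a callback supplied by the caller; it is invoked after the response to the request has been processed. The next request is issued from inside this callback, and all requests are sent one after another in this way. Callbacks are used mainly because sending and receiving on the socket do not happen synchronously. The RequestRecord class represents one request: it stores not only the information about the RTSP request but also the callback to run once the request completes, which is responseHandler. Some requests are issued before the TCP connection exists and cannot be sent immediately, so they are added to the fRequestsAwaitingConnection queue; others have been sent and must wait for the server's response, so they are added to the fRequestsAwaitingResponse queue and are taken out of it when the response arrives.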
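The two queues and the callback chaining can be modelled in a few lines. What follows is only a sketch under my own assumptions: `SketchRequest` and `SketchClient` are hypothetical names, the "socket" is just a vector that records what was sent, and responses are matched by CSeq. It is not how RTSPClient is actually implemented, merely the same idea in miniature. As in live555, a result code of 0 is taken to mean success.

```cpp
#include <cassert>     // for assert() in the usage checks
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical miniature of a request record: CSeq, command name, callback.
struct SketchRequest {
  unsigned cseq;
  std::string command;
  std::function<void(int resultCode, const std::string& body)> handler;
};

class SketchClient {
public:
  // Queue or transmit a request; returns its CSeq.
  unsigned send(const std::string& command,
                std::function<void(int, const std::string&)> handler) {
    SketchRequest req{++cseq_, command, std::move(handler)};
    unsigned id = req.cseq;
    if (!connected_) {
      awaitingConnection_.push_back(std::move(req));  // no connection yet
    } else {
      transmit(std::move(req));
    }
    return id;
  }

  // Called once the TCP connection is up: flush everything that was waiting.
  void onConnected() {
    connected_ = true;
    while (!awaitingConnection_.empty()) {
      SketchRequest req = std::move(awaitingConnection_.front());
      awaitingConnection_.pop_front();
      transmit(std::move(req));
    }
  }

  // Match a response to its request by CSeq, remove the record, then run the
  // callback. Returns false if no pending request has this CSeq.
  bool onResponse(unsigned cseq, int resultCode, const std::string& body) {
    for (auto it = awaitingResponse_.begin(); it != awaitingResponse_.end(); ++it) {
      if (it->cseq == cseq) {
        SketchRequest req = std::move(*it);
        awaitingResponse_.erase(it);
        if (req.handler) req.handler(resultCode, body);  // may call send() again
        return true;
      }
    }
    return false;
  }

  std::vector<std::string> sent;  // commands "written to the socket", in order

private:
  void transmit(SketchRequest req) {
    sent.push_back(req.command);
    awaitingResponse_.push_back(std::move(req));
  }

  bool connected_ = false;
  unsigned cseq_ = 0;
  std::deque<SketchRequest> awaitingConnection_;
  std::deque<SketchRequest> awaitingResponse_;
};
```

A caller would send "DESCRIBE" with a handler that, on success, sends "SETUP"; nothing reaches `sent` until `onConnected()` runs, and "SETUP" appears only after `onResponse()` delivers the DESCRIBE result.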

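RTSPClient::sendRequest() is too complicated to list here; in essence it just builds the RTSP request string and sends it over the TCP socket.

Now let's see how the response to DESCRIBE is handled. In theory a MediaSession should be created from the media information; let's check whether that is the case: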

- void continueAfterDESCRIBE(RTSPClient*, int resultCode, char* resultString)

- {

- char* sdpDescription = resultString;

- // Create the MediaSession from the SDP.

- // Create a media session object from this SDP description:

- session = MediaSession::createNew(*env, sdpDescription);

- delete[] sdpDescription;

- // Then, setup the "RTPSource"s for the session:

- MediaSubsessionIterator iter(*session);

- MediaSubsession *subsession;

- Boolean madeProgress = False;

- char const* singleMediumToTest = singleMedium;

- // Loop over all MediaSubsessions and set the parameters of each one's RTPSource

- while ((subsession = iter.next()) != NULL) {

- // Initialize the subsession; this creates the RTP/RTCP sockets and the RTPSource.

- if (subsession->initiate(simpleRTPoffsetArg)) {

- madeProgress = True;

- if (subsession->rtpSource() != NULL) {

- // Because we're saving the incoming data, rather than playing

- // it in real time, allow an especially large time threshold

- // (1 second) for reordering misordered incoming packets:

- unsigned const thresh = 1000000; // 1 second

- subsession->rtpSource()->setPacketReorderingThresholdTime(thresh);

- // Set the RTP source's OS socket buffer size as appropriate - either if we were explicitly asked (using -B),

- // or if the desired FileSink buffer size happens to be larger than the current OS socket buffer size.