Hive JOIN and EXPLAIN

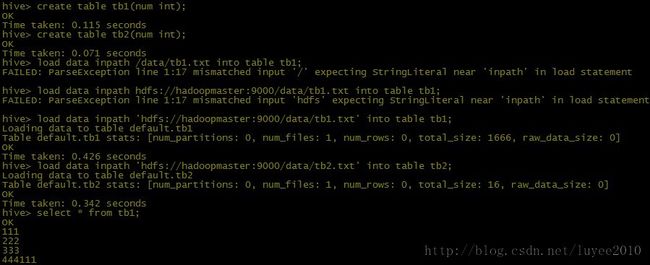

Data:

1,join

2,left outer join

select * from tb1 left outer join tb2 on tb1.num=tb2.num;

hive> select * from tb1 left outer join tb2 on tb1.num=tb2.num

> ;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201307162036_0003, Tracking URL = http://hadoopmaster:50030/jobdetails.jsp?jobid=job_201307162036_0003

Kill Command = /home/hadoop/bigdata/hadoop-1.0.4/libexec/../bin/hadoop job -kill job_201307162036_0003

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2013-07-17 19:30:36,174 Stage-1 map = 0%, reduce = 0%

2013-07-17 19:30:42,199 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 19:30:43,208 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:44,218 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:45,226 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:46,238 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:47,247 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:48,253 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:49,264 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:50,272 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec

2013-07-17 19:30:51,280 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.58 sec

2013-07-17 19:30:52,288 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.58 sec

2013-07-17 19:30:53,300 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.58 sec

2013-07-17 19:30:54,305 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:30:55,313 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:30:56,323 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:30:57,332 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:30:58,341 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:30:59,349 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

2013-07-17 19:31:00,361 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.09 sec

MapReduce Total cumulative CPU time: 3 seconds 90 msec

Ended Job = job_201307162036_0003

MapReduce Jobs Launched:

Job 0: Map: 2 Reduce: 1 Cumulative CPU: 3.09 sec HDFS Read: 452 HDFS Write: 31 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 90 msec

OK

111 111

222 222

333 333

444 NULL

Time taken: 32.755 seconds
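The four result rows above can be reproduced outside Hive with a small Python sketch. The table contents are read off the query outputs in this post (tb1 holds 111, 222, 333, 444; tb2 holds 111, 222, 333, 555). The function name `left_outer_join` is only illustrative, and `None` stands in for Hive's NULL.

```python
def left_outer_join(left, right):
    """Keep every left row; pair it with each matching right row, or with None."""
    rows = []
    for l in left:
        matches = [r for r in right if r == l]
        if matches:
            rows.extend((l, r) for r in matches)
        else:
            rows.append((l, None))  # no match: the right side becomes NULL
    return rows

tb1 = [111, 222, 333, 444]  # assumed contents, taken from the outputs in this post
tb2 = [111, 222, 333, 555]
result = left_outer_join(tb1, tb2)
for row in result:
    print(row)
```

The last row printed is `(444, None)`, which corresponds to the `444 NULL` line in the Hive output.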

3,right outer join

hive> select * from tb1 right outer join tb2 on tb1.num=tb2.num

> ;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201307162036_0004, Tracking URL = http://hadoopmaster:50030/jobdetails.jsp?jobid=job_201307162036_0004

Kill Command = /home/hadoop/bigdata/hadoop-1.0.4/libexec/../bin/hadoop job -kill job_201307162036_0004

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2013-07-17 19:31:44,850 Stage-1 map = 0%, reduce = 0%

2013-07-17 19:31:50,873 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.62 sec

2013-07-17 19:31:51,885 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:52,898 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:53,906 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:54,914 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:55,923 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:56,934 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:57,939 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:58,946 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.64 sec

2013-07-17 19:31:59,955 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.64 sec

2013-07-17 19:32:00,963 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.64 sec

2013-07-17 19:32:01,972 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 1.64 sec

2013-07-17 19:32:02,979 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:03,986 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:04,996 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:06,004 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:07,015 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:08,025 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

2013-07-17 19:32:09,034 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.19 sec

MapReduce Total cumulative CPU time: 3 seconds 190 msec

Ended Job = job_201307162036_0004

MapReduce Jobs Launched:

Job 0: Map: 2 Reduce: 1 Cumulative CPU: 3.19 sec HDFS Read: 452 HDFS Write: 31 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 190 msec

OK

111 111

222 222

333 333

NULL 555

Time taken: 32.695 seconds
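A right outer join mirrors the left one: every tb2 row is kept, and the tb1 side is NULL when there is no match. A minimal Python sketch, assuming the same table contents as the outputs in this post; `right_outer_join` is a hypothetical helper name, not a Hive API.

```python
def right_outer_join(left, right):
    """Keep every right row; pair it with each matching left row, or with None."""
    rows = []
    for r in right:
        matches = [l for l in left if l == r]
        if matches:
            rows.extend((l, r) for l in matches)
        else:
            rows.append((None, r))  # no match: the left side becomes NULL
    return rows

tb1 = [111, 222, 333, 444]  # assumed contents, taken from the outputs in this post
tb2 = [111, 222, 333, 555]
result = right_outer_join(tb1, tb2)
for row in result:
    print(row)
```

Here 444 from tb1 disappears and 555 from tb2 shows up as `(None, 555)`, matching `NULL 555` above.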

4,full outer join

hive> select * from tb1 full outer join tb2 on tb1.num=tb2.num

> ;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201307162036_0005, Tracking URL = http://hadoopmaster:50030/jobdetails.jsp?jobid=job_201307162036_0005

Kill Command = /home/hadoop/bigdata/hadoop-1.0.4/libexec/../bin/hadoop job -kill job_201307162036_0005

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2013-07-17 19:32:49,596 Stage-1 map = 0%, reduce = 0%

2013-07-17 19:32:55,617 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.58 sec

2013-07-17 19:32:56,624 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.58 sec

2013-07-17 19:32:57,631 Stage-1 map = 50%, reduce = 0%, Cumulative CPU 0.58 sec

2013-07-17 19:32:58,641 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:32:59,648 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:33:00,654 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:33:01,662 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:33:02,670 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:33:03,679 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.08 sec

2013-07-17 19:33:04,688 Stage-1 map = 100%, reduce = 17%, Cumulative CPU 2.08 sec

2013-07-17 19:33:05,697 Stage-1 map = 100%, reduce = 17%, Cumulative CPU 2.08 sec

2013-07-17 19:33:06,706 Stage-1 map = 100%, reduce = 17%, Cumulative CPU 2.08 sec

2013-07-17 19:33:07,711 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:08,716 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:09,725 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:10,735 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:11,745 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:12,754 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

2013-07-17 19:33:13,763 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 3.8 sec

MapReduce Total cumulative CPU time: 3 seconds 800 msec

Ended Job = job_201307162036_0005

MapReduce Jobs Launched:

Job 0: Map: 2 Reduce: 1 Cumulative CPU: 3.8 sec HDFS Read: 452 HDFS Write: 38 SUCCESS

Total MapReduce CPU Time Spent: 3 seconds 800 msec

OK

111 111

222 222

333 333

444 NULL

NULL 555

Time taken: 32.671 seconds
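The full outer join returns the union of the two previous results: matched pairs, unmatched tb1 rows, and unmatched tb2 rows. The sketch below is one simple way to express that in Python, again assuming the table contents seen in this post; `full_outer_join` is an illustrative name, and the row order is a property of this sketch, not a guarantee made by Hive.

```python
def full_outer_join(left, right):
    """Matched pairs, plus unmatched rows from both sides padded with None."""
    rows = []
    matched_right = set()  # indexes of right rows that found a partner
    for l in left:
        hit = False
        for i, r in enumerate(right):
            if l == r:
                rows.append((l, r))
                matched_right.add(i)
                hit = True
        if not hit:
            rows.append((l, None))
    # Right rows that never matched come out with a NULL left side.
    rows.extend((None, r) for i, r in enumerate(right) if i not in matched_right)
    return rows

tb1 = [111, 222, 333, 444]  # assumed contents, taken from the outputs in this post
tb2 = [111, 222, 333, 555]
result = full_outer_join(tb1, tb2)
for row in result:
    print(row)
```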

5,left semi join

select * from tb1 left semi join tb2 on tb1.num=tb2.num;

hive> select * from tb1 left semi join tb2 on tb1.num=tb2.num;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_201307162036_0006, Tracking URL = http://hadoopmaster:50030/jobdetails.jsp?jobid=job_201307162036_0006

Kill Command = /home/hadoop/bigdata/hadoop-1.0.4/libexec/../bin/hadoop job -kill job_201307162036_0006

Hadoop job information for Stage-1: number of mappers: 2; number of reducers: 1

2013-07-17 19:41:56,040 Stage-1 map = 0%, reduce = 0%

2013-07-17 19:42:02,465 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:03,479 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:04,491 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:05,504 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:06,514 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:07,521 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:08,528 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:09,537 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:10,546 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 4.3 sec

2013-07-17 19:42:11,552 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 4.3 sec

2013-07-17 19:42:12,560 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 4.3 sec

2013-07-17 19:42:13,571 Stage-1 map = 100%, reduce = 33%, Cumulative CPU 4.3 sec

2013-07-17 19:42:14,586 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:15,595 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:16,605 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:17,615 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:18,626 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:19,635 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

2013-07-17 19:42:20,645 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 5.83 sec

MapReduce Total cumulative CPU time: 5 seconds 830 msec

Ended Job = job_201307162036_0006

MapReduce Jobs Launched:

Job 0: Map: 2 Reduce: 1 Cumulative CPU: 5.83 sec HDFS Read: 452 HDFS Write: 12 SUCCESS

Total MapReduce CPU Time Spent: 5 seconds 830 msec

OK

111

222

333

Time taken: 34.525 seconds
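Unlike the outer joins, the left semi join returns only tb1's column, and only for rows whose key exists in tb2; a tb1 row is emitted at most once no matter how many tb2 rows match it. A minimal Python sketch of that behaviour, assuming the table contents seen in this post (`left_semi_join` is an illustrative name):

```python
def left_semi_join(left, right):
    """Return left rows whose key appears in right; right columns are never output."""
    right_keys = set(right)  # existence is all that matters, so duplicates collapse
    return [l for l in left if l in right_keys]

tb1 = [111, 222, 333, 444]  # assumed contents, taken from the outputs in this post
tb2 = [111, 222, 333, 555]
result = left_semi_join(tb1, tb2)
print(result)
```

Passing a right side with repeated keys, for example `left_semi_join([111], [111, 111])`, still yields a single `111`, which is the main difference from an inner join.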

-------------------------------------------EXPLAIN-------------------------------------------------------------------------------------------

1,EXPLAIN:

hive> select *from tb1;

OK

111

222

333

444

Time taken: 0.083 seconds

hive> select *from tb1 where num=333;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_201307162036_0030, Tracking URL = http://hadoopmaster:50030/jobdetails.jsp?jobid=job_201307162036_0030

Kill Command = /home/hadoop/bigdata/hadoop-1.0.4/libexec/../bin/hadoop job -kill job_201307162036_0030

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2013-07-17 21:40:37,868 Stage-1 map = 0%, reduce = 0%

2013-07-17 21:40:43,898 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:44,904 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:45,911 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:46,915 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:47,921 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:48,929 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 0.68 sec

2013-07-17 21:40:49,936 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 0.68 sec

MapReduce Total cumulative CPU time: 680 msec

Ended Job = job_201307162036_0030

MapReduce Jobs Launched:

Job 0: Map: 1 Cumulative CPU: 0.68 sec HDFS Read: 226 HDFS Write: 4 SUCCESS

Total MapReduce CPU Time Spent: 680 msec

OK

333

Time taken: 20.543 seconds

hive> explain select *from tb1 where num=333;

OK

ABSTRACT SYNTAX TREE:

(TOK_QUERY (TOK_FROM (TOK_TABREF (TOK_TABNAME tb1))) (TOK_INSERT (TOK_DESTINATION (TOK_DIR TOK_TMP_FILE)) (TOK_SELECT (TOK_SELEXPR TOK_ALLCOLREF)) (TOK_WHERE (= (TOK_TABLE_OR_COL num) 333))))

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-1

Map Reduce

Alias -> Map Operator Tree:

tb1

TableScan

alias: tb1

Filter Operator

predicate:

expr: (num = 333)

type: boolean

Select Operator

expressions:

expr: num

type: int

outputColumnNames: _col0

File Output Operator

compressed: false

GlobalTableId: 0

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Stage: Stage-0

Fetch Operator

limit: -1

Time taken: 0.072 seconds
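The plan above is a map-only chain: TableScan feeds a Filter Operator, which feeds a Select Operator, which feeds the File Output Operator. As a rough mental model (not Hive's actual implementation), the chain can be sketched with Python generators. The function names are invented for illustration; the table contents and the `_col0` output name come from the outputs above.

```python
def table_scan(table):
    """TableScan: yield every row of the table."""
    for row in table:
        yield row

def filter_op(rows, predicate):
    """Filter Operator: pass on only the rows for which the predicate holds."""
    return (row for row in rows if predicate(row))

def select_op(rows, column, output_name):
    """Select Operator: project one column under a new output column name."""
    return ({output_name: row[column]} for row in rows)

tb1 = [{"num": n} for n in (111, 222, 333, 444)]

# predicate: (num = 333); expressions: num; outputColumnNames: _col0
plan = select_op(
    filter_op(table_scan(tb1), lambda row: row["num"] == 333),
    "num",
    "_col0",
)
result = list(plan)  # stands in for the File Output Operator
print(result)  # [{'_col0': 333}]
```

Each row flows through the whole chain before the next one is read, which is why no reduce phase is needed for this query.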

-------------------------------------

hive> explain select * from tb1 left semi join tb2 on tb1.num=tb2.num ;

OK

ABSTRACT SYNTAX TREE:

(TOK_QUERY (TOK_FROM (TOK_LEFTSEMIJOIN (TOK_TABREF (TOK_TABNAME tb1)) (TOK_TABREF (TOK_TABNAME tb2)) (= (. (TOK_TABLE_OR_COL tb1) num) (. (TOK_TABLE_OR_COL tb2) num)))) (TOK_INSERT (TOK_DESTINATION (TOK_DIR TOK_TMP_FILE)) (TOK_SELECT (TOK_SELEXPR TOK_ALLCOLREF))))

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-1

Map Reduce

Alias -> Map Operator Tree:

tb1

TableScan

alias: tb1

Reduce Output Operator

key expressions:

expr: num

type: int

sort order: +

Map-reduce partition columns:

expr: num

type: int

tag: 0

value expressions:

expr: num

type: int

tb2

TableScan

alias: tb2

Select Operator

expressions:

expr: num

type: int

outputColumnNames: num

Group By Operator

bucketGroup: false

keys:

expr: num

type: int

mode: hash

outputColumnNames: _col0

Reduce Output Operator

key expressions:

expr: _col0

type: int

sort order: +

Map-reduce partition columns:

expr: _col0

type: int

tag: 1

Reduce Operator Tree:

Join Operator

condition map:

Left Semi Join 0 to 1

condition expressions:

0 {VALUE._col0}

1

handleSkewJoin: false

outputColumnNames: _col0

Select Operator

expressions:

expr: _col0

type: int

outputColumnNames: _col0

File Output Operator

compressed: false

GlobalTableId: 0

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

Stage: Stage-0

Fetch Operator

limit: -1

Time taken: 2.215 seconds
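Reading the plan above: the tb1 mapper emits each row keyed by `num` with tag 0, the tb2 mapper first collapses its keys with a hash-mode Group By and emits them with tag 1 and no value, and the reducer applies `Left Semi Join 0 to 1` per key. The sketch below imitates that flow in plain Python under the assumption of one mapper per table and a single reducer. All function names are hypothetical, and the sort stands in for the MapReduce shuffle.

```python
from itertools import groupby

def map_tb1(rows):
    # Reduce Output Operator: key = num, tag = 0, value = num
    return [(num, 0, num) for num in rows]

def map_tb2(rows):
    # Select + Group By (mode: hash): each distinct key once, tag = 1, no value
    return [(key, 1, None) for key in set(rows)]

def reduce_left_semi(shuffled):
    """For each key, emit the tag-0 values only if at least one tag-1 record exists."""
    out = []
    for _, group in groupby(shuffled, key=lambda rec: rec[0]):
        records = list(group)
        if any(tag == 1 for _, tag, _ in records):
            out.extend(value for _, tag, value in records if tag == 0)
    return out

tb1 = [111, 222, 333, 444]  # assumed contents, taken from the outputs in this post
tb2 = [111, 222, 333, 555]

# Shuffle: bring records with the same key together, ordered by key and tag.
shuffled = sorted(map_tb1(tb1) + map_tb2(tb2), key=lambda rec: (rec[0], rec[1]))
result = reduce_left_semi(shuffled)
print(result)
```

Keys 444 (tag 0 only) and 555 (tag 1 only) produce no output, which matches the three rows returned by the query earlier.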


2,EXPLAIN EXTENDED:

hive> explain extended select * from tb1 left semi join tb2 on tb1.num=tb2.num limit 2;

OK

ABSTRACT SYNTAX TREE:

(TOK_QUERY (TOK_FROM (TOK_LEFTSEMIJOIN (TOK_TABREF (TOK_TABNAME tb1)) (TOK_TABREF (TOK_TABNAME tb2)) (= (. (TOK_TABLE_OR_COL tb1) num) (. (TOK_TABLE_OR_COL tb2) num)))) (TOK_INSERT (TOK_DESTINATION (TOK_DIR TOK_TMP_FILE)) (TOK_SELECT (TOK_SELEXPR TOK_ALLCOLREF)) (TOK_LIMIT 2)))

STAGE DEPENDENCIES:

Stage-1 is a root stage

Stage-0 is a root stage

STAGE PLANS:

Stage: Stage-1

Map Reduce

Alias -> Map Operator Tree:

tb1

TableScan

alias: tb1

GatherStats: false

Reduce Output Operator

key expressions:

expr: num

type: int

sort order: +

Map-reduce partition columns:

expr: num

type: int

tag: 0

value expressions:

expr: num

type: int

tb2

TableScan

alias: tb2

GatherStats: false

Select Operator

expressions:

expr: num

type: int

outputColumnNames: num

Group By Operator

bucketGroup: false

keys:

expr: num

type: int

mode: hash

outputColumnNames: _col0

Reduce Output Operator

key expressions:

expr: _col0

type: int

sort order: +

Map-reduce partition columns:

expr: _col0

type: int

tag: 1

Needs Tagging: true

Path -> Alias:

hdfs://hadoopmaster:9000/user/hive/warehouse/tb1 [tb1]

hdfs://hadoopmaster:9000/user/hive/warehouse/tb2 [tb2]

Path -> Partition:

hdfs://hadoopmaster:9000/user/hive/warehouse/tb1

Partition

base file name: tb1

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

bucket_count -1

columns num

columns.types int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://hadoopmaster:9000/user/hive/warehouse/tb1

name default.tb1

numFiles 1

numPartitions 0

numRows 0

rawDataSize 0

serialization.ddl struct tb1 { i32 num}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 16

transient_lastDdlTime 1374060092

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

bucket_count -1

columns num

columns.types int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://hadoopmaster:9000/user/hive/warehouse/tb1

name default.tb1

numFiles 1

numPartitions 0

numRows 0

rawDataSize 0

serialization.ddl struct tb1 { i32 num}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 16

transient_lastDdlTime 1374060092

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.tb1

name: default.tb1

hdfs://hadoopmaster:9000/user/hive/warehouse/tb2

Partition

base file name: tb2

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

bucket_count -1

columns num

columns.types int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://hadoopmaster:9000/user/hive/warehouse/tb2

name default.tb2

numFiles 1

numPartitions 0

numRows 0

rawDataSize 0

serialization.ddl struct tb2 { i32 num}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 16

transient_lastDdlTime 1374059714

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

bucket_count -1

columns num

columns.types int

file.inputformat org.apache.hadoop.mapred.TextInputFormat

file.outputformat org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

location hdfs://hadoopmaster:9000/user/hive/warehouse/tb2

name default.tb2

numFiles 1

numPartitions 0

numRows 0

rawDataSize 0

serialization.ddl struct tb2 { i32 num}

serialization.format 1

serialization.lib org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

totalSize 16

transient_lastDdlTime 1374059714

serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe

name: default.tb2

name: default.tb2

Reduce Operator Tree:

Join Operator

condition map:

Left Semi Join 0 to 1

condition expressions:

0 {VALUE._col0}

1

handleSkewJoin: false

outputColumnNames: _col0

Select Operator

expressions:

expr: _col0

type: int

outputColumnNames: _col0

Limit

File Output Operator

compressed: false

GlobalTableId: 0

directory: hdfs://hadoopmaster:9000/tmp/hive-hadoop/hive_2013-07-17_20-19-28_883_5816218321243775043/-ext-10001

NumFilesPerFileSink: 1

Stats Publishing Key Prefix: hdfs://hadoopmaster:9000/tmp/hive-hadoop/hive_2013-07-17_20-19-28_883_5816218321243775043/-ext-10001/

table:

input format: org.apache.hadoop.mapred.TextInputFormat

output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat

properties:

columns _col0

columns.types int

escape.delim \

serialization.format 1

TotalFiles: 1

GatherStats: false

MultiFileSpray: false

Truncated Path -> Alias:

/tb1 [tb1]

/tb2 [tb2]

Stage: Stage-0

Fetch Operator

limit: 2

Time taken: 0.138 seconds
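Compared with the plain EXPLAIN, the extended plan for the `limit 2` query shows a Limit step in the reduce operator tree and `limit: 2` on the Fetch Operator. A small Python sketch of that double cap, assuming a single reducer as in the runs above; `limit_op` is an illustrative helper. Which two rows Hive actually returns is not shown in this post, so the sketch simply takes the first two in the order printed earlier.

```python
from itertools import islice

def limit_op(rows, n):
    """Limit: pass through at most n rows, then stop reading."""
    return islice(rows, n)

reducer_rows = [111, 222, 333]  # semi join rows, in the order shown earlier

limited = list(limit_op(reducer_rows, 2))  # Limit in the reduce operator tree
fetched = list(limit_op(limited, 2))       # Fetch Operator, limit: 2
print(fetched)
```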