Kubernetes Network Configuration Options

1. Direct routing

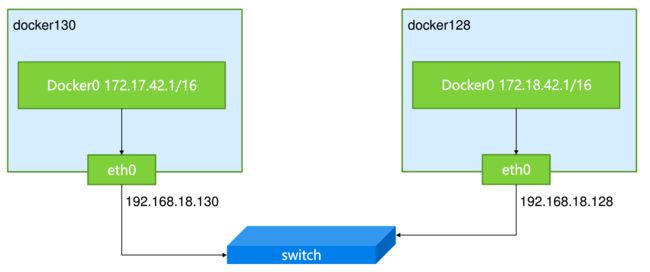

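By adding static routes on each Node to the docker0 subnets of the other Nodes, the docker0 bridges created by the Docker daemons on different physical servers can reach one another.

Note: the docker0 addresses on two Nodes must not be the same. Use the Docker daemon's --bip option to change the bridge IP address.

For example:

The docker0 bridge hosting Pod1 uses the subnet 10.1.10.0/24 and its Node address is 192.168.1.128; the docker0 bridge hosting Pod2 uses the subnet 10.1.20.0/24 and its Node address is 192.168.1.129.

On Node1, use the route add command to add a static route to docker0 on Node2: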

route add -net 10.1.20.0 netmask 255.255.255.0 gw 192.168.1.129

On Node2, add a static route to docker0 on Node1:

route add -net 10.1.10.0 netmask 255.255.255.0 gw 192.168.1.128

On Node1, use ping to verify connectivity to docker0 on Node2. Here 10.1.20.1 is the IP address of the docker0 bridge itself on Node2.

# ping 10.1.20.1
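With more than two Nodes, each Node needs one such rule per peer. The following is a minimal sketch of generating those rules from a node table. The `NODES` table and the `gen_routes` function are hypothetical names introduced for illustration, and the /24 netmask is an assumption carried over from the example above. The function only prints the commands; it does not change the routing table.

```shell
#!/bin/sh
# Hypothetical node table: "<node IP> <docker0 network>" per line,
# filled with the two nodes from the example above.
NODES='192.168.1.128 10.1.10.0
192.168.1.129 10.1.20.0'

# Print the route commands to run on the node whose IP is $1.
# Nothing is executed here; review the output before applying it as root.
gen_routes() (
  self=$1
  echo "$NODES" | while read -r node net; do
    if [ "$node" = "$self" ]; then
      continue            # no route needed to the local docker0
    fi
    echo "route add -net $net netmask 255.255.255.0 gw $node"
  done
)

gen_routes 192.168.1.128
# prints: route add -net 10.1.20.0 netmask 255.255.255.0 gw 192.168.1.129
```

Keeping the table in one place means a new Node only has to be added once, and every Node can regenerate its own rule set from it.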

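A cluster usually has many machines, so maintaining these rules by hand does not scale. Quagga can be used to add the routes dynamically. Home page: http://www.nongnu.org/quagga/

Install and start Quagga on every server. Alternatively, run it from a Quagga container available on the Internet, using the image index.alauda.cn/georce/router. Download this Docker image on each Node.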

# docker pull index.alauda.cn/georce/router

Before running the Quagga router, make sure that the docker0 subnets on the Nodes do not overlap with one another or with the network of the physical machines.

Node1: # ifconfig docker0 10.1.10.1/24

Node2: # ifconfig docker0 10.1.20.1/24

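Node3: # ifconfig docker0 10.1.30.1/24

Then start the Quagga container on each Node. Note that Quagga must run in privileged mode (--privileged) and with --net=host, which means it uses the physical machine's network directly.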

# docker run -itd --name=router --privileged --net=host kubernetes-master:5000/router
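The non-overlap requirement stated before the ifconfig commands can be checked mechanically. The sketch below compares every pair of CIDR blocks using integer arithmetic. `ip_to_int`, `cidr_range`, `cidr_overlap` and `check_subnets` are hypothetical helper names, and 192.168.123.0/24 is assumed to be the physical network of this three-Node example.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1               # split the address into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print "first last" (as integers) for a CIDR such as 10.1.10.1/24.
cidr_range() (
  len=${1#*/}
  base=$(ip_to_int "${1%/*}")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  echo "$first $(( first | (mask ^ 0xFFFFFFFF) ))"
)

# Succeed if the two CIDR blocks share at least one address.
cidr_overlap() (
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
)

# Report every overlapping pair; fail if any pair overlaps.
check_subnets() (
  rc=0
  while [ $# -gt 1 ]; do
    first=$1; shift
    for other in "$@"; do
      if cidr_overlap "$first" "$other"; then
        echo "overlap: $first $other"
        rc=1
      fi
    done
  done
  exit "$rc"
)

check_subnets 10.1.10.1/24 10.1.20.1/24 10.1.30.1/24 192.168.123.0/24 \
  && echo "no overlap"
```

Running a check like this before starting the routers catches an address plan mistake early, which is easier than debugging routes that silently shadow one another.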

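Once the routers are running, the routing table on Node1 contains entries for the docker0 subnets of the other Nodes:

Node1: # route -n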

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.123.250 0.0.0.0 UG 100 0 0 eno16777736

10.1.10.0 0.0.0.0 255.255.255.0 U 0 0 0 docker0

10.1.20.0 192.168.123.203 255.255.255.0 UG 20 0 0 eno16777736

10.1.30.0 192.168.123.204 255.255.255.0 UG 20 0 0 eno16777736

192.168.123.0 0.0.0.0 255.255.255.0 U 100 0 0 eno16777736

2. Using the flannel overlay network

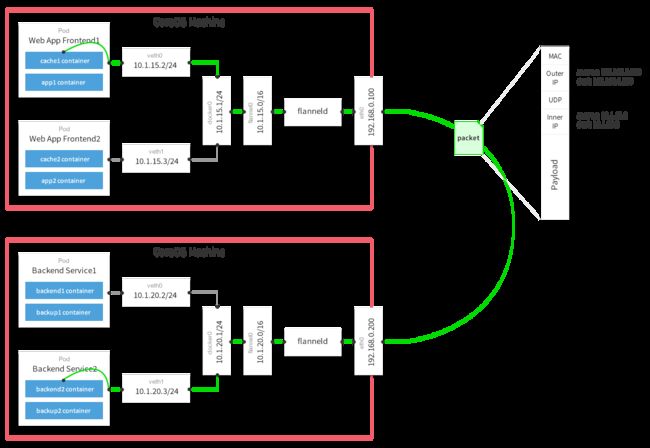

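flannel uses an overlay network model to connect the container networks across Nodes.

(1) Install etcd

flannel uses etcd as its datastore, so etcd must be installed first.

(2) Install flannel

Download the latest stable release from https://github.com/coreos/flannel/releases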

# tar zxf flannel-0.5.5-linux-amd64.tar.gz # extract the archive

# cp flannel-0.5.5/* /usr/bin/ # copy the executables to a directory on the PATH

(3) Configure flannel

Use systemd to manage the flannel service.

[root@docker2 ~]# cat /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/etc/sysconfig/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/bin/flanneld -etcd-endpoints=${FLANNEL_ETCD} $FLANNEL_OPTIONS

[Install]

RequiredBy=docker.service

WantedBy=multi-user.target

Set the etcd address:

[root@docker2 ~]# cat /etc/sysconfig/flanneld

FLANNEL_ETCD="http://kubernetes-master:2379"

Add a network configuration record. flannel uses it to allocate **the virtual IP address range for each Docker host**.

# etcdctl set /coreos.com/network/config '{ "Network": "10.1.0.0/16" }'
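The size of this network bounds how many Nodes can receive a subnet. As a small worked example, the sketch below counts how many per-host subnets fit into the configured range. `subnet_count` is a hypothetical helper, and the /24 per-host subnet length is an assumption taken from the subnet keys that appear in etcd later in this section (for example 10.1.7.0-24).

```shell
#!/bin/sh
# Number of per-host subnets of prefix length $2 that fit in network $1.
subnet_count() (
  net_len=${1#*/}          # prefix length of the whole flannel network
  echo $(( 1 << ($2 - net_len) ))
)

subnet_count 10.1.0.0/16 24   # prints 256
```

A /16 network therefore leaves room for 256 /24 blocks, which is the upper bound on the number of hosts under that assumption.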

(4) flannel takes over the addressing of the docker0 bridge, so if the Docker service is already running, stop it:

# systemctl stop docker

(5) Write a flannel startup script and run it at boot

[root@docker2 ~]# cat /etc/init.d/start_flannel.sh

#!/bin/bash

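systemctl stop docker # stop the Docker service

systemctl restart flanneld # start the flannel service

mk-docker-opts.sh -i # generate the environment variables

source /run/flannel/subnet.env # load the environment variables

ifconfig docker0 ${FLANNEL_SUBNET} # set the docker0 IP address

systemctl start docker # start the Docker service

When it finishes, confirm that the IP address of the docker0 interface belongs to the flannel0 subnet: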

[root@docker2 ~]# ip a

......

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:ba:02:60:d5 brd ff:ff:ff:ff:ff:ff

inet 10.1.7.1/24 brd 10.1.7.255 scope global docker0

valid_lft forever preferred_lft forever

4: flannel0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1472 qdisc pfifo_fast state UNKNOWN qlen 500

link/none

inet 10.1.7.0/16 scope global flannel0

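valid_lft forever preferred_lft forever

Pay attention to the docker0 IP address. In the output below, docker0 is 172.17.42.1/16, which does not belong to the flannel0 network: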

3: flannel0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1472 qdisc pfifo_fast state UNKNOWN qlen 500

link/none

inet 10.1.34.0/16 scope global flannel0

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:4f:f4:05:07 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/16 scope global docker0

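valid_lft forever preferred_lft forever

If docker0 and flannel0 end up with inconsistent IP addresses after a reboot, the likely cause is that the address was configured before the Docker service had finished starting, so the configuration failed. The script contents must run only after Docker has started. It is also best to restart kubelet and kube-proxy so that the master recognizes the node.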
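One way to make the ordering explicit is to poll for the docker0 interface before configuring it. The following is only a sketch under stated assumptions: `wait_until` is a hypothetical helper, not part of Docker or flannel, and the 30-second timeout in the commented example is an arbitrary choice.

```shell
#!/bin/sh
# Retry a command once per second until it succeeds or $1 attempts fail.
wait_until() {
  attempts=$1
  shift
  while ! "$@"; do
    attempts=$((attempts - 1))
    if [ "$attempts" -le 0 ]; then
      return 1            # give up: the condition never became true
    fi
    sleep 1
  done
  return 0
}

# Example (not run here): wait for docker0 to appear, then set its address.
# wait_until 30 test -e /sys/class/net/docker0 \
#   && ifconfig docker0 "${FLANNEL_SUBNET}"
```

The check relies on Linux exposing each network interface as a directory under /sys/class/net, so no extra tools are needed.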

The failure shows up in the system log:

# tail -f /var/log/messages

Feb 17 15:57:59 docker3 rc.local: ifconfig docker0 10.1.65.1/24

Feb 17 15:57:59 docker3 rc.local: SIOCSIFADDR: No such device

Feb 17 15:57:59 docker3 rc.local: docker0: ERROR while getting interface flags: No such device

Feb 17 15:57:59 docker3 rc.local: SIOCSIFNETMASK: No such device

Add a step that starts Docker at the beginning of the script:

# cat /etc/init.d/start_flannel.sh

#!/bin/bash

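systemctl start docker # start the Docker service

systemctl stop docker # stop the Docker service

systemctl restart flanneld # start the flannel service

mk-docker-opts.sh -i # generate the environment variables

source /run/flannel/subnet.env # load the environment variables

ifconfig docker0 ${FLANNEL_SUBNET} # set the docker0 IP address

systemctl restart docker kubelet kube-proxy # restart Docker, kubelet and kube-proxy

Use ping to verify that docker0 on each Node can reach docker0 on the others.

etcd also shows the mapping that flannel recorded between each flannel subnet and the IP address of the physical machine: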
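Besides ping, the condition that docker0 belongs to the flannel network can be checked directly from the addresses. The sketch below tests whether an address falls inside a CIDR block. `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the sample addresses are the ones shown in the `ip a` outputs earlier in this section.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() (
  IFS=.
  set -- $1               # split the address into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 (an optional /len suffix is ignored) lies in CIDR $2.
ip_in_cidr() (
  addr=$(ip_to_int "${1%/*}")
  net=$(ip_to_int "${2%/*}")
  len=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
)

# Working case: docker0 10.1.7.1/24 inside the flannel network 10.1.0.0/16.
ip_in_cidr 10.1.7.1/24 10.1.0.0/16 && echo "docker0 is inside the flannel network"

# Failure case: docker0 still on the Docker default 172.17.42.1/16.
ip_in_cidr 172.17.42.1/16 10.1.0.0/16 || echo "docker0 is outside the flannel network"
```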

[root@docker1 ~]# etcdctl ls /coreos.com/network/subnets

/coreos.com/network/subnets/10.1.7.0-24

/coreos.com/network/subnets/10.1.10.0-24
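The keys listed above encode each subnet as address, a hyphen, and the prefix length. For scripting it can be handy to turn a key back into ordinary CIDR notation. This is a minimal sketch using shell parameter expansion; `subnet_key_to_cidr` is a hypothetical helper name.

```shell
#!/bin/sh
# Convert an etcd subnet key such as .../subnets/10.1.7.0-24 to 10.1.7.0/24.
subnet_key_to_cidr() (
  name=${1##*/}            # strip the directory part: 10.1.7.0-24
  echo "${name%-*}/${name##*-}"
)

subnet_key_to_cidr /coreos.com/network/subnets/10.1.7.0-24   # prints 10.1.7.0/24
```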

[root@docker1 ~]# etcdctl get /coreos.com/network/subnets/10.1.7.0-24

{"PublicIP":"192.168.123.202"}

3. Using Open vSwitch

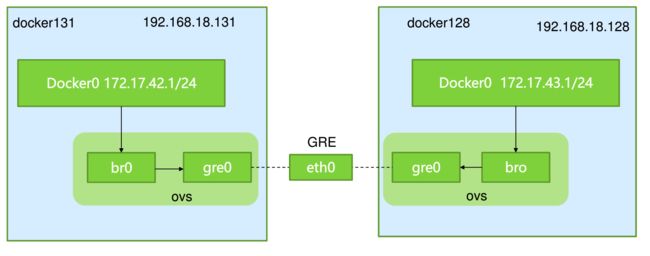

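First make sure that docker0 on node 192.168.18.128 uses the 172.17.43.0/24 subnet and that docker0 on 192.168.18.131 uses the 172.17.42.0/24 subnet. The corresponding setting is the Docker daemon's --bip option.

Alternatively, change the docker0 address temporarily with ifconfig docker0 172.17.42.1/24

(1) Install OVS on both Nodes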

# yum install openvswitch-2.4.0-1.x86_64.rpm

Disable SELinux, then reboot the machine after changing the configuration:

[root@docker2 ~]# cat /etc/selinux/config

SELINUX=disabled

# /etc/init.d/openvswitch restart # restart the service

# /etc/init.d/openvswitch status # check the status

Check the Open vSwitch logs:

# more /var/log/messages | grep openvs

Feb 5 00:16:55 docker2 openvswitch: /etc/openvswitch/conf.db does not exist ... (warning).

Feb 5 00:16:55 docker2 openvswitch: Creating empty database /etc/openvswitch/conf.db [ OK ]

Feb 5 00:16:55 docker2 openvswitch: Starting ovsdb-server [ OK ]

Feb 5 00:16:55 docker2 openvswitch: Configuring Open vSwitch system IDs [ OK ]

Feb 5 00:16:55 docker2 kernel: openvswitch: Open vSwitch switching datapath

Feb 5 00:16:55 docker2 openvswitch: Inserting openvswitch module [ OK ]

Feb 5 00:16:55 docker2 openvswitch: Starting ovs-vswitchd [ OK ]

Feb 5 00:16:55 docker2 openvswitch: Enabling remote OVSDB managers [ OK ]

Feb 5 00:19:24 docker2 kernel: openvswitch: netlink: Key attribute has unexpected length (type=21, length=4, expected=0).

Feb 5 00:19:24 docker2 NetworkManager[845]: <info> (ovs-system): new Generic device (driver: 'openvswitch' ifindex: 6)

Feb 5 00:19:24 docker2 NetworkManager[845]: <info> (br0): new Generic device (driver: 'openvswitch' ifindex: 7)

Install the bridge management tools:

# yum -y install bridge-utils

Configure the network

Add the OVS bridge:

# ovs-vsctl add-br br0

Create a GRE tunnel to the peer. remote_ip is the address of the peer's eth0 interface:

# ovs-vsctl add-port br0 gre1 -- set interface gre1 type=gre option:remote_ip=192.168.18.128

Attach br0 to the local docker0 so that container traffic flows through OVS into the tunnel:

# brctl addif docker0 br0

Bring up the br0 and docker0 bridges:

# ip link set dev br0 up

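# ip link set dev docker0 up

Flush the iptables rules that Docker installs for docker0 as well as the system's own rules; the latter include a rule that rejects ICMP packets at the firewall: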

# iptables -t nat -F; iptables -F

Add a route:

# ip route add 172.17.0.0/16 dev docker0
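The network steps above are the same on both Nodes except for the tunnel's remote_ip. As a sketch, they can be collected into one function that prints the commands for a given peer address. `ovs_gre_commands` is a hypothetical name, and the function deliberately prints rather than executes, since flushing iptables is disruptive and should be reviewed first.

```shell
#!/bin/sh
# Print the OVS/GRE setup commands for one node.
# $1 is the address of the peer node's physical interface.
ovs_gre_commands() {
  cat <<EOF
ovs-vsctl add-br br0
ovs-vsctl add-port br0 gre1 -- set interface gre1 type=gre option:remote_ip=$1
brctl addif docker0 br0
ip link set dev br0 up
ip link set dev docker0 up
iptables -t nat -F; iptables -F
ip route add 172.17.0.0/16 dev docker0
EOF
}

# On 192.168.18.131 the peer is 192.168.18.128, and vice versa.
ovs_gre_commands 192.168.18.128
```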

View the IP addresses on 192.168.18.131, including the docker0 address and other key information such as routes:

# ip addr

2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:77:1f:5d brd ff:ff:ff:ff:ff:ff

inet 192.168.18.131/24 brd 192.168.18.255 scope global eno16777736

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe77:1f5d/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP

link/ether 02:42:06:47:14:71 brd ff:ff:ff:ff:ff:ff

inet 172.17.42.1/24 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:6ff:fe47:1471/64 scope link

valid_lft forever preferred_lft forever

6: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN

link/ether 06:52:f3:b7:9a:86 brd ff:ff:ff:ff:ff:ff

7: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UNKNOWN

link/ether c2:a6:d8:d8:30:45 brd ff:ff:ff:ff:ff:ff

inet6 fe80::c0a6:d8ff:fed8:3045/64 scope link

valid_lft forever preferred_lft forever