Linux Kernel Analysis, Part 3: Using gdb to Trace the Kernel from start_kernel to the Start of the init Process

Author: Yao Kaijian (姚开健)

Original work. Please credit the source when reposting.

"Linux Kernel Analysis" MOOC course: http://mooc.study.163.com/course/USTC-1000029000

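The C part of the Linux kernel's startup (this article uses Linux-3.18.6 as the example) lives in main.c in the init directory of the source tree. After some assembly code has run (placing the kernel image in memory and decompressing it, setting up the C execution environment, and so on), control enters start_kernel, a function written in C. This is the boundary between the assembly code and the C code. The function is as follows: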

asmlinkage __visible void __init start_kernel(void)

501{

502 char *command_line;

503 char *after_dashes;

504

505 /*

506 * Need to run as early as possible, to initialize the

507 * lockdep hash:

508 */

509 lockdep_init();

510 set_task_stack_end_magic(&init_task);

511 smp_setup_processor_id();

512 debug_objects_early_init();

513

514 /*

515 * Set up the the initial canary ASAP:

516 */

517 boot_init_stack_canary();

518

519 cgroup_init_early();

520

521 local_irq_disable();

522 early_boot_irqs_disabled = true;

523

524/*

525 * Interrupts are still disabled. Do necessary setups, then

526 * enable them

527 */

528 boot_cpu_init();

529 page_address_init();

530 pr_notice("%s", linux_banner);

531 setup_arch(&command_line);

532 mm_init_cpumask(&init_mm);

533 setup_command_line(command_line);

534 setup_nr_cpu_ids();

535 setup_per_cpu_areas();

536 smp_prepare_boot_cpu(); /* arch-specific boot-cpu hooks */

537

538 build_all_zonelists(NULL, NULL);

539 page_alloc_init();

540

541 pr_notice("Kernel command line: %s\n", boot_command_line);

542 parse_early_param();

543 after_dashes = parse_args("Booting kernel",

544 static_command_line, __start___param,

545 __stop___param - __start___param,

546 -1, -1, &unknown_bootoption);

547 if (!IS_ERR_OR_NULL(after_dashes))

548 parse_args("Setting init args", after_dashes, NULL, 0, -1, -1,

549 set_init_arg);

550

551 jump_label_init();

552

553 /*

554 * These use large bootmem allocations and must precede

555 * kmem_cache_init()

556 */

557 setup_log_buf(0);

558 pidhash_init();

559 vfs_caches_init_early();

560 sort_main_extable();

561 trap_init();

562 mm_init();

563

564 /*

565 * Set up the scheduler prior starting any interrupts (such as the

566 * timer interrupt). Full topology setup happens at smp_init()

567 * time - but meanwhile we still have a functioning scheduler.

568 */

569 sched_init();

570 /*

571 * Disable preemption - early bootup scheduling is extremely

572 * fragile until we cpu_idle() for the first time.

573 */

574 preempt_disable();

575 if (WARN(!irqs_disabled(),

576 "Interrupts were enabled *very* early, fixing it\n"))

577 local_irq_disable();

578 idr_init_cache();

579 rcu_init();

580 context_tracking_init();

581 radix_tree_init();

582 /* init some links before init_ISA_irqs() */

583 early_irq_init();

584 init_IRQ();

585 tick_init();

586 rcu_init_nohz();

587 init_timers();

588 hrtimers_init();

589 softirq_init();

590 timekeeping_init();

591 time_init();

592 sched_clock_postinit();

593 perf_event_init();

594 profile_init();

595 call_function_init();

596 WARN(!irqs_disabled(), "Interrupts were enabled early\n");

597 early_boot_irqs_disabled = false;

598 local_irq_enable();

599

600 kmem_cache_init_late();

601

602 /*

603 * HACK ALERT! This is early. We're enabling the console before

604 * we've done PCI setups etc, and console_init() must be aware of

605 * this. But we do want output early, in case something goes wrong.

606 */

607 console_init();

608 if (panic_later)

609 panic("Too many boot %s vars at `%s'", panic_later,

610 panic_param);

611

612 lockdep_info();

613

614 /*

615 * Need to run this when irqs are enabled, because it wants

616 * to self-test [hard/soft]-irqs on/off lock inversion bugs

617 * too:

618 */

619 locking_selftest();

620

621#ifdef CONFIG_BLK_DEV_INITRD

622 if (initrd_start && !initrd_below_start_ok &&

623 page_to_pfn(virt_to_page((void *)initrd_start)) < min_low_pfn) {

624 pr_crit("initrd overwritten (0x%08lx < 0x%08lx) - disabling it.\n",

625 page_to_pfn(virt_to_page((void *)initrd_start)),

626 min_low_pfn);

627 initrd_start = 0;

628 }

629#endif

630 page_cgroup_init();

631 debug_objects_mem_init();

632 kmemleak_init();

633 setup_per_cpu_pageset();

634 numa_policy_init();

635 if (late_time_init)

636 late_time_init();

637 sched_clock_init();

638 calibrate_delay();

639 pidmap_init();

640 anon_vma_init();

641 acpi_early_init();

642#ifdef CONFIG_X86

643 if (efi_enabled(EFI_RUNTIME_SERVICES))

644 efi_enter_virtual_mode();

645#endif

646#ifdef CONFIG_X86_ESPFIX64

647 /* Should be run before the first non-init thread is created */

648 init_espfix_bsp();

649#endif

650 thread_info_cache_init();

651 cred_init();

652 fork_init(totalram_pages);

653 proc_caches_init();

654 buffer_init();

655 key_init();

656 security_init();

657 dbg_late_init();

658 vfs_caches_init(totalram_pages);

659 signals_init();

660 /* rootfs populating might need page-writeback */

661 page_writeback_init();

662 proc_root_init();

663 cgroup_init();

664 cpuset_init();

665 taskstats_init_early();

666 delayacct_init();

667

668 check_bugs();

669

670 sfi_init_late();

671

672 if (efi_enabled(EFI_RUNTIME_SERVICES)) {

673 efi_late_init();

674 efi_free_boot_services();

675 }

676

677 ftrace_init();

678

679 /* Do the rest non-__init'ed, we're now alive */

680 rest_init();

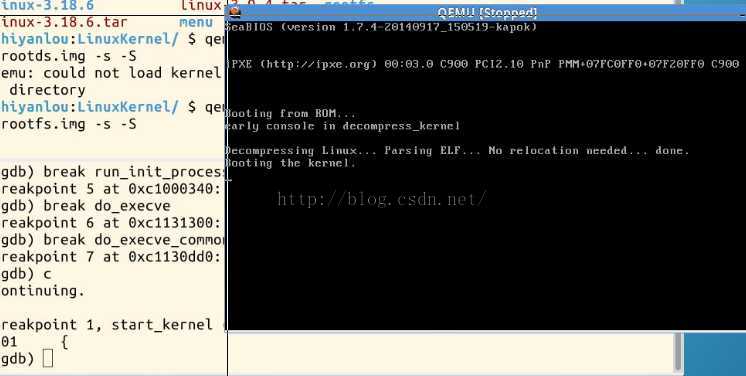

681}使用GDB设置断点start_kernel运行至该函数:

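As the code shows, once inside the function the kernel carries out a long series of initialization steps, i.e. a large number of init functions. Note that until start_kernel reaches its very end, the kernel is not yet working in terms of processes: it simply executes the early assembly code and then this function's initialization code. With the last call in start_kernel, rest_init(), the system gets its first process. Its pid is 0, so we will call it process 0. Process 0 is not produced by a fork call like an ordinary process. The kernel sets it up itself: its descriptor is the statically defined init_task (already referenced near the top of start_kernel), and its context is the flow of execution that runs from the initial assembly code through start_kernel to the rest_init call.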
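The difference between a statically set up process 0 and processes created at run time can be sketched in user-space C. This is only a toy model, not kernel code: `struct toy_task`, `toy_init_task`, `toy_pool` and `toy_kernel_thread` are hypothetical names invented for the illustration, and the real task descriptor and pid allocation are far more involved.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy task descriptor; the kernel's struct task_struct has many more fields. */
struct toy_task {
    int pid;
    char comm[16];
};

/* "Process 0": initialized at compile time, never created by a fork-like call. */
static struct toy_task toy_init_task = { .pid = 0, .comm = "swapper" };

#define TOY_MAX_TASKS 8
static struct toy_task toy_pool[TOY_MAX_TASKS];
static int toy_next_pid = 1;

/*
 * Hypothetical stand-in for a creation routine such as kernel_thread():
 * every call hands out the next free pid, so creation order decides
 * which task becomes pid 1.
 */
static struct toy_task *toy_kernel_thread(const char *name)
{
    assert(name != NULL);
    if (toy_next_pid > TOY_MAX_TASKS)
        return NULL;                      /* pool exhausted */

    struct toy_task *t = &toy_pool[toy_next_pid - 1];
    t->pid = toy_next_pid++;
    strncpy(t->comm, name, sizeof(t->comm) - 1);
    t->comm[sizeof(t->comm) - 1] = '\0';  /* always terminate the name */
    return t;
}
```

In this model, calling `toy_kernel_thread("kernel_init")` before `toy_kernel_thread("kthreadd")` gives the first task pid 1 and the second pid 2, while `toy_init_task` keeps pid 0 without ever passing through the creation routine.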

static noinline void __init_refok rest_init(void)

394{

395 int pid;

396

397 rcu_scheduler_starting();

398 /*

399 * We need to spawn init first so that it obtains pid 1, however

400 * the init task will end up wanting to create kthreads, which, if

401 * we schedule it before we create kthreadd, will OOPS.

402 */

403 kernel_thread(kernel_init, NULL, CLONE_FS);

404 numa_default_policy();

405 pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

406 rcu_read_lock();

407 kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);

408 rcu_read_unlock();

409 complete(&kthreadd_done);

410

411 /*

412 * The boot idle thread must execute schedule()

413 * at least once to get things moving:

414 */

415 init_idle_bootup_task(current);

416 schedule_preempt_disabled();

417 /* Call into cpu_idle with preempt disabled */

418 cpu_startup_entry(CPUHP_ONLINE);

419}

420

421/* Check for early params. */

422static int __init do_early_param(char *param, char *val, const char *unused)

423{

424 const struct obs_kernel_param *p;

425

426 for (p = __setup_start; p < __setup_end; p++) {

427 if ((p->early && parameq(param, p->str)) ||

428 (strcmp(param, "console") == 0 &&

429 strcmp(p->str, "earlycon") == 0)

430 ) {

431 if (p->setup_func(val) != 0)

432 pr_warn("Malformed early option '%s'\n", param);

433 }

434 }

435 /* We accept everything at this stage. */

436 return 0;

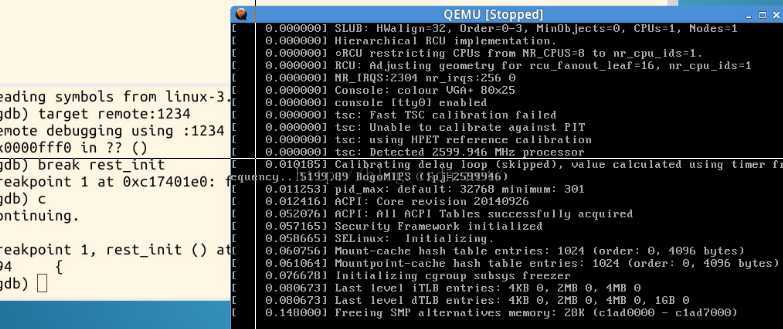

437}使用GDB设置断点rest_init并运行至此:

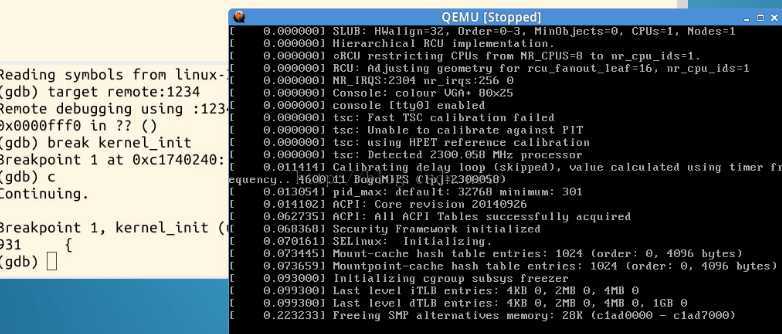

在执行到rest_init函数时,注意这行代码 kernel_thread( kernel_init, NULL, CLONE_FS);使用GDB设置断点kernel_init并运行至此:

它会产生第二个进程,pid为1,即1号进程。它是Linux内核建立进程概念后第一个通过kernel_thread,do_fork产生的进程,它在内核态执行,之后通过一系列系统调用来执行用户空间的程序/sbin/init等,如下所示:

    if (!try_to_run_init_process("/sbin/init") ||
        !try_to_run_init_process("/etc/init") ||
        !try_to_run_init_process("/bin/init") ||
        !try_to_run_init_process("/bin/sh"))
        return 0;

    static int try_to_run_init_process(const char *init_filename)
    {
        int ret;

        ret = run_init_process(init_filename);

        if (ret && ret != -ENOENT) {
            pr_err("Starting init: %s exists but couldn't execute it (error %d)\n",
                   init_filename, ret);
        }

        return ret;
    }
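The chain of `!try_to_run_init_process(...) ||` tests relies on short-circuit evaluation: candidates are tried in order, and the first one that returns 0 stops the chain. The user-space sketch below imitates that pattern under stated assumptions. `toy_run_init_process`, `toy_existing_path`, `toy_started` and `toy_start_init` are hypothetical names, and instead of executing anything the stand-in merely pretends that exactly one path exists.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>

/* Assumption for the sketch: exactly one candidate path "exists". */
static const char *toy_existing_path = "/bin/sh";
/* Records which candidate was "started"; NULL if none was. */
static const char *toy_started = NULL;

/*
 * Hypothetical stand-in for run_init_process(): returns 0 on success
 * and -ENOENT when the path is missing, like the return values the
 * kernel code above tests for.
 */
static int toy_run_init_process(const char *path)
{
    assert(path != NULL);
    if (strcmp(path, toy_existing_path) != 0)
        return -ENOENT;
    toy_started = path;
    return 0;
}

/* Same shape as the kernel snippet: the first success ends the chain. */
static int toy_start_init(void)
{
    if (!toy_run_init_process("/sbin/init") ||
        !toy_run_init_process("/etc/init") ||
        !toy_run_init_process("/bin/init") ||
        !toy_run_init_process("/bin/sh"))
        return 0;
    return -1;  /* toy error code: no candidate could be started */
}
```

With the default setting only the last candidate succeeds, so all four are tried. Pointing `toy_existing_path` at "/etc/init" would stop the chain after the second test.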

Setting a breakpoint at run_init_process and running to it gives the following:

<img src="http://img.blog.csdn.net/20160311170028195?watermark/2/text/aHR0cDovL2Jsb2cuY3Nkbi5uZXQv/font/5a6L5L2T/fontsize/400/fill/I0JBQkFCMA==/dissolve/70/gravity/Center" alt="" />

    static int run_init_process(const char *init_filename)
    {
        argv_init[0] = init_filename;
        return do_execve(getname_kernel(init_filename),
            (const char __user *const __user *)argv_init,
            (const char __user *const __user *)envp_init);
    }

Process 0 itself becomes the idle process. The groundwork for this was laid before process 1 existed: sched_init(), which start_kernel calls well ahead of rest_init, contains the following init_idle call:

        /*
         * Make us the idle thread. Technically, schedule() should not be
         * called from this thread, however somewhere below it might be,
         * but because we are the idle thread, we just pick up running again
         * when this runqueue becomes "idle".
         */
        init_idle(current, smp_processor_id());

Once process 1 has been created, rest_init ends by calling cpu_startup_entry, and process 0 carries on as the idle process. The init_idle function attaches the idle process to the CPU's run queue:

    void init_idle(struct task_struct *idle, int cpu)
    {
        struct rq *rq = cpu_rq(cpu);
        unsigned long flags;

        raw_spin_lock_irqsave(&rq->lock, flags);

        __sched_fork(0, idle);
        idle->state = TASK_RUNNING;
        idle->se.exec_start = sched_clock();

        do_set_cpus_allowed(idle, cpumask_of(cpu));
        /*
         * We're having a chicken and egg problem, even though we are
         * holding rq->lock, the cpu isn't yet set to this cpu so the
         * lockdep check in task_group() will fail.
         *
         * Similar case to sched_fork(). / Alternatively we could
         * use task_rq_lock() here and obtain the other rq->lock.
         *
         * Silence PROVE_RCU
         */
        rcu_read_lock();
        __set_task_cpu(idle, cpu);
        rcu_read_unlock();

        rq->curr = rq->idle = idle;
        idle->on_rq = TASK_ON_RQ_QUEUED;
    #if defined(CONFIG_SMP)
        idle->on_cpu = 1;
    #endif
        raw_spin_unlock_irqrestore(&rq->lock, flags);

        /* Set the preempt count _outside_ the spinlocks! */
        init_idle_preempt_count(idle, cpu);

        /*
         * The idle tasks have their own, simple scheduling class:
         */
        idle->sched_class = &idle_sched_class;
        ftrace_graph_init_idle_task(idle, cpu);
        vtime_init_idle(idle, cpu);
    #if defined(CONFIG_SMP)
        sprintf(idle->comm, "%s/%d", INIT_TASK_COMM, cpu);
    #endif
    }
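The key line in init_idle is `rq->curr = rq->idle = idle`: the run queue remembers one task as its idle task, and that task runs whenever nothing else is runnable. A minimal user-space sketch of this idea follows. It is a toy model, not the kernel scheduler: `struct toy_rq`, `toy_init_idle`, `toy_enqueue` and `toy_pick_next` are hypothetical names, and the "pick the first runnable task" policy is an assumption made purely for illustration.

```c
#include <assert.h>
#include <stddef.h>

struct toy_task {
    int pid;
    int runnable;   /* non-zero if the task wants the CPU */
};

#define TOY_RQ_CAP 4

/* Toy run queue: one idle task plus a small array of ordinary tasks. */
struct toy_rq {
    struct toy_task *idle;              /* fallback task, set once at boot */
    struct toy_task *curr;              /* task currently "on the CPU" */
    struct toy_task *queue[TOY_RQ_CAP];
    size_t nr;
};

/* Mirrors "rq->curr = rq->idle = idle" from init_idle. */
static void toy_init_idle(struct toy_rq *rq, struct toy_task *idle)
{
    assert(rq != NULL && idle != NULL);
    idle->runnable = 1;                 /* the idle task can always run */
    rq->curr = rq->idle = idle;
    rq->nr = 0;
}

/* Adds an ordinary task; returns -1 if the toy queue is full. */
static int toy_enqueue(struct toy_rq *rq, struct toy_task *t)
{
    assert(rq != NULL && t != NULL);
    if (rq->nr == TOY_RQ_CAP)
        return -1;
    rq->queue[rq->nr++] = t;
    return 0;
}

/* Picks the first runnable queued task, falling back to the idle task. */
static struct toy_task *toy_pick_next(struct toy_rq *rq)
{
    assert(rq != NULL);
    rq->curr = rq->idle;
    for (size_t i = 0; i < rq->nr; i++) {
        if (rq->queue[i]->runnable) {
            rq->curr = rq->queue[i];
            break;
        }
    }
    return rq->curr;
}
```

In this model a freshly initialized queue always picks the pid 0 task. After a runnable pid 1 task is enqueued, it is chosen instead, and the idle task takes over again as soon as that task stops being runnable.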

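At this point we have a rough picture of how process 0 and process 1 come into being in the Linux kernel, and we have used GDB to trace the kernel code and show its function calls step by step. Starting from start_kernel, by the time rest_init is called the kernel has set up process 0 statically, without going through do_fork, and from then on it works in terms of processes. Process 0 then quietly runs on as the idle process, while rest_init creates process 1 through kernel_thread and do_fork. Process 1 is the ancestor of all user processes.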