Performance comparison of multiprocessing, threading, and gevent in Python 3

Reposted from: http://blog.csdn.net/littlethunder/article/details/40983031

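Programs today generally deal with two kinds of I/O: disk I/O and network I/O. Here I focus on network I/O and compare the efficiency of processes, threads, and coroutines in Python 3. Processes use the multiprocessing.Pool process pool, threads use a thread pool I wrote myself, and coroutines use the gevent library. I also compare Python 3's built-in urllib.request with the open-source requests library. The code is as follows: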

    import multiprocessing
    import queue
    import threading
    import time
    import urllib.request

    import requests

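    def startTimer():
        # Return the current wall-clock time as the start of a measurement
        return time.time()

    def ticT(startTime):
        # Return the seconds elapsed since startTime, rounded to milliseconds
        useTime = time.time() - startTime
        return round(useTime, 3)

    #def tic(startTime, name):
    #    useTime = time.time() - startTime
    #    print('[%s] use time: %1.3f' % (name, useTime))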

    def download_urllib(url):
        # Fetch the page with the standard library and decode it by hand
        req = urllib.request.Request(url,
                                     headers={'user-agent': 'Mozilla/5.0'})
        res = urllib.request.urlopen(req)
        data = res.read()
        try:
            data = data.decode('gbk')
        except UnicodeDecodeError:
            data = data.decode('utf8', 'ignore')
        return res.status, data

    def download_requests(url):
        # Fetch the page with requests, which handles decoding itself
        req = requests.get(url,
                           headers={'user-agent': 'Mozilla/5.0'})
        return req.status_code, req.text

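    class threadPoolManager:
        # workNum is unused; it is kept only so existing callers do not break
        def __init__(self, urls, workNum=10000, threadNum=20):
            self.workQueue = queue.Queue()
            self.threadPool = []
            self.__initWorkQueue(urls)
            self.__initThreadPool(threadNum)

        def __initWorkQueue(self, urls):
            # Queue one (function, argument) task per URL
            for i in urls:
                self.workQueue.put((download_requests, i))

        def __initThreadPool(self, threadNum):
            # Each worker thread starts itself in its constructor
            for i in range(threadNum):
                self.threadPool.append(work(self.workQueue))

        def waitAllComplete(self):
            for i in self.threadPool:
                # isAlive() was removed in Python 3.9; is_alive() is the supported name
                if i.is_alive():
                    i.join()

    class work(threading.Thread):
        def __init__(self, workQueue):
            threading.Thread.__init__(self)
            self.workQueue = workQueue
            self.start()

        def run(self):
            while True:
                try:
                    # Checking qsize() and then calling get() is racy: another
                    # thread may take the last task in between, so catch Empty
                    do, args = self.workQueue.get(block=False)
                except queue.Empty:
                    break
                do(args)
                self.workQueue.task_done()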

    def main():
        # Imported here so that worker processes do not load matplotlib
        import matplotlib.pyplot as plt

        urls = ['http://www.ustchacker.com'] * 10
        urllibL = []
        requestsL = []
        multiPool = []
        threadPool = []
        N = 20          # number of test rounds
        PoolNum = 100   # pool size for both the process pool and the thread pool

        for i in range(N):
            print('start %d try' % i)

            # 1. Sequential downloads with urllib.request
            urllibT = startTimer()
            jobs = [download_urllib(url) for url in urls]
            #for status, data in jobs:
            #    print(status, data[:10])
            #tic(urllibT, 'urllib.request')
            urllibL.append(ticT(urllibT))
            print('1')

            # 2. Sequential downloads with requests
            requestsT = startTimer()
            jobs = [download_requests(url) for url in urls]
            #for status, data in jobs:
            #    print(status, data[:10])
            #tic(requestsT, 'requests')
            requestsL.append(ticT(requestsT))
            print('2')

            # 3. requests in a process pool (pool creation is included in the timing)
            requestsT = startTimer()
            pool = multiprocessing.Pool(PoolNum)
            data = pool.map(download_requests, urls)
            pool.close()
            pool.join()
            multiPool.append(ticT(requestsT))
            print('3')

            # 4. requests in the hand-written thread pool
            requestsT = startTimer()
            pool = threadPoolManager(urls, threadNum=PoolNum)
            pool.waitAllComplete()
            threadPool.append(ticT(requestsT))
            print('4')

        x = list(range(1, N+1))
        plt.plot(x, urllibL, label='urllib')
        plt.plot(x, requestsL, label='requests')
        plt.plot(x, multiPool, label='requests MultiPool')
        plt.plot(x, threadPool, label='requests threadPool')
        plt.xlabel('test number')
        plt.ylabel('time(s)')
        plt.legend()
        plt.show()

    # The guard is required on platforms where multiprocessing starts workers
    # by re-importing this module (for example Windows)
    if __name__ == '__main__':
        main()

The results are as follows:

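The figure above shows that, in this test, Python 3's built-in urllib.request is less efficient than the open-source requests library. The multiprocessing process pool is clearly faster than the sequential runs, but still slower than the hand-written thread pool. Part of the reason is that creating and scheduling processes costs more than creating threads (the test program includes the creation cost in the measured time).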
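Because the measurement above folds pool creation into the total, it can help to time the two phases separately. The following is only a minimal sketch under stated assumptions: `fake_download` and `time_pool` are hypothetical helpers that are not part of the original test, the download is simulated with `time.sleep` so the example runs without a network, and `multiprocessing.pool.ThreadPool` stands in for the hand-written thread pool because it exposes the same `map`/`close`/`join` interface as `multiprocessing.Pool`.

```python
import time
from multiprocessing.pool import ThreadPool


def fake_download(url):
    """Hypothetical stand-in for download_requests: sleeps instead of using the network."""
    time.sleep(0.05)
    return 200, url


def time_pool(pool_factory, func, items, size):
    """Return (create_seconds, run_seconds, results) for one pool run."""
    t0 = time.perf_counter()
    pool = pool_factory(size)            # phase 1: create the workers
    t1 = time.perf_counter()
    try:
        results = pool.map(func, items)  # phase 2: do the actual work
    finally:
        pool.close()
        pool.join()                      # shutdown is counted in phase 2
    t2 = time.perf_counter()
    return round(t1 - t0, 3), round(t2 - t1, 3), results


if __name__ == '__main__':
    urls = ['http://www.ustchacker.com'] * 10
    create_s, run_s, _ = time_pool(ThreadPool, fake_download, urls, 20)
    print('ThreadPool: create %.3fs, run %.3fs' % (create_s, run_s))
```

Passing `multiprocessing.Pool` as `pool_factory` should give the corresponding split for processes, provided the worker function is defined at module level so it can be pickled.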

Below is the gevent test code:

    # Apply the monkey patch first, before importing modules that use sockets
    import gevent.monkey
    gevent.monkey.patch_all()

    import time
    import urllib.request

    import gevent.pool
    import requests

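    def startTimer():
        # Return the current wall-clock time as the start of a measurement
        return time.time()

    def ticT(startTime):
        # Return the seconds elapsed since startTime, rounded to milliseconds
        useTime = time.time() - startTime
        return round(useTime, 3)

    #def tic(startTime, name):
    #    useTime = time.time() - startTime
    #    print('[%s] use time: %1.3f' % (name, useTime))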

    def download_urllib(url):
        # Fetch the page with the standard library and decode it by hand
        req = urllib.request.Request(url,
                                     headers={'user-agent': 'Mozilla/5.0'})
        res = urllib.request.urlopen(req)
        data = res.read()
        try:
            data = data.decode('gbk')
        except UnicodeDecodeError:
            data = data.decode('utf8', 'ignore')
        return res.status, data

    def download_requests(url):
        # Fetch the page with requests, which handles decoding itself
        req = requests.get(url,
                           headers={'user-agent': 'Mozilla/5.0'})
        return req.status_code, req.text

    urls = ['http://www.ustchacker.com'] * 10
    urllibL = []
    requestsL = []
    reqPool = []
    reqSpawn = []
    N = 20          # number of test rounds
    PoolNum = 100   # size of the gevent pool

    for i in range(N):
        print('start %d try' % i)

        # 1. Sequential downloads with urllib.request
        urllibT = startTimer()
        jobs = [download_urllib(url) for url in urls]
        #for status, data in jobs:
        #    print(status, data[:10])
        #tic(urllibT, 'urllib.request')
        urllibL.append(ticT(urllibT))
        print('1')

        # 2. Sequential downloads with requests
        requestsT = startTimer()
        jobs = [download_requests(url) for url in urls]
        #for status, data in jobs:
        #    print(status, data[:10])
        #tic(requestsT, 'requests')
        requestsL.append(ticT(requestsT))
        print('2')

        # 3. requests in a gevent pool
        requestsT = startTimer()
        pool = gevent.pool.Pool(PoolNum)
        data = pool.map(download_requests, urls)
        #for status, text in data:
        #    print(status, text[:10])
        #tic(requestsT, 'requests with gevent.pool')
        reqPool.append(ticT(requestsT))
        print('3')

        # 4. requests with one greenlet spawned per URL
        requestsT = startTimer()
        jobs = [gevent.spawn(download_requests, url) for url in urls]
        gevent.joinall(jobs)
        #for i in jobs:
        #    print(i.value[0], i.value[1][:10])
        #tic(requestsT, 'requests with gevent.spawn')
        reqSpawn.append(ticT(requestsT))
        print('4')

    import matplotlib.pyplot as plt

    x = list(range(1, N+1))
    plt.plot(x, urllibL, label='urllib')
    plt.plot(x, requestsL, label='requests')
    plt.plot(x, reqPool, label='requests geventPool')
    plt.plot(x, reqSpawn, label='requests Spawn')
    plt.xlabel('test number')
    plt.ylabel('time(s)')
    plt.legend()
    plt.show()

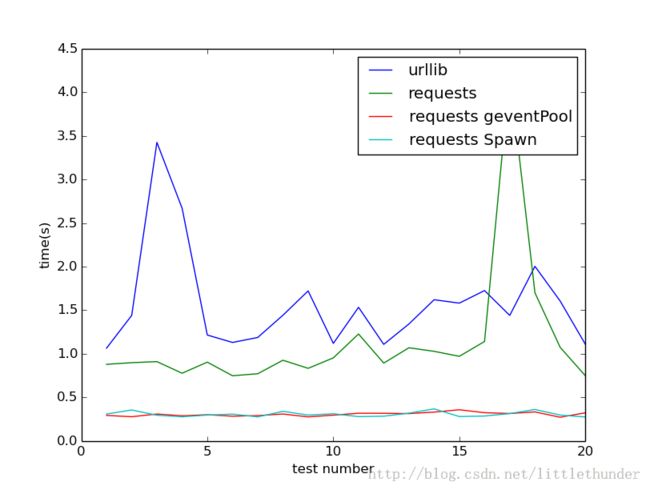

运行结果如下:

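The figure above shows that gevent can improve performance considerably for I/O-bound tasks. Because creating and scheduling coroutines costs far less than creating and scheduling threads, there is little difference between gevent's Spawn mode and its Pool mode.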
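The small gap between the "spawn everything" and "bounded pool" styles can be reproduced without gevent. The sketch below deliberately swaps in the standard library's asyncio as a stand-in for gevent (it is not the library used in the test above), and simulates the request with `asyncio.sleep`. The names `fake_download`, `fetch_all`, and `timed_run` are hypothetical and exist only for this illustration.

```python
import asyncio
import time


async def fake_download(url, delay=0.05):
    """Hypothetical stand-in for a request: yields to the event loop while 'waiting'."""
    await asyncio.sleep(delay)
    return 200, url


async def fetch_all(urls, limit=None):
    """Run fake_download for every URL.

    limit=None starts all coroutines at once (similar in spirit to spawn);
    an integer limit caps concurrency (similar in spirit to a pool size).
    """
    if limit is None:
        return await asyncio.gather(*(fake_download(u) for u in urls))

    sem = asyncio.Semaphore(limit)

    async def bounded(url):
        async with sem:
            return await fake_download(url)

    return await asyncio.gather(*(bounded(u) for u in urls))


def timed_run(urls, limit=None):
    """Return (elapsed_seconds, results) for one run."""
    start = time.perf_counter()
    results = asyncio.run(fetch_all(urls, limit))
    return round(time.perf_counter() - start, 3), results


if __name__ == '__main__':
    urls = ['http://www.ustchacker.com'] * 10
    print('spawn-style:', timed_run(urls)[0])
    print('pool-style :', timed_run(urls, limit=100)[0])
```

With only 10 URLs and a limit of 100, the cap never takes effect, so both runs do the same work. The same holds in the test above, where `PoolNum` is 100 and there are 10 URLs.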

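gevent needs the monkey patch so that blocking standard-library calls yield to other greenlets, which is where its speedup comes from. However, the patch interferes with multiprocessing. To use both in the same program, the patch has to be restricted as follows: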

gevent.monkey.patch_all(thread=False, socket=False, select=False)

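But with sockets left unpatched, gevent cannot show its full advantage, so the multiprocessing Pool, the thread pool, and the gevent Pool cannot be compared within a single program. Still, comparing the two figures suggests that the thread pool and gevent perform best, followed by the process pool. As a side note, the requests library performed somewhat better than urllib.request.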
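Since the two scripts have to run separately, one way to compare them side by side is to have each script save its timing lists and to summarize the saved files afterwards. This is a minimal sketch, not part of the original test: `save_timings`, `load_timings`, and `summarize` are hypothetical helper names, and the JSON file layout is an assumption.

```python
import json
import statistics


def save_timings(path, timings):
    """Write {label: [seconds, ...]} to a JSON file."""
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(timings, f)


def load_timings(path):
    """Read back a {label: [seconds, ...]} mapping written by save_timings."""
    with open(path, encoding='utf-8') as f:
        return json.load(f)


def summarize(timings):
    """Return {label: (mean, median, minimum)}; labels with no samples are skipped."""
    return {
        label: (
            round(statistics.mean(values), 3),
            round(statistics.median(values), 3),
            min(values),
        )
        for label, values in timings.items()
        if values
    }
```

For example, the first script could end with `save_timings('threads.json', {'multiPool': multiPool, 'threadPool': threadPool})`, the gevent script could do the same with `reqPool` and `reqSpawn`, and a third script could merge the loaded dictionaries and print `summarize(...)`. The file names here are placeholders.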