Solr 4.10.1 + Tomcat 7 + ZooKeeper + HDFS Integration (SolrCloud + HDFS) Configuration

Notes from hands-on experience building SolrCloud + ZooKeeper + HDFS

Setting up ZooKeeper:

1. Download ZooKeeper (it manages the SolrCloud configuration files) from an Apache mirror: http://mirror.bit.edu.cn/apache/zookeeper/zookeeper-3.4.6/

2. Prepare three servers (or three virtual machines), for example:

host3.com 192.168.2.87

host5.com 192.168.2.89

host4.com 192.168.2.94

3. Upload zookeeper-3.4.6.tar.gz to /usr/local/ on any one of the servers, extract it there with tar -zxvf zookeeper-3.4.6.tar.gz, and rename zookeeper-3.4.6 to zookeeper: mv zookeeper-3.4.6 zookeeper

4. Create data and logs directories inside the zookeeper directory, and copy conf/zoo_sample.cfg to conf/zoo.cfg.
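Steps 3 and 4 can be scripted roughly as below. This is a minimal sketch that assumes the tarball unpacks to a directory named after the archive (zookeeper-3.4.6/) and that an absolute tarball path is passed in; zk_prepare is a hypothetical name made up for this example.

```shell
# Hypothetical helper for steps 3-4: unpack ZooKeeper under a prefix, rename
# it to "zookeeper", create data/ and logs/, and derive zoo.cfg from the
# bundled conf/zoo_sample.cfg.
zk_prepare() (
  tarball=$1                          # absolute path, e.g. /usr/local/zookeeper-3.4.6.tar.gz
  prefix=${2:-/usr/local}             # install prefix used in this post
  dir=$(basename "$tarball" .tar.gz)  # assumed top-level dir: zookeeper-3.4.6
  cd "$prefix" &&
    tar -zxf "$tarball" &&
    mv "$dir" zookeeper &&
    mkdir -p zookeeper/data zookeeper/logs &&
    cp zookeeper/conf/zoo_sample.cfg zookeeper/conf/zoo.cfg
)
```

Usage: `zk_prepare /usr/local/zookeeper-3.4.6.tar.gz /usr/local`. The body runs in a subshell, so the `cd` does not change the caller's working directory, and any failing step makes the function return non-zero.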

5. Edit zoo.cfg:

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    dataDir=/usr/local/zookeeper/data
    # the port at which the clients will connect
    clientPort=2181
    # the maximum number of client connections.
    # increase this if you need to handle more clients
    #maxClientCnxns=60
    #
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    #
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    #
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable autopurge feature
    #autopurge.purgeInterval=1
    dataLogDir=/usr/local/zookeeper/logs
    server.1=192.168.2.89:2888:3888
    server.2=192.168.2.87:2888:3888
    server.3=192.168.2.94:2888:3888
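The server.N lines follow a fixed pattern: N is the server's ID, 2888 is the port followers use to connect to the leader, and 3888 is the leader-election port. A small hypothetical helper that prints them for a list of IPs, numbering them in the order given:

```shell
# Print one server.N line per ZooKeeper host, numbered from 1 in argument order.
# N must match the myid file written on that host.
zk_server_lines() (
  n=1
  for ip in "$@"; do
    # 2888: follower-to-leader port; 3888: leader-election port
    echo "server.$n=$ip:2888:3888"
    n=$((n + 1))
  done
)
```

Usage: `zk_server_lines 192.168.2.89 192.168.2.87 192.168.2.94 >> /usr/local/zookeeper/conf/zoo.cfg`, on a zoo.cfg that does not yet contain any server.* lines.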

6. Copy the zookeeper directory to the other two servers (replace the placeholders with their addresses):

    scp -r /usr/local/zookeeper root@<server-2>:/usr/local/
    scp -r /usr/local/zookeeper root@<server-3>:/usr/local/

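On each server, create a myid file in the data directory whose content is the number of the server.N entry for that server's IP: 1 on the server.1 host, 2 on the server.2 host, 3 on the server.3 host. For example, on 192.168.2.89 (server.1): echo 1 > /usr/local/zookeeper/data/myid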
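Because each myid must equal the N of the server.N entry carrying that host's address, it can be derived from zoo.cfg instead of typed by hand. A sketch, assuming the host's IP is passed in explicitly (it is not auto-detected) and that dataDir is set in zoo.cfg; zk_write_myid is a hypothetical name.

```shell
# Write <dataDir>/myid from the server.N entry in zoo.cfg that matches this host's IP.
zk_write_myid() (
  cfg=$1   # e.g. /usr/local/zookeeper/conf/zoo.cfg
  ip=$2    # this host's IP exactly as written in zoo.cfg
  datadir=$(sed -n 's/^dataDir=//p' "$cfg")
  ipre=$(printf '%s' "$ip" | sed 's/\./\\./g')   # escape dots for the regex
  id=$(sed -n "s/^server\.\([0-9][0-9]*\)=$ipre:.*/\1/p" "$cfg")
  if [ -z "$id" ] || [ -z "$datadir" ]; then
    echo "no dataDir or no server.N entry for $ip in $cfg" >&2
    exit 1   # exits only the function's subshell
  fi
  echo "$id" > "$datadir/myid"
)
```

Usage: on 192.168.2.89, `zk_write_myid /usr/local/zookeeper/conf/zoo.cfg 192.168.2.89` writes 1 to /usr/local/zookeeper/data/myid.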

7. Start the ZooKeeper ensemble by starting the ZooKeeper service on every node:

    cd /usr/local/zookeeper/
    bin/zkServer.sh start

8. Check the ensemble's status to make sure it started correctly: on each server, run bin/zkServer.sh status in the zookeeper directory and see which nodes are followers and which one is the leader.

For example (this output comes from an earlier install under /home/hadoop/zookeeper-3.3.6, so the paths differ from the ones above):

    # bin/zkServer.sh status
    JMX enabled by default
    Using config: /home/hadoop/zookeeper-3.3.6/bin/../conf/zoo.cfg
    Mode: follower
    [root@host5 /]# cd /home/hadoop/zookeeper-3.3.6/
    # bin/zkServer.sh status
    JMX enabled by default
    Using config: /home/hadoop/zookeeper-3.3.6/bin/../conf/zoo.cfg
    Mode: leader
    [root@host3 multicore]# cd /home/hadoop/zookeeper-3.3.6/
    # bin/zkServer.sh status
    JMX enabled by default
    Using config: /home/hadoop/zookeeper-3.3.6/bin/../conf/zoo.cfg
    Mode: follower
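A healthy three-node ensemble has exactly one leader and two followers. That check can be automated by feeding the status output of all nodes into a small filter. This sketch only parses the Mode: lines; collecting the output over ssh as root, as in the usage line, is an assumption, and zk_check_modes is a hypothetical name.

```shell
# Read `zkServer.sh status` output from all nodes on stdin, report the modes,
# and succeed only when exactly one node is the leader.
zk_check_modes() (
  modes=$(sed -n 's/^Mode: *//p')
  leaders=$(printf '%s\n' "$modes" | grep -c '^leader$')
  followers=$(printf '%s\n' "$modes" | grep -c '^follower$')
  echo "leaders=$leaders followers=$followers"
  [ "$leaders" -eq 1 ]
)
```

Usage: `for h in host3.com host4.com host5.com; do ssh root@$h /usr/local/zookeeper/bin/zkServer.sh status 2>&1; done | zk_check_modes`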

Setting up SolrCloud:

1. Download the Solr package solr-4.10.1.tgz from an Apache mirror (http://mirror.bit.edu.cn/apache/lucene/solr/4.10.1/), extract it with tar -xvzf solr-4.10.1.tgz, and rename the solr-4.10.1 directory: mv solr-4.10.1 solr

2. Create a directory under the root with mkdir -p /data0/solrcloud and move the solr directory into /data0, so that /data0 contains two directories: solr and solrcloud.

3. Put /data0/solr/example/webapps/solr.war into Tomcat's webapps directory and start Tomcat; Tomcat then unpacks it into a new solr directory under webapps.

4. Copy all jar files from /data0/solr/example/lib/ext/ into tomcat/webapps/solr/WEB-INF/lib/. Then create the directories with mkdir -p /data0/solrcloud/{multicore,solr-lib} and copy tomcat/webapps/solr/WEB-INF/lib/* into solr-lib/:

cp /usr/local/tomcat/webapps/solr/WEB-INF/lib/* /data0/solrcloud/solr-lib/
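Step 4 as a script, assuming the paths used in this post (Solr in /data0/solr, Tomcat in /usr/local/tomcat, SolrCloud files in /data0/solrcloud) and that Tomcat has already been started once so that solr.war is unpacked into webapps/solr. solr_prepare_libs is a hypothetical name.

```shell
# Copy the example's extra jars into the Solr webapp, create the SolrCloud
# directories, and keep a copy of the full webapp classpath in solr-lib/
# (used later to run ZkCLI).
solr_prepare_libs() (
  solr_dist=${1:-/data0/solr}
  tomcat=${2:-/usr/local/tomcat}
  cloud=${3:-/data0/solrcloud}
  lib="$tomcat/webapps/solr/WEB-INF/lib"
  cp "$solr_dist"/example/lib/ext/*.jar "$lib/" &&
    mkdir -p "$cloud/multicore" "$cloud/solr-lib" &&
    cp "$lib"/* "$cloud/solr-lib/"
)
```

Usage: `solr_prepare_libs` with no arguments uses the paths from this post.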

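5. Next, create a directory to hold the configuration files:

mkdir -p /data0/solrcloud/multicore/collection/{conf,data}

Copy /data0/solr/example/solr/collection1/conf/* into /data0/solrcloud/multicore/collection/conf (the conf directory has subdirectories, hence -r):

cp -r /data0/solr/example/solr/collection1/conf/* /data0/solrcloud/multicore/collection/conf/

Then copy solr.xml and zoo.cfg from example/solr/multicore into /data0/solrcloud/multicore, for example: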
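Step 5 scripted under the same path assumptions. The directory holding solr.xml and zoo.cfg is a parameter that defaults to the path given above; some 4.x tarballs may keep these files in example/multicore instead, so check your download. solr_prepare_conf is a hypothetical name.

```shell
# Create the collection's conf/ and data/ directories, copy the example
# collection1 configuration into conf/, and copy solr.xml and zoo.cfg
# into the Solr home (multicore/).
solr_prepare_conf() (
  solr_dist=${1:-/data0/solr}
  cloud=${2:-/data0/solrcloud}
  # Path from this post; adjust if your tarball keeps these files elsewhere.
  multicore_src=${3:-$solr_dist/example/solr/multicore}
  coll="$cloud/multicore/collection"
  mkdir -p "$coll/conf" "$coll/data" &&
    cp -r "$solr_dist/example/solr/collection1/conf/." "$coll/conf/" &&
    cp "$multicore_src/solr.xml" "$multicore_src/zoo.cfg" "$cloud/multicore/"
)
```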

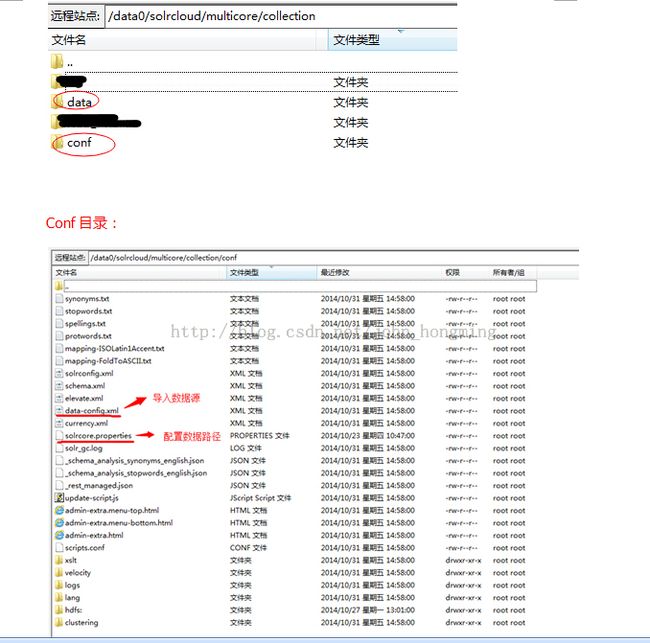

The collection directory:

data-config.xml is the configuration file for data import; for details see:

http://blog.csdn.net/john_hongming/article/details/40181451

solrcore.properties has to be created by hand. Its contents:

solr.shard.data.dir=/data0/solrcloud/multicore/collection/data

Note: the property solr.shard.data.dir is referenced in solrconfig.xml and specifies where the index data is stored.
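Creating solrcore.properties can be folded into the same setup. A sketch that writes the file into the given directory (the core's conf/ directory is an assumption here) and warns if the solrconfig.xml next to it never mentions the property; write_solrcore_properties is a hypothetical name.

```shell
# Write solrcore.properties with the index data directory and warn if the
# neighbouring solrconfig.xml does not reference solr.shard.data.dir.
write_solrcore_properties() (
  conf_dir=$1   # assumed: the core's conf/ directory
  data_dir=${2:-/data0/solrcloud/multicore/collection/data}
  printf 'solr.shard.data.dir=%s\n' "$data_dir" > "$conf_dir/solrcore.properties" || exit 1
  if [ -f "$conf_dir/solrconfig.xml" ] &&
     ! grep -q 'solr\.shard\.data\.dir' "$conf_dir/solrconfig.xml"; then
    echo "warning: solrconfig.xml does not reference solr.shard.data.dir" >&2
  fi
)
```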

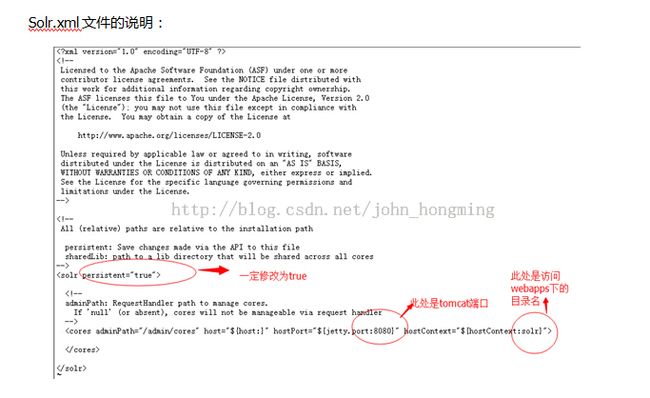

About solr.xml:

6. Manage the configuration files through ZooKeeper:

    # upload the config set to ZooKeeper
    java -classpath '.:/data0/solrcloud/solr-lib/*' org.apache.solr.cloud.ZkCLI -cmd upconfig -zkhost host3.com:2181,host4.com:2181,host5.com:2181 -confdir /data0/solrcloud/multicore/collection/conf -confname myconf
    # link collection1 to the myconf config set
    java -classpath '.:/data0/solrcloud/solr-lib/*' org.apache.solr.cloud.ZkCLI -cmd linkconfig -collection collection1 -confname myconf -zkhost host3.com:2181,host4.com:2181,host5.com:2181
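Since every ZkCLI call repeats the classpath and the ZooKeeper host list, a small wrapper helps. A sketch with this post's defaults; the zkcli function, the ZK_HOSTS and SOLR_LIB variables, and the DRY_RUN switch are all made up here, while -cmd, -zkhost, -confdir, -confname and -collection are the ZkCLI options used above.

```shell
# Hypothetical ZkCLI wrapper. Defaults follow this post; override via env vars.
# DRY_RUN=1 prints the command instead of running it.
ZK_HOSTS=${ZK_HOSTS:-host3.com:2181,host4.com:2181,host5.com:2181}
SOLR_LIB=${SOLR_LIB:-/data0/solrcloud/solr-lib}

zkcli() {
  # The classpath wildcard is quoted so the shell passes it to java unexpanded.
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo java -classpath ".:$SOLR_LIB/*" org.apache.solr.cloud.ZkCLI -zkhost "$ZK_HOSTS" "$@"
  else
    java -classpath ".:$SOLR_LIB/*" org.apache.solr.cloud.ZkCLI -zkhost "$ZK_HOSTS" "$@"
  fi
}
```

Usage: `zkcli -cmd upconfig -confdir /data0/solrcloud/multicore/collection/conf -confname myconf`, then `zkcli -cmd linkconfig -collection collection1 -confname myconf`.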

7. Add the following to Tomcat's start-up script tomcat/bin/catalina.sh:

    # start-up options in tomcat/bin/catalina.sh
    JAVA_OPTS="-server -Xmx2048m -Xms1024m -verbose:gc -Xloggc:solr_gc.log -Dsolr.solr.home=/data0/solrcloud/multicore -DzkHost=host3.com:2181,host4.com:2181,host5.com:2181"
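Instead of editing catalina.sh itself, the same options can go into tomcat/bin/setenv.sh, which Tomcat 7's catalina.sh sources on start-up if the file exists; this keeps them out of a script that a Tomcat upgrade replaces. A sketch with the options from step 7. The -Djetty.port line is an assumption that only matters if your solr.xml takes its hostPort from ${jetty.port:8983}: in that case each node would otherwise register in ZooKeeper with port 8983 rather than Tomcat's 8080.

```shell
# tomcat/bin/setenv.sh (sourced by catalina.sh if present)
SOLR_HOME=/data0/solrcloud/multicore
ZK_HOST=host3.com:2181,host4.com:2181,host5.com:2181

JAVA_OPTS="$JAVA_OPTS -server -Xmx2048m -Xms1024m -verbose:gc -Xloggc:solr_gc.log"
JAVA_OPTS="$JAVA_OPTS -Dsolr.solr.home=$SOLR_HOME -DzkHost=$ZK_HOST"
# Assumption: only needed if solr.xml reads hostPort from ${jetty.port:8983};
# Tomcat listens on 8080 in this setup.
JAVA_OPTS="$JAVA_OPTS -Djetty.port=8080"
export JAVA_OPTS
```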

8. Edit tomcat/webapps/solr/WEB-INF/web.xml and context.xml so that solr/home points to /data0/solrcloud/multicore:

context.xml

    <!-- The contents of this file will be loaded for each web application -->
    <Context docBase="solr.war" debug="0" crossContext="false">
        <!-- Default set of monitored resources -->
        <WatchedResource>WEB-INF/web.xml</WatchedResource>
        <!-- Uncomment this to disable session persistence across Tomcat restarts -->
        <!--
        <Manager pathname="" />
        -->
        <!-- Uncomment this to enable Comet connection tracking (provides events
             on session expiration as well as webapp lifecycle) -->
        <!--
        <Valve className="org.apache.catalina.valves.CometConnectionManagerValve" />
        -->
        <Environment name="solr/home" type="java.lang.String" value="/data0/solrcloud/multicore" override="true"/>
    </Context>

web.xml

    <env-entry>
        <env-entry-name>solr/home</env-entry-name>
        <env-entry-value>/data0/solrcloud/multicore</env-entry-value>
        <env-entry-type>java.lang.String</env-entry-type>
    </env-entry>


9. Configure the IK analyzer (Chinese tokenizer) for SolrCloud:

First, create a lib directory under multicore/collection/ in solrcloud and move the IK analyzer files into it, most importantly IKAnalyzer.cfg.xml and stopword.dic.

Then edit schema.xml under multicore/collection/conf and add:

<fieldTypename="ikanalyzer"class="solr.TextField">

<analyzertype="index"isMaxWordLength="false"class="org.wltea.analyzer.lucene.IKAnalyzer"/>

<analyzertype="query"isMaxWordLength="true"class="org.wltea.analyzer.lucene.IKAnalyzer"/>

<analyzertype="multiterm">

<tokenizerclass="solr.KeywordTokenizerFactory"/>

</analyzer>

</fieldType>

Each field is tokenized by the analyzer of its type, so a field declared with type="ikanalyzer" is tokenized by IK.

This essentially completes the IK Chinese analyzer setup. Now update the Solr configuration stored in ZooKeeper:

    java -classpath '.:/data0/solrcloud/solr-lib/*' org.apache.solr.cloud.ZkCLI -cmd upconfig -zkhost host3.com:2181,host4.com:2181,host5.com:2181 -confdir /data0/solrcloud/multicore/collection/conf -confname myconf

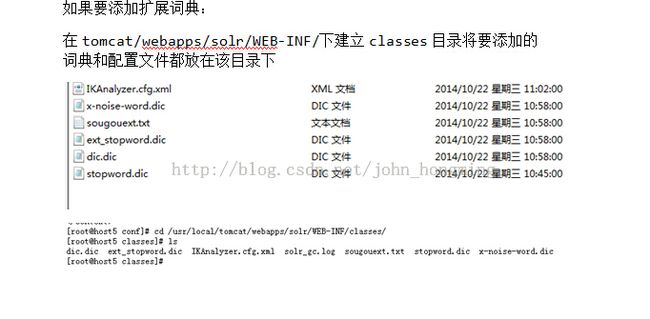

To add extension dictionaries:

Create a classes directory under tomcat/webapps/solr/WEB-INF/ and put the dictionaries to add, together with the configuration file, into it:

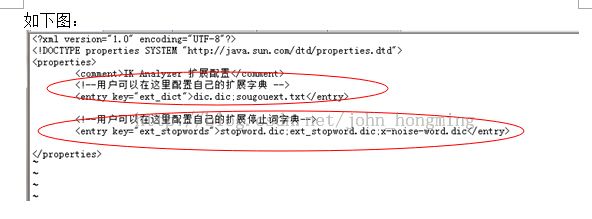

Edit IKAnalyzer.cfg.xml to register the dictionaries, as shown below:
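IKAnalyzer.cfg.xml is a Java properties XML file in which IK reads the keys ext_dict and ext_stopwords, each listing one or more dictionary files separated by ";". A sketch that writes it into WEB-INF/classes; the dictionary file names in the usage line (ext.dic, stopword.dic) are examples, and ik_write_cfg is a hypothetical name.

```shell
# Write IKAnalyzer.cfg.xml into the given classes directory, registering
# extension dictionaries (ext_dict) and extension stop words (ext_stopwords).
ik_write_cfg() (
  classes_dir=$1   # e.g. /usr/local/tomcat/webapps/solr/WEB-INF/classes
  ext_dict=$2      # e.g. "ext.dic" or "a.dic;b.dic"
  ext_stop=$3      # e.g. "stopword.dic"
  mkdir -p "$classes_dir" &&
    cat > "$classes_dir/IKAnalyzer.cfg.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
  <comment>IK Analyzer extension configuration</comment>
  <entry key="ext_dict">$ext_dict</entry>
  <entry key="ext_stopwords">$ext_stop</entry>
</properties>
EOF
)
```

Usage: `ik_write_cfg /usr/local/tomcat/webapps/solr/WEB-INF/classes ext.dic stopword.dic`, with ext.dic and stopword.dic placed in the same classes directory. Since WEB-INF/classes is local to each Tomcat, the change has to reach every node (step 10 copies Tomcat to the other servers).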

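10. Copy the configured /data0 directory and Tomcat to the other two servers (replace the placeholders with their addresses):

    scp -r /data0 root@<server-2>:/
    scp -r /data0 root@<server-3>:/
    scp -r /usr/local/tomcat root@<server-2>:/usr/local/
    scp -r /usr/local/tomcat root@<server-3>:/usr/local/

Then start Tomcat on all three servers with bin/startup.sh.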
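Step 10 as a loop, assuming root SSH access from the server where everything was configured. The run helper, the distribute function and the DRY_RUN switch are hypothetical; with DRY_RUN=1 the commands are only printed.

```shell
# Run a command, or just print it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Copy /data0 and Tomcat to every host given as an argument; stop at the first failure.
distribute() {
  for h in "$@"; do
    run scp -r /data0 "root@$h:/" || return 1
    run scp -r /usr/local/tomcat "root@$h:/usr/local/" || return 1
  done
}
```

Usage: if the configuration was done on 192.168.2.89, `distribute 192.168.2.87 192.168.2.94`, then run bin/startup.sh on each of the three servers, with ZooKeeper already running.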

11. Create the collection and shards

# create the collection: 3 shards, one replica per shard #

curl 'http://192.168.2.89:8080/solr/admin/collections?action=CREATE&name=mycollection&numShards=3&replicationFactor=1'

# create shard replicas: on 89, create mycollection_shard1_replica_2 as another replica of shard1 #

curl 'http://192.168.2.89:8080/solr/admin/cores?action=CREATE&collection=mycollection&name=mycollection_shard1_replica_2&shard=shard1'

curl 'http://192.168.2.87:8080/solr/admin/cores?action=CREATE&collection=mycollection&name=mycollection_shard1_replica_3&shard=shard1'

curl 'http://192.168.2.89:8080/solr/admin/cores?action=CREATE&collection=mycollection&name=mycollection_shard2_replica_2&shard=shard2'

curl 'http://192.168.2.87:8080/solr/admin/cores?action=CREATE&collection=mycollection&name=mycollection_shard2_replica_3&shard=shard2'

# split shard1, sending the request to 94 #

curl 'http://192.168.2.94:8080/solr/admin/collections?action=SPLITSHARD&collection=mycollection&shard=shard1'
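The admin calls above share one shape: a node's base URL, an admin path (admin/collections or admin/cores), and key=value parameters joined with "&". A hypothetical helper that builds and sends them; values are not URL-encoded, which is fine for the plain names used here, and DRY_RUN=1 prints the URL instead of calling curl.

```shell
# Build a Solr admin URL from a base URL, an admin path and key=value params,
# then call it with curl (or print it when DRY_RUN=1).
solr_admin() {
  base=$1; shift            # e.g. http://192.168.2.89:8080/solr
  path=$1; shift            # admin/collections or admin/cores
  query=$(IFS='&'; echo "$*")   # join the remaining args with '&'
  url="$base/$path?$query"
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$url"; else curl -s "$url"; fi
}
```

Usage: `solr_admin http://192.168.2.89:8080/solr admin/collections action=CREATE name=mycollection numShards=3 replicationFactor=1`, or `solr_admin http://192.168.2.94:8080/solr admin/collections action=SPLITSHARD collection=mycollection shard=shard1`. If ZooKeeper holds more than one config set, the CREATE call can name one explicitly with `collection.configName=myconf`.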

Integrating SolrCloud with HDFS:

1. Edit /usr/local/tomcat/bin/catalina.sh and add the HDFS options -XX:MaxDirectMemorySize=1g, -Dsolr.directoryFactory=HdfsDirectoryFactory, -Dsolr.lock.type=hdfs and -Dsolr.hdfs.home=hdfs://host1xyz.com:9000/solr to JAVA_OPTS:

JAVA_OPTS="-server -Xmx2048m -Xms1024m -verbose:gc -Xloggc:solr_gc.log -XX:MaxDirectMemorySize=1g -Dsolr.directoryFactory=HdfsDirectoryFactory -Dsolr.lock.type=hdfs -Dsolr.hdfs.home=hdfs://host1xyz.com:9000/solr -Dsolr.solr.home=/data0/solrcloud/multicore -DzkHost=host3.com:2181,host4.com:2181,host5.com:2181"

2. Edit /data0/solrcloud/multicore/collection/conf/solrconfig.xml and add the following:

    <directoryFactory name="DirectoryFactory" class="solr.HdfsDirectoryFactory">
        <str name="solr.hdfs.home">hdfs://host1xyz.com:9000/solr</str>
        <bool name="solr.hdfs.blockcache.enabled">true</bool>
        <int name="solr.hdfs.blockcache.slab.count">1</int>
        <bool name="solr.hdfs.blockcache.direct.memory.allocation">true</bool>
        <int name="solr.hdfs.blockcache.blocksperbank">16384</int>
        <bool name="solr.hdfs.blockcache.read.enabled">true</bool>
        <bool name="solr.hdfs.blockcache.write.enabled">true</bool>
        <bool name="solr.hdfs.nrtcachingdirectory.enable">true</bool>
        <int name="solr.hdfs.nrtcachingdirectory.maxmergesizemb">16</int>
        <int name="solr.hdfs.nrtcachingdirectory.maxcachedmb">192</int>
        <str name="solr.hdfs.confdir">/home/hadoop/hadoop-2.2.0/etc/hadoop</str>
    </directoryFactory>
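The direct memory taken by the block cache follows from these settings: slab.count × blocksperbank × block size. Assuming the 8 KB block size HdfsDirectoryFactory's block cache uses, slab.count=1 with blocksperbank=16384 gives 128 MB, which fits inside -XX:MaxDirectMemorySize=1g. If each core on a node allocates its own cache, multiply by the number of cores on that node before comparing. The helper below only does this arithmetic; its name is hypothetical.

```shell
# Block cache size in MB: slab_count * blocks_per_bank * block_kb / 1024.
# block_kb defaults to 8 (assumed block size of the HDFS block cache).
blockcache_mb() (
  slab_count=$1
  blocks_per_bank=${2:-16384}
  block_kb=${3:-8}
  echo $(( slab_count * blocks_per_bank * block_kb / 1024 ))
)
```

Usage: `blockcache_mb 1` prints 128; `blockcache_mb 8` prints 1024, i.e. the whole 1 GB of direct memory configured above.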

Then find <lockType>${solr.lock.type:native}</lockType> and change it to <lockType>${solr.lock.type:hdfs}</lockType>.

Note: leave <dataDir>${solr.data.dir:}</dataDir> as it is; if you put a path there, it overrides the HDFS path.
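The lockType change can be made with sed. Afterwards the edited solrconfig.xml still has to reach ZooKeeper, because SolrCloud nodes load their configuration from there: upload it again with the upconfig command from step 6 of the SolrCloud section and restart Tomcat (or reload the collection). set_hdfs_lock is a hypothetical helper that writes to a temporary file instead of relying on sed -i, for portability.

```shell
# Change the default lock type in solrconfig.xml from native to hdfs.
set_hdfs_lock() (
  cfg=$1   # e.g. /data0/solrcloud/multicore/collection/conf/solrconfig.xml
  sed 's/\${solr\.lock\.type:native}/${solr.lock.type:hdfs}/' "$cfg" > "$cfg.tmp" &&
    mv "$cfg.tmp" "$cfg"
)
```

Usage: `set_hdfs_lock /data0/solrcloud/multicore/collection/conf/solrconfig.xml`, followed by the upconfig call. With -Dsolr.lock.type=hdfs already in JAVA_OPTS, this default only matters if that option is ever dropped.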

References:

https://cwiki.apache.org/confluence/display/solr/Running+Solr+on+HDFS

http://shiyanjun.cn/archives/100.html

http://blog.csdn.net/shirdrn/article/details/9770829

http://blog.csdn.net/john_hongming/article/details/40113641

http://blog.csdn.net/john_hongming/article/details/40080947