- Spring Boot中实现跨域请求

BABA8891

springboot后端java

在SpringBoot中实现跨域请求(CORS,Cross-OriginResourceSharing)可以通过多种方式,以下是几种常见的方法:1.使用@CrossOrigin注解在SpringBoot中,你可以在控制器或者具体的请求处理方法上使用@CrossOrigin注解来允许跨域请求。在控制器上应用:importorg.springframework.web.bind.annotation.

- 神经网络-损失函数

红米煮粥

神经网络人工智能深度学习

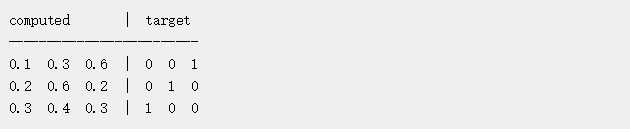

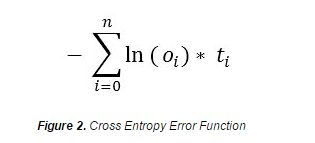

文章目录一、回归问题的损失函数1.均方误差(MeanSquaredError,MSE)2.平均绝对误差(MeanAbsoluteError,MAE)二、分类问题的损失函数1.0-1损失函数(Zero-OneLossFunction)2.交叉熵损失(Cross-EntropyLoss)3.合页损失(HingeLoss)三、总结在神经网络中,损失函数(LossFunction)扮演着至关重要的角色,它

- 损失函数与反向传播

Star_.

PyTorchpytorch深度学习python

损失函数定义与作用损失函数(lossfunction)在深度学习领域是用来计算搭建模型预测的输出值和真实值之间的误差。1.损失函数越小越好2.计算实际输出与目标之间的差距3.为更新输出提供依据(反向传播)常见的损失函数回归常见的损失函数有:均方差(MeanSquaredError,MSE)、平均绝对误差(MeanAbsoluteErrorLoss,MAE)、HuberLoss是一种将MSE与MAE

- 神经网络传递函数sigmoid,神经网络传递函数作用

快乐的小荣荣

神经网络机器学习深度学习人工智能

神经网络传递函数选取不同会有特别大差别嘛?只是最后一层,但前面层是非线性,那么可能存在区别不大的情况。线性函数f(a*input)=af(input),一般来说,input为向量,最简化情况下,可以假设input的各个维度,a1=a2=a3。。。意味着你线性层只是简单的对输入做了scale~而神经网络能起作用的原因,在于通过足够复杂的非线性函数,来模拟任何的分布。所以,神经网络必须要用非线性函数。

- 在Flask中实现跨域请求(CORS)

ac-er8888

flaskpython后端

在Flask中实现跨域请求(CORS,Cross-OriginResourceSharing)主要涉及到对Flask应用的配置,以允许来自不同源的请求访问服务器上的资源。以下是在Flask中实现CORS的详细步骤和方法:一、理解CORSCORS是一种机制,它使用额外的HTTP头部来告诉浏览器,让运行在一个origin(域)上的Web应用被准许访问来自不同源服务器上的指定的资源。当一个资源从与该资源

- QCMA:开源的PS Vita内容管理新选择

瞿格女

QCMA:开源的PSVita内容管理新选择qcmaCross-platformcontentmanagerassistantforthePSVita(Nolongermaintained)项目地址:https://gitcode.com/gh_mirrors/qc/qcma随着游戏界的日新月异,对于那些怀旧的游戏玩家而言,PSVita仍然是一颗璀璨的明珠。为了让更多人能够自由地管理自己的游戏和媒体

- Mac上有哪些虚拟机软件?性能兼容性都怎么样该如何下载安装

Hot1422

macoswindows

Mac上常用的虚拟机软件大概有三个,一个是VMwareFusionVM虚拟机,一个是ParallelsDesktopPD虚拟机,还有一个是CrossOver其中PD与VM都是纯正的虚拟机软件,通过安装windows操作系统来运行windows软件,而CrossOver是无需安装操作系统的,但兼容性比较差,下面详细介绍一下:ParallelsDesktop(PD虚拟机)PD虚拟机对于RAM架构的Ma

- SQL server CROSS JOIN 的用法

潇锐killer

数据库sql

SELECT@DateThresholdasdt,t3.ck_id,t3.ck_name,t3.title_id,t3.title,casewhent4.numisnullthen0elset4.numendnum,casewhent4.moneyisnullthen0elset4.moneyendmoney,t3.startDay,t3.endDayfrom(SELECTt1.ck_id,t1.

- sql中的APPLY 和 LATERAL

鲨鱼辣椒ii

sqlsql

简介APPLY是sqlserver的内容,LATERAL和pgsql的内容,用起来是类似的,名字不太一样apply两种方式:OUTERAPPLY和CROSSAPPY,分别对应做链接和自链接

- Google大数据架构技术栈

剑海风云

BigData大数据架构GoogleBigData

数据存储层ColossusColossus作为Google下一代GFS(GoogleFileSystem)。GFS本身存在一些不足单主瓶颈GFS依赖单个主节点进行元数据管理,随着数据量和访问请求的增长,出现了可扩展性瓶颈。想象一下,只有一位图书管理员管理着一个庞大的图书馆——最终,事情变得难以承受。元数据可扩展性有限主节点上的集中元数据存储无法有效扩展,影响了性能并妨碍了PB和EB级数据的管理。实

- vue前端根据接口返回的url 下载图片

爱心觉罗晓宇

java前端服务器

downloadPicture(imgSrc,name){constimage=newImage();//解决跨域Canvas污染问题image.setAttribute("crossOrigin","anonymous");image.src=imgSrc;image.onload=()=>{constcanvas=document.createElement("canvas");canvas.

- cross-plateform 跨平台应用程序-08-Ionic 介绍

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- cross-plateform 跨平台应用程序-09-phonegap/Apache Cordova 介绍

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- cross-plateform 跨平台应用程序-10-naitvescript 介绍

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- cross-plateform 跨平台应用程序-02-有哪些主流技术栈?

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- cross-plateform 跨平台应用程序-01-概览

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- cross-plateform 跨平台应用程序-06-uni-app 介绍

知识分享官

uni-app

详细介绍一下uni-app?whatuni-app是一个使用Vue.js开发所有前端应用的框架,开发者编写一次代码,可发布到iOS、Android、Web(响应式)、以及各种小程序(微信/支付宝/百度/头条/飞书/QQ/快手/京东/美团/钉钉/淘宝)、快应用等多个平台。以下是uni-app的一些核心特性和优势:使用Vue.js开发:uni-app基于Vue.js,这意味着如果你已经熟悉Vue.js

- cross-plateform 跨平台应用程序-05-Flutter 介绍

老马啸西风

java

跨平台系列cross-plateform跨平台应用程序-01-概览cross-plateform跨平台应用程序-02-有哪些主流技术栈?cross-plateform跨平台应用程序-03-如果只选择一个框架,应该选择哪一个?cross-plateform跨平台应用程序-04-ReactNative介绍cross-plateform跨平台应用程序-05-Flutter介绍cross-platefor

- 高效管理CrossOver容器里的程序

想干啥就干啥

CrossOverforMac软件的容器里并不是Windows操作系统,没有Windows操作系统那么丰富的软件管理工具,它只有一个操作界面,默认显示只有“运行命令”和控制面板。一些重要的工具(如:explorer、uninstall等)都隐藏在CrossOver的Windows目录下,要运行非常的麻烦。此外,安装的Windows应用程序,其执行文件有时无法在操作界面的“程序”区域显示,使用程序也

- Python中item()和items()的用处

~|Bernard|

深度学习疑点总结pythonpytorch深度学习

item()区别一:在pytorch训练时,一般用到.item()。比如loss.item()。我们可以做个简单测试代码看看它的区别:importtorchx=torch.randn(2,2)print(x)print(x[1,1])print(x[1,1].item())运行结果:tensor([[-2.0743,0.1675],[0.7016,-0.6779]])tensor(-0.6779)

- 如何使用Pytorch-Metric-Learning?

鱼儿也有烦恼

PyTorchpytorch

文章目录如何使用Pytorch-Metric-Learning?1.Pytorch-Metric-Learning库9个模块的功能1.1Sampler模块1.2Miner模块1.3Loss模块1.4Reducer模块1.5Distance模块1.6Regularizer模块1.7Trainer模块1.8Tester模块1.9Utils模块2.如何使用PyTorchMetricLearning库中的

- MySql关键字

zm2714

mysql

ADDALLALTERANALYZEANDASASCASENSITIVEBEFOREBETWEENBIGINTBINARYBLOBBOTHBYCALLCASCADECASECHANGECHARCHARACTERCHECKCOLLATECOLUMNCONDITIONCONNECTIONCONSTRAINTCONTINUECONVERTCREATECROSSCURRENT_DATECURRENT_TI

- Flex布局

FL1623863121

前端编程csscss3前端

Flex布局Flex布局也称弹性布局,可以为盒状模型提供最大的灵活性,是布局的首选方案,现已得到所有现代浏览器的支持。基础通过指定display:flex来标识一个弹性布局盒子,称为FLEX容器,容器内部的盒子就成为FLEX容器的成员,容器默认两根轴线,水平的主轴与垂直的交叉轴,主轴的开始位置叫做mainstart,结束位置叫做mainend;交叉轴的开始位置叫做crossstart,结束位置叫做

- CMake项目的CMackeLists.txt内容语法详解

过好每一天的女胖子

linuxWindows跨平台cmake跨平台

文章目录1、CMake构建级别2、CMakeLists.txt文件基本结构语法解析宏变量含义1、CMakeCMake(crossplatformmake)是一个跨平台的安装编译工具,可以使用简单的语句描述安装编译过程,输出安装编译过程中产生的中间文件。CMake不直接产生最终的文件,而是产生对应的构造文件,如linux下的makefile,windows下的vs的projects等CMake的编译

- 运行CrossOver应用程序的四种方法

想干啥就干啥

众所周知,在MacOS里面是不能运行exe执行文件的,要运行exe文件,必须在CrossOver运行的情况下,在操作界面上或者使用“运行命令”才行。在CrossOverforMac软件中,应用程序分为两种——Windows自带的exe执行文件和从外部安装的应用程序。本章节小编将教会小伙伴如何运行CrossOver里的应用程序。一、在“程序”区域中运行在CrossOver操作界面上,“程序”区域里的

- Web安全之CSRF攻击详解与防护

J老熊

JavaWeb安全web安全csrf安全java面试运维

在互联网应用中,安全性问题是开发者必须时刻关注的核心内容之一。跨站请求伪造(Cross-SiteRequestForgery,CSRF),是一种常见的Web安全漏洞。通过CSRF攻击,黑客可以冒用受害者的身份,发送恶意请求,执行诸如转账、订单提交等操作,导致严重的安全后果。本文将详细讲解CSRF攻击的原理及其防御方法,结合电商交易系统的场景给出错误和正确的示范代码,并分析常见的安全问题与解决方案,

- 【激活函数总结】Pytorch中的激活函数详解: ReLU、Leaky ReLU、Sigmoid、Tanh 以及 Softmax

阿_旭

深度学习知识点pytorch人工智能python激活函数深度学习

《博主简介》小伙伴们好,我是阿旭。专注于人工智能、AIGC、python、计算机视觉相关分享研究。感谢小伙伴们点赞、关注!《------往期经典推荐------》一、AI应用软件开发实战专栏【链接】项目名称项目名称1.【人脸识别与管理系统开发】2.【车牌识别与自动收费管理系统开发】3.【手势识别系统开发】4.【人脸面部活体检测系统开发】5.【图片风格快速迁移软件开发】6.【人脸表表情识别系统】7.

- 深度学习之sigmoid函数介绍

yueguang8

人工智能深度学习人工智能

1.基本概念Sigmoid函数,也称为Logistic函数,是一种常用的数学函数,其数学表达式为:其中,e是自然对数的底数,Zj是输入变量。Sigmoid函数曲线如下所示:计算示例:原始输出结果Zj:[-0.6,1.4,2.5]使用Sigmoid函数后输出为:[0.35,0.8,0.92]2.Sigmoid函数特点Sigmoid函数具有以下特点:值域限定在(0,1)之间:Sigmoid函数的输出范

- 基于图的推荐算法(12):Handling Information Loss of Graph Neural Networks for Session-based Recommendation

阿瑟_TJRS

前言KDD2020,针对基于会话推荐任务提出的GNN方法对已有的GNN方法的缺陷进行分析并做出改进主要针对lossysessionencoding和ineffectivelong-rangedependencycapturing两个问题:基于GNN的方法存在损失部分序列信息的问题,主要是在session转换为图以及消息传播过程中的排列无关(permutation-invariant)的聚合过程中造

- AttributeError: ‘tuple‘ object has no attribute ‘shape‘

晓胡同学

keras深度学习tensorflow

AttributeError:‘tuple’objecthasnoattribute‘shape’在将keras代码改为tensorflow2代码的时候报了如下错误AttributeError:'tuple'objecthasnoattribute'shape'经过调查发现,损失函数写错了原来的是这样model.compile(loss=['binary_crossentropy'],optimi

- mondb入手

木zi_鸣

mongodb

windows 启动mongodb 编写bat文件,

mongod --dbpath D:\software\MongoDBDATA

mongod --help 查询各种配置

配置在mongob

打开批处理,即可启动,27017原生端口,shell操作监控端口 扩展28017,web端操作端口

启动配置文件配置,

数据更灵活

- 大型高并发高负载网站的系统架构

bijian1013

高并发负载均衡

扩展Web应用程序

一.概念

简单的来说,如果一个系统可扩展,那么你可以通过扩展来提供系统的性能。这代表着系统能够容纳更高的负载、更大的数据集,并且系统是可维护的。扩展和语言、某项具体的技术都是无关的。扩展可以分为两种:

1.

- DISPLAY变量和xhost(原创)

czmmiao

display

DISPLAY

在Linux/Unix类操作系统上, DISPLAY用来设置将图形显示到何处. 直接登陆图形界面或者登陆命令行界面后使用startx启动图形, DISPLAY环境变量将自动设置为:0:0, 此时可以打开终端, 输出图形程序的名称(比如xclock)来启动程序, 图形将显示在本地窗口上, 在终端上输入printenv查看当前环境变量, 输出结果中有如下内容:DISPLAY=:0.0

- 获取B/S客户端IP

周凡杨

java编程jspWeb浏览器

最近想写个B/S架构的聊天系统,因为以前做过C/S架构的QQ聊天系统,所以对于Socket通信编程只是一个巩固。对于C/S架构的聊天系统,由于存在客户端Java应用,所以直接在代码中获取客户端的IP,应用的方法为:

String ip = InetAddress.getLocalHost().getHostAddress();

然而对于WEB

- 浅谈类和对象

朱辉辉33

编程

类是对一类事物的总称,对象是描述一个物体的特征,类是对象的抽象。简单来说,类是抽象的,不占用内存,对象是具体的,

占用存储空间。

类是由属性和方法构成的,基本格式是public class 类名{

//定义属性

private/public 数据类型 属性名;

//定义方法

publ

- android activity与viewpager+fragment的生命周期问题

肆无忌惮_

viewpager

有一个Activity里面是ViewPager,ViewPager里面放了两个Fragment。

第一次进入这个Activity。开启了服务,并在onResume方法中绑定服务后,对Service进行了一定的初始化,其中调用了Fragment中的一个属性。

super.onResume();

bindService(intent, conn, BIND_AUTO_CREATE);

- base64Encode对图片进行编码

843977358

base64图片encoder

/**

* 对图片进行base64encoder编码

*

* @author mrZhang

* @param path

* @return

*/

public static String encodeImage(String path) {

BASE64Encoder encoder = null;

byte[] b = null;

I

- Request Header简介

aigo

servlet

当一个客户端(通常是浏览器)向Web服务器发送一个请求是,它要发送一个请求的命令行,一般是GET或POST命令,当发送POST命令时,它还必须向服务器发送一个叫“Content-Length”的请求头(Request Header) 用以指明请求数据的长度,除了Content-Length之外,它还可以向服务器发送其它一些Headers,如:

- HttpClient4.3 创建SSL协议的HttpClient对象

alleni123

httpclient爬虫ssl

public class HttpClientUtils

{

public static CloseableHttpClient createSSLClientDefault(CookieStore cookies){

SSLContext sslContext=null;

try

{

sslContext=new SSLContextBuilder().l

- java取反 -右移-左移-无符号右移的探讨

百合不是茶

位运算符 位移

取反:

在二进制中第一位,1表示符数,0表示正数

byte a = -1;

原码:10000001

反码:11111110

补码:11111111

//异或: 00000000

byte b = -2;

原码:10000010

反码:11111101

补码:11111110

//异或: 00000001

- java多线程join的作用与用法

bijian1013

java多线程

对于JAVA的join,JDK 是这样说的:join public final void join (long millis )throws InterruptedException Waits at most millis milliseconds for this thread to die. A timeout of 0 means t

- Java发送http请求(get 与post方法请求)

bijian1013

javaspring

PostRequest.java

package com.bijian.study;

import java.io.BufferedReader;

import java.io.DataOutputStream;

import java.io.IOException;

import java.io.InputStreamReader;

import java.net.HttpURL

- 【Struts2二】struts.xml中package下的action配置项默认值

bit1129

struts.xml

在第一部份,定义了struts.xml文件,如下所示:

<!DOCTYPE struts PUBLIC

"-//Apache Software Foundation//DTD Struts Configuration 2.3//EN"

"http://struts.apache.org/dtds/struts

- 【Kafka十三】Kafka Simple Consumer

bit1129

simple

代码中关于Host和Port是割裂开的,这会导致单机环境下的伪分布式Kafka集群环境下,这个例子没法运行。

实际情况是需要将host和port绑定到一起,

package kafka.examples.lowlevel;

import kafka.api.FetchRequest;

import kafka.api.FetchRequestBuilder;

impo

- nodejs学习api

ronin47

nodejs api

NodeJS基础 什么是NodeJS

JS是脚本语言,脚本语言都需要一个解析器才能运行。对于写在HTML页面里的JS,浏览器充当了解析器的角色。而对于需要独立运行的JS,NodeJS就是一个解析器。

每一种解析器都是一个运行环境,不但允许JS定义各种数据结构,进行各种计算,还允许JS使用运行环境提供的内置对象和方法做一些事情。例如运行在浏览器中的JS的用途是操作DOM,浏览器就提供了docum

- java-64.寻找第N个丑数

bylijinnan

java

public class UglyNumber {

/**

* 64.查找第N个丑数

具体思路可参考 [url] http://zhedahht.blog.163.com/blog/static/2541117420094245366965/[/url]

*

题目:我们把只包含因子

2、3和5的数称作丑数(Ugly Number)。例如6、8都是丑数,但14

- 二维数组(矩阵)对角线输出

bylijinnan

二维数组

/**

二维数组 对角线输出 两个方向

例如对于数组:

{ 1, 2, 3, 4 },

{ 5, 6, 7, 8 },

{ 9, 10, 11, 12 },

{ 13, 14, 15, 16 },

slash方向输出:

1

5 2

9 6 3

13 10 7 4

14 11 8

15 12

16

backslash输出:

4

3

- [JWFD开源工作流设计]工作流跳跃模式开发关键点(今日更新)

comsci

工作流

既然是做开源软件的,我们的宗旨就是给大家分享设计和代码,那么现在我就用很简单扼要的语言来透露这个跳跃模式的设计原理

大家如果用过JWFD的ARC-自动运行控制器,或者看过代码,应该知道在ARC算法模块中有一个函数叫做SAN(),这个函数就是ARC的核心控制器,要实现跳跃模式,在SAN函数中一定要对LN链表数据结构进行操作,首先写一段代码,把

- redis常见使用

cuityang

redis常见使用

redis 通常被认为是一个数据结构服务器,主要是因为其有着丰富的数据结构 strings、map、 list、sets、 sorted sets

引入jar包 jedis-2.1.0.jar (本文下方提供下载)

package redistest;

import redis.clients.jedis.Jedis;

public class Listtest

- 配置多个redis

dalan_123

redis

配置多个redis客户端

<?xml version="1.0" encoding="UTF-8"?><beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi=&quo

- attrib命令

dcj3sjt126com

attr

attrib指令用于修改文件的属性.文件的常见属性有:只读.存档.隐藏和系统.

只读属性是指文件只可以做读的操作.不能对文件进行写的操作.就是文件的写保护.

存档属性是用来标记文件改动的.即在上一次备份后文件有所改动.一些备份软件在备份的时候会只去备份带有存档属性的文件.

- Yii使用公共函数

dcj3sjt126com

yii

在网站项目中,没必要把公用的函数写成一个工具类,有时候面向过程其实更方便。 在入口文件index.php里添加 require_once('protected/function.php'); 即可对其引用,成为公用的函数集合。 function.php如下:

<?php /** * This is the shortcut to D

- linux 系统资源的查看(free、uname、uptime、netstat)

eksliang

netstatlinux unamelinux uptimelinux free

linux 系统资源的查看

转载请出自出处:http://eksliang.iteye.com/blog/2167081

http://eksliang.iteye.com 一、free查看内存的使用情况

语法如下:

free [-b][-k][-m][-g] [-t]

参数含义

-b:直接输入free时,显示的单位是kb我们可以使用b(bytes),m

- JAVA的位操作符

greemranqq

位运算JAVA位移<<>>>

最近几种进制,加上各种位操作符,发现都比较模糊,不能完全掌握,这里就再熟悉熟悉。

1.按位操作符 :

按位操作符是用来操作基本数据类型中的单个bit,即二进制位,会对两个参数执行布尔代数运算,获得结果。

与(&)运算:

1&1 = 1, 1&0 = 0, 0&0 &

- Web前段学习网站

ihuning

Web

Web前段学习网站

菜鸟学习:http://www.w3cschool.cc/

JQuery中文网:http://www.jquerycn.cn/

内存溢出:http://outofmemory.cn/#csdn.blog

http://www.icoolxue.com/

http://www.jikexue

- 强强联合:FluxBB 作者加盟 Flarum

justjavac

r

原文:FluxBB Joins Forces With Flarum作者:Toby Zerner译文:强强联合:FluxBB 作者加盟 Flarum译者:justjavac

FluxBB 是一个快速、轻量级论坛软件,它的开发者是一名德国的 PHP 天才 Franz Liedke。FluxBB 的下一个版本(2.0)将被完全重写,并已经开发了一段时间。FluxBB 看起来非常有前途的,

- java统计在线人数(session存储信息的)

macroli

javaWeb

这篇日志是我写的第三次了 前两次都发布失败!郁闷极了!

由于在web开发中常常用到这一部分所以在此记录一下,呵呵,就到备忘录了!

我对于登录信息时使用session存储的,所以我这里是通过实现HttpSessionAttributeListener这个接口完成的。

1、实现接口类,在web.xml文件中配置监听类,从而可以使该类完成其工作。

public class Ses

- bootstrp carousel初体验 快速构建图片播放

qiaolevip

每天进步一点点学习永无止境bootstrap纵观千象

img{

border: 1px solid white;

box-shadow: 2px 2px 12px #333;

_width: expression(this.width > 600 ? "600px" : this.width + "px");

_height: expression(this.width &

- SparkSQL读取HBase数据,通过自定义外部数据源

superlxw1234

sparksparksqlsparksql读取hbasesparksql外部数据源

关键字:SparkSQL读取HBase、SparkSQL自定义外部数据源

前面文章介绍了SparSQL通过Hive操作HBase表。

SparkSQL从1.2开始支持自定义外部数据源(External DataSource),这样就可以通过API接口来实现自己的外部数据源。这里基于Spark1.4.0,简单介绍SparkSQL自定义外部数据源,访

- Spring Boot 1.3.0.M1发布

wiselyman

spring boot

Spring Boot 1.3.0.M1于6.12日发布,现在可以从Spring milestone repository下载。这个版本是基于Spring Framework 4.2.0.RC1,并在Spring Boot 1.2之上提供了大量的新特性improvements and new features。主要包含以下:

1.提供一个新的sprin