Setting Up Hadoop 1.2.1 in Pseudo-Distributed Mode the Easy Way

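This walkthrough uses CentOS as the example system:

CentOS virtual machine installation: http://blog.csdn.net/baolibin528/article/details/32918565

Network settings: http://blog.csdn.net/baolibin528/article/details/43797107

PieTTY usage: http://blog.csdn.net/baolibin528/article/details/43822509

WinSCP usage: http://blog.csdn.net/baolibin528/article/details/43819289

Once the virtual machine is installed, everything else can be done remotely with these tools.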

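1. Set the IP address

A pseudo-distributed setup runs on a single machine, so localhost can be used in place of the IP address.

To connect to Linux with remote tools (PieTTY, WinSCP, and so on), however, an IP address must be configured.

The IP address can be configured while installing the system.

If you configure it afterwards, run: service network restart //restart the network

ifconfig //show IP information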

[root@baolibin ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:44:A3:A5

inet addr:192.168.1.100 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe44:a3a5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:97040 errors:0 dropped:0 overruns:0 frame:0

TX packets:10935 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:141600363 (135.0 MiB) TX bytes:1033124 (1008.9 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:16 errors:0 dropped:0 overruns:0 frame:0

TX packets:16 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1184 (1.1 KiB) TX bytes:1184 (1.1 KiB)

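2. Turn off the firewall

Run: service iptables stop

Verify: service iptables status

This command only stops the firewall for the current session; it starts again on the next boot.

Disable the firewall permanently: chkconfig iptables off

Verify: chkconfig --list | grep iptables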

[root@baolibin ~]# chkconfig --list | grep iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

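3. Set the hostname

Command 1: hostname baolibin (substitute your own hostname)

This changes the hostname only temporarily; the change is lost after a reboot. The next command makes it permanent.

Command 2: vim /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=baolibin

GATEWAY=192.168.1.1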

4. Bind the IP address to the hostname

Command: vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.100 baolibin
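The binding can also be checked from a script rather than by eye. The following is only a minimal sketch: `hosts_maps` is a hypothetical helper name introduced here, and the IP address and hostname are the ones used in this article.

```shell
#!/bin/sh
# hosts_maps FILE IP NAME: succeed if FILE has a non-comment line that
# starts with IP and lists NAME among its hostnames.
# (hosts_maps is a hypothetical helper name, not a standard command.)
hosts_maps() {
    awk -v ip="$2" -v name="$3" '
        { sub(/#.*/, "") }                 # strip comments
        $1 == ip {
            for (i = 2; i <= NF; i++)
                if ($i == name) found = 1
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}

# Example with the values used in this article:
if hosts_maps /etc/hosts 192.168.1.100 baolibin; then
    echo "binding OK"
else
    echo "binding missing"
fi
```

Taking the file path as a parameter lets the same function be tried on a copy of the hosts file before the real one is edited.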

5. Set up passwordless SSH login

Run: ssh-keygen -t rsa -P ''

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

Verify by logging in; no password prompt should appear:

[root@baolibin ~]# ssh 192.168.1.100

Last login: Sun Feb 15 21:01:30 2015 from baolibin

[root@baolibin ~]#
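Running the `cat ... >>` step more than once appends the same key again each time. A minimal sketch of an idempotent variant follows; `authorize_key` is a hypothetical helper name, and the paths in the usage comment are the defaults that ssh-keygen produces.

```shell
#!/bin/sh
# authorize_key PUBKEY AUTH_FILE: append the public key in PUBKEY to
# AUTH_FILE unless an identical line is already there, then restrict
# the file's permissions.
# (authorize_key is a hypothetical helper name.)
authorize_key() {
    pub=$1
    auth=$2
    mkdir -p "$(dirname "$auth")"
    touch "$auth"
    # -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -qxF -- "$(cat "$pub")" "$auth"; then
        cat "$pub" >> "$auth"
    fi
    chmod 600 "$auth"
}

# Typical use after ssh-keygen has created ~/.ssh/id_rsa.pub:
# authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
```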

6. Install the JDK

Make the JDK installer executable: chmod u+x jdk-6u45-linux-x64.bin

Unpack the JDK: ./jdk-6u45-linux-x64.bin

Rename the unpacked directory: mv jdk1.6.0_45 jdk

Change ownership: chown -R hadoop:hadoop jdk

Add the following to /etc/profile:

#set java environment

export JAVA_HOME=/usr/local/jdk

export JRE_HOME=/usr/local/jdk/jre

export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

[root@baolibin local]# vim /etc/profile

[root@baolibin local]# source /etc/profile

[root@baolibin local]# java -version

java version "1.6.0_45"

Java(TM) SE Runtime Environment (build 1.6.0_45-b06)

Java HotSpot(TM) 64-Bit Server VM (build 20.45-b01, mixed mode)

[root@baolibin local]#

7. Install Hadoop

Unpack Hadoop: tar -zxvf hadoop-1.2.1.tar.gz

Change ownership: chown -R hadoop:hadoop hadoop-1.2.1

Rename: mv hadoop-1.2.1 hadoop

Edit: vim /etc/profile

#set hadoop environment

export HADOOP_HOME=/usr/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

source /etc/profile

Edit these configuration files in the conf directory: hadoop-env.sh, core-site.xml, hdfs-site.xml, mapred-site.xml

hadoop-env.sh:

export JAVA_HOME=/usr/local/jdk

core-site.xml:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://192.168.1.100:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/dfs</value>

</property>

</configuration>

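Note: this value was previously <value>/usr/hadoop/tmp</value>. With that setting, HDFS had to be reformatted before every use, otherwise the JobTracker would disappear shortly after starting. Switching to a non-tmp directory fixed the problem.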
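Because the host address and the storage directory are the two values most likely to change, it can help to generate core-site.xml from them instead of editing it by hand. This is only a sketch: `write_core_site` is a hypothetical helper name, port 9000 is taken from the configuration above, and the output is printed rather than written into conf so that it can be reviewed first.

```shell
#!/bin/sh
# write_core_site HOST TMP_DIR: print a core-site.xml for Hadoop 1.x
# with fs.default.name pointing at HOST:9000 and hadoop.tmp.dir set
# to TMP_DIR.
# (write_core_site is a hypothetical helper name.)
write_core_site() {
    cat <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://$1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$2</value>
  </property>
</configuration>
EOF
}

# Example: preview the file for the values used in this article.
write_core_site 192.168.1.100 /home/hadoop/dfs
```

Redirecting the output (for example to /usr/hadoop/conf/core-site.xml, the location implied by HADOOP_HOME above) would replace the existing file, so keep a backup before doing that.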

hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

mapred-site.xml:

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>192.168.1.100:9001</value>

</property>

</configuration>

Also in conf, edit the masters and slaves files (vim masters and vim slaves).

Both files contain only 192.168.1.100

8. Format the NameNode

Run: hadoop namenode -format

[hadoop@baolibin ~]$ jps

29363 Jps

[hadoop@baolibin ~]$ cd /usr/hadoop/bin

[hadoop@baolibin bin]$ hadoop namenode -format

Warning: $HADOOP_HOME is deprecated.

15/02/15 21:04:06 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = baolibin/192.168.1.100

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 1.2.1

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-1.2 -r 1503152; compiled by 'mattf' on Mon Jul 22 15:23:09 PDT 2013

STARTUP_MSG: java = 1.6.0_45

************************************************************/

15/02/15 21:04:06 INFO util.GSet: Computing capacity for map BlocksMap

15/02/15 21:04:06 INFO util.GSet: VM type = 64-bit

15/02/15 21:04:06 INFO util.GSet: 2.0% max memory = 1013645312

15/02/15 21:04:06 INFO util.GSet: capacity = 2^21 = 2097152 entries

15/02/15 21:04:06 INFO util.GSet: recommended=2097152, actual=2097152

15/02/15 21:04:07 INFO namenode.FSNamesystem: fsOwner=hadoop

15/02/15 21:04:07 INFO namenode.FSNamesystem: supergroup=supergroup

15/02/15 21:04:07 INFO namenode.FSNamesystem: isPermissionEnabled=false

15/02/15 21:04:07 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100

15/02/15 21:04:07 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)

15/02/15 21:04:07 INFO namenode.FSEditLog: dfs.namenode.edits.toleration.length = 0

15/02/15 21:04:07 INFO namenode.NameNode: Caching file names occuring more than 10 times

15/02/15 21:04:08 INFO common.Storage: Image file /usr/hadoop/tmp/dfs/name/current/fsimage of size 112 bytes saved in 0 seconds.

15/02/15 21:04:08 INFO namenode.FSEditLog: closing edit log: position=4, editlog=/usr/hadoop/tmp/dfs/name/current/edits

15/02/15 21:04:08 INFO namenode.FSEditLog: close success: truncate to 4, editlog=/usr/hadoop/tmp/dfs/name/current/edits

15/02/15 21:04:08 INFO common.Storage: Storage directory /usr/hadoop/tmp/dfs/name has been successfully formatted.

15/02/15 21:04:08 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at baolibin/192.168.1.100

************************************************************/

[hadoop@baolibin bin]$

9. Start Hadoop

Run: start-all.sh

[hadoop@baolibin bin]$ start-all.sh

Warning: $HADOOP_HOME is deprecated.

starting namenode, logging to /usr/hadoop/libexec/../logs/hadoop-hadoop-namenode-baolibin.out

192.168.1.100: starting datanode, logging to /usr/hadoop/libexec/../logs/hadoop-hadoop-datanode-baolibin.out

192.168.1.100: starting secondarynamenode, logging to /usr/hadoop/libexec/../logs/hadoop-hadoop-secondarynamenode-baolibin.out

starting jobtracker, logging to /usr/hadoop/libexec/../logs/hadoop-hadoop-jobtracker-baolibin.out

192.168.1.100: starting tasktracker, logging to /usr/hadoop/libexec/../logs/hadoop-hadoop-tasktracker-baolibin.out

[hadoop@baolibin bin]$

Check the processes:

[hadoop@baolibin bin]$ jps

29707 SecondaryNameNode

29804 JobTracker

29928 TaskTracker

29585 DataNode

30049 Jps

29470 NameNode

[hadoop@baolibin bin]$
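A healthy pseudo-distributed node shows all five daemons in the jps listing. The check can be scripted along these lines; `missing_daemons` is a hypothetical helper name, and the sketch assumes the two-column "PID ClassName" layout that jps prints above.

```shell
#!/bin/sh
# missing_daemons: read `jps` output on stdin and print the name of each
# Hadoop 1.x daemon that does not appear in it (one per line).
# (missing_daemons is a hypothetical helper name.)
missing_daemons() {
    out=$(cat)
    for d in NameNode SecondaryNameNode DataNode JobTracker TaskTracker; do
        # Compare the whole second column, so "NameNode" is not
        # satisfied by "SecondaryNameNode".
        printf '%s\n' "$out" |
            awk -v d="$d" '$2 == d { found = 1 } END { exit(found ? 0 : 1) }' ||
            echo "$d"
    done
}

# Typical use on the Hadoop machine:
# jps | missing_daemons
```

Empty output means every daemon is running; any name printed is a daemon whose log under /usr/hadoop/logs is worth reading.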

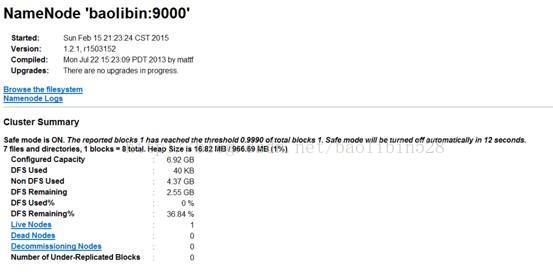

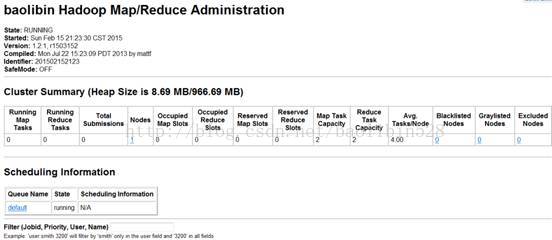

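10. View the web interfaces in a browser: http://192.168.1.100:50070 http://192.168.1.100:50030

When working through remote tools, these pages can be opened directly in a browser on Windows:

50070 (NameNode web UI):

50030 (JobTracker web UI):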

11. Stop Hadoop

Run: stop-all.sh

[hadoop@baolibin bin]$ stop-all.sh

Warning: $HADOOP_HOME is deprecated.

stopping jobtracker

192.168.1.100: stopping tasktracker

stopping namenode

192.168.1.100: stopping datanode

192.168.1.100: stopping secondarynamenode

[hadoop@baolibin bin]$

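12. Possible reasons the NameNode is missing after startup:

(1) HDFS was not formatted

(2) Environment variables are set incorrectly

(3) The IP-to-hostname binding failed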
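The first two causes can be ruled out quickly from the shell. The sketch below makes two assumptions: the NameNode directory is left at its default location under hadoop.tmp.dir (the dfs/name/current/fsimage layout visible in the format log), and the helper names `is_formatted` and `has_java` are hypothetical.

```shell
#!/bin/sh
# is_formatted TMP_DIR: succeed if a NameNode image exists under TMP_DIR,
# assuming the default layout TMP_DIR/dfs/name/current/fsimage.
# (is_formatted is a hypothetical helper name.)
is_formatted() {
    [ -f "$1/dfs/name/current/fsimage" ]
}

# has_java: succeed if JAVA_HOME is set and contains an executable java.
# (has_java is a hypothetical helper name.)
has_java() {
    [ -n "${JAVA_HOME:-}" ] && [ -x "$JAVA_HOME/bin/java" ]
}

# Example with the hadoop.tmp.dir value from core-site.xml:
is_formatted /home/hadoop/dfs || echo "NameNode storage is not formatted"
has_java || echo "JAVA_HOME is unset or does not contain bin/java"
```

For the third cause, compare the output of the hostname command with the entries in /etc/hosts. In all cases the NameNode log under /usr/hadoop/logs usually states the actual error.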