Ensemble Models: Random Forests

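This post uses the same dataset as the previous one: income information for individuals in the United States. The previous post ended by mentioning the random forest algorithm, which is the subject of this post.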

Ensemble Models

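As mentioned earlier, decision trees tend to overfit, and one of the most effective ways to counter this is a random forest. A random forest is an ensemble model. An ensemble combines several models to build a single model that is typically more accurate than any of them. A random forest therefore combines many different trees into a "forest" of decision trees and aggregates the results of the individual trees into one final result.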

- Below we create two decision trees with different parameters and score each one on the test set with ROC AUC (roc_auc_score):

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import roc_auc_score

# train and test are the income DataFrames from the previous post.
columns = ["age", "workclass", "education_num", "marital_status", "occupation", "relationship", "race", "sex", "hours_per_week", "native_country"]

# Two trees with different regularization settings.
clf = DecisionTreeClassifier(random_state=1, min_samples_leaf=75)
clf.fit(train[columns], train["high_income"])

clf2 = DecisionTreeClassifier(random_state=1, max_depth=6)
clf2.fit(train[columns], train["high_income"])

# roc_auc_score expects the true labels first: roc_auc_score(y_true, y_score).
predictions = clf.predict(test[columns])
print(roc_auc_score(test["high_income"], predictions))

predictions = clf2.predict(test[columns])
print(roc_auc_score(test["high_income"], predictions))

# Reported scores (computed as roc_auc_score(predictions, labels)):
# 0.784853009097 0.771031199892
```

Combining Our Predictions

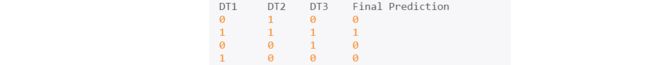

当我们有多个分类器的时候,我们可以将其预测结果做成矩阵形式,如下:

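We can then produce the final prediction by majority vote (there are many other ways to combine the results). Majority voting needs more than two classifiers, preferably an odd number: with binary labels and an even number of classifiers, the votes can tie, so we would also need a rule to break ties.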
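A minimal sketch of majority voting over such a matrix, in plain Python. It assumes each row holds one classifier's predicted labels and each column one sample; the function name majority_vote and the small matrix are made up for illustration.

```python
from collections import Counter


def majority_vote(prediction_matrix):
    """Return the most common label in each column of the matrix.

    prediction_matrix[i][j] is classifier i's predicted label for sample j.
    """
    # zip(*rows) walks the matrix column by column, i.e. sample by sample.
    return [Counter(column).most_common(1)[0][0] for column in zip(*prediction_matrix)]


# Three hypothetical classifiers, four samples.
preds = [
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 1, 0, 1],
]
print(majority_vote(preds))  # [0, 1, 0, 0]
```

With three classifiers and binary labels there is always a strict majority. With an even number, Counter.most_common orders tied labels by first occurrence, which is an arbitrary tie-break, hence the need for an explicit rule.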

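Since we built only two trees above, we produce the final result another way: instead of predict, we call predict_proba, which returns, for each sample, the probability of belonging to class 0 and to class 1.

- We then keep only the second column (the probability of class 1) from each classifier and average the two.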

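```python
import numpy

# Column 1 of predict_proba is the probability of class 1 (high income).
predictions = clf.predict_proba(test[columns])[:, 1]
predictions2 = clf2.predict_proba(test[columns])[:, 1]

# Average the two trees' probabilities, then round to a 0/1 label.
# numpy.round rounds exact halves to the nearest even value, so 0.5 becomes 0.
combined = (predictions + predictions2) / 2
rounded = numpy.round(combined)

print(roc_auc_score(test["high_income"], rounded))

# Reported score (computed as roc_auc_score(rounded, labels)): 0.789959895266
```

Why Ensembling Works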

- Compare the three scores: first tree 0.784853009097, second tree 0.771031199892, ensemble 0.789959895266. The ensemble scores higher than either tree on its own, which is the strength of ensemble models.

The more "diverse", or dissimilar, the models used to construct an ensemble, the stronger the combined predictions will be (assuming that all models have about the same accuracy).
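One way to see why diversity matters is an idealized calculation. Assume n classifiers that are each correct with probability p and make their mistakes completely independently; the majority is then correct with the binomial probability computed below. If instead the models made identical mistakes, the majority would be no better than p. Real models are never fully independent, so this is only an illustration; the function name majority_accuracy is hypothetical.

```python
from math import comb


def majority_accuracy(p, n_models):
    """Probability that a strict majority of n_models independent classifiers,
    each correct with probability p, is correct (n_models should be odd)."""
    need = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(need, n_models + 1)
    )


print(round(majority_accuracy(0.7, 1), 3))  # 0.7
print(round(majority_accuracy(0.7, 3), 3))  # 0.784
print(round(majority_accuracy(0.7, 5), 3))  # 0.837
```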

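- Combining a logistic regression model and a decision tree of similar accuracy tends to work better than combining two decision trees, because the two models work in very different ways and each has its own strengths; combining those strengths produces a better model.

- Combining two models whose accuracies differ a lot usually does not improve overall accuracy: combining a 0.75 model with a 0.86 model, for example, gives an accuracy somewhere between the two. One way to handle such a gap is weighting, that is, giving the models different importance.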
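A sketch of weighting in practice: a weighted average of the models' class-1 probabilities. The probabilities and weights below are hypothetical; in practice one might, for example, make the weights proportional to each model's validation score.

```python
def weighted_average(probabilities, weights):
    """Weighted mean of several models' class-1 probabilities, sample by sample.

    probabilities[i][j] is model i's probability of class 1 for sample j.
    """
    total = sum(weights)
    return [
        sum(w * p for w, p in zip(weights, sample_probs)) / total
        for sample_probs in zip(*probabilities)
    ]


stronger = [0.9, 0.2, 0.6]  # hypothetical outputs of the more accurate model
weaker = [0.4, 0.6, 0.2]    # hypothetical outputs of the less accurate model

# Give the stronger model twice the weight of the weaker one.
combined = weighted_average([stronger, weaker], weights=[2, 1])
labels = [1 if p >= 0.5 else 0 for p in combined]
print([round(p, 3) for p in combined])  # [0.733, 0.333, 0.467]
print(labels)                            # [1, 0, 0]
```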

Bagging

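A random forest is an ensemble of decision trees. If the trees were built without any modification, they would all be identical, and combining them would bring no improvement. The "random" in random forest refers to the randomness injected into each tree. A random forest makes two modifications: bagging and random feature subsets. In the classic formulation, a random forest is a classifier made of many decision trees whose output class is the mode of the classes output by the individual trees (scikit-learn's RandomForestClassifier instead averages the trees' predicted probabilities).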
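Taking the mode of the trees' labels (hard voting) and averaging their predicted probabilities (soft voting) usually agree, but not always. A small sketch with three hypothetical trees shows a case where they differ; the probabilities and function names are illustrative only.

```python
from collections import Counter


def hard_vote(tree_probs, threshold=0.5):
    """Each tree casts a 0/1 vote; return the most common vote."""
    votes = [1 if p >= threshold else 0 for p in tree_probs]
    return Counter(votes).most_common(1)[0][0]


def soft_vote(tree_probs, threshold=0.5):
    """Average the trees' class-1 probabilities, then threshold the mean."""
    mean = sum(tree_probs) / len(tree_probs)
    return 1 if mean >= threshold else 0


# Class-1 probabilities from three hypothetical trees for one sample.
tree_probs = [0.6, 0.6, 0.1]
print(hard_vote(tree_probs))  # 1: two of the three trees vote for class 1
print(soft_vote(tree_probs))  # 0: the mean probability is about 0.43
```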

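- bagging: from the N training samples, draw N samples with replacement to form a new training set (bootstrap sampling). In the code below, each bag instead draws 60% as many rows as the training set (still with replacement), and the test set remains the original test set.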
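Bootstrap sampling itself takes only a few lines. This sketch uses Python's random.choices, which draws with replacement; proportion plays the role of bag_proportion in the code below (1.0 gives the classic N draws from N rows). The helper name bootstrap_sample is hypothetical.

```python
import random


def bootstrap_sample(rows, proportion=1.0, seed=None):
    """Draw round(len(rows) * proportion) rows with replacement."""
    rng = random.Random(seed)
    k = round(len(rows) * proportion)
    # choices() samples with replacement, so a row can appear more than once.
    return rng.choices(rows, k=k)


rows = list(range(10))
bag = bootstrap_sample(rows, proportion=0.6, seed=0)
print(len(bag))     # 6
print(sorted(bag))  # some rows may repeat while others are missing
```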

```python
import numpy

# We'll build 10 trees.
tree_count = 10
# Each "bag" will have 60% of the number of original rows.
bag_proportion = .6
predictions = []

for i in range(tree_count):
    # We select 60% of the rows from train, sampling with replacement.
    # We set a random state to ensure we'll be able to replicate our results.
    # We set it to i instead of a fixed value so we don't get the same sample every loop.
    # That would make all of our trees the same.
    bag = train.sample(frac=bag_proportion, replace=True, random_state=i)
    # Fit a decision tree model to the "bag".
    clf = DecisionTreeClassifier(random_state=1, min_samples_leaf=75)
    clf.fit(bag[columns], bag["high_income"])
    # Using the model, make predictions on the test data.
    predictions.append(clf.predict_proba(test[columns])[:, 1])

# Average the trees' probabilities, then round to a 0/1 label.
combined = numpy.sum(predictions, axis=0) / tree_count
rounded = numpy.round(combined)

print(roc_auc_score(test["high_income"], rounded))

# Reported score (computed as roc_auc_score(rounded, labels)): 0.785415640465
```

Selecting Random Features

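- random feature subsets: at each node, randomly choose m features; the split at that node is decided using only these features, by finding the best split among them.

- This means the function that selects the best attribute to split on has to change: the candidate attributes are a randomly chosen subset rather than all of the attributes.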
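A sketch of drawing the candidate features for each node. Unlike numpy.random.choice(columns, 2), which samples with replacement by default and can therefore return the same column twice, random.sample always returns distinct items. The helper name candidate_features and the choice m = 3 are illustrative.

```python
import random


def candidate_features(features, m, rng):
    """Pick m distinct candidate features for one node."""
    # random.sample draws without replacement, so no feature is picked twice.
    return rng.sample(features, m)


columns = ["age", "workclass", "education_num", "marital_status", "occupation",
           "relationship", "race", "sex", "hours_per_week", "native_country"]
rng = random.Random(1)
# Each node draws its own subset, so different nodes consider different features.
for node in range(3):
    print(node, candidate_features(columns, 3, rng))
```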

```python
import numpy
import pandas

# calc_information_gain(data, split_name, target_name) comes from the earlier
# post on ID3 and must be defined before running this code.

# Create the dataset that we used 2 missions ago.
data = pandas.DataFrame([
    [0, 4, 20, 0],
    [0, 4, 60, 2],
    [0, 5, 40, 1],
    [1, 4, 25, 1],
    [1, 5, 35, 2],
    [1, 5, 55, 1],
])
data.columns = ["high_income", "employment", "age", "marital_status"]

# Set a random seed to make results reproducible.
numpy.random.seed(1)

# The dictionary to store our tree.
tree = {}
nodes = []

def find_best_column(data, target_name, columns):
    information_gains = []
    # Select two columns randomly. numpy.random.choice samples with replacement
    # by default, so the same column can be drawn twice.
    cols = numpy.random.choice(columns, 2)
    for col in cols:
        information_gain = calc_information_gain(data, col, target_name)
        information_gains.append(information_gain)
    highest_gain_index = information_gains.index(max(information_gains))
    # Get the highest gain by indexing cols.
    highest_gain = cols[highest_gain_index]
    return highest_gain

# The function to construct an id3 decision tree.
def id3(data, target, columns, tree):
    unique_targets = pandas.unique(data[target])
    nodes.append(len(nodes) + 1)
    tree["number"] = nodes[-1]
    # A pure node becomes a leaf labeled with its only class.
    if len(unique_targets) == 1:
        if 0 in unique_targets:
            tree["label"] = 0
        elif 1 in unique_targets:
            tree["label"] = 1
        return
    best_column = find_best_column(data, target, columns)
    column_median = data[best_column].median()
    tree["column"] = best_column
    tree["median"] = column_median
    # Split on the median of the chosen column and recurse on both halves.
    left_split = data[data[best_column] <= column_median]
    right_split = data[data[best_column] > column_median]
    split_dict = [["left", left_split], ["right", right_split]]
    for name, split in split_dict:
        tree[name] = {}
        id3(split, target, columns, tree[name])

id3(data, "high_income", ["employment", "age", "marital_status"], tree)
print(tree)

'''
{'median': 37.5, 'number': 1,
 'left': {'median': 4.0, 'number': 2,
          'left': {'median': 22.5, 'number': 3,
                   'left': {'label': 0, 'number': 4},
                   'right': {'label': 1, 'number': 5},
                   'column': 'age'},
          'right': {'label': 1, 'number': 6},
          'column': 'employment'},
 'right': {'median': 55.0, 'number': 7,
           'left': {'median': 47.5, 'number': 8,
                    'left': {'label': 0, 'number': 9},
                    'right': {'label': 1, 'number': 10},
                    'column': 'age'},
           'right': {'label': 0, 'number': 11},
           'column': 'age'},
 'column': 'age'}
'''
```

- The key line is cols = numpy.random.choice(columns, 2): each time a node is grown, two attributes are chosen at random as the candidate split attributes.

Random Subsets In Scikit-Learn

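- Writing the random feature selection by hand, as above, is cumbersome. In scikit-learn, DecisionTreeClassifier already supports it through the max_features parameter: with N features, max_features="sqrt" makes each split consider a random subset of √N of them (older scikit-learn versions also accepted "auto", which meant the same for classifiers but has since been deprecated). The code below also sets splitter="random", which picks the best of randomly drawn split thresholds instead of searching for the best threshold, adding further randomness.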
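To make the √N rule concrete, here is a sketch of how a max_features setting can be turned into a feature count. It assumes the count is truncated to an integer with a minimum of 1, which is how we understand scikit-learn to behave; resolve_max_features is a hypothetical helper, not part of scikit-learn's API, so check the documentation of the installed version for exact semantics.

```python
import math


def resolve_max_features(max_features, n_features):
    """Hypothetical helper: number of features considered at each split."""
    if max_features is None:
        return n_features                              # all features
    if max_features == "sqrt":
        return max(1, int(math.sqrt(n_features)))
    if max_features == "log2":
        return max(1, int(math.log2(n_features)))
    if isinstance(max_features, float):
        return max(1, int(max_features * n_features))  # fraction of features
    return int(max_features)                           # explicit count


# The income data in this post uses 10 columns.
for setting in ["sqrt", "log2", 0.5, None]:
    print(setting, resolve_max_features(setting, 10))
# sqrt 3
# log2 3
# 0.5 5
# None 10
```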

```python
import numpy

# We'll build 10 trees.
tree_count = 10
# Each "bag" will have 70% of the number of original rows.
bag_proportion = .7
predictions = []

for i in range(tree_count):
    # We select 70% of the rows from train, sampling with replacement.
    # We set a random state to ensure we'll be able to replicate our results.
    # We set it to i instead of a fixed value so we don't get the same sample every time.
    bag = train.sample(frac=bag_proportion, replace=True, random_state=i)
    # Fit a decision tree model to the "bag".
    # max_features="sqrt" considers a random subset of sqrt(N) features at each split
    # (spelled "auto" in older scikit-learn versions, with the same meaning for classifiers);
    # splitter="random" picks the best of randomly drawn thresholds.
    clf = DecisionTreeClassifier(random_state=1, min_samples_leaf=75, splitter="random", max_features="sqrt")
    clf.fit(bag[columns], bag["high_income"])
    # Using the model, make predictions on the test data.
    predictions.append(clf.predict_proba(test[columns])[:, 1])

# Average the trees' probabilities, then round to a 0/1 label.
combined = numpy.sum(predictions, axis=0) / tree_count
rounded = numpy.round(combined)

print(roc_auc_score(test["high_income"], rounded))

# Reported score (computed as roc_auc_score(rounded, labels)): 0.789767997764
```

Putting It All Together

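- The results above show that adding random feature subsets on top of bagging (0.789767997764) scores higher than bagging alone (0.785415640465). Note that the second run also used larger bags (70% rather than 60% of the rows) and random split thresholds, so the gain cannot be attributed to the feature subsets alone. scikit-learn implements the full random forest algorithm as RandomForestClassifier and RandomForestRegressor.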

```python
from sklearn.ensemble import RandomForestClassifier

# A forest of 10 trees, with the same leaf-size limit as the single trees above.
clf = RandomForestClassifier(n_estimators=10, random_state=1, min_samples_leaf=75)
clf.fit(train[columns], train["high_income"])

predictions = clf.predict(test[columns])
print(roc_auc_score(test["high_income"], predictions))

# Reported score (computed as roc_auc_score(predictions, labels)): 0.791634978035
```

Parameter Tweaking

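Like a decision tree, a random forest can be tuned to reach higher accuracy. Its main parameters are listed below; the first four are the same as the decision tree parameters, and the last two are specific to random forests: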

- min_samples_leaf

- min_samples_split

- max_depth

- max_leaf_nodes

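- n_estimators: the number of decision trees in the forest.

- bootstrap: defaults to True. Bootstrap aggregation is another name for bagging; this parameter controls whether each tree is trained on a sample drawn with replacement.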

```python
from sklearn.ensemble import RandomForestClassifier

# Same settings as before, but with 150 trees instead of 10.
clf = RandomForestClassifier(n_estimators=150, random_state=1, min_samples_leaf=75)
clf.fit(train[columns], train["high_income"])

predictions = clf.predict(test[columns])
print(roc_auc_score(test["high_income"], predictions))

# Reported score (computed as roc_auc_score(predictions, labels)): 0.793788646293
```

Reducing Overfitting

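- Random forests overfit less than single decision trees: an individual tree may overfit, but when the predictions of many trees (150 here) are averaged, that noise largely cancels out.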
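An idealized sketch of why averaging helps: if each model's error were independent noise with variance σ², the average of n models would have variance σ²/n. Real trees in a forest are correlated, so the reduction is smaller in practice; bagging and random feature subsets are there to make the trees less correlated. The simulation and the function name variance_of_average are illustrative.

```python
import random
import statistics


def variance_of_average(n_models, n_trials=2000, noise=1.0, seed=0):
    """Variance of the mean of n_models independent noisy predictions."""
    rng = random.Random(seed)
    averages = []
    for _ in range(n_trials):
        # Each "model" predicts the true value 0 plus independent Gaussian noise.
        predictions = [rng.gauss(0.0, noise) for _ in range(n_models)]
        averages.append(sum(predictions) / n_models)
    return statistics.variance(averages)


print(variance_of_average(1))    # close to 1.0
print(variance_of_average(150))  # close to 1 / 150, about 0.0067
```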

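```python
clf = RandomForestClassifier(n_estimators=150, random_state=1, min_samples_leaf=75)
clf.fit(train[columns], train["high_income"])

# Score on the training data...
predictions = clf.predict(train[columns])
print(roc_auc_score(train["high_income"], predictions))

# ...and on the held-out test data.
predictions = clf.predict(test[columns])
print(roc_auc_score(test["high_income"], predictions))

# Reported scores (computed as roc_auc_score(predictions, labels)):
# 0.794137608735 0.793788646293
```

- The training and test scores are nearly identical, which indicates very little overfitting.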

When To Use Random Forests

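Advantages of random forests:

- It produces highly accurate classifiers on many kinds of datasets.

- It can handle a large number of input variables.

- It can estimate the importance of each variable for the classification.

- While the forest is being built, it produces an internal unbiased estimate of the generalization error.

- It has an effective method for estimating missing data, and it maintains accuracy even when a large proportion of the data is missing.

- It provides an experimental way to detect variable interactions.

- On imbalanced classification datasets, it can balance the error between classes.

- It computes proximities between pairs of cases, which is useful for data mining, detecting outliers, and visualizing the data.

- Building on the above, it can be extended to unlabeled data, which is usually handled with unsupervised clustering, and can again be used to detect outliers and explore the data.

- The learning process is fast.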
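The internal estimate of the generalization error comes from out-of-bag rows: each tree's bootstrap sample leaves some rows out, and those rows can be used to test that tree (in scikit-learn, RandomForestClassifier(oob_score=True) exposes the result as oob_score_). When N rows are drawn N times with replacement, a given row is missed with probability (1 - 1/N)^N, which approaches 1/e ≈ 0.368. A quick simulation, with an illustrative function name:

```python
import math
import random


def out_of_bag_fraction(n_rows, seed=0):
    """Fraction of rows never drawn in one bootstrap sample of size n_rows."""
    rng = random.Random(seed)
    drawn = {rng.randrange(n_rows) for _ in range(n_rows)}
    return 1 - len(drawn) / n_rows


print(out_of_bag_fraction(100_000))  # close to 0.368
print((1 - 1 / 100_000) ** 100_000)  # theoretical value, also close to 0.368
print(math.exp(-1))                  # limit as N grows: 0.36787944...
```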

Random forests, neural networks, and gradient boosted trees are often cited as three of the best-performing algorithms.

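Disadvantages of random forests:

- They are hard to interpret, because the result is an average over many trees.

- Training can take a long time, and the more trees, the longer it takes. Multi-core parallelism helps: scikit-learn's n_jobs parameter sets how many CPU cores to use (n_jobs=-1 uses all of them).

Weighing these pros and cons: random forests are a good choice when accuracy is paramount and the decisions do not need to be explained. When efficiency (time complexity) matters and the model must be interpretable, a single decision tree is usually more suitable.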