Lesson 50: Hadoop MapReduce Inverted Index, Analysis and Hands-On Practice

1. Data files

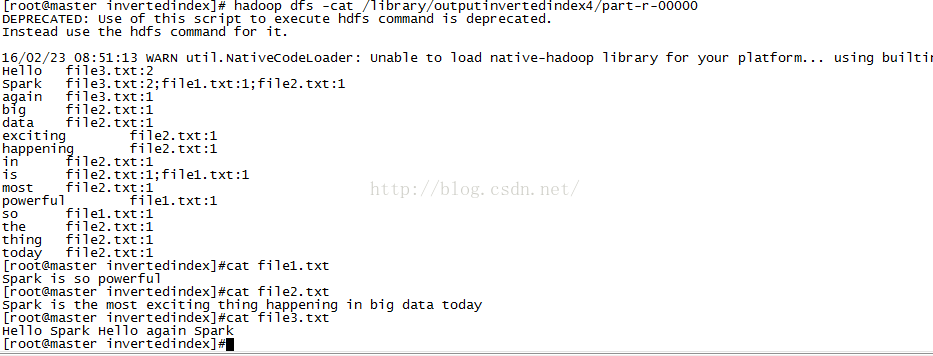

[root@master invertedindex]#cat file1.txt

Spark is so powerful

[root@master invertedindex]#cat file2.txt

Spark is the most exciting thing happening in big data today

[root@master invertedindex]#cat file3.txt

Hello Spark Hello again Spark

[root@master invertedindex]#

2. Run results

[root@master invertedindex]# hadoop dfs -cat /library/outputinvertedindex4/part-r-00000

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

16/02/23 08:51:13 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Hello file3.txt:2

Spark file3.txt:2;file1.txt:1;file2.txt:1

again file3.txt:1

big file2.txt:1

data file2.txt:1

exciting file2.txt:1

happening file2.txt:1

in file2.txt:1

is file2.txt:1;file1.txt:1

most file2.txt:1

powerful file1.txt:1

so file1.txt:1

the file2.txt:1

thing file2.txt:1

today file2.txt:1
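To make the expected result concrete, here is a minimal plain-Java sketch (no Hadoop involved) that builds the same word-to-`file:count` postings from the three sample lines. The class name `InvertedIndexSketch` and the helpers `build`, `format` and `sampleFiles` are hypothetical names used only for illustration. The order of postings within a line follows the order in which the files are added here, so it can differ from the job's output, where the order depends on how the map outputs were merged.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

public class InvertedIndexSketch {

    // The three sample files from section 1 (file name -> content).
    static Map<String, String> sampleFiles() {
        Map<String, String> files = new LinkedHashMap<>();
        files.put("file1.txt", "Spark is so powerful");
        files.put("file2.txt", "Spark is the most exciting thing happening in big data today");
        files.put("file3.txt", "Hello Spark Hello again Spark");
        return files;
    }

    // Builds word -> (fileName -> occurrence count).
    // A TreeMap keeps the words sorted, uppercase before lowercase for ASCII.
    static Map<String, Map<String, Integer>> build(Map<String, String> files) {
        Map<String, Map<String, Integer>> index = new TreeMap<>();
        for (Map.Entry<String, String> file : files.entrySet()) {
            StringTokenizer tokens = new StringTokenizer(file.getValue());
            while (tokens.hasMoreTokens()) {
                index.computeIfAbsent(tokens.nextToken(), w -> new LinkedHashMap<>())
                     .merge(file.getKey(), 1, Integer::sum);
            }
        }
        return index;
    }

    // Formats one posting list as "file:count;file:count".
    static String format(Map<String, Integer> postings) {
        StringBuilder sb = new StringBuilder();
        for (Map.Entry<String, Integer> p : postings.entrySet()) {
            if (sb.length() > 0) {
                sb.append(';');
            }
            sb.append(p.getKey()).append(':').append(p.getValue());
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Prints one line per word, in the same layout as the job output.
        build(sampleFiles()).forEach(
            (word, postings) -> System.out.println(word + " " + format(postings)));
    }
}
```

Running `main` prints 15 lines, one per distinct word, which matches the 15 lines of `part-r-00000` shown above apart from the ordering of postings inside a line.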

4. Source code

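import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class InvertedIndex {

    // Mapper: emits ("word:fileName", "1") for every token of the input line.
    public static class DataMapper extends Mapper<LongWritable, Text, Text, Text> {

        private final Text number = new Text("1");
        private String fileName;

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            System.out.println("Map Methond Invoked!!!");
            String line = value.toString();
            if (line.trim().length() > 0) {
                StringTokenizer stringTokenizer = new StringTokenizer(line);
                while (stringTokenizer.hasMoreTokens()) {
                    String keyForCombiner = stringTokenizer.nextToken() + ":" + fileName;
                    System.out.println(keyForCombiner);
                    context.write(new Text(keyForCombiner), number);
                }
            }
        }

        // Runs once per input split: remember which file the split belongs to.
        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            FileSplit inputSplit = (FileSplit) context.getInputSplit();
            fileName = inputSplit.getPath().getName();
        }
    }

    // Combiner: sums the "1"s for each "word:fileName" key and re-keys the record
    // as ("word", "fileName:count").
    // Caution: this changes the key, so the job relies on the combiner running
    // exactly once per map output record. Hadoop does not guarantee that in general.
    public static class DataCombiner extends Reducer<Text, Text, Text, Text> {

        @Override
        public void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            System.out.println("Combiner Methond Invoked!!!");
            int sum = 0;
            for (Text item : values) {
                sum += Integer.parseInt(item.toString());
            }
            // Split at the last ':' so that a token containing ':' is still handled.
            String composite = key.toString();
            int sep = composite.lastIndexOf(':');
            String word = composite.substring(0, sep);
            String file = composite.substring(sep + 1);
            System.out.println("keyArray[0] " + word + "keyArray[1] sum " + file + ":" + sum);
            context.write(new Text(word), new Text(file + ":" + sum));
        }
    }

    // Reducer: joins all "fileName:count" postings of a word with ';'.
    public static class DataReducer extends Reducer<Text, Text, Text, Text> {

        @Override
        public void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            System.out.println("Reduce Methond Invoked!!!");
            StringBuilder result = new StringBuilder();
            for (Text item : values) {
                result.append(item).append(';');
            }
            if (result.length() > 0) {
                result.setLength(result.length() - 1); // drop the trailing ';'
            }
            context.write(key, new Text(result.toString()));
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length < 2) {
            System.err.println("Usage: InvertedIndex <in> [<in>...] <out>");
            System.exit(2);
        }

        Job job = Job.getInstance(conf, "InvertedIndex");
        job.setJarByClass(InvertedIndex.class);
        job.setMapperClass(DataMapper.class);
        job.setCombinerClass(DataCombiner.class);
        job.setReducerClass(DataReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        // All arguments except the last are input paths; the last is the output path.
        for (int i = 0; i < otherArgs.length - 1; ++i) {
            FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
        }
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[otherArgs.length - 1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}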
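One detail of this design deserves a note. Hadoop assigns a map output record to a partition based on the key the mapper wrote (here `word:fileName`), and that happens before the combiner rewrites the key to `word`. With a single reduce task, as in this lesson's run, that does not matter. With several reduce tasks, records for the same word coming from different files could land on different reducers. The following plain-Java sketch illustrates the idea of partitioning on the word part only. It is an illustration under assumptions: `WordPartitionSketch`, `partition` and `partitionByWord` are hypothetical names, and `String.hashCode()` stands in for the hash of Hadoop's `Text`, so the concrete partition numbers are not those a real job would produce.

```java
public class WordPartitionSketch {

    // Same formula as a hash partitioner: non-negative hash modulo partition count.
    static int partition(String key, int numPartitions) {
        return (key.hashCode() & Integer.MAX_VALUE) % numPartitions;
    }

    // Partitions a "word:fileName" key using only the word part, so every
    // record of the same word maps to the same partition.
    static int partitionByWord(String compositeKey, int numPartitions) {
        int sep = compositeKey.lastIndexOf(':');
        String word = sep < 0 ? compositeKey : compositeKey.substring(0, sep);
        return partition(word, numPartitions);
    }

    public static void main(String[] args) {
        int numPartitions = 4; // hypothetical number of reduce tasks
        String[] keys = {"Spark:file1.txt", "Spark:file2.txt", "Spark:file3.txt"};
        for (String key : keys) {
            System.out.println(key
                + " whole-key partition=" + partition(key, numPartitions)
                + " word-only partition=" + partitionByWord(key, numPartitions));
        }
    }
}
```

In a real job the same logic would go into a subclass of `org.apache.hadoop.mapreduce.Partitioner<Text, Text>` registered with `job.setPartitionerClass(...)`.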

5. Log output

INFO [main] (org.apache.hadoop.conf.Configuration.deprecation:1049) 2016-02-23 21:55:12,806 ---- session.id is deprecated. Instead, use dfs.metrics.session-id

INFO [main] (org.apache.hadoop.metrics.jvm.JvmMetrics:76) 2016-02-23 21:55:12,816 ---- Initializing JVM Metrics with processName=JobTracker, sessionId=

WARN [main] (org.apache.hadoop.mapreduce.JobSubmitter:261) 2016-02-23 21:55:13,419 ---- No job jar file set. User classes may not be found. See Job or Job#setJar(String).

INFO [main] (org.apache.hadoop.mapreduce.lib.input.FileInputFormat:281) 2016-02-23 21:55:13,514 ---- Total input paths to process : 3

INFO [main] (org.apache.hadoop.mapreduce.JobSubmitter:494) 2016-02-23 21:55:13,572 ---- number of splits:3

INFO [main] (org.apache.hadoop.mapreduce.JobSubmitter:583) 2016-02-23 21:55:13,689 ---- Submitting tokens for job: job_local1201379028_0001

INFO [main] (org.apache.hadoop.mapreduce.Job:1300) 2016-02-23 21:55:13,961 ---- The url to track the job: http://localhost:8080/

INFO [main] (org.apache.hadoop.mapreduce.Job:1345) 2016-02-23 21:55:13,962 ---- Running job: job_local1201379028_0001

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:471) 2016-02-23 21:55:13,973 ---- OutputCommitter set in config null

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:489) 2016-02-23 21:55:13,985 ---- OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:448) 2016-02-23 21:55:14,069 ---- Waiting for map tasks

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:224) 2016-02-23 21:55:14,070 ---- Starting task: attempt_local1201379028_0001_m_000000_0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.yarn.util.ProcfsBasedProcessTree:181) 2016-02-23 21:55:14,112 ---- ProcfsBasedProcessTree currently is supported only on Linux.

INFO [main] (org.apache.hadoop.mapreduce.Job:1366) 2016-02-23 21:55:14,964 ---- Job job_local1201379028_0001 running in uber mode : false

INFO [main] (org.apache.hadoop.mapreduce.Job:1373) 2016-02-23 21:55:14,966 ---- map 0% reduce 0%

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:587) 2016-02-23 21:55:16,341 ---- Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@831cd9

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:753) 2016-02-23 21:55:16,347 ---- Processing split: hdfs://192.168.2.100:9000/library/invertedindex/file2.txt:0+61

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1202) 2016-02-23 21:55:16,441 ---- (EQUATOR) 0 kvi 26214396(104857584)

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:995) 2016-02-23 21:55:16,441 ---- mapreduce.task.io.sort.mb: 100

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:996) 2016-02-23 21:55:16,441 ---- soft limit at 83886080

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:997) 2016-02-23 21:55:16,441 ---- bufstart = 0; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:998) 2016-02-23 21:55:16,441 ---- kvstart = 26214396; length = 6553600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:402) 2016-02-23 21:55:16,446 ---- Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

Map Methond Invoked!!!

Spark:file2.txt

is:file2.txt

the:file2.txt

most:file2.txt

exciting:file2.txt

thing:file2.txt

happening:file2.txt

in:file2.txt

big:file2.txt

data:file2.txt

today:file2.txt

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:17,586 ----

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1457) 2016-02-23 21:55:17,589 ---- Starting flush of map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1475) 2016-02-23 21:55:17,589 ---- Spilling map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1476) 2016-02-23 21:55:17,589 ---- bufstart = 0; bufend = 193; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1478) 2016-02-23 21:55:17,589 ---- kvstart = 26214396(104857584); kvend = 26214356(104857424); length = 41/6553600

Combiner Methond Invoked!!!

keyArray[0] SparkkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] bigkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] datakeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] excitingkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] happeningkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] inkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] iskeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] mostkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] thekeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] thingkeyArray[1] sum file2.txt:1

Combiner Methond Invoked!!!

keyArray[0] todaykeyArray[1] sum file2.txt:1

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1660) 2016-02-23 21:55:17,657 ---- Finished spill 0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1001) 2016-02-23 21:55:17,667 ---- Task:attempt_local1201379028_0001_m_000000_0 is done. And is in the process of committing

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:17,679 ---- map

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1121) 2016-02-23 21:55:17,679 ---- Task 'attempt_local1201379028_0001_m_000000_0' done.

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:249) 2016-02-23 21:55:17,679 ---- Finishing task: attempt_local1201379028_0001_m_000000_0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:224) 2016-02-23 21:55:17,680 ---- Starting task: attempt_local1201379028_0001_m_000001_0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.yarn.util.ProcfsBasedProcessTree:181) 2016-02-23 21:55:17,681 ---- ProcfsBasedProcessTree currently is supported only on Linux.

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:587) 2016-02-23 21:55:17,806 ---- Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@96c5ca

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:753) 2016-02-23 21:55:17,810 ---- Processing split: hdfs://192.168.2.100:9000/library/invertedindex/file3.txt:0+30

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1202) 2016-02-23 21:55:17,845 ---- (EQUATOR) 0 kvi 26214396(104857584)

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:995) 2016-02-23 21:55:17,846 ---- mapreduce.task.io.sort.mb: 100

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:996) 2016-02-23 21:55:17,846 ---- soft limit at 83886080

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:997) 2016-02-23 21:55:17,846 ---- bufstart = 0; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:998) 2016-02-23 21:55:17,846 ---- kvstart = 26214396; length = 6553600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:402) 2016-02-23 21:55:17,848 ---- Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

Map Methond Invoked!!!

Hello:file3.txt

Spark:file3.txt

Hello:file3.txt

again:file3.txt

Spark:file3.txt

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:17,891 ----

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1457) 2016-02-23 21:55:17,891 ---- Starting flush of map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1475) 2016-02-23 21:55:17,891 ---- Spilling map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1476) 2016-02-23 21:55:17,891 ---- bufstart = 0; bufend = 90; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1478) 2016-02-23 21:55:17,891 ---- kvstart = 26214396(104857584); kvend = 26214380(104857520); length = 17/6553600

Combiner Methond Invoked!!!

keyArray[0] HellokeyArray[1] sum file3.txt:2

Combiner Methond Invoked!!!

keyArray[0] SparkkeyArray[1] sum file3.txt:2

Combiner Methond Invoked!!!

keyArray[0] againkeyArray[1] sum file3.txt:1

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1660) 2016-02-23 21:55:17,958 ---- Finished spill 0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1001) 2016-02-23 21:55:17,964 ---- Task:attempt_local1201379028_0001_m_000001_0 is done. And is in the process of committing

INFO [main] (org.apache.hadoop.mapreduce.Job:1373) 2016-02-23 21:55:17,968 ---- map 33% reduce 0%

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:17,970 ---- map

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1121) 2016-02-23 21:55:17,970 ---- Task 'attempt_local1201379028_0001_m_000001_0' done.

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:249) 2016-02-23 21:55:17,970 ---- Finishing task: attempt_local1201379028_0001_m_000001_0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:224) 2016-02-23 21:55:17,970 ---- Starting task: attempt_local1201379028_0001_m_000002_0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.yarn.util.ProcfsBasedProcessTree:181) 2016-02-23 21:55:17,972 ---- ProcfsBasedProcessTree currently is supported only on Linux.

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:587) 2016-02-23 21:55:18,104 ---- Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@290d

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:753) 2016-02-23 21:55:18,108 ---- Processing split: hdfs://192.168.2.100:9000/library/invertedindex/file1.txt:0+21

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1202) 2016-02-23 21:55:18,146 ---- (EQUATOR) 0 kvi 26214396(104857584)

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:995) 2016-02-23 21:55:18,146 ---- mapreduce.task.io.sort.mb: 100

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:996) 2016-02-23 21:55:18,147 ---- soft limit at 83886080

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:997) 2016-02-23 21:55:18,147 ---- bufstart = 0; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:998) 2016-02-23 21:55:18,147 ---- kvstart = 26214396; length = 6553600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:402) 2016-02-23 21:55:18,148 ---- Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

Map Methond Invoked!!!

Spark:file1.txt

is:file1.txt

so:file1.txt

powerful:file1.txt

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:18,154 ----

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1457) 2016-02-23 21:55:18,154 ---- Starting flush of map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1475) 2016-02-23 21:55:18,154 ---- Spilling map output

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1476) 2016-02-23 21:55:18,154 ---- bufstart = 0; bufend = 69; bufvoid = 104857600

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1478) 2016-02-23 21:55:18,154 ---- kvstart = 26214396(104857584); kvend = 26214384(104857536); length = 13/6553600

Combiner Methond Invoked!!!

keyArray[0] SparkkeyArray[1] sum file1.txt:1

Combiner Methond Invoked!!!

keyArray[0] iskeyArray[1] sum file1.txt:1

Combiner Methond Invoked!!!

keyArray[0] powerfulkeyArray[1] sum file1.txt:1

Combiner Methond Invoked!!!

keyArray[0] sokeyArray[1] sum file1.txt:1

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.MapTask:1660) 2016-02-23 21:55:18,344 ---- Finished spill 0

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1001) 2016-02-23 21:55:18,350 ---- Task:attempt_local1201379028_0001_m_000002_0 is done. And is in the process of committing

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:18,354 ---- map

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.Task:1121) 2016-02-23 21:55:18,355 ---- Task 'attempt_local1201379028_0001_m_000002_0' done.

INFO [LocalJobRunner Map Task Executor #0] (org.apache.hadoop.mapred.LocalJobRunner:249) 2016-02-23 21:55:18,355 ---- Finishing task: attempt_local1201379028_0001_m_000002_0

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:456) 2016-02-23 21:55:18,355 ---- map task executor complete.

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:448) 2016-02-23 21:55:18,358 ---- Waiting for reduce tasks

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:302) 2016-02-23 21:55:18,358 ---- Starting task: attempt_local1201379028_0001_r_000000_0

INFO [pool-6-thread-1] (org.apache.hadoop.yarn.util.ProcfsBasedProcessTree:181) 2016-02-23 21:55:18,369 ---- ProcfsBasedProcessTree currently is supported only on Linux.

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Task:587) 2016-02-23 21:55:18,496 ---- Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@125331d

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.ReduceTask:362) 2016-02-23 21:55:18,500 ---- Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@2b8e64

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:196) 2016-02-23 21:55:18,562 ---- MergerManager: memoryLimit=363285696, maxSingleShuffleLimit=90821424, mergeThreshold=239768576, ioSortFactor=10, memToMemMergeOutputsThreshold=10

INFO [EventFetcher for fetching Map Completion Events] (org.apache.hadoop.mapreduce.task.reduce.EventFetcher:61) 2016-02-23 21:55:18,571 ---- attempt_local1201379028_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.LocalFetcher:141) 2016-02-23 21:55:18,833 ---- localfetcher#1 about to shuffle output of map attempt_local1201379028_0001_m_000002_0 decomp: 79 len: 83 to MEMORY

INFO [main] (org.apache.hadoop.mapreduce.Job:1373) 2016-02-23 21:55:18,969 ---- map 100% reduce 0%

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput:100) 2016-02-23 21:55:19,059 ---- Read 79 bytes from map-output for attempt_local1201379028_0001_m_000002_0

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:314) 2016-02-23 21:55:19,091 ---- closeInMemoryFile -> map-output of size: 79, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->79

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.LocalFetcher:141) 2016-02-23 21:55:19,128 ---- localfetcher#1 about to shuffle output of map attempt_local1201379028_0001_m_000000_0 decomp: 217 len: 221 to MEMORY

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput:100) 2016-02-23 21:55:19,131 ---- Read 217 bytes from map-output for attempt_local1201379028_0001_m_000000_0

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:314) 2016-02-23 21:55:19,131 ---- closeInMemoryFile -> map-output of size: 217, inMemoryMapOutputs.size() -> 2, commitMemory -> 79, usedMemory ->296

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.LocalFetcher:141) 2016-02-23 21:55:19,137 ---- localfetcher#1 about to shuffle output of map attempt_local1201379028_0001_m_000001_0 decomp: 62 len: 66 to MEMORY

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput:100) 2016-02-23 21:55:19,139 ---- Read 62 bytes from map-output for attempt_local1201379028_0001_m_000001_0

INFO [localfetcher#1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:314) 2016-02-23 21:55:19,139 ---- closeInMemoryFile -> map-output of size: 62, inMemoryMapOutputs.size() -> 3, commitMemory -> 296, usedMemory ->358

INFO [EventFetcher for fetching Map Completion Events] (org.apache.hadoop.mapreduce.task.reduce.EventFetcher:76) 2016-02-23 21:55:19,140 ---- EventFetcher is interrupted.. Returning

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:19,141 ---- 3 / 3 copied.

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:674) 2016-02-23 21:55:19,141 ---- finalMerge called with 3 in-memory map-outputs and 0 on-disk map-outputs

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Merger:597) 2016-02-23 21:55:19,272 ---- Merging 3 sorted segments

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Merger:696) 2016-02-23 21:55:19,273 ---- Down to the last merge-pass, with 3 segments left of total size: 334 bytes

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:751) 2016-02-23 21:55:19,277 ---- Merged 3 segments, 358 bytes to disk to satisfy reduce memory limit

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:781) 2016-02-23 21:55:19,278 ---- Merging 1 files, 358 bytes from disk

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl:796) 2016-02-23 21:55:19,279 ---- Merging 0 segments, 0 bytes from memory into reduce

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Merger:597) 2016-02-23 21:55:19,280 ---- Merging 1 sorted segments

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Merger:696) 2016-02-23 21:55:19,284 ---- Down to the last merge-pass, with 1 segments left of total size: 346 bytes

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:19,286 ---- 3 / 3 copied.

INFO [pool-6-thread-1] (org.apache.hadoop.conf.Configuration.deprecation:1049) 2016-02-23 21:55:19,776 ---- mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

Reduce Methond Invoked!!!

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Task:1001) 2016-02-23 21:55:20,835 ---- Task:attempt_local1201379028_0001_r_000000_0 is done. And is in the process of committing

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:20,839 ---- 3 / 3 copied.

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Task:1162) 2016-02-23 21:55:20,840 ---- Task attempt_local1201379028_0001_r_000000_0 is allowed to commit now

INFO [pool-6-thread-1] (org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter:439) 2016-02-23 21:55:20,851 ---- Saved output of task 'attempt_local1201379028_0001_r_000000_0' to hdfs://192.168.2.100:9000/library/outputinvertedindex5/_temporary/0/task_local1201379028_0001_r_000000

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:591) 2016-02-23 21:55:20,852 ---- reduce > reduce

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.Task:1121) 2016-02-23 21:55:20,852 ---- Task 'attempt_local1201379028_0001_r_000000_0' done.

INFO [pool-6-thread-1] (org.apache.hadoop.mapred.LocalJobRunner:325) 2016-02-23 21:55:20,852 ---- Finishing task: attempt_local1201379028_0001_r_000000_0

INFO [Thread-3] (org.apache.hadoop.mapred.LocalJobRunner:456) 2016-02-23 21:55:20,853 ---- reduce task executor complete.

INFO [main] (org.apache.hadoop.mapreduce.Job:1373) 2016-02-23 21:55:20,969 ---- map 100% reduce 100%

INFO [main] (org.apache.hadoop.mapreduce.Job:1384) 2016-02-23 21:55:21,969 ---- Job job_local1201379028_0001 completed successfully

INFO [main] (org.apache.hadoop.mapreduce.Job:1391) 2016-02-23 21:55:22,037 ---- Counters: 38

File System Counters

FILE: Number of bytes read=4505

FILE: Number of bytes written=1014098

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=376

HDFS: Number of bytes written=301

HDFS: Number of read operations=37

HDFS: Number of large read operations=0

HDFS: Number of write operations=6

Map-Reduce Framework

Map input records=3

Map output records=20

Map output bytes=352

Map output materialized bytes=370

Input split bytes=366

Combine input records=20

Combine output records=18

Reduce input groups=15

Reduce shuffle bytes=370

Reduce input records=18

Reduce output records=15

Spilled Records=36

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=78

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=1050165248

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=112

File Output Format Counters

Bytes Written=301
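Three of the framework counters above can be checked by hand against the sample data: `Map output records=20` is the total number of tokens, `Combine output records=18` is the number of distinct (word, file) pairs, and `Reduce input groups=15` is the number of distinct words. The sketch below recomputes them in plain Java. `CounterCheckSketch` and `counts` are hypothetical names, and the combine figure only equals the number of distinct pairs because each map task in this run spilled once, as the log shows.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.StringTokenizer;

public class CounterCheckSketch {

    // The three sample inputs from section 1: {fileName, content}.
    static final String[][] FILES = {
        {"file1.txt", "Spark is so powerful"},
        {"file2.txt", "Spark is the most exciting thing happening in big data today"},
        {"file3.txt", "Hello Spark Hello again Spark"},
    };

    // Returns {map output records, combine output records, reduce input groups}.
    static int[] counts() {
        int tokens = 0;
        Set<String> wordFilePairs = new HashSet<>();
        Set<String> words = new HashSet<>();
        for (String[] file : FILES) {
            StringTokenizer st = new StringTokenizer(file[1]);
            while (st.hasMoreTokens()) {
                String word = st.nextToken();
                tokens++;                                // one map output per token
                wordFilePairs.add(word + ":" + file[0]); // one combine output per pair
                words.add(word);                         // one reduce group per word
            }
        }
        return new int[] {tokens, wordFilePairs.size(), words.size()};
    }

    public static void main(String[] args) {
        int[] c = counts();
        System.out.println("Map output records=" + c[0]);     // 20
        System.out.println("Combine output records=" + c[1]); // 18
        System.out.println("Reduce input groups=" + c[2]);    // 15
    }
}
```

The difference between 20 and 18 comes from file3.txt, where `Hello` and `Spark` each occur twice and are collapsed by the combiner.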