【GlusterFS学习之二】:GlusterFS的Self-Heal特性

上一篇介绍了GlusterFS的安装部署过程,本章介绍一下GlusterFS的一个重要的特性,Self-heal,也就是自修复性。

1.What is the meaning by Self-heal on replicated volumes?

This feature is available for replicated volumes. Suppose, we have a replicated volume [minimum replica count 2]. Assume that due to some failures one or more brick among the replica bricks go down for a while and user happen to delete a file from the mount point which will get affected only on the online brick.

When the offline brick comes online at a later time, it is necessary to have that file removed from this brick also i.e. a synchronization between the replica bricks called as healing must be done. Same is the case with creation/modification of files on offline bricks. GlusterFS has an inbuilt self-heal daemon to take care of these situations whenever the bricks become online.

2.自修复在GlusterFS上的表现

在上一节的基础上我们已经进行了volume的创建,然后将客户端进行了挂载操作,形成了一个稳定的系统。

在客户端创建文件:

在server端的两个重复块验证:

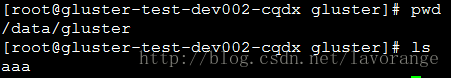

server1:

server2:

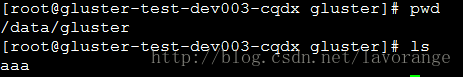

现在将server端的glusterfs守护通过pid的值kill掉,pid从volume的状态信息获取:

注意:观察server端的自修复守护进程的表现

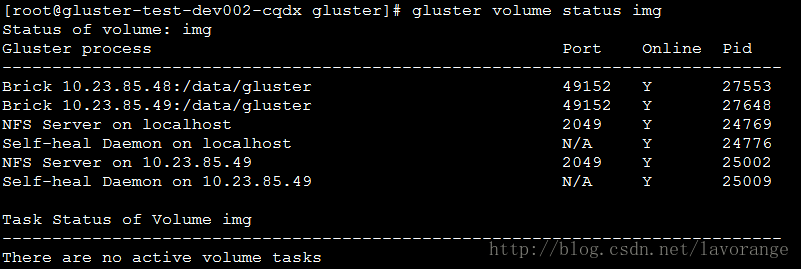

现在kill掉server1端的守护进程:

现在server1的块已经下线了。

现在从挂载点/mnt将文件aaa删除,检查brick块的内容。

client端操作:

![]()

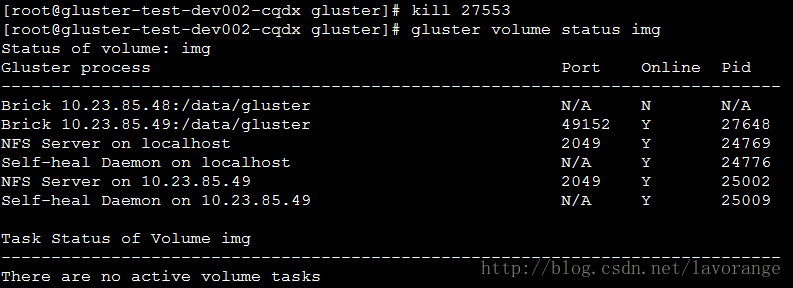

server1(offline):

server2(online):

可以看到aaa文件仍然在server1的brick中。

现在将server1的brick重新设置上线:

现在server1 brick在线了。

然后检查块内的内容:

server1 brick:

server2 brick:

可以看到server1 brick中原有的文件aaa已经被自修复进行删除掉了。

注意:在大文件的情况下,有可能需要一段时间来进行自修复操作。

Author:忆之独秀

Email:[email protected]

注明出处:http://blog.csdn.net/lavorange/article/details/44994993